KL82Z Standalone SDK I2C Master Interrupts not working

I am currently upgrading a project from the FRDM-KL27Z to the new FRDM-KL82Z, which means using the standalone KL82Z SDK as it has not been integrated with KSDK 1.3 yet. I've been walking through my source files and testing the code that uses the SDK drivers such as SPI, I2C, etc. Everything I tested either worked immediately or needed minor modification until I started in on the I2C Master driver (blocking).

I am using the I2C1 driver to communicate with an Atmel ATECC508 CryptoAuthenticator IC. The code I built on top of the I2C driver wasn't working so I decided to start from scratch (with an SDK driver example) to see if I was missing something. I am using the i2c_blocking_master_example_frdmkl82z project as a testing ground and only made modifications necessary to use I2C1 instead of I2C0 which is the default bus.

These modifications included:

- Change hardware_init() to configure I2C1_IDX instead of I2C0_IDX

- PORTC10 is used for SCL

- PORTC11 is used for SDA

- Changed I2C_DRV_MasterInit to use instance number 1

- Commented out the demo code and replaced with a single call to I2C_DRV_MasterSendDataBlocking() using instance number 1

NOTE: My problem is very similar to the question found here: K22 I2C SDK Driver behaving strangely? (As in failing...) but the suggestions there did not help.

The issue I'm experiencing is that the I2C master interrupt handler does not appear to be called when using I2C_DRV_MasterSendDataBlocking(). This is what I know:

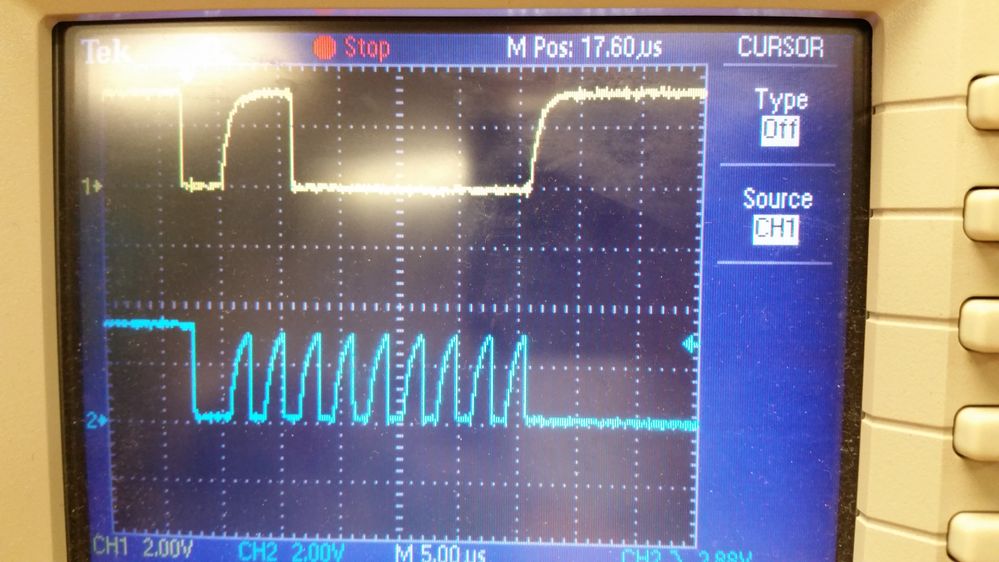

- Master sends a start condition successfully (Oscope)

- Master sends the slave device address successfully (Oscope)

- Slave ACKs the device address successfully (Oscope)

- This is where the transaction stops and the driver returns kStatus_I2C_Timeout every time

(Above is my Oscope readout confirming the device address of 0xC0 is sent and ACK'd by slave)

What I've tried:

- The suggestions here: K22 I2C SDK Driver behaving strangely? (As in failing...)

- Confirming the same code works on the FRDM-KL27Z using SDK 1.3 (Yes it does)

- Walked through SDK calls and confirmed that I2C_HAL_SetIntCmd(baseAddr, true) is called before sending the slave address. (Enabling interrupts)

- I've tried re-installing the KL82Z standalone SDK and rebuilding ksdk_platform_lib_KL82Z7. No change.

- KL27Z uses PE Micro debugger and KL82Z uses JLink debugger (should that matter?)

- I've tried running the I2C driver with the debugger attached and after a power-on reset without the debugger running. Same result.

- I've tried resistances from 1K to 10K for the bus pull-ups. Same result.

- Varying baud rates

Test Conditions:

- I2C1 configured as open drain with 5.6K pull-up resistors (I've tried resistances from 1K to 10K. Same result)

- KDS 3.1.0

- KSDK_1.3.0_Standalone_KL82Z

I don't know what else to try. The device does ACK its own address, but the master driver does not seem to "receive" the ACK and follow up by transmitting the data bytes to the slave device. This points to the interrupt handler not being called, which I've confirmed with breakpoints in the handler. Please help!

Cody,

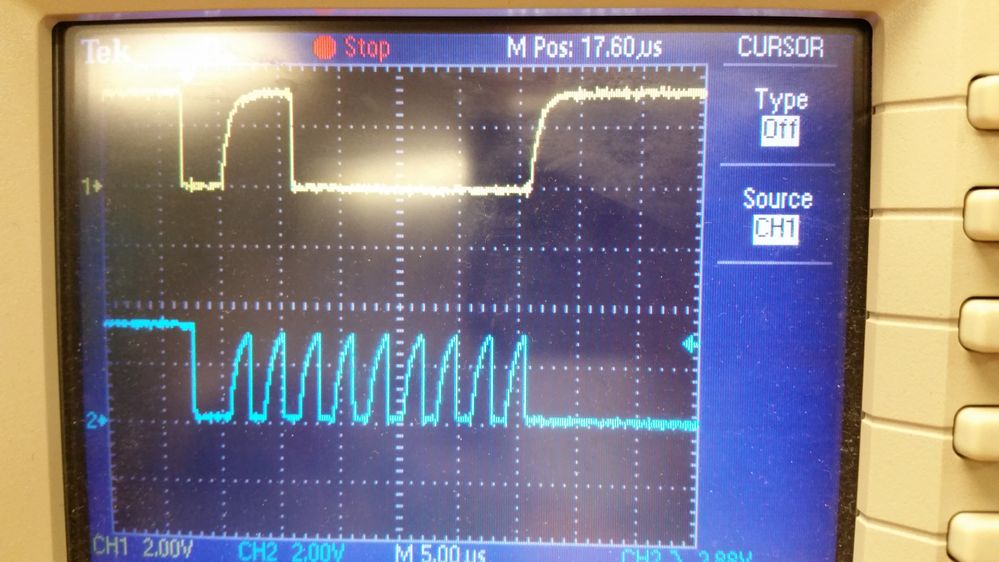

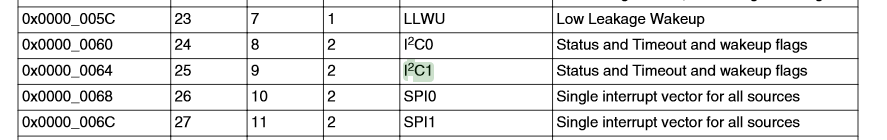

The interrupt mux (INTMUX) is present on Cortex-M0 devices where the number of interrupts in the system is larger than what the Cortex-M0 can handle (32 individual interrupt channels). The I2C1 interrupt is not assigned to one of the 32 core interrupts, but instead is placed on the INTMUX peripheral. You can see this assignment in the KL82 reference manual, section 3.2.5.

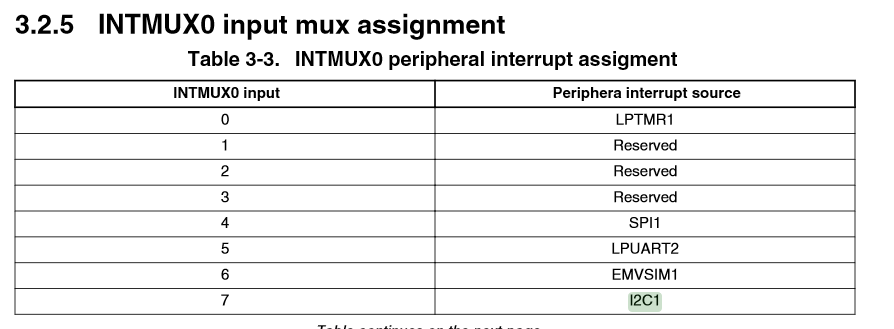

In order to route the interrupt from I2C1, you must route it through one of the Cortex-M NVIC vectors assigned to INTMUX0. You can see the choices below.

The code that Susan has provided above will enable the INTMUX clock, choose the I2C1 interrupt on the INTMUX, and then finally enable the INTMUX interrupt.

The reason you don't need this special step on the KL27 is that its I2C1 interrupt is assigned directly to the NVIC; see table 3-2 in the KL27 reference manual.
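The routing described above can be pictured with a small host-side model. This is only a sketch, not KSDK code: the struct, the function names, and the choice of source bit are hypothetical, and the model clears the pending bit itself, whereas on real hardware the peripheral's own handler clears its flag. It shows why a pending source whose bit is not enabled on the channel never reaches its handler, which is consistent with the driver timing out.

```c
#include <stdint.h>
#include <stddef.h>

#define MODEL_SOURCES 32U  /* sources per channel in this model (assumption) */

typedef void (*source_handler_t)(void);

/* Hypothetical model of one INTMUX channel; not a KSDK type. */
typedef struct {
    uint32_t enable_mask;                      /* sources the channel forwards */
    uint32_t pending_mask;                     /* sources currently asserting  */
    source_handler_t handlers[MODEL_SOURCES];  /* per-source handlers          */
} intmux_channel_model_t;

/* Stand-in for the I2C1 handler: it only counts how often it was called. */
int model_i2c1_calls = 0;
void model_i2c1_handler(void)
{
    model_i2c1_calls++;
}

/* Models the work of the single NVIC vector that serves a channel:
 * call the handler of every source that is both pending and enabled.
 * Returns the number of handlers that ran. */
unsigned intmux_channel_dispatch(intmux_channel_model_t *ch)
{
    unsigned served = 0U;
    uint32_t active = ch->pending_mask & ch->enable_mask;

    for (uint32_t src = 0U; src < MODEL_SOURCES; ++src) {
        uint32_t bit = (uint32_t)1U << src;
        if ((active & bit) != 0U && ch->handlers[src] != NULL) {
            ch->handlers[src]();
            ch->pending_mask &= ~bit;  /* simplification: model clears the flag */
            ++served;
        }
    }
    return served;
}
```

With a source pending but `enable_mask` left at zero, `intmux_channel_dispatch()` runs no handler; once the matching bit is set in `enable_mask`, the same call reaches `model_i2c1_handler()`.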

I hope this helps you get your I2C1 solution working on the KL82!

Jason

Thanks everyone for helping this noob!

Update: I switched back to I2C0 and everything works perfectly, so there seems to be an issue with the interrupt handler when I2C1 is configured. Although I can move forward, it would be nice to know why this is occurring on the I2C1 bus.

Hi Cody Lundie,

Calling I2C_DRV_MasterSendDataBlocking() alone does not work on I2C1 because the I2C1 interrupt sits behind the INTMUX. The INTMUX is very flexible, and the user is free to route an interrupt to any of its channels, so this setup is left to the application.

To use an interrupt that sits behind the INTMUX, the application must enable the INTMUX interrupt and route the interrupt of the peripheral in use (here, I2C1) to one of the INTMUX channels. The application must also include the INTMUX interrupt entry implemented in fsl_interrupt_manager_irq.c. The following code can serve as a reference; I hope it helps:

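    I2C_DRV_MasterInit(BOARD_I2C_COMM_INSTANCE, &master, &masterConfig);

    #if (FSL_FEATURE_SOC_INTMUX_COUNT)
    /* Only needed when the I2C instance's IRQ is routed through the INTMUX. */
    if (g_i2cIrqId[BOARD_I2C_COMM_INSTANCE] >= FSL_FEATURE_INTMUX_IRQ_START_INDEX)
    {
        /* Enable the INTMUX clock. */
        CLOCK_SYS_EnableIntmuxClock(INTMUX0_IDX);

        /* Select the I2C interrupt as a source on INTMUX channel 0. */
        INTMUX_HAL_EnableInterrupt(INTMUX0, kIntmuxChannel0,
            1U << (g_i2cIrqId[BOARD_I2C_COMM_INSTANCE] - FSL_FEATURE_INTERRUPT_IRQ_MAX - 1U));

        /* Enable the NVIC vector that serves INTMUX channel 0. */
        INT_SYS_EnableIRQ(INTMUX0_0_IRQn);
    }
    #endif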
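As a worked example of the mask expression in the snippet above, here is a minimal host-side sketch. It assumes the highest core IRQ number is 31 (matching the 32 core interrupt channels mentioned in this thread); `EXAMPLE_INTERRUPT_IRQ_MAX` and both helper functions are hypothetical names, and the IRQ ids used below are illustrative only, so take the real values from the device headers.

```c
#include <stdint.h>

/* Assumed value for illustration: 32 core NVIC lines, numbered 0..31.
 * The real value comes from FSL_FEATURE_INTERRUPT_IRQ_MAX. */
#define EXAMPLE_INTERRUPT_IRQ_MAX 31U

/* Nonzero when the IRQ id lies beyond the core NVIC lines and therefore
 * has to be routed through the INTMUX. */
int irq_needs_intmux(uint32_t irq_id)
{
    return irq_id > EXAMPLE_INTERRUPT_IRQ_MAX;
}

/* Bit to set in the INTMUX channel enable register for a given IRQ id,
 * using the same arithmetic as the snippet: the first IRQ past the core
 * lines maps to bit 0, the next one to bit 1, and so on.
 * Only valid for ids where irq_needs_intmux() is nonzero. */
uint32_t intmux_source_mask(uint32_t irq_id)
{
    return 1U << (irq_id - EXAMPLE_INTERRUPT_IRQ_MAX - 1U);
}
```

Under these assumptions, an IRQ id of 32 gives mask 0x1 and a hypothetical id of 36 gives 0x10, while an id such as 8 stays on the core NVIC and needs no INTMUX setup.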

Chun Su,

Thank you for your response! I'm sorry, but I'm very new to your products and I'm unclear on how the code snippet from your last comment should be used. Could you explain in more detail what needs to be done in order to use I2C1? I never had to do this for the KL27Z and I'm not sure how to proceed. I appreciate your time on this matter.

Cody