K22 I2C SDK Driver behaving strangely? (As in failing...)


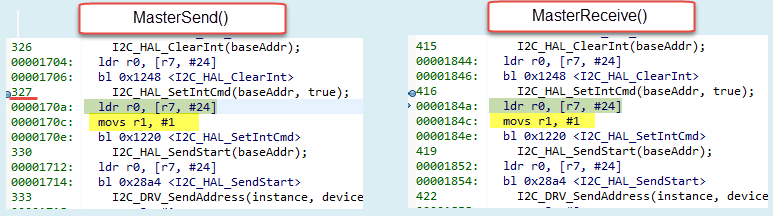

EDIT (The Short Story): IICIE is not set after I2C_HAL_SetIntCmd(baseAddr, true); in I2C_DRV_MasterSendDataBlocking, but it is set, and the transfer runs its proper course, when the same call is made in I2C_DRV_MasterReceiveDataBlocking. So sends time out with status 0x04 while receives succeed with 0x00, even though both use the exact same function to enable the interrupt. That makes no sense unless there is some other bit combination it isn't compatible with... If I manually set IICIE after the I2C_HAL_SetIntCmd(baseAddr, true); call in I2C_DRV_MasterSendDataBlocking, the transmission succeeds, so I know the bit needs to be set somehow. Will update on that.

The Long Story:

I'm currently trying to program the registers of a MAX98090 audio codec. It works perfectly with another device, but I'm running into some static with my FRDM-K22F board using the master driver.

This version of the MAX98090 uses 0x20/0x21 as its write/read addresses, so my address variable is set to the 7-bit value 0x10 (0010000). The hardware seems to be initialized properly and, based on scope timings, is operating "properly."
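The 0x20/0x21 vs. 0x10 distinction is a common source of confusion: datasheets often quote the full 8-bit byte sent after START (address plus R/W bit), while the driver's .address field, as this thread confirms, takes the 7-bit address. A minimal sketch of the conversion; the helper names are hypothetical and not part of the KSDK:

```c
#include <stdint.h>

/* Convert the 8-bit write byte quoted in a datasheet (e.g. 0x20) to the
   7-bit address expected by the driver's .address field. */
static inline uint8_t i2c_addr7_from_write_byte(uint8_t write_byte)
{
    return (uint8_t)(write_byte >> 1);
}

/* Bytes the master actually sends after START for a given 7-bit address:
   the address shifted left, with the R/W bit (0 = write, 1 = read) in bit 0. */
static inline uint8_t i2c_write_byte(uint8_t addr7)
{
    return (uint8_t)(addr7 << 1);
}

static inline uint8_t i2c_read_byte(uint8_t addr7)
{
    return (uint8_t)((addr7 << 1) | 0x01u);
}
```

With the codec's 0x20 write byte, i2c_addr7_from_write_byte() gives 0x10, and the hardware then produces 0x20 and 0x21 on the wire for writes and reads.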

I had the I2C comm demo working and have tried to copy as many of its settings as possible.

Variations I have tried:

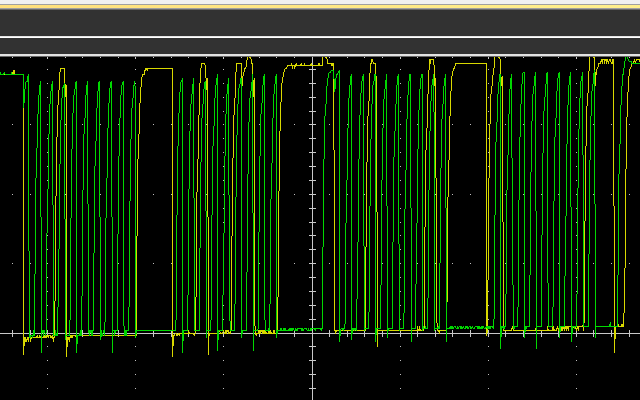

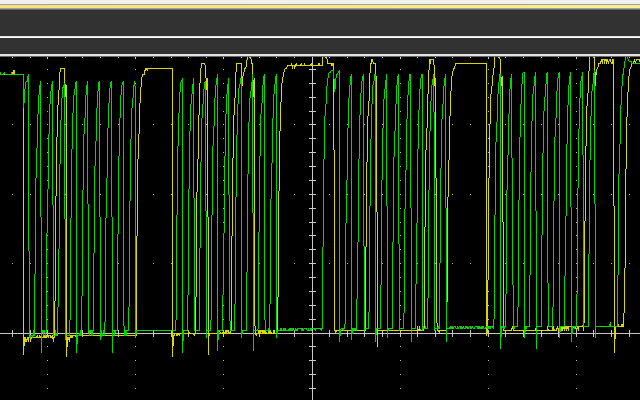

I have tried using I2C1 on different pins and noticed that it squared off my waveforms, likely because it isn't sharing leads/traces with another device the way I2C0 on PB2/PB3 is (it's hooked to the accelerometer/magnetometer).

I've tried no pull-ups, 1k, 2.2k, and 10k, with no major differences. I've also tried 50, 100, and 400 kHz: 50 kHz times out, but 100 and 400 kHz work.

Using MasterSendData produces the correct address and an ACK from the codec (the slave), but nothing beyond that; it returns a timeout every time. If I stop the transfer with a breakpoint and manually set IICIE before the address is sent, it works; otherwise, no dice. (The interrupts work for master receive.)

Master Receive is successful, but works strangely compared to my previous experience with I2C and TWI. To read one byte, it goes through the following sequence:

1) Start, send address (the write address, not the read address?)

2) Send the register I want to read

3) Send the read address

4) Read the actual value (correctly)

Why start out with a write instead of starting directly with the read address, followed by the register, and then receiving the value? Is there a specific logical, performance, or mechanical reason?
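On the "why a write first" question: for a typical register-based slave (check the MAX98090 datasheet for its exact format), the master must first tell the device which register it wants, and once a slave has been addressed with R/W = 1 only the slave drives the data bytes, so the register number can only be delivered in a write phase. The master therefore writes the register pointer and then issues a repeated START with the read address instead of a STOP, so the bus is not released between the two halves. The sketch below models the bus phases for a register write and a register read; the types and functions are hypothetical illustrations, not KSDK APIs.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of the phases of an I2C transaction (not a KSDK API). */
typedef enum {
    PHASE_START,
    PHASE_REPEATED_START,
    PHASE_ADDRESS, /* 7-bit address shifted left, R/W bit in bit 0 */
    PHASE_WRITE,   /* byte driven by the master */
    PHASE_READ,    /* byte driven by the slave */
    PHASE_STOP
} i2c_phase_kind;

typedef struct {
    i2c_phase_kind kind;
    uint8_t value; /* address or data byte; 0 for START/STOP/READ */
} i2c_phase;

/* Register write: data only flows master -> slave, so one transfer suffices. */
size_t i2c_reg_write_phases(uint8_t addr7, uint8_t reg, uint8_t data, i2c_phase *out)
{
    size_t n = 0;
    out[n++] = (i2c_phase){PHASE_START, 0};
    out[n++] = (i2c_phase){PHASE_ADDRESS, (uint8_t)(addr7 << 1)}; /* R/W = 0 */
    out[n++] = (i2c_phase){PHASE_WRITE, reg};                     /* register pointer */
    out[n++] = (i2c_phase){PHASE_WRITE, data};                    /* new register value */
    out[n++] = (i2c_phase){PHASE_STOP, 0};
    return n;
}

/* Register read: write the register pointer, then turn the bus around with a
   repeated START and address the slave for reading. */
size_t i2c_reg_read_phases(uint8_t addr7, uint8_t reg, i2c_phase *out)
{
    size_t n = 0;
    out[n++] = (i2c_phase){PHASE_START, 0};
    out[n++] = (i2c_phase){PHASE_ADDRESS, (uint8_t)(addr7 << 1)};        /* R/W = 0 */
    out[n++] = (i2c_phase){PHASE_WRITE, reg};                            /* register pointer */
    out[n++] = (i2c_phase){PHASE_REPEATED_START, 0};
    out[n++] = (i2c_phase){PHASE_ADDRESS, (uint8_t)((addr7 << 1) | 1u)}; /* R/W = 1 */
    out[n++] = (i2c_phase){PHASE_READ, 0}; /* slave drives data; master NACKs the last byte */
    out[n++] = (i2c_phase){PHASE_STOP, 0};
    return n;
}
```

For address 0x10 and register 0x26, the read model produces the same four steps observed on the scope: 0x20, 0x26, repeated START with 0x21, then the data byte.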


Hello Donald:

Your code seems ok to me.

I did a quick test with KDS v.1.1.1 and FRDM-K22F calling the MasterSendDataBlocking() function and the interrupt triggers just fine.

Can you try removing the call to the MasterReceive function, i.e. calling only MasterSend, and see whether the issue persists?

I am curious about how you are reading the IICIE bit. Did you install the registers view plugin for KDS?

If you have already identified the API I2C_HAL_SetIntCmd as the problem, then it might be related to the compiler (optimizations, maybe). In debug mode you have the Disassembly view, so I would compare the code generated for this API when calling MasterReceive vs. MasterSend.

Regards!,

Jorge Gonzalez

-----------------------------------------------------------------------------------------------------------------------

Note: If this post answers your question, please click the Correct Answer button. Thank you!

-----------------------------------------------------------------------------------------------------------------------


Hello Donald:

- What pins are you using? In the file pin_mux.c you will find a function called "pin_mux_I2C()". The pins you use need to be configured as open-drain, and external pull-ups are also necessary.

- The repeated start erratum is not present in the K22FN512.

- How are you using the APIs? Can you show a code snippet alongside the codec protocol?

- As you mentioned, both driver functions use the same HAL API to enable interrupts, so it would be strange if this were the problem. Besides, we have used the I2C driver without issues; check this: KSDK I2C EEPROM Example.

Regards!,

Jorge Gonzalez


I have looked at the i2c_comm master example and had it working with uC/OS-III to send between I2C0 and I2C1 on the same board. Right now I'm running this bare metal (BM_OS). I will look at the EEPROM example.

I definitely have open-drain enabled in my pin setup:

```c
/***** I2C PINS *****/
/* I2C0 */
/* PORTB_PCR2 - CLOCK */
PORT_HAL_SetMuxMode(g_portBaseAddr[1], 2u, kPortMuxAlt2);
PORT_HAL_SetOpenDrainCmd(g_portBaseAddr[1], 2u, true);
/* PORTB_PCR3 - DATA */
PORT_HAL_SetMuxMode(g_portBaseAddr[1], 3u, kPortMuxAlt2);
PORT_HAL_SetOpenDrainCmd(g_portBaseAddr[1], 3u, true);
```

External pull-ups are used. Right now they are 10k, but I have tried two other values (1k, 2.2k) with the same results: RX works, TX doesn't.

Declared locally in the function:

```c
/***** I2C VARS *****/
i2c_master_state_t i2c0_master_state;
i2c_status_t i2c_status;
i2c_device_t i2c0_codec =
{
    .address = 0x10,       /* 7-bit address; 0x20/0x21 on the wire for W/R */
    .baudRate_kbps = 400
};

/***** I2C TX/RX VARIABLES *****/
uint8_t cmdbuff[1];
uint8_t txbuff[1];
uint8_t rxbuff[1];
```

Init:

```c
/****** INITIALIZE I2C ******/
I2C_DRV_MasterInit(0, &i2c0_master_state);

/***** TEST REMOVE AFTERWARDS *****/
cmdbuff[0] = 0x26;
rxbuff[0] = 0;
i2c_status = I2C_DRV_MasterReceiveDataBlocking(0, &i2c0_codec, cmdbuff,
        sizeof(cmdbuff), rxbuff, sizeof(rxbuff), 500);
```

^ This sets rxbuff[0] to 0x80, which is the value of register 0x26 in the codec after reset... good to go.

```c
cmdbuff[0] = 0x26;
txbuff[0] = (0 | (1 << 6));
i2c_status = I2C_DRV_MasterSendDataBlocking(0, &i2c0_codec, cmdbuff,
        sizeof(cmdbuff), txbuff, sizeof(txbuff), 500);
```

^ This sends the address and the slave ACKs; however, the function that should set IICIE (bit 6) does not set it, so the driver never goes through the ISR after I2C_HAL_SendStart, and the status comes back as 0x04 (timeout).

------

I had cmdbuff, txbuff, and rxbuff set up as pointers, but I was getting compiler errors about passing arguments of incompatible type, which I think makes sense because an array name already decays to a pointer when it is passed to a function. Other than that, I'm stumped. Thank you for the quick response.
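On the pointer question: an array is not a pointer, but an array expression converts ("decays") to a pointer to its first element when passed to a function, so cmdbuff can be passed straight to a uint8_t * parameter. One likely cause of the incompatible-type error, assuming the buffers were declared as something like uint8_t *cmdbuff[1], is that this makes an array of pointers, which decays to uint8_t ** instead. Keeping real arrays also matters for the length arguments: sizeof(cmdbuff) yields the buffer length only for an array; on a pointer it yields the pointer size. The sketch uses a hypothetical stand-in for the driver call, not the KSDK function.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for a driver call taking (buffer, length);
   it simply returns the length it was given. */
static size_t fake_send(const uint8_t *buf, size_t len)
{
    (void)buf;
    return len;
}

size_t length_seen_with_array(void)
{
    uint8_t cmdbuff[1] = {0x26};
    /* cmdbuff decays to &cmdbuff[0] in the call; sizeof still sees the array. */
    return fake_send(cmdbuff, sizeof(cmdbuff));
}

size_t length_seen_with_pointer(void)
{
    uint8_t storage[1] = {0x26};
    uint8_t *cmdptr = storage;
    /* sizeof on a pointer is the pointer size, not the buffer length. */
    return fake_send(cmdptr, sizeof(cmdptr));
}
```

On the Cortex-M4 the pointer version would pass a length of 4 instead of 1, which would make the driver send extra bytes.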


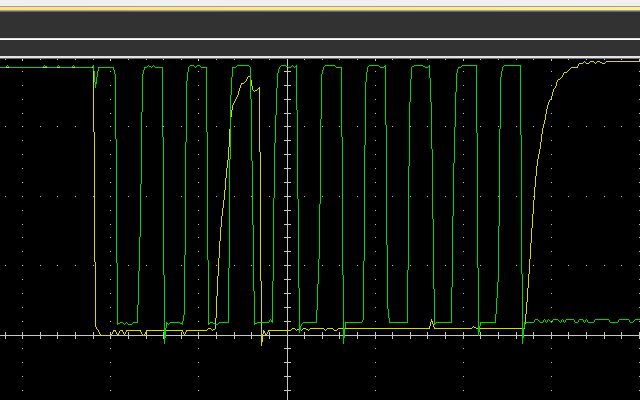

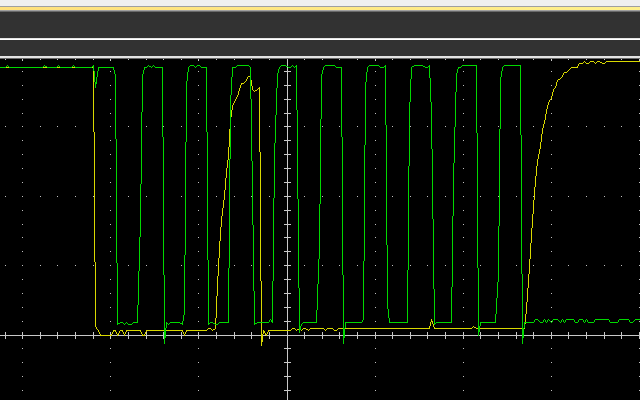

Look at the assembly... bingo! Maybe?

The question is, how do I change it?

RECEIVE:

```
413     I2C_HAL_SetDirMode(baseAddr, kI2CSend);
0000157c:   ldr r0, [r7, #24]
0000157e:   movs r1, #1
00001580:   bl 0xe70 <I2C_HAL_SetDirMode>
416     I2C_HAL_ClearInt(baseAddr);
00001584:   ldr r0, [r7, #24]
00001586:   bl 0xf88 <I2C_HAL_ClearInt>
417     I2C_HAL_SetIntCmd(baseAddr, true);
0000158a:   ldr r0, [r7, #24]
0000158c:   movs r1, #1
0000158e:   bl 0xf60 <I2C_HAL_SetIntCmd>
```

SEND:

```
323     I2C_HAL_SetDirMode(baseAddr, kI2CSend);
0000143c:   ldr r0, [r7, #24]
0000143e:   movs r1, #1
00001440:   bl 0xe70 <I2C_HAL_SetDirMode>
326     I2C_HAL_ClearInt(baseAddr);
00001444:   ldr r0, [r7, #24]
00001446:   bl 0xf88 <I2C_HAL_ClearInt>
328
0000144a:   ldr r0, [r7, #24]
0000144c:   movs r1, #0
0000144e:   bl 0xf60 <I2C_HAL_SetIntCmd>
331     I2C_HAL_SendStart(baseAddr);
00001452:   ldr r0, [r7, #24]
00001454:   bl 0x25e8 <I2C_HAL_SendStart>
```
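Reading the listing: under the ARM calling convention the second argument is passed in r1, so movs r1, #1 before bl I2C_HAL_SetIntCmd corresponds to I2C_HAL_SetIntCmd(baseAddr, true), while the SEND path loads movs r1, #0, i.e. passes false, and its source line (328) shows no text. That is consistent with IICIE never being set on sends. The sketch below models the relevant I2Cx_C1 bits so a value read back in a register view can be checked; the masks follow the Kinetis reference manual bit layout, but the helper names are hypothetical and nothing here touches the hardware.

```c
#include <stdbool.h>
#include <stdint.h>

/* I2Cx_C1 bit masks as laid out in the Kinetis K22 reference manual
   (the device headers name these I2C_C1_IICEN_MASK, I2C_C1_IICIE_MASK, ...). */
#define C1_IICEN 0x80u /* bit 7: I2C module enable    */
#define C1_IICIE 0x40u /* bit 6: I2C interrupt enable */
#define C1_MST   0x20u /* bit 5: master mode select   */
#define C1_TX    0x10u /* bit 4: transmit mode select */

/* Hypothetical check: an interrupt-driven blocking transfer can only make
   progress if both the module and its interrupt are enabled. */
static bool c1_can_run_interrupt_transfer(uint8_t c1)
{
    return (c1 & C1_IICEN) != 0u && (c1 & C1_IICIE) != 0u;
}

/* Hypothetical helper: roughly the effect SetIntCmd(true/false) is expected
   to have on C1, i.e. what was done by hand in the debugger. */
static uint8_t c1_set_iicie(uint8_t c1, bool enable)
{
    return enable ? (uint8_t)(c1 | C1_IICIE)
                  : (uint8_t)(c1 & (uint8_t)~C1_IICIE);
}
```

A C1 value of 0xB0 (enabled, master, transmit, IICIE clear) just before I2C_HAL_SendStart fails the first check, which matches the send-path timeout.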


Hi Donald:

Yes, that must be the problem. This is what I get:

This code is part of the platform library, so you should look there instead of your application. Do you remember changing any project setting of the K22 KSDK library (e.g. optimizations)?

Also, notice that in my case the MasterSend() code calls the API at line 327, while in your case it is missing.

Regards!

Jorge Gonzalez


I rebuilt the library and the i2c master driver c file showed up as modified upon rebuild. Everything is working now. Thank you.


Thank you for verifying. I haven't changed anything in the lib.a or its associated files.

Do you think rebuilding the library could solve this?

I'm not super savvy when it comes to toolchains and compiler mechanics, so if you could offer some suggestions about what to look for or how to approach the error, I can probably hunt it down and fix it.


- I am using the EmbSys plug-in to do register reads (another Eclipse on MCU tutorial), and that's how I was able to set the IICIE bit.

- I just built a test function with only the bare essentials running, but I see the same issue. I will upload an exported archive if you get a chance to check it.

- I commented out Master Receive. Send still doesn't work on its own, but it returns 0x00 if I manually set the IICIE bit using a breakpoint before Send Start.

- I will check the disassembly view, although I'm not much of an assembly guy, so I'll probably end up posting it here.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Maybe something to do with this? Re: Kinetis K - I2C: Repeat start cannot be generated?