
MCUX on RT1050 - MMC stack won't initialize


TL;DR -- When starting the MMC stack by calling mmc_disk_initialize() which calls MMC_Init(), a possibly incorrect switch() statement causes a subroutine to generate an assert(). (EDIT 9/16: The assert() occurs when the extended CSD data comes back all zeroes, and this occurs when a cacheable memory area, i.e. not SRAM_DTC, is used to store global data. See discussion below.)

So I'm trying to get an eMMC device (ISSI IS21ES04G) to work on an RT1050-based board. We have the device attached to GPIO_SD_B1_00-06 (USDHC2) in 4-bit mode. Here's the schematic:

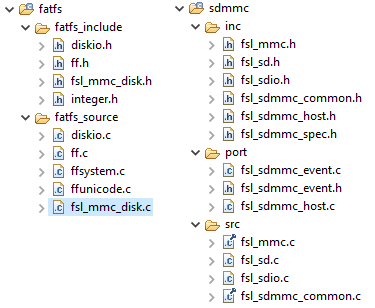

Since there's no SDK 2.6.1 example for an eMMC device, I instead took some files from the "fatfs_usdcard" example, cut out the SD-specific files, and imported MMC-specific source files directly from the SDK. Here's what I have:

Of note, I deleted fsl_sd_disk.* and replaced them with fsl_mmc_disk.*. I also customized my SDHC-specific board.h information:

/* SD/MMC configuration. */

#define BOARD_USDHC1_BASEADDR USDHC1

#define BOARD_USDHC2_BASEADDR USDHC2

/* EVKB uses PLL2_PFD2 (166 MHz) to run USDHC, so its divider is 1. On the

* MCU, we run PLL2_PFD0 at 396 MHz, so its divider is 2, yielding 198 MHz. */

#define BOARD_USDHC1_CLK_FREQ (CLOCK_GetSysPfdFreq(kCLOCK_Pfd0) / (CLOCK_GetDiv(kCLOCK_Usdhc1Div) + 1U))

#define BOARD_USDHC2_CLK_FREQ (CLOCK_GetSysPfdFreq(kCLOCK_Pfd0) / (CLOCK_GetDiv(kCLOCK_Usdhc2Div) + 1U))

#define BOARD_USDHC1_IRQ USDHC1_IRQn

#define BOARD_USDHC2_IRQ USDHC2_IRQn

/* eMMC is always present. */

#define BOARD_USDHC_CARD_INSERT_CD_LEVEL (1U)

#define BOARD_USDHC_CD_STATUS() (BOARD_USDHC_CARD_INSERT_CD_LEVEL)

#define BOARD_USDHC_CD_GPIO_INIT() do { } while (0)

/* eMMC is always powered. */

#define BOARD_USDHC_SDCARD_POWER_CONTROL_INIT() do { } while (0)

#define BOARD_USDHC_SDCARD_POWER_CONTROL(state) do { } while (0)

#define BOARD_USDHC_MMCCARD_POWER_CONTROL_INIT() do { } while (0)

#define BOARD_USDHC_MMCCARD_POWER_CONTROL(state) do { } while (0)

/* Our device is on USDHC2 for Rev. A/B, and on USDHC1 for Rev. C. */

#define BOARD_MMC_HOST_BASEADDR BOARD_USDHC2_BASEADDR

#define BOARD_MMC_HOST_CLK_FREQ BOARD_USDHC2_CLK_FREQ

#define BOARD_MMC_HOST_IRQ BOARD_USDHC2_IRQ

#define BOARD_SD_HOST_BASEADDR BOARD_USDHC2_BASEADDR

#define BOARD_SD_HOST_CLK_FREQ BOARD_USDHC2_CLK_FREQ

#define BOARD_SD_HOST_IRQ BOARD_USDHC2_IRQ

#define BOARD_MMC_VCCQ_SUPPLY kMMC_VoltageWindow170to195

#define BOARD_MMC_VCC_SUPPLY kMMC_VoltageWindows270to360

/* Define these to indicate we don't support 1.8V or 8-bit data bus. */

#define BOARD_SD_SUPPORT_180V SDMMCHOST_NOT_SUPPORT

#define BOARD_MMC_SUPPORT_8BIT_BUS SDMMCHOST_NOT_SUPPORT

In my test function, I initialize the MMC stack:

SDMMCHOST_SET_IRQ_PRIORITY(BOARD_MMC_HOST_IRQ, 11);

DSTATUS dstatus = mmc_disk_initialize(MMCDISK);

if (RES_OK != dstatus) {

printf("mmc_disk_initialize() failed\n");

return -1;

}

When I trace into mmc_disk_initialize(), everything seems OK at first. It correctly sets the host base address (USDHC2) and source clock rate (198 MHz... I use PLL2_PFD0 @ 396 MHz divided by 2 to drive the USDHC modules), then calls MMC_Init(). MMC_HostInit() passes fine, then MMC_PowerOnCard(), then MMC_PowerOffCard(). Then it calls MMC_CardInit(), and that goes a fair distance. It sets the 400 kHz clock OK, gets the host capabilities, tells the card to go idle, gets the card CID, sets the relative address, sets max frequency, puts the card in transfer state, gets extended CSD register content, and sets the block size. Then it calls this:

/* switch to host support speed mode, then switch MMC data bus width and select power class */

if (kStatus_Success != MMC_SelectBusTiming(card))

{

return kStatus_SDMMC_SwitchBusTimingFailed;

}

The guts of the function:

static status_t MMC_SelectBusTiming(mmc_card_t *card)

{

assert(card);

mmc_high_speed_timing_t targetTiming = card->busTiming;

switch (targetTiming)

{

case kMMC_HighSpeedTimingNone:

case kMMC_HighSpeed400Timing:

if ((card->flags & (kMMC_SupportHS400DDR200MHZ180VFlag | kMMC_SupportHS400DDR200MHZ120VFlag)) && ((kSDMMCHOST_SupportHS400 != SDMMCHOST_NOT_SUPPORT)))

{

/* switch to HS200 perform tuning */

if (kStatus_Success != MMC_SwitchToHS200(card, SDMMCHOST_SUPPORT_HS400_FREQ / 2U))

{

return kStatus_SDMMC_SwitchBusTimingFailed;

}

/* switch to HS400 */

if (kStatus_Success != MMC_SwitchToHS400(card))

{

return kStatus_SDMMC_SwitchBusTimingFailed;

}

break;

}

case kMMC_HighSpeed200Timing:

if ((card->flags & (kMMC_SupportHS200200MHZ180VFlag | kMMC_SupportHS200200MHZ120VFlag)) && ((kSDMMCHOST_SupportHS200 != SDMMCHOST_NOT_SUPPORT)))

{

if (kStatus_Success != MMC_SwitchToHS200(card, SDMMCHOST_SUPPORT_HS200_FREQ))

{

return kStatus_SDMMC_SwitchBusTimingFailed;

}

break;

}

case kMMC_HighSpeedTiming:

if (kStatus_Success != MMC_SwitchToHighSpeed(card))

{

return kStatus_SDMMC_SwitchBusTimingFailed;

}

break;

default:

card->busTiming = kMMC_HighSpeedTimingNone;

}

return kStatus_Success;

}

When I step into this function, card->busTiming is 0 (kMMC_HighSpeedTimingNone) and card->flags is 0x100 (kMMC_SupportHighCapacityFlag). According to the logic of this function, because our timing is None, we go through all of the switch() cases. In this application, kSDMMCHOST_SupportHS400 is set to SDMMCHOST_NOT_SUPPORT, so the first branch (HS400) is optimized out. The second branch (HS200) is evaluated, but because neither of the HS200 flags is set, we skip it. Then we fall through into the kMMC_HighSpeedTiming branch, and we call MMC_SwitchToHighSpeed(). This is where things go wrong.
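To make the fall-through easier to follow, here is a minimal host-side sketch of the same cascade. It is not the SDK code: the demo_* names, the HS200/HS400 flag bit positions, and the two host-capability booleans are invented for illustration; only the 0x100 high-capacity value is taken from the debugger reading above.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-ins for the SDK timing enum; values are illustrative. */
typedef enum
{
    kDemoTimingNone = 0,
    kDemoTimingHighSpeed,
    kDemoTimingHS200,
    kDemoTimingHS400
} demo_timing_t;

/* Hypothetical flag bits, except 0x100 which matches the value in the post. */
#define DEMO_FLAG_HS200 (1U << 0)
#define DEMO_FLAG_HS400 (1U << 1)
#define DEMO_FLAG_HIGH_CAPACITY (1U << 8)

typedef struct
{
    demo_timing_t busTiming; /* timing requested before the switch */
    uint32_t flags;          /* card capability flags */
} demo_card_t;

/* Returns the timing mode the cascade would try to switch to. */
static demo_timing_t demo_select_timing(const demo_card_t *card, bool hostHs400, bool hostHs200)
{
    assert(card);

    switch (card->busTiming)
    {
        case kDemoTimingNone:
        case kDemoTimingHS400:
            if (((card->flags & DEMO_FLAG_HS400) != 0U) && hostHs400)
            {
                return kDemoTimingHS400;
            }
            /* fall through */
        case kDemoTimingHS200:
            if (((card->flags & DEMO_FLAG_HS200) != 0U) && hostHs200)
            {
                return kDemoTimingHS200;
            }
            /* fall through */
        case kDemoTimingHighSpeed:
            return kDemoTimingHighSpeed;
        default:
            return kDemoTimingNone;
    }
}
```

With a requested timing of None and only the high-capacity flag set, the sketch lands on the plain high-speed mode, which matches the trace described above.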

Here is the relevant code in MMC_SwitchToHighSpeed():

static status_t MMC_SwitchToHighSpeed(mmc_card_t *card)

{

assert(card);

uint32_t freq = 0U;

/* check VCCQ voltage supply */

[...]

if (kStatus_Success != MMC_SwitchHSTiming(card, kMMC_HighSpeedTiming, kMMC_DriverStrength0))

{

return kStatus_SDMMC_SwitchBusTimingFailed;

}

if ((card->busWidth == kMMC_DataBusWidth4bitDDR) || (card->busWidth == kMMC_DataBusWidth8bitDDR))

{

freq = MMC_CLOCK_DDR52;

SDMMCHOST_ENABLE_DDR_MODE(card->host.base, true, 0U);

}

else if (card->flags & kMMC_SupportHighSpeed52MHZFlag)

{

freq = MMC_CLOCK_52MHZ;

}

else if (card->flags & kMMC_SupportHighSpeed26MHZFlag)

{

freq = MMC_CLOCK_26MHZ;

}

card->busClock_Hz = SDMMCHOST_SET_CARD_CLOCK(card->host.base, card->host.sourceClock_Hz, freq);

[...]

card->busTiming = kMMC_HighSpeedTiming;

return kStatus_Success;

}

freq is initialized to 0 at the top of the function. We tell the MMC controller to switch to HS timing with 50 ohm drive strength, then we set freq based on one of three sets of conditions:

- If the bus width is 4-bit DDR or 8-bit DDR, set freq to 52000000 (and enable DDR mode).

- If HS 52 MHz flag is enabled, set freq to 52000000.

- If HS 26 MHz flag is enabled, set freq to 26000000.

However, if none of those three conditions are met, then freq remains 0. Thus, the call to SDMMCHOST_SET_CARD_CLOCK() triggers an assert(), because USDHC_SetSdClock() asserts that freq cannot be 0. And that's it, game over.
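One defensive option, sketched below under assumptions, is to treat "no supported frequency" as an error instead of passing 0 to the clock setup. This is not the SDK implementation and not a confirmed fix: the demo_* names and the flag bit values are invented, and only the 26 MHz and 52 MHz figures come from the list above.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define DEMO_CLOCK_26MHZ (26000000U)
#define DEMO_CLOCK_52MHZ (52000000U)

/* Hypothetical flag bits; the real SDK values may differ. */
#define DEMO_FLAG_HS26 (1U << 0)
#define DEMO_FLAG_HS52 (1U << 1)

typedef struct
{
    uint32_t flags; /* capability flags derived from the extended CSD */
    bool ddrBus;    /* true if a 4-bit or 8-bit DDR bus width was selected */
} demo_hs_card_t;

/* Writes the chosen clock to *freqHz and returns true, or returns false
 * (leaving *freqHz untouched) when none of the three conditions applies. */
static bool demo_pick_high_speed_freq(const demo_hs_card_t *card, uint32_t *freqHz)
{
    assert(card);
    assert(freqHz);

    if (card->ddrBus)
    {
        *freqHz = DEMO_CLOCK_52MHZ;
    }
    else if ((card->flags & DEMO_FLAG_HS52) != 0U)
    {
        *freqHz = DEMO_CLOCK_52MHZ;
    }
    else if ((card->flags & DEMO_FLAG_HS26) != 0U)
    {
        *freqHz = DEMO_CLOCK_26MHZ;
    }
    else
    {
        return false; /* caller can report a switch failure instead of asserting */
    }

    return true;
}
```

A caller could map the false return to an error status, so a card reporting no high-speed capability would fail initialization cleanly rather than trip the assert.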

I have not yet put a scope on the data/clk/cmd lines to verify there is activity; that'll probably be my next step. My question is... what's going wrong here, exactly? By the time execution reaches MMC_SelectBusTiming(), card->busTiming is set to 0 (kMMC_HighSpeedTimingNone) and the only flag set in card->flags is 0x100 (kMMC_SupportHighCapacityFlag). So why does MMC_SwitchToHighSpeed() assume that either the bus width has already been selected to be 4-bit or 8-bit, or that HS26 or HS52 are already indicated in the card flags? Has this code actually been tested on an MMC card? What should I be doing differently? Thanks.

David R.


Hi Mike,

So I tried the solution of using AT_NONCACHEABLE_SECTION_ALIGN() macros to declare the Ethernet data buffers, but I found that they had no effect on the application. After exploring what those macros do and examining the MAP files, I arrived at a complete solution. (It's not the only possible solution, but it works for my application.)

Here is the definition for AT_NONCACHEABLE_SECTION_ALIGN() in fsl_common.h:

#define AT_NONCACHEABLE_SECTION(var) __attribute__((section("NonCacheable,\"aw\",%nobits @"))) var

#define AT_NONCACHEABLE_SECTION_ALIGN(var, alignbytes) \

__attribute__((section("NonCacheable,\"aw\",%nobits @"))) var __attribute__((aligned(alignbytes)))

It simply declares the variable to belong to a section named "NonCacheable". Importantly, the default linker script does absolutely nothing with this declaration; simply using this declaration will have no effect on the application. I applied these macros to the Ethernet buffers:

err_t ethernetif0_init(struct netif *netif)

{

static struct ethernetif ethernetif_0;

AT_NONCACHEABLE_SECTION_ALIGN(static enet_rx_bd_struct_t rxBuffDescrip_0[ENET_RXBD_NUM], FSL_ENET_BUFF_ALIGNMENT);

AT_NONCACHEABLE_SECTION_ALIGN(static enet_tx_bd_struct_t txBuffDescrip_0[ENET_TXBD_NUM], FSL_ENET_BUFF_ALIGNMENT);

AT_NONCACHEABLE_SECTION_ALIGN(static rx_buffer_t rxDataBuff_0[ENET_RXBD_NUM], FSL_ENET_BUFF_ALIGNMENT);

AT_NONCACHEABLE_SECTION_ALIGN(static tx_buffer_t txDataBuff_0[ENET_TXBD_NUM], FSL_ENET_BUFF_ALIGNMENT);

[...]

}

But they remained allocated to the same area of memory (SRAM_OC), and the application remained non-functional (app runs, but unit fails to respond to ping).

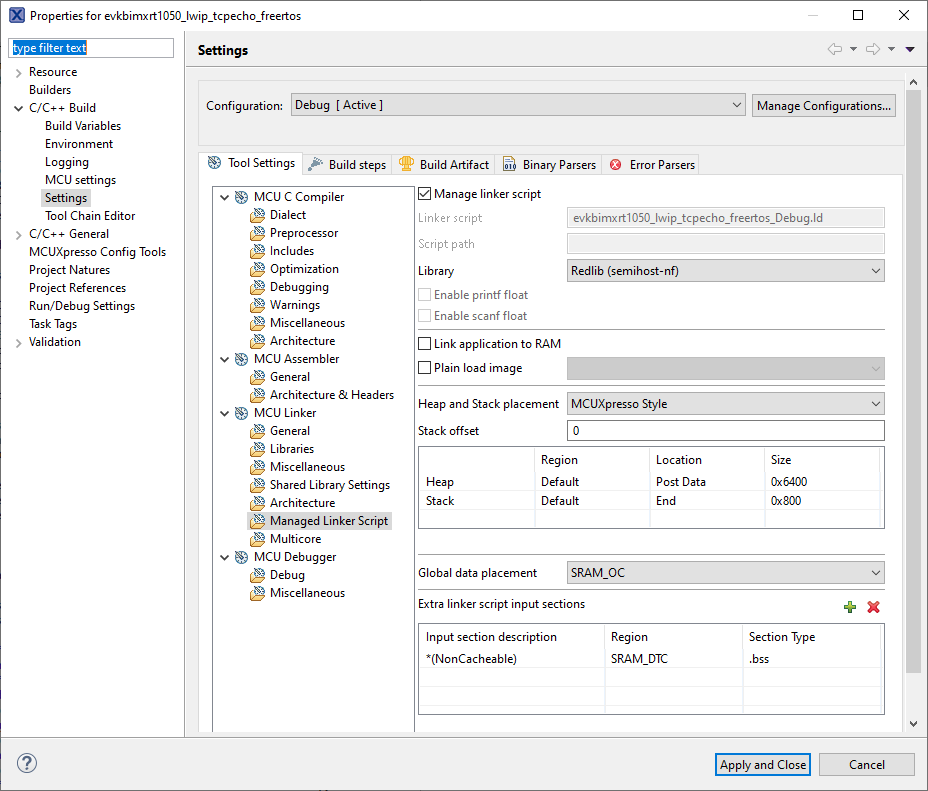

It is necessary to tell the linker to do something with regard to the "NonCacheable" section name. In my case, I created a custom linker script input section, like this:

The entry there says that anything in section "NonCacheable" should be located in SRAM_DTC (by definition non-cacheable) and placed in the .bss section. After making this change and recompiling, the application now works as expected.

This same solution can be applied to the MMC (and presumably SD) examples. For MMC, edit fsl_mmc_disk.c (where g_mmc is defined) like so:

/*******************************************************************************

* Variables

******************************************************************************/

/*! @brief Card descriptor */

AT_NONCACHEABLE_SECTION(mmc_card_t g_mmc);

Then add the "NonCacheable" definition to the linker script as above, and now "g_mmc" is located in SRAM_DTC, while the remaining global data is in SRAM_OC as previously declared.

There may well be other means of declaring where "NonCacheable" data should go, but this seems the easiest to implement -- use SRAM_DTC for all non-cacheable data, and remaining global data can reside wherever (SRAM_OC, BOARD_SDRAM, etc).

It should now be NXP's task to re-test each of its SDK examples to ensure that there are no other cache issues in the SDK. As Jack King has already identified, a caching issue exists with the USB examples. Thanks for your assistance in resolving this matter.

David R.


Addendum: While declaring "mmc_card_t g_mmc" as non-cacheable allows the MMC driver to successfully retrieve the extended CSD information and thus initialize the card, the FatFS stack and demo code still fail to execute with data cache enabled. These are my data declarations in my main program:

AT_NONCACHEABLE_SECTION(static FATFS g_fileSystem); /* File system object */

AT_NONCACHEABLE_SECTION(static FIL g_fileObject); /* File object */

#define BUFFER_SIZE (100U)

AT_NONCACHEABLE_SECTION_ALIGN(uint8_t g_bufferWrite[SDK_SIZEALIGN(BUFFER_SIZE, SDMMC_DATA_BUFFER_ALIGN_CACHE)],

MAX(SDMMC_DATA_BUFFER_ALIGN_CACHE, SDMMCHOST_DMA_BUFFER_ADDR_ALIGN));

AT_NONCACHEABLE_SECTION_ALIGN(uint8_t g_bufferRead[SDK_SIZEALIGN(BUFFER_SIZE, SDMMC_DATA_BUFFER_ALIGN_CACHE)],

MAX(SDMMC_DATA_BUFFER_ALIGN_CACHE, SDMMCHOST_DMA_BUFFER_ADDR_ALIGN));

So the working buffers that FatFS uses as well as the card struct should all be in non-cacheable memory, but something fails when the demo code tries to create a directory; the call to find_volume() returns FR_NO_FILESYSTEM because of a bad return value from check_fs(). So I'm going to have to keep data cache disabled until NXP completes their internal review of their RT1050 SDK code and drivers.
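For reference, the size rounding applied to BUFFER_SIZE in the declarations above can be sketched as follows. This is a stand-alone illustration rather than the SDK macro itself: demo_size_align() is a made-up helper, and the 32-byte figure used in the note below is an assumption based on the Cortex-M7 L1 cache line size, so check the actual value of SDMMC_DATA_BUFFER_ALIGN_CACHE in your SDK.

```c
#include <assert.h>
#include <stddef.h>

/* Round size up to the next multiple of align.
 * align must be a nonzero power of two (hypothetical helper, not the SDK macro). */
static size_t demo_size_align(size_t size, size_t align)
{
    assert((align != 0U) && ((align & (align - 1U)) == 0U));
    return (size + (align - 1U)) & ~(align - 1U);
}
```

Under the 32-byte assumption, a 100-byte buffer would be padded to 128 bytes, so it occupies whole cache lines and does not share a line with unrelated data.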

David R.


My temporary fix is to place the Globals in the DTCM (non-cached) region. It seems to avoid the massive performance hit when disabling cache.


Glad you got it working! I tried something similar with the USB examples, which have more sections defined in the macros.

It still didn't change the USB enumeration problem.

I'll try your fix on my FatFS sample project.


Yeah, I spoke a bit too soon apparently... the fix works for making the MMC driver itself cooperate, but FatFS still fails apparently, even with the FatFS global objects wrapped with non-cacheable macros. See below.

David R.


Hmm, that's unfortunate, but similar to what I am seeing as well. The non-FatFS example does work, but using FatFS, it still fails on mkfs for me.


Can anyone from NXP provide some insight on this issue? Thanks.

David R.


Hi David,

If possible, could you provide your test project?

Thanks.

Mike


OK, so after a thorough exercise in creating a dedicated test program, I think I have a better understanding of the failure mode, but it also raises a number of interesting questions.

I can't post my original program that I have been using for testing, because it contains a number of proprietary libraries. (If you really want to examine it, I can send it to you outside of this forum.) So instead I created a new SDK FreeRTOS program (attached) with the SDMMC and FatFs components, and then modified the board support code to match my environment (debug UART on LPUART8, eMMC device on USDHC2, etc). I then began single-stepping through MMC_Init() and in particular MMC_CardInit() to see exactly what is being done with the card and which commands certain bits of data come from. I made a record of this single-stepping in an Excel sheet (attached).

What was interesting is that MMC_CardInit() was no longer crashing out in the same place that it was previously. This is because card->flags now had a proper value (0x357) instead of just the high-capacity flag (0x100). In tracing through, I found that it was the "cardType" field (0x57) in the extended CSD data that was being OR'ed into card->flags. Because of this, the code was now calling into MMC_SwitchToHS200(). And the call very nearly succeeded, except that the response to CMD6 (Switch) was indicating a switch error (0x80), thus causing the process to fail. (If you know why it might fail to switch, do let me know.)

So... the dedicated test program did not fail in the same way as my original program. Strange. So I fire up my original program again, and sure enough, it hits the assert() I described in my original post, and card->flags is now 0x100 again. But why? So I trace through MMC_CardInit() again... it gets the CID data fine, it gets the CSD data fine. But when it calls MMC_SendExtendedCsd(), the response is OK (0x900), but all 512 bytes of raw extended CSD data are 0. This is different from the dedicated test program, which retrieved useful, sensible values for all 512 bytes of data. Because the extended CSD data is now all 0, the cardType is 0, meaning card->flags remains 0x100 instead of becoming 0x157. And that's why my original program hits the assert(), because cardType was an illegal value.

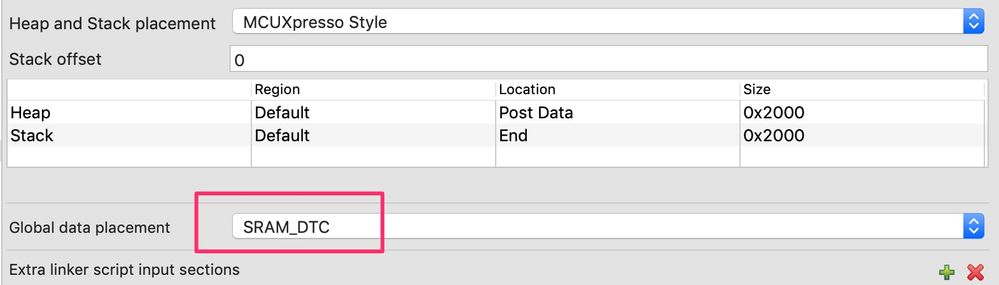
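The flag arithmetic described above can be reproduced in a few lines. This is only a sketch of the OR step: demo_apply_card_type() is a hypothetical helper, not an SDK function, and the values 0x100 and 0x57 are the ones quoted in this post.

```c
#include <assert.h>
#include <stdint.h>

/* Value of the high-capacity flag as reported in the post. */
#define DEMO_FLAG_HIGH_CAPACITY (0x100U)

/* OR the extended CSD cardType byte into the card flags (hypothetical helper). */
static void demo_apply_card_type(uint32_t *flags, uint8_t cardType)
{
    assert(flags);
    *flags |= (uint32_t)cardType;
}
```

Starting from 0x100, a cardType of 0x57 yields 0x157, while an all-zero extended CSD leaves the flags at 0x100, which is the state that later reaches the assert().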

So now my bigger question is... why am I getting back all zeroes in my original program, when I know that my eMMC device returns valid extended CSD when I use the dedicated test program? I am running the exact same SDMMC driver stack in both programs. The only thing I can think of, is that my original program uses SDRAM for all of its .bss and static data (system heap and stack go in SRAM_DTC), while the dedicated test program uses SRAM_DTC for all data (no SDRAM use at all). Could this somehow be affecting the operation of the driver?

Tangentially related, I've tried getting lwIP running within my program, back when my program lived on an EVKB instead of our custom board. And I found that while the SDK example ran fine on the EVKB (I could get ping, etc), if I ran the exact same SDK program within the environment of my custom program, which uses SDRAM for everything, the lwIP example would initialize, but I could never get any ping or tcpecho response. I mean, it's just speculation, but is my use of SDRAM somehow affecting both my MMC stack and my lwIP stack? Is there anything special I have to do when using SDRAM for data memory? (I'm using either the HyperFlash on the EVKB or QSPI flash on our custom board for program storage and execution-in-place.)

Anyway, that's a lot of information I just gave you. Any thoughts?

David Rodgers


Further update: I have a diagnosis and a workaround, but I need you (NXP) to tell me what should be done differently to avoid requiring the workaround.

The issue is the data cache. SRAM_DTC is not cached, and so if the global data (including the mmc_card_t struct) resides in SRAM_DTC, then the driver functions correctly. If the global data is placed in SRAM_OC or BOARD_SDRAM (both cacheable by default), then the MMC_SendExtendedCsd() function fails to retrieve any data, meaning that card->rawExtendedCsd[] remains zero. Thus, the driver later chokes because card->extendedCsd.cardType is zero.
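A toy model may help picture how a cached buffer can read back as all zeroes after a DMA transfer. It is a host-side simulation under stated assumptions, not a description of what the SDK driver actually does: the demo_* types and functions are invented, and the model collapses the whole buffer into a single "cache line" that the CPU fills on its first read.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define DEMO_BUF_SIZE (16U)

typedef struct
{
    uint8_t ram[DEMO_BUF_SIZE];   /* what is physically stored in memory */
    uint8_t cache[DEMO_BUF_SIZE]; /* the CPU's cached copy of the same addresses */
    bool cacheValid;              /* true once the CPU has loaded the data into cache */
} demo_mem_t;

/* CPU read: served from the cache when valid, otherwise the cache is filled from RAM first. */
static uint8_t demo_cpu_read(demo_mem_t *mem, uint32_t index)
{
    assert(mem);
    assert(index < DEMO_BUF_SIZE);

    if (!mem->cacheValid)
    {
        memcpy(mem->cache, mem->ram, DEMO_BUF_SIZE);
        mem->cacheValid = true;
    }
    return mem->cache[index];
}

/* DMA write: goes straight to RAM and never updates the cache. */
static void demo_dma_write(demo_mem_t *mem, const uint8_t *src, uint32_t len)
{
    assert(mem);
    assert(src);
    assert(len <= DEMO_BUF_SIZE);
    memcpy(mem->ram, src, len);
}

/* Invalidate: discard the cached copy so the next CPU read refetches from RAM. */
static void demo_cache_invalidate(demo_mem_t *mem)
{
    assert(mem);
    mem->cacheValid = false;
}
```

In this model, a CPU read before the DMA transfer caches zeroes, and a read after the transfer still returns zeroes until the cache is invalidated. Non-cacheable memory such as SRAM_DTC sidesteps the problem because there is no cached copy to go stale.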

The workaround is to call L1CACHE_DisableDCache() (from fsl_cache.h) during system startup. Once the data cache is disabled, the above program (rt1050_emmc_sdram) successfully retrieves extended CSD data from the eMMC device.

By investigating this problem, I have also inadvertently solved another issue I was having, namely that the lwIP TCP/IP stack seemed to be inoperable when called from my main program, which uses SDRAM for global data. After inserting the call to disable cache at startup, the TCP/IP stack now operates.

I have attached a project that demonstrates this failure. This is the SDK example for the RT1050 for lwIP running on FreeRTOS with the tcpecho demo. I have modified the linker configuration to use SRAM_OC for global data, and I have inserted a call to L1CACHE_DisableDCache() in the main program. As uploaded, the program runs on an RT1050 EVKB. If the call to L1CACHE_DisableDCache() is commented out, then the program will appear to initialize and run, but the unit will not respond to ping or tcpecho.

So... what am I as a developer doing wrong? Or does the fault lie with the SDK drivers not properly managing the cacheability of their data structures? Disabling the data cache is a considerable performance hit. What's the correct way to solve this issue?

David Rodgers


Hello David,

Really sorry for the delayed reply.

I need more time to check this issue and will let you know later.

best regards,

Mike


Thanks for letting me know. This is a pretty important issue, because this potentially affects multiple peripheral drivers for the RT1050. I've only run into issues with ENET and MMC, but there are likely other peripherals I'm not using in my application that could also be affected by this (seeming) data cache issue. All the SDK examples (that I've seen) use SRAM_DTC as their default memory space for heap, stack, and global variables; it's only when you declare a region other than SRAM_DTC as the global variable space that this issue appears. Hopefully you can figure out the correct way to resolve this issue; disabling the whole data cache is a workaround, but it's not ideal.

David Rodgers


Dave,

I think you run into a lot of the same issues I have while I'm learning the i.MX... so thanks for the (always) detailed posts and findings!

The SDK for the most part has no real documentation, so I always have to rely on the samples to try and reverse engineer how the drivers work and post anything I find to the forum. Add to that the constant SDK updates and the MCUXpresso IDE updates and it's very tough to get to a known stable environment.

For the sdcard, I also ran into a similar (?) issue with cache and FreeRTOS. I sort of got it working, by setting up a non-cacheable section in memory, but it still wasn't completely reliable: https://community.nxp.com/thread/507433

I will give your approach a try and see if it helps in my case also.

Thanks!


My "approach" (disabling D-cache globally) is simply a workaround for the shortcomings in the NXP driver and middleware. My real gripe is with NXP releasing drivers and middleware with these kinds of landmines embedded in them.


Hi David,

I am sorry for the inconvenience this has caused you.

I will highlight this issue with the MCUXpresso SDK software team.

I will update you if there is any feedback.

best regards,

Mike


Hi Mike,

I appreciate your effort in investigating and resolving these SDK-related issues, but I think you need to understand the "inconvenience" that NXP's customers encounter when trying to integrate incomplete solutions into their products.

My metric for the difficulties I run into is hours. And I have spent many, many hours investigating issues with the silicon, software, and development tools for the RT1050 primarily. Some of these hours we bill as part of the project, some we simply have to eat.

In this instance, the issue is that the middleware for ENET and SD/MMC functions correctly on the RT1050 only when used within the context of the SDK example projects, since they only use SRAM_DTC for global data. And more importantly, there is nothing in the SDK examples to suggest or indicate that changes must be made to the project when used in a different memory environment, let alone explaining what those changes might be and where they need to be made.

So it's both inevitable and entirely predictable that developers like myself and jackking would try to use these middleware packages in projects that use SRAM_OC and/or SDRAM and be confused when they don't work quite right. It's frustrating for me because I now see that others like Jack have encountered this issue before, and we're told "oh, yeah, you need to change your cache settings, edit some stuff in BOARD_ConfigMpu(), and edit the global data declarations in the middleware files."

WHY is NXP not doing this proactively during the SDK development process? You've built this fantastic little screamer of a microcontroller with lots of speed and memory and great peripherals, and then you release software with hidden landmines like this cacheability issue. NXP is supposed to be the expert resource for these parts; surely the engineers who are writing drivers for these peripherals are aware of how the data cache behaves in the Cortex-M7, and would address data cache settings in some way in either the driver/middleware code or in the SDK example application? Instead, we get this:

/*******************************************************************************

* Variables

******************************************************************************/

/*! @brief Card descriptor */

static mmc_card_t g_mmc;

That's the culprit in fsl_mmc_disk.c; that's the card structure that must be allocated to non-cacheable memory. There's no code comment about cacheability or anything; we just expect to plug this code into our projects and have it work, and when it doesn't, we burn countless hours (and thus dollars) trying to figure out what's wrong. Because the NXP engineers didn't do a complete job developing the middleware, others pay the penalty when we try to incorporate it into actual projects.

And honestly, this makes me rather unsure about the rest of your drivers and middleware. What other cache-related bugs are lurking in the SDK? I know that the ENET/lwIP stack is affected by this; how do I even determine which global data variables need to be placed in safe memory for TCP/IP to work? Again, NXP should be taking the expert position on this issue, but so far the responses I've seen have put the onus on developers to modify their individual projects, without any indication that NXP will be fixing this issue in the SDK.

Honestly, I have to consider my workaround (disabling D-cache entirely) a permanent fix, because 1) I don't know where in the ENET driver or the lwIP stack I need to be fixing cacheability settings, and 2) I cannot take the risk that some other SDK peripheral driver may also have a caching issue that I won't know about until I start encountering strange errors. I'm probably fine with the performance hit, but when the product simply has to work, slow is better than non-functional. This is a great silicon platform with powerful software put around it, but NXP can and needs to do better in providing software that runs correctly outside of self-contained SDK examples. Thank you for reading this.

David R.


This thread was INCREDIBLY helpful to me. Thank you for posting all of this. I am now able to make progress with my embedded 1062 project - SPECIFICALLY because of the data I learned here.

Larry


Maybe to add some context on why I am trying to use OCRAM in the first place, and maybe there is a better approach to avoid these landmines.

I have spent many, many hours tracking down issues that are caused by caching problems with the SDK components. Most of them are still unresolved, and much like drodgers I have to put a temporary workaround in place just to continue other work.

Our design will eventually use the IMXRT1062 which has an additional 512K of OCRAM. It is my understanding that this can only be used as OCRAM, not DTCM blocks.

To use this additional RAM in the future, we are trying to master the use of OCRAM on the IMXRT1052 along with the MCUXpresso SDK, placing certain buffers into DTCM when required.

It would be very useful if NXP could provide a document or application note specifically for using the SDK with caching on the IMXRT series, giving examples on how to convert the SDK samples to use OCRAM for ENET, USB, FatFS, SDcard.

As it currently stands, the only clues are in the config files and SDK comments (and this forum) and it seems that even these don't create a complete picture of what needs to be done to successfully use OCRAM with the SDK components.


Hi David,

About your attached modified [lwip_tcpecho] demo, please use the code below to select the non-cacheable memory range:

Make the change below to set the ENET data buffer memory location:

Original definition (enet_ethernetif_kinetis.c):

SDK_ALIGN(static rx_buffer_t rxDataBuff_0[ENET_RXBD_NUM], FSL_ENET_BUFF_ALIGNMENT);

SDK_ALIGN(static tx_buffer_t txDataBuff_0[ENET_TXBD_NUM], FSL_ENET_BUFF_ALIGNMENT);

Change to:

AT_NONCACHEABLE_SECTION_ALIGN(static rx_buffer_t rxDataBuff_0[ENET_RXBD_NUM], FSL_ENET_BUFF_ALIGNMENT);

AT_NONCACHEABLE_SECTION_ALIGN(static tx_buffer_t txDataBuff_0[ENET_TXBD_NUM], FSL_ENET_BUFF_ALIGNMENT);
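As a rough illustration of what such a declaration does at the compiler level, the sketch below wraps the GCC section and aligned attributes in a simplified macro and checks the resulting alignment on a host build. DEMO_SECTION_ALIGN, demo_rx_buffer_t, the 64-byte alignment, and the 1536-byte buffer size are all assumptions made for the example, not the SDK definitions. The attribute only names a section; where that section ends up in memory is decided by the linker script.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Simplified stand-in for the SDK macro. The section attribute is applied
 * only on ELF targets; elsewhere the macro falls back to alignment alone. */
#if defined(__ELF__)
#define DEMO_SECTION_ALIGN(var, alignbytes) \
    __attribute__((section("NonCacheable"))) var __attribute__((aligned(alignbytes)))
#else
#define DEMO_SECTION_ALIGN(var, alignbytes) var __attribute__((aligned(alignbytes)))
#endif

#define DEMO_BUFF_ALIGNMENT (64U) /* assumed alignment, for illustration */
#define DEMO_RXBD_NUM (4U)        /* assumed number of receive buffers */

typedef struct
{
    uint8_t buffer[1536]; /* assumed frame buffer size, a multiple of 64 */
} demo_rx_buffer_t;

/* Same declaration shape as the ENET buffers above, with demo names. */
DEMO_SECTION_ALIGN(static demo_rx_buffer_t demoRxDataBuff[DEMO_RXBD_NUM], DEMO_BUFF_ALIGNMENT);

/* Returns a pointer to one of the demo receive buffers. */
static demo_rx_buffer_t *demo_rx_buffer(uint32_t index)
{
    assert(index < DEMO_RXBD_NUM);
    return &demoRxDataBuff[index];
}

/* True if ptr is a multiple of alignment. */
static bool demo_is_aligned(const void *ptr, uintptr_t alignment)
{
    assert(ptr);
    assert(alignment != 0U);
    return ((uintptr_t)ptr % alignment) == 0U;
}
```

On a host build the section name has no effect on caching; the point is only that the array is tagged with a named section and aligned, which leaves the linker script free to place that section in a non-cacheable region.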

Thanks for your attention.

best regards,

Mike


I created a separate thread for it, but can you look at how a USB example can be converted to run from OCRAM?

Here is the thread: https://community.nxp.com/message/1209743

It looks like USB stack examples also have cache problems.