
Model Size is too large


Hi everyone,

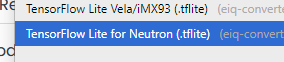

I came across a strange problem. I trained a small object detection CNN myself and successfully created the .tflite file. Then I used the eIQ Toolkit to convert it into an NPU-supported version (see screenshot).

We can see that the input and output are int8, which is great. The total size of this model is just 68KB.

However, when I loaded it onto the MCXN947 board, I found that it had ballooned to 512KB!

I was using the face detection example (dm-multiple-face-detection-on-mcxn947) from Application Code Hub, with small modifications to MODEL_GetOpsResolver so that it registers the necessary operations.

Has anyone seen this problem before? Did I miss anything?

*Other CNN models I tried do not have this problem.

Thanks!!!

Solved! Go to the solution post.



Dear Hang,

Yes, you can check both the attached file.

helmet_quantized.tflite --> Trained and quantized to 8-bit using python code on my host PC. I did not use the eIQ toolkit to train it.

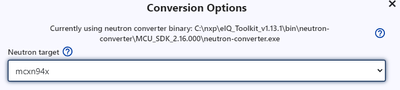

helmet_quantized_converted.tflite --> This is the converted file using eIQv1.13. I use the eIQ Model Tool to open the helmet_quantized.tflite, then convert to NPU file.

I am using FRDM-MCXN947 board.

No quantization was selected because it was already quantized. You can see that the converted version also have 8-bit input and output. It is only ~67KB in size.

However, when I download the latest face detection demo and replace the original model with my helmet_quantized_converted.tflite , the size is much larger than expected.

*You may try converting my helmet_quantized.tflite, see if you get the same problem.

Thanks.


Hi @wklee

This is the compiled size with the face_detect.tflite model.

This is the compiled size with the helmet_quantized_converted1.tflite model.

BR

Hang


Dear Hang,

The file size for helmet_quantized_converted is 68KB:

But the compiled size becomes 406.5KB. What could be the problem?

There are only ~70K parameters in this model.

Thanks.


Hi @wklee

You can check the linker script.

It includes *(.ezh_code) and *(.model_input_buffer).

That is why the compiled size becomes 406.5KB.

BR

Harry


I don't think that's the reason.

The ezh_code is very small, only 512*4=2KB.

I verified this by checking the original build:

Then I reduced ezh_code to ezh_code[2], which is only 8 bytes.

You can see that the SRAMX region changes only slightly.

I think the problem occurs during the conversion from the trained model to the NPU version. If the trained model is int8 and contains only 70K parameters, there is no reason for its size to grow so large (400KB+).

Thanks.


Sorry for not making the problem clear.

In my first post, I mentioned that the model I trained has only ~67K parameters, so I expected its size after int8 quantization to be around 70KB. However, when it is loaded onto the MCU (FRDM-MCXN947), the size grows to ~512KB, which is not what I expected (see the serial port output).


Hi @wklee

I converted this model in two different ways and ran each on the MCXN947; both hit the same error. I think the problem lies with the model itself.

Your model may include operations that the NPU does not support, which would increase the size.

How did you train this model?

BR

Harry


The code is in the attachment. It is a custom CNN that performs object detection (helmets).

The training dataset is here:

https://drive.google.com/file/d/1xXwR6-OhXmePOGtyy9Yxn0bdhv3gmu4y/view?usp=sharing

Thanks.


Hi @wklee

I want to train a model using your dataset. I downloaded your dataset and train.py.

How do you process the data?

BR

Harry


Hi Harry Zhang,

I chose to compress the model to a much smaller size so that it fits on the MCXN947 MCU. Although this does not solve the underlying issue, it works for my project.

Thanks for your time and support.