eGUI Unicode build errors

I'm attempting to use Unicode with eGUI (D4D). Everything works fine with plain ASCII, but I can't get things to compile once I #define D4D_UNICODE. I've never used Unicode before, so there is probably just something I'm missing. I'm running on a Kinetis K70 with MQX 4.1 and eGUI 3.01 from GitHub (Gargy007/eGUI), all building in CodeWarrior 10.6. Here is a sample of my code.

    #include "wchar.h"

    const wchar_t * ws = L"Wide string";
    D4D_WCHAR english[] = {L"English"};

Line 3 (the wchar_t pointer) has the error: unknown type name 'wchar_t'

Line 4 (the D4D_WCHAR array) has the error: wide character array initialized from incompatible wide string

From what I understand I need the 'L' before my string to make it a wide string, but that doesn't seem to help.

Does anyone know what I've done wrong?

Thanks,

Sean

Hello Sean,

I was working with IAR, whose compiler treats the short and int types differently. I compiled a demo in CodeWarrior and got the same error; the solution was to change the D4D_WCHAR type.

In the file "d4d_types.h" you have to change the D4D_WCHAR typedef from unsigned short to unsigned int.

From:

    #ifndef D4D_WCHAR
    typedef unsigned short D4D_WCHAR;
    #endif

To:

    #ifndef D4D_WCHAR
    typedef unsigned int D4D_WCHAR;
    #endif

I hope this solves your problem.

Regards,

Earl Orlando.

/* If this post solves your problem, please click the Correct Answer button. */
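
Whether a given typedef works depends on the type the compiler gives wchar_t, since that is the element type of every L"..." literal. The following is a minimal sketch, assuming a hosted C toolchain with snprintf available; the helper names are hypothetical and not part of eGUI. It reports the width and signedness of wchar_t so that they can be compared with the D4D_WCHAR typedef:

```c
#include <assert.h>
#include <stddef.h>   /* wchar_t, size_t */
#include <stdint.h>   /* WCHAR_MIN */
#include <stdio.h>    /* snprintf */

/* Bytes per element of an L"..." literal on this compiler. */
size_t wchar_bytes(void)
{
    return sizeof(wchar_t);
}

/* Nonzero when wchar_t is an unsigned type. */
int wchar_is_unsigned(void)
{
    return WCHAR_MIN == 0;
}

/* Hypothetical helper: writes a one-line summary into buf. */
void describe_wchar(char *buf, size_t len)
{
    assert(buf != NULL && len > 0);
    snprintf(buf, len, "wchar_t: %u bytes, %s",
             (unsigned)wchar_bytes(),
             wchar_is_unsigned() ? "unsigned" : "signed");
}
```

If the reported width differs from sizeof(D4D_WCHAR), wide literals cannot initialize D4D_WCHAR arrays on that compiler. A type of the same width but different signedness may also be rejected, depending on the compiler.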

Hello Sean,

Please include the file "d4d.h":

    #include "d4d.h"

It includes the file "d4d_types.h", which defines the data type D4D_TCHAR. Please use that type:

    #include "d4d.h"

    const D4D_TCHAR * ws = L"Wide string";
    D4D_TCHAR english[] = {L"English"};

Regards,

Earl.

Thanks for the suggestion but it is still not working. Here is my code.

    #include "d4d.h"
    #include "fonts.h"
    #include "main.h"

    const D4D_TCHAR * ws = L"Wide string";
    D4D_TCHAR english[] = {L"English"};

Line 05 is now a warning instead of an error. The warning is "initialization from incompatible pointer type [enabled by default]".

Line 06 is still an error. The error is "wide character array initialized from incompatible wide string".

That got me a little closer to a fix, but it is still not working.

Please let me know if you have any more suggestions.

Thanks,

Sean

Are you sure you have the following definition?

    #define D4D_UNICODE

Try the following code, please:

    #include "d4d.h"
    #include "fonts.h"
    #include "main.h"

    D4D_TCHAR ws[] = {D4D_DEFSTR("Wide string")};
    D4D_TCHAR english[] = {D4D_DEFSTR("English")};

Regards,

Earl.
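
D4D_DEFSTR appears to play the role that _T()-style macros play in other TCHAR-style APIs: choosing a plain or a wide literal to match the configured character type. The sketch below illustrates that general pattern only. It is not the eGUI definition, and MY_UNICODE, MY_TCHAR, MY_DEFSTR and my_tcslen are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>   /* wchar_t, size_t */

#define MY_UNICODE 1            /* hypothetical switch, standing in for D4D_UNICODE */

#ifdef MY_UNICODE
typedef wchar_t MY_TCHAR;       /* assumption: the wide type is the compiler's wchar_t */
#define MY_DEFSTR(s) L##s       /* token pasting turns "abc" into L"abc" */
#else
typedef char MY_TCHAR;
#define MY_DEFSTR(s) s
#endif

/* The same source line compiles in both configurations. */
static const MY_TCHAR english[] = MY_DEFSTR("English");

/* Length in characters, excluding the terminator. */
size_t my_tcslen(const MY_TCHAR *s)
{
    size_t n = 0;
    assert(s != NULL);
    while (s[n] != 0) {
        n++;
    }
    return n;
}
```

A macro of this kind still expands to an L"..." literal in the wide configuration, so the array's element type must match the compiler's wchar_t. Wrapping the string in such a macro therefore would not, by itself, remove an "incompatible wide string" error.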

Yes, I have #define D4D_UNICODE in my d4d_user_cfg.h file.

I gave the above code a try, but that's not working for me either. Both lines now have the error "wide character array initialized from incompatible wide string".

Is it possible I have something set up wrong in CodeWarrior or my project properties? I'm just not sure what that would be, since commenting out #define D4D_UNICODE removes all these build errors.

Thanks,

Sean

Are these lines in your file "d4d_types.h"?

    #ifndef D4D_WCHAR_TYPE
    /*! @brief User type definition of eGUI wide char.*/
    #define D4D_WCHAR_TYPE unsigned short
    #endif

    /*! @brief Type definition of eGUI wide char.*/
    typedef D4D_WCHAR_TYPE D4D_WCHAR;

    /*! @brief Type definition of eGUI ASCII character.*/
    typedef char D4D_CHAR;

    #ifdef D4D_UNICODE
    /*! @brief Type definition of eGUI character (it depends on UNICODE setting if this is \ref D4D_CHAR or \ref D4D_WCHAR).*/
    typedef D4D_WCHAR D4D_TCHAR;
    #else
    /*! @brief Type definition of eGUI character (it depends on UNICODE setting if this is \ref D4D_CHAR or \ref D4D_WCHAR).*/
    typedef D4D_CHAR D4D_TCHAR;
    #endif

Regards,

Earl.
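
Because the quoted header defines D4D_WCHAR_TYPE only when it is not already defined, the wide type could in principle be chosen from the project configuration instead of by editing d4d_types.h. The following is a sketch under two assumptions: that the project's definition is seen before d4d_types.h is processed (not verified here), and that tying the type to wchar_t suits the fonts in use. The guard is copied inline so that the example compiles on its own (C11 for static_assert):

```c
#include <assert.h>   /* static_assert (C11) */
#include <stddef.h>   /* wchar_t, size_t */

/* Assumed project-side override, e.g. placed in a configuration header. */
#define D4D_WCHAR_TYPE wchar_t

/* Guard pattern as quoted from d4d_types.h: the default is skipped
 * because D4D_WCHAR_TYPE is already defined. */
#ifndef D4D_WCHAR_TYPE
#define D4D_WCHAR_TYPE unsigned short
#endif
typedef D4D_WCHAR_TYPE D4D_WCHAR;

/* Fail the build early if wide literals would not fit the eGUI type. */
static_assert(sizeof(D4D_WCHAR) == sizeof(wchar_t),
              "D4D_WCHAR must have the width of wchar_t");

static const D4D_WCHAR english[] = L"English";

/* Characters in the sample string, excluding the terminator. */
size_t english_length(void)
{
    return sizeof english / sizeof english[0] - 1;
}
```

Using wchar_t itself rather than a fixed integer type keeps the declaration valid on compilers that disagree about the width or signedness of wchar_t.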

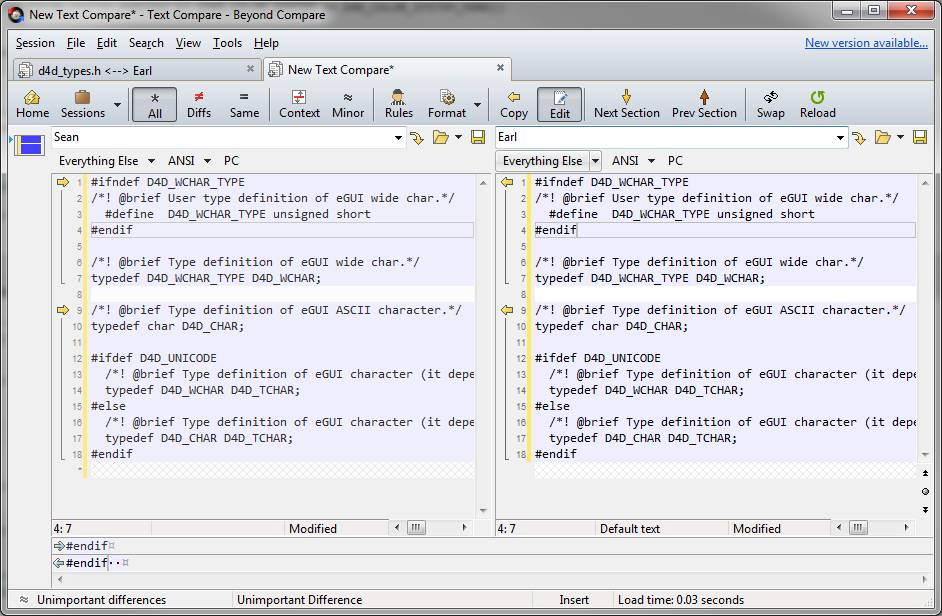

Yes, those lines are in my d4d_types.h file. I even copied them into Beyond Compare to be sure. My code is on the left and your code is on the right.

Thanks,

Sean

Hello Earl Orlando,

I am using the D4D eGui Library with KDS 3.0 for a K20 project.

I found the same solution as yours, changing the D4D_TCHAR from unsigned short to unsigned int, as GCC treats wide characters as 4-byte characters (as in a Linux environment; Windows, in contrast, treats L"...." strings as 2 bytes per character).

Do you have any suggestions for converting UTF-8 strings (received over a communication link) to the correct Unicode format for eGUI? Currently I just copy each byte of the UTF-8 string into a 4-byte D4D_TCHAR, but when the string contains a 2-byte UTF-8 character, it is not displayed correctly (a lowercase accented 'a' becomes an uppercase 'A' with tilde). Only 1-byte characters are displayed correctly.

I used the Freescale Embedded GUI Converter utility to create the font, choosing Unicode 16-bit encoding and including the Latin and two Latin-extended Unicode ranges.

Thanks in advance for any suggestion.

Vittorio
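
The symptom described above follows directly from the encoding. A lowercase 'a' with grave accent is U+00E0, which UTF-8 encodes as the two bytes 0xC3 0xA0. Widening each byte separately yields U+00C3 (uppercase 'A' with tilde) followed by U+00A0 (no-break space). A minimal sketch of that byte-wise copy, with the hypothetical name widen_bytes:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Naive widening: one output element per input byte, with no decoding.
 * dst must hold one element per source byte plus the terminator.
 * Returns the number of elements written, excluding the terminator. */
size_t widen_bytes(uint32_t *dst, const uint8_t *src)
{
    size_t n = 0;
    assert(dst != NULL && src != NULL);
    while (src[n] != 0) {
        dst[n] = src[n];   /* 0xC3 stays 0xC3, i.e. U+00C3, not part of U+00E0 */
        n++;
    }
    dst[n] = 0;
    return n;
}
```

For the input bytes 0xC3 0xA0 this produces two elements instead of the single code point U+00E0, so multi-byte sequences have to be decoded rather than copied.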

Hello again, I found a solution for converting strings from UTF-8 to Unicode strings with 4 bytes per character, so now I can correctly display all the 2-byte UTF-8 symbols.

Sorry for the bother.

Best wishes,

Vittorio

Hello Vittorio,

Could you please share your solution?

Best regards,

Earl.

Hello Earl,

This is the function I used for converting strings from uint8_t * UTF-8 format to uint32_t Unicode (TCHAR when D4D_UNICODE is enabled in eGUI):

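    #include <stdint.h>

    // Converts a NUL-terminated UTF-8 string to one code point per element.
    // Handles 1- and 2-byte sequences (U+0000..U+07FF); other bytes are skipped.
    // dst needs room for one element per source byte plus the terminator.
    void utf8ToTCHAR(unsigned int *dst, const uint8_t *src) {
        while (*src) {
            if (*src < 0x80) { // 0x0-7F range, single byte
                *dst++ = *src++;
            } else if ((*src & 0xE0) == 0xC0 && (src[1] & 0xC0) == 0x80) { // 0x80-7FF range
                // 5 bits from the first byte, 6 bits from the second byte
                *dst++ = ((unsigned int)(*src & 0x1F) << 6) | (src[1] & 0x3F);
                src += 2;
            } else {
                src++; // stray continuation byte or longer sequence: skip so the loop always advances
            }
        }
        *dst = 0; // string terminator
    }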

Cheers,

Vittorio
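
Since the font mentioned earlier in the thread was generated with 16-bit Unicode encoding, a decoder that also accepts 3-byte sequences would cover the whole range up to U+FFFF. The following is a sketch of such an extension, not the code used in the thread: utf8_to_codepoints and REPLACEMENT are hypothetical names, the output buffer is bounded, and overlong encodings and surrogates are not rejected.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define REPLACEMENT 0xFFFDu   /* U+FFFD, emitted for malformed or unsupported input */

/* Decode NUL-terminated UTF-8 into code points, stopping when dst is full.
 * dst_len counts elements and includes the terminator.
 * Returns the number of code points written, excluding the terminator. */
size_t utf8_to_codepoints(uint32_t *dst, size_t dst_len, const uint8_t *src)
{
    size_t n = 0;
    assert(dst != NULL && src != NULL && dst_len > 0);

    while (*src != 0 && n + 1 < dst_len) {
        uint32_t cp;
        if (src[0] < 0x80) {                                  /* 1 byte */
            cp = src[0];
            src += 1;
        } else if ((src[0] & 0xE0) == 0xC0 &&
                   (src[1] & 0xC0) == 0x80) {                 /* 2 bytes */
            cp = ((uint32_t)(src[0] & 0x1F) << 6) | (src[1] & 0x3F);
            src += 2;
        } else if ((src[0] & 0xF0) == 0xE0 &&
                   (src[1] & 0xC0) == 0x80 &&
                   (src[2] & 0xC0) == 0x80) {                 /* 3 bytes */
            cp = ((uint32_t)(src[0] & 0x0F) << 12) |
                 ((uint32_t)(src[1] & 0x3F) << 6) |
                 (src[2] & 0x3F);
            src += 3;
        } else {                                              /* anything else */
            cp = REPLACEMENT;
            src += 1;
        }
        dst[n++] = cp;
    }
    dst[n] = 0;
    return n;
}
```

Each continuation byte is checked before the next one is read, so a sequence truncated by the terminator is never read past the end of the string.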

That fixed it!

Thanks so much for all your help. I really appreciate it.

Sean

Hello Sean,

I am currently working to solve that.

Regards,

Earl.

Hello Earl,

Thanks for looking into this. If you find a fix please let me know.

Luckily it's not a feature I need immediately for my current project. Just wanted to be sure I'll be able to use it later when I do need it.

Thanks,

Sean