Stuck I2C Busy Bit in K21

I am using a MK21FX512VMC12 micro as the I2C master in a single master system. The bus has strong pullups.

We have occasionally seen the I2C master get stuck in a state where the busy bit becomes set and remains set. We have not yet captured the event as it happens, but afterward the peripheral remains in the stuck state until we power cycle. While it is stuck, we observe that the SCL and SDA lines are both high. In this state our micro doesn't do any more I2C accesses because it (erroneously) thinks the bus is busy. Our master is running the I2C bus at a 100 kHz SCL frequency.

In this state the registers are as follows:

I2C0 registers:

- A1: 12

- F: 1f

- C1: 88

- S: 21

- D: 63

- C2: 00

- FLT: 5f (we were experimenting with the max glitch filter - but we also got into this state with glitch filter set to 0)

- RA: 00

- SMB: 00

- A2: c2

- SLTH: 00

- SLTL: 00

PORTB_PCR0 and PORTB_PCR1: 00000220

Can you advise us on the logic that controls the busy bit? That will guide us as we try to reproduce and catch what's happening on the bus to get into that state. Can you also advise us on an algorithm to clear the busy bit?
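For reference, the dumped values can be decoded bit by bit. The following is a minimal sketch, assuming the usual Kinetis I2Cx_S and I2Cx_C1 bit positions as given in the reference manual; the helper name `i2c_looks_stuck` is hypothetical and not part of any NXP driver.

```c
#include <stdbool.h>
#include <stdint.h>

/* I2Cx_S status bits (assumed from the Kinetis reference manual). */
#define I2C_S_TCF   0x80u /* transfer complete */
#define I2C_S_IAAS  0x40u /* addressed as slave */
#define I2C_S_BUSY  0x20u /* START seen, no STOP seen yet */
#define I2C_S_ARBL  0x10u /* arbitration lost */
#define I2C_S_RAM   0x08u /* range address match */
#define I2C_S_SRW   0x04u /* slave read/write */
#define I2C_S_IICIF 0x02u /* interrupt flag */
#define I2C_S_RXAK  0x01u /* 1 = no acknowledge received */

/* I2Cx_C1 control bits used here. */
#define I2C_C1_IICEN 0x80u /* module enable */
#define I2C_C1_MST   0x20u /* master mode select */
#define I2C_C1_TXAK  0x08u /* transmit acknowledge control */

/* Hypothetical helper: the "stuck" signature from this thread is BUSY set
 * while this node is no longer in master mode. */
static bool i2c_looks_stuck(uint8_t s, uint8_t c1)
{
    return (s & I2C_S_BUSY) != 0u && (c1 & I2C_C1_MST) == 0u;
}
```

With S = 0x21 and C1 = 0x88 this gives BUSY and RXAK set, ARBL and TCF clear, and IICEN and TXAK set with MST clear, so `i2c_looks_stuck(0x21, 0x88)` is true.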

Hi Debora

The busy bit is set when a start condition is detected and cleared when a stop condition is detected.

This may mean that although the SDA and SCL lines are high they didn't get to that state via a stop condition, which could happen (for example) when data is sent but no stop condition is sent at the end of the frame.
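The rule can be illustrated with a small software model. This is only a sketch of the START/STOP rule described above, not the actual K21 logic: it assumes BUSY is set when SDA falls while SCL stays high and cleared when SDA rises while SCL stays high. The type `bus_sample_t` and function `busy_after` are names made up for the illustration.

```c
#include <stdbool.h>
#include <stddef.h>

/* One sample of the two bus lines (true = high). */
typedef struct {
    bool scl;
    bool sda;
} bus_sample_t;

/* Simplified model of the busy flag over a sequence of bus samples. */
static bool busy_after(const bus_sample_t *s, size_t n)
{
    bool busy = false;
    for (size_t i = 1; i < n; i++) {
        bool scl_stays_high = s[i - 1].scl && s[i].scl;
        if (scl_stays_high && s[i - 1].sda && !s[i].sda) {
            busy = true;   /* START: SDA falls while SCL is high */
        }
        if (scl_stays_high && !s[i - 1].sda && s[i].sda) {
            busy = false;  /* STOP: SDA rises while SCL is high */
        }
    }
    return busy;
}
```

If SDA is released while SCL is still low and SCL then rises, both lines end up high without a STOP edge, and the model leaves the flag set. That matches the symptom of idle-looking lines with BUSY still at 1.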

Regards

Mark

Complete Kinetis solutions for professional needs, training and support: http://www.utasker.com/kinetis.html

I2C: http://www.utasker.com/docs/uTasker/uTasker_I2C.pdf (see appendix A for status register content during typical transfers)

K21:

- http://www.utasker.com/kinetis/TWR-K21D50M.html

- http://www.utasker.com/kinetis/TWR-K21F120M.html

Hi Mark,

Thank you; that helps a lot.

Can you suggest a procedure for getting the MK21FX512VMC12 out of this state once it is in it? Is it possible to manipulate bits in the I2C registers to get it to think the bus is unbusy? Or reset the I2C peripheral? Or do we need to reset the whole part?

Regards,

Debora

Hi Debora

What you most likely have is a firmware issue where the stop condition is not sent (you should be able to confirm this by capturing the last activity that took place on the I2C bus), in which case it is recommended to identify and solve this root cause rather than perform workarounds to recover from it.

Could you give some background?

1. Is it a hobby, school or professional development?

2. Which I2C driver are you using? Own development, NXP example, other?

Note that the uTasker I2C driver (suitable for all Kinetis parts and proven reliability in many industrial products since 2011) is available in the open source uTasker project which may help. A professionally supported version is available (including one-on-one remote desktop help) for mission critical requirements or for general help in problem solving in any Kinetis based project.

Regards

Mark

Hi Mark,

Thank you, I understand.

This condition occurs infrequently and perhaps only on specific units; I've not yet been able to capture it with an oscilloscope because it has not yet happened in my presence but I'm working on duplicating it then showing it is handled with a code change.

The code is legacy code that evolved from an old Kinetis demo project provided by Freescale. We are in the process of completely rewriting it from scratch (it's a professional project). My hope was to run down the bug in the old to ensure it is solved in the new; we want to be confident that the new driver handles whatever bus peculiarity that was causing this symptom.

You strongly recommend uTasker but work was already started on the rewrite using FreeRTOS. Do you have any additional advice for us to consider as we move forward?

Regards,

Debora

Hi Debora

The uTasker project includes FreeRTOS, so FreeRTOS projects can use uTasker drivers.

The advantage of uTasker is that you can then simulate complete projects (including the Kinetis, its peripherals, interrupts, DMA etc), which improves development and debugging efficiency and projects are supported directly by the code developer.

Depending on how far the port/upgrade has already advanced (the investment already made), you could consider requesting a quote for a full integration of your existing application (see also http://www.utasker.com/services.html), since an integration into the uTasker environment is usually much easier and more efficient and ensures the use of well-proven interfaces and protocols for reliability. In some cases this can also be offered free of charge to new users.

In any case, in situations where there is a known weakness the ability to reproduce the issue will also be needed in order to investigate and understand the cause, and verify that the subsequent solution has reliably solved it. Hopefully you can identify a method to speed up the failure to aid in the investigation.

Regards

Mark

Hi Mark,

Can you explain when a stop condition is generated with and without the stop hold bit set? I would like to understand whether the driver should use that bit. In our code as it is, SHEN in the FLT register is 0, the default.

We captured an event and I am trying to understand how the peripheral could fail to generate a stop condition.

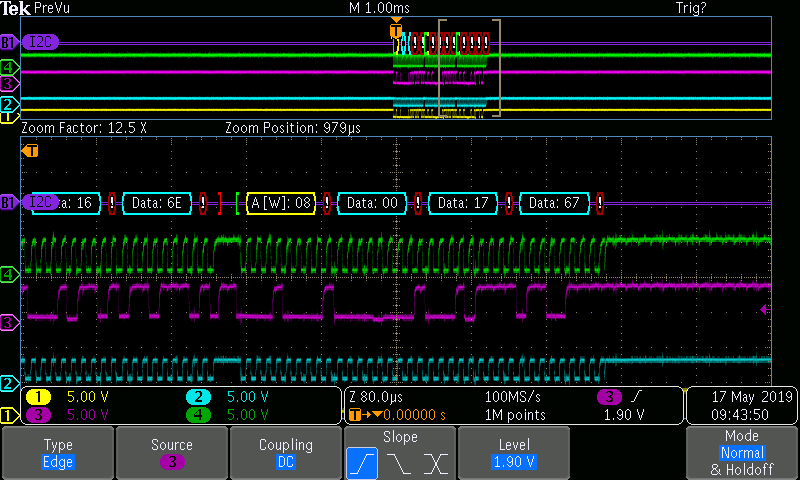

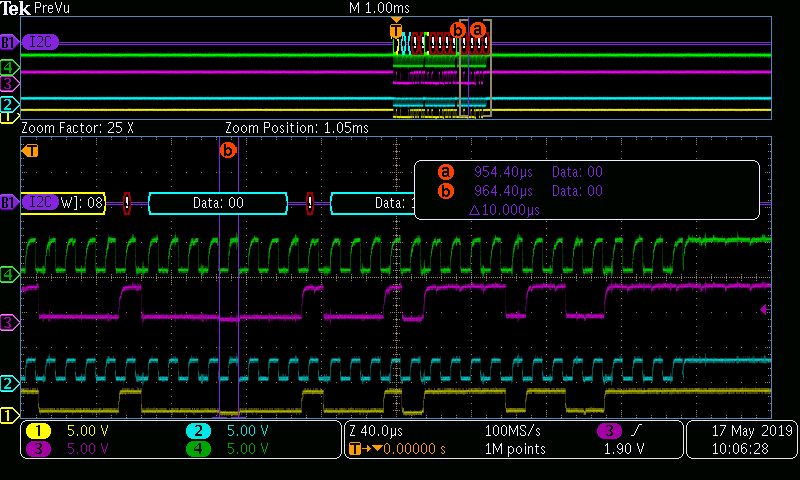

What we are trying to send: start 00010000 (address 08, write) 00000000 00010111 0110 1110 stop

But the slave is confused and is out of sync. We don't know how it could get confused; that's a question for the manufacturer of the slave. The slave doesn't ack on the 9th bit, but it is obviously acking on the 5th bit of the 00 data byte (scope shows SDA at a different low level for one bit time; see cursors in 2nd image) and on the 5th bit of the 17 data byte (again, SDA seems extra low) and perhaps on the 5th bit of the last data byte, changing it from 6e to 67.

Clearly our driver should stop sending when it detects a nack rather than plowing ahead, but what I'm interested in at this point is correct stop bit generation. When we clear MST in C1, why does the K21 not bring SDA low to generate a valid stop?

Secondarily, how does the peripheral react when it sees a mismatch in the data it is transmitting (sending 6e but bus says 67)? A few bits after the mismatch occurred, why would it not transmit a 0 for the LSB of 6e? The ARBL arbitration lost bit was not set. But is it possible that the data mismatch results in the IICIF bit being set before all the data bits are transmitted, leading to the driver clearing MST too soon, and, without SHEN enabled, the stop bit generation gets messed up? Is the logic different for the arbitration lost case of IICIF versus the ARBL bit? FWIW SSIE is 0 and the SMB, SLTH, and SLTL regs are all 0, so they aren't affecting IICIF.

Regards,

Debora

Hello Debora

1. I note that your slave is not responding (no ACKs) before the frame where it looks like it may be sending ACKs at an unexpected time.

2. If your slave is indeed acking in the 5th clock cycle (rather than in the 9th) it would cause the last data byte sent to appear as a 0x66 rather than a 0x6e (since it pulls the 5th transmitted bit low - b01101110 becomes b01100110). What happens to the final bit (seen as '1') will depend on how the master responds to detecting an arbitration loss. Presumably it will lose arbitration and stop sending data, meaning that the final bit would read as '1' and the byte thus as 0x67.

3. Assuming this behavior is causing the master to detect loss of arbitration it must be reacting to the arbitration loss - the loss flag needs to be reset in SW for it to go back to '0'.

4. I agree that if you are losing arbitration but the driver doesn't handle this case it may well then not be able to send the STOP condition correctly. In fact if arbitration were really lost in a multi-master bus environment the losing master should completely back-off and so it would also be normal to not send a STOP condition.

5. In a single master system I don't see any reason not to continue sending and receiving data to the end when the slave doesn't ack. This doesn't cause any problems because tx data is still sent (clearing output buffers) and reception just receives 0xff (usually allowing the next firmware layer to detect that something is amiss).

6. If you can prove that the slave is not behaving correctly it sounds like an underlying HW issue. I have never experienced such behavior from a slave I2C device (apart from an ASIC that once had a bad implementation and caused some clock glitches, which can't really be compared). In your case I see that there was a good start/stop just before the issue, which would normally have reset the slave.

7. If you can detect the arbitration loss and you can verify that the basic issue is a HW problem at the slave I think that the only method is to switch the I2C bus to GPIO mode - generate a pattern that sets the slave back to a known state and then restart the I2C mode again.
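One common form of the GPIO-mode pattern mentioned in point 7 is to clock SCL until the slave releases SDA and then generate a STOP by hand. The sketch below shows that idea under stated assumptions: the pin-access hooks in `i2c_gpio_ops_t` are hypothetical and would have to be mapped onto the real port pins in open-drain GPIO mode, and the `sim_*` functions are only a test double standing in for a slave that holds SDA low for a number of clocks. Whether this also clears BUSY in the K21 after switching back to I2C mode is not confirmed in this thread and needs to be verified on hardware.

```c
#include <stdbool.h>

/* Hypothetical pin-access hooks for the bus lines in GPIO mode. */
typedef struct {
    void (*set_scl)(void *ctx, bool high);
    void (*set_sda)(void *ctx, bool high);
    bool (*get_sda)(void *ctx);
    void (*delay_half_bit)(void *ctx);
    void *ctx;
} i2c_gpio_ops_t;

/* Clock SCL (at most 9 pulses) until SDA reads high, then send a STOP.
 * Returns true if SDA is high afterwards. */
static bool i2c_bus_recover(const i2c_gpio_ops_t *io)
{
    io->set_sda(io->ctx, true);                 /* release SDA */
    for (int i = 0; i < 9 && !io->get_sda(io->ctx); i++) {
        io->set_scl(io->ctx, false);
        io->delay_half_bit(io->ctx);
        io->set_scl(io->ctx, true);
        io->delay_half_bit(io->ctx);
    }
    io->set_scl(io->ctx, false);                /* STOP: SDA low -> high */
    io->delay_half_bit(io->ctx);                /* while SCL is high     */
    io->set_sda(io->ctx, false);
    io->delay_half_bit(io->ctx);
    io->set_scl(io->ctx, true);
    io->delay_half_bit(io->ctx);
    io->set_sda(io->ctx, true);
    io->delay_half_bit(io->ctx);
    return io->get_sda(io->ctx);
}

/* Test double: a slave that holds SDA low for a number of SCL falling edges. */
typedef struct {
    bool scl;
    bool sda_master;
    int slave_hold_clocks;
} sim_bus_t;

static void sim_set_scl(void *ctx, bool high)
{
    sim_bus_t *b = (sim_bus_t *)ctx;
    if (b->scl && !high && b->slave_hold_clocks > 0) {
        b->slave_hold_clocks--;                 /* slave advances on each clock */
    }
    b->scl = high;
}

static void sim_set_sda(void *ctx, bool high)
{
    ((sim_bus_t *)ctx)->sda_master = high;
}

static bool sim_get_sda(void *ctx)
{
    const sim_bus_t *b = (const sim_bus_t *)ctx;
    return b->sda_master && b->slave_hold_clocks == 0; /* wired-AND */
}

static void sim_delay(void *ctx)
{
    (void)ctx;                                  /* no real delay in simulation */
}

static bool sim_recover(int slave_hold_clocks)
{
    sim_bus_t bus = { true, true, slave_hold_clocks };
    i2c_gpio_ops_t io = { sim_set_scl, sim_set_sda, sim_get_sda, sim_delay, &bus };
    return i2c_bus_recover(&io);
}
```

In the simulation, a slave that needs three more clocks is freed (`sim_recover(3)` returns true), while one that would need twenty is not, so the caller can tell whether the bus actually came back.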

Regards

Mark

P.S. I didn't answer specific questions about flags because I also don't know the exact controller behaviour when a slave is behaving in an unexpected manner...
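The byte arithmetic in point 2 can be checked with a small wired-AND model. This is a sketch only: it assumes an open-drain bus, MSB-first transmission, and a slave that pulls SDA low in exactly one clock of the byte; both function names are hypothetical, and it says nothing about when the K21 actually sets ARBL.

```c
#include <stdint.h>

/* Byte as seen on an open-drain bus when another device pulls SDA low
 * during one clock (1 = first/MSB bit, 8 = last/LSB bit, 0 = none). */
static uint8_t byte_seen_on_bus(uint8_t sent, unsigned pulled_low_clock)
{
    uint8_t seen = sent;
    if (pulled_low_clock >= 1u && pulled_low_clock <= 8u) {
        seen &= (uint8_t)~(1u << (8u - pulled_low_clock));
    }
    return seen;
}

/* First clock (1..8) in which the master sent '1' but the bus read '0',
 * which is the condition for losing arbitration; 0 if there is none. */
static unsigned first_mismatch_clock(uint8_t sent, uint8_t seen)
{
    for (unsigned clock = 1u; clock <= 8u; clock++) {
        unsigned bit = 8u - clock;
        if (((sent >> bit) & 1u) != 0u && ((seen >> bit) & 1u) == 0u) {
            return clock;
        }
    }
    return 0u;
}
```

For a low pulse in the 5th clock, 0x6e becomes 0x66 with the first mismatch in clock 5, while 0x00 and 0x17 are unchanged because their 5th bit is already '0'. That would explain why only the last data byte in the capture shows a changed value.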

Hi Mark,

Thanks for all the time you've been putting into this!

I agree that the slave is behaving badly and that needs to be pursued, but I am also trying to understand the peripheral well enough to be able to ensure that the driver will handle unexpected faults. What I see happening here is that the K21 is NOT generating a stop bit, even though the driver is clearing the MST bit which is supposed to generate a stop. I also know from looking at the registers and driver code that the ARBL bit was NOT set at any time during this sequence - as you pointed out, the bit must be explicitly reset, but the legacy driver, which apparently was written assuming a single master system (which this is), doesn't check or clear ARBL. (It should, but it doesn't.) After the sequence shown above the I2C_S register has the contents 0x21 (bus busy, rx ack not detected).
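A check of the kind the legacy driver is missing could look like the sketch below. It is not taken from any existing driver: the enum and function names are hypothetical, the bit positions are assumed from the Kinetis reference manual, and the decision to abort on a NACK is one possible policy rather than a requirement.

```c
#include <stdint.h>

#define I2C_S_ARBL 0x10u /* arbitration lost (cleared by writing 1 to it) */
#define I2C_S_RXAK 0x01u /* 1 = no acknowledge received */

typedef enum {
    I2C_TX_CONTINUE,
    I2C_TX_ABORT_NACK,
    I2C_TX_ABORT_ARB_LOST
} i2c_tx_action_t;

/* Decide what a master-transmit handler should do next, given the value
 * read from the status register after a byte has been clocked out. */
static i2c_tx_action_t i2c_master_tx_check(uint8_t status)
{
    if ((status & I2C_S_ARBL) != 0u) {
        return I2C_TX_ABORT_ARB_LOST; /* caller must also clear ARBL */
    }
    if ((status & I2C_S_RXAK) != 0u) {
        return I2C_TX_ABORT_NACK;     /* caller ends the transfer with a STOP */
    }
    return I2C_TX_CONTINUE;
}
```

Applied to the captured value 0x21, this returns `I2C_TX_ABORT_NACK`, since RXAK is set and ARBL is not.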

Is there anybody who does know about the flags? And can you explain the function of the SHEN bit (stop hold enable) more clearly than the reference manual does, so I can know whether the driver should be using it or not? Can anyone explain how the stop condition could fail to be generated even though the driver clears MST? (Nothing is preventing the Kinetis from pulling SDA low to make a stop condition, but it didn't.) And why is ARBL not set in this case?

I understand your points 3 and 4 about the master backing off and not issuing a stop in this case - but that behavior couldn't have been under the control of the driver because the driver didn't see ARBL. Would the peripheral do it automatically?

Regards,

Debora

Hi Debora

I find that the SR flags are well explained in the user's manual and I can't add anything more to these general explanations. There are however never full details about the exact timing of the flags or their exact behavior in the various exception situations (such as race states, loss of bus arbitration etc.) and these need to be investigated practically rather than relying on the general cases (there may be undocumented errata, for example).

The SHEN bit is only of relevance when you are using low power modes. If you run the processor always in the RUN (and WAIT) modes this bit is of no consequence.

In an arbitration case where a master loses control of the bus it will back off in HW without needing firmware control to do it - the peripheral does this automatically.

At the moment your results seem contradictory:

- if arbitration were lost it would be normal that no stop could be sent - but the flag must be set (and subsequently cleared)

- is the theory that the slave is driving the bus 100% sure? From what I have seen it does suggest it is taking place but why no flag?

- are you 100% sure that your analysis of the driver is correct?

In your situation I would do the following:

1. Take a board without the I2C slave on it and send/receive data as normal so that you have a reference (even if the slave doesn't ACK it shouldn't cause any basic problems).

2. Use a GPIO under SW control connected to the SDA line in open drain mode.

3. Use a HW timer synchronised to the start of the frame to pulse the GPIO low at a definable point in the transmission. Like this you can test loss of arbitration at any point in the cycle.

4. After the pulse has been generated, capture the I2C status register state and log it or print it to the debug interface.

- either disable I2C interrupt just before the pulse is generated so that you can be sure that the present driver is not being called and changing flags

- or allow the present interrupt to log the state on its next entry
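For step 3, the timer delay for the pulse can be derived from the SCL frequency. A minimal sketch, assuming each byte occupies nine SCL periods (eight data bits plus the ACK slot) and ignoring the START hold time and any clock stretching; the function name and the choice of nanoseconds are assumptions for the illustration.

```c
#include <stdint.h>

/* Offset in nanoseconds from the first SCL period of the frame to the
 * start of a given bit slot.
 * byte_index: 0 = address byte, 1 = first data byte, ...
 * clock:      1..8 = data bits (MSB first), 9 = ACK slot */
static uint32_t bit_slot_offset_ns(uint32_t scl_hz, uint32_t byte_index,
                                   uint32_t clock)
{
    uint32_t period_ns = 1000000000u / scl_hz;
    return (byte_index * 9u + (clock - 1u)) * period_ns;
}
```

At 100 kHz one SCL period is 10 us, so the 5th bit of the fourth byte of the frame (address byte plus three data bytes) starts roughly 310 us after the first clock. The timer would need to be trimmed against a scope capture before relying on that figure.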

Based on the results you should quickly have accurate information about the behavior of the controller in such an exception state and thus not need to rely on interpretations of the user's manual. You can quickly confirm your present theories and maybe revise them when other details come to light. Once clarity is achieved on the peripheral behavior (based on practical tests without room for misinterpretation) you will have all the knowledge necessary to explain and handle the situation.

Regards

Mark

Hi Mark,

Thanks. Your confirmation that the peripheral (not the driver) automatically backs off and does not issue a STOP condition was what I needed to know for now. I can take it from here doing the experiments you described.

Regards,

Debora