AI and Machine Learning Course #4: CNNs using S32V processor


In this article we are going to discuss the following topics:

- how to configure the SBC S32V234 board to be ready for CNN deployment

- what are the Host PC requirements for CNN development

- how to use the pre-trained CNN models in MATLAB

- how to run MATLAB CNN on the NXP S32V microprocessor

1. INTRODUCTION

MATLAB scripts can be deployed on NXP boards using the NXP Vision Toolbox. The toolbox takes care of the hardware configuration and initialization that you would otherwise have to do yourself, so MATLAB users can run their own code on the boards without knowing the hardware or low-level software details. It also includes a module that supports Convolutional Neural Network (CNN) deployment, so users can:

- train their own CNN models developed within MATLAB;

- use pre-trained CNN from MATLAB;

- adapt a pre-trained CNN model to recognize new objects by using Transfer Learning;

We will come back to Transfer Learning and cover the topic in more detail in the next Course #5.

The NXP Vision Toolbox uses MATLAB's capabilities to generate code for CNNs using ARM Neon technology, which can accelerate these computation-intensive algorithms to some extent. We are actively working on support for NXP's next-generation machine learning software, AIRunner, which leverages the integrated Vision APEX accelerator. The goal is to support stable, real-time deployment of object detection, semantic segmentation and other useful algorithms that can improve the driver's experience behind the wheel.

2. GETTING THINGS UP AND RUNNING

This course assumes you have already set up the MATLAB environment in C1: HW and SW Environment Setup. The steps mentioned there are listed below for completeness:

- First, install the NXP Vision Toolbox and write the Linux Yocto distribution onto the SD card (the SD-card image can be downloaded from the NXP Vision SDK package).

A Debian image can also be used, but it requires extra packages to be installed on the board, and the current version of the toolbox cannot deploy scripts to it. This will most likely change in our next release, which should support Debian out of the box.

- Download the ARM Compute Library to the host PC. For more details about the ARM Compute Library, why it is needed and what it can do, please refer to: https://community.arm.com/developer/tools-software/tools/b/tools-software-ides-blog/posts/getting-st...

The ARM Compute Library can be downloaded from https://github.com/ARM-software/ComputeLibrary

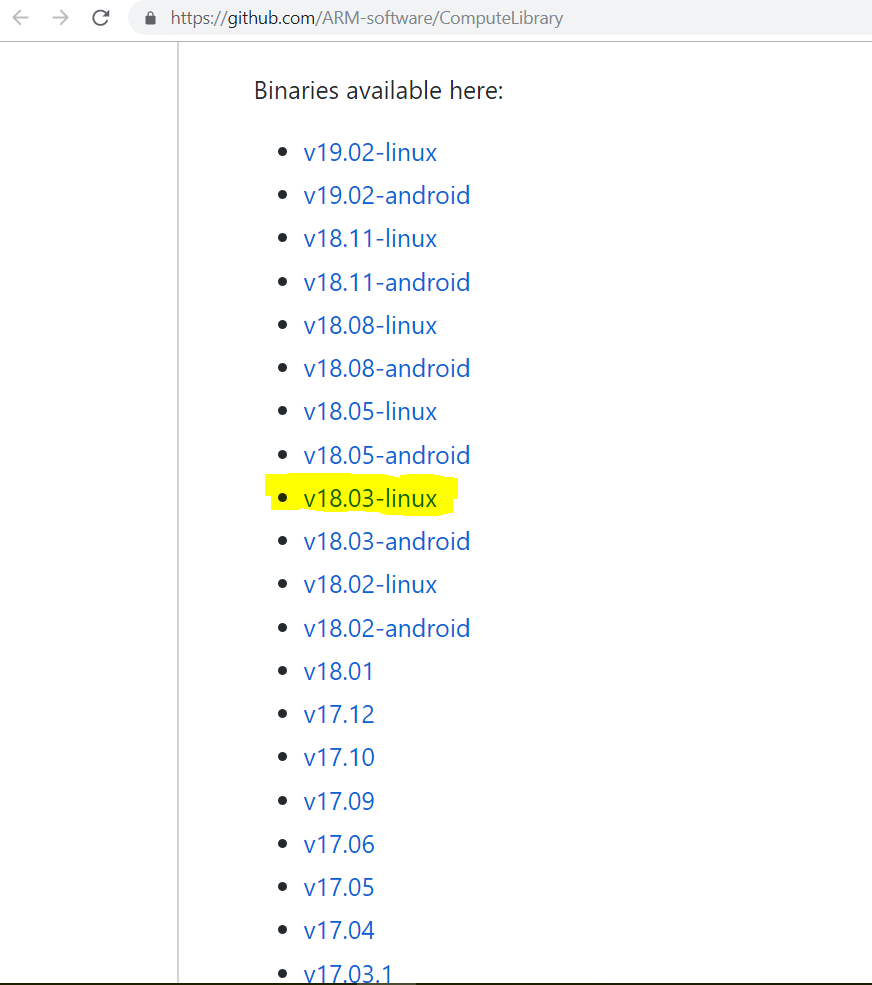

The NXP Vision Toolbox CNN examples were built using version 18.03, so download that version to avoid compatibility issues. Scroll down until you find the Binaries section, as in the image below:

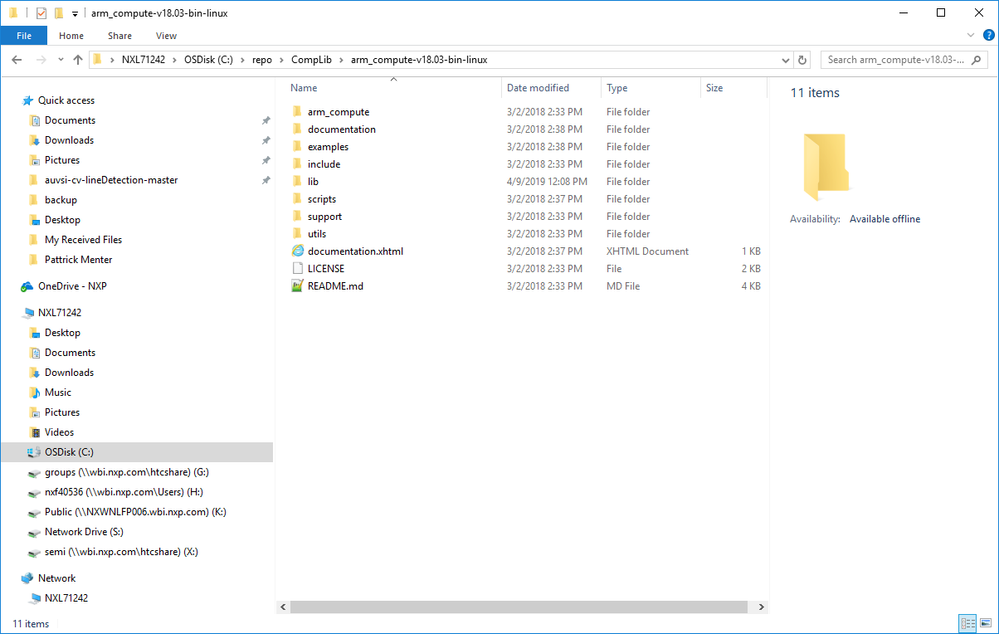

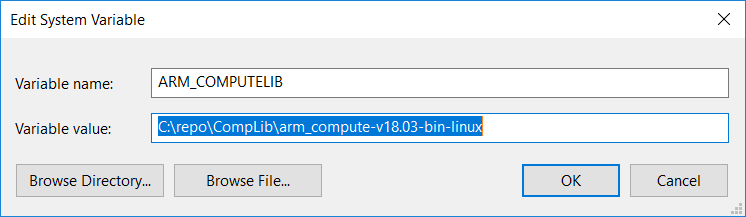

After downloading and unpacking the ARM Compute Library archive, set the ARM_COMPUTELIB system variable to point to the top of the installation folder.

The ARM Compute library should contain the linux-arm-v8a-neon folder with the correct libraries:

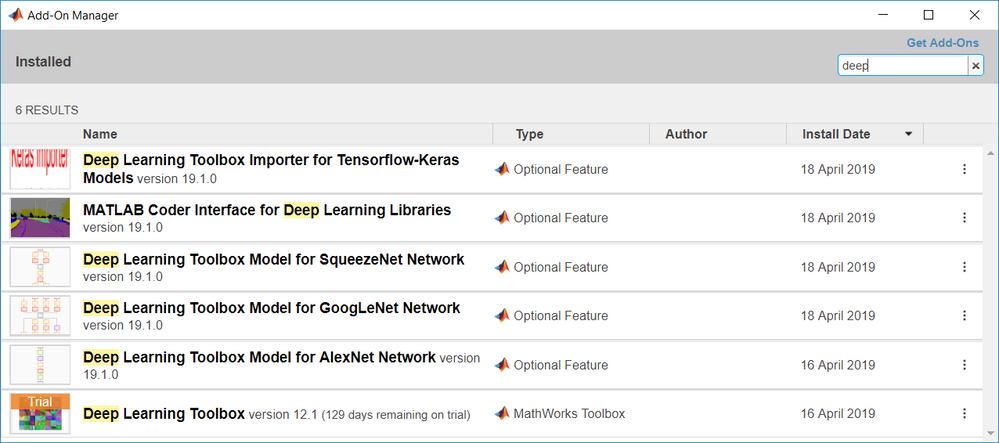
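As a quick sanity check, the setup described above can be verified from the MATLAB prompt. This is a minimal sketch that uses only standard MATLAB functions (getenv, fullfile, exist). The folder name linux-arm-v8a-neon comes from the text above; the variable names acl_root and neon_dir are chosen for illustration and are not part of the toolbox.

```matlab
% Read the variable; getenv returns an empty char array if it is not set.
acl_root = getenv('ARM_COMPUTELIB');

% Folder that should hold the prebuilt NEON libraries.
neon_dir = fullfile(acl_root, 'linux-arm-v8a-neon');

if isempty(acl_root)
    disp('ARM_COMPUTELIB is not set.');
elseif exist(neon_dir, 'dir') ~= 7   % exist returns 7 for folders
    fprintf('Folder not found: %s\n', neon_dir);
else
    fprintf('ARM Compute Library found at: %s\n', acl_root);
end
```

If the variable was defined while MATLAB was already running, you may need to restart MATLAB before getenv sees the new value.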

To be able to run the CNN examples in the toolbox, the following MATLAB Add-Ons should be installed:

3. RUNNING THE EXAMPLES

At this point, the NXP Vision Toolbox should be ready for deploying scripts on the board.

If you are not familiar with configuring the Linux OS that runs on the NXP SBC S32V234 evaluation board, or with network configuration in Windows, please refer to this thread: https://community.nxp.com/docs/DOC-335345

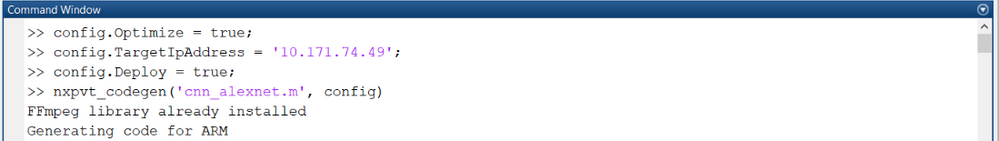

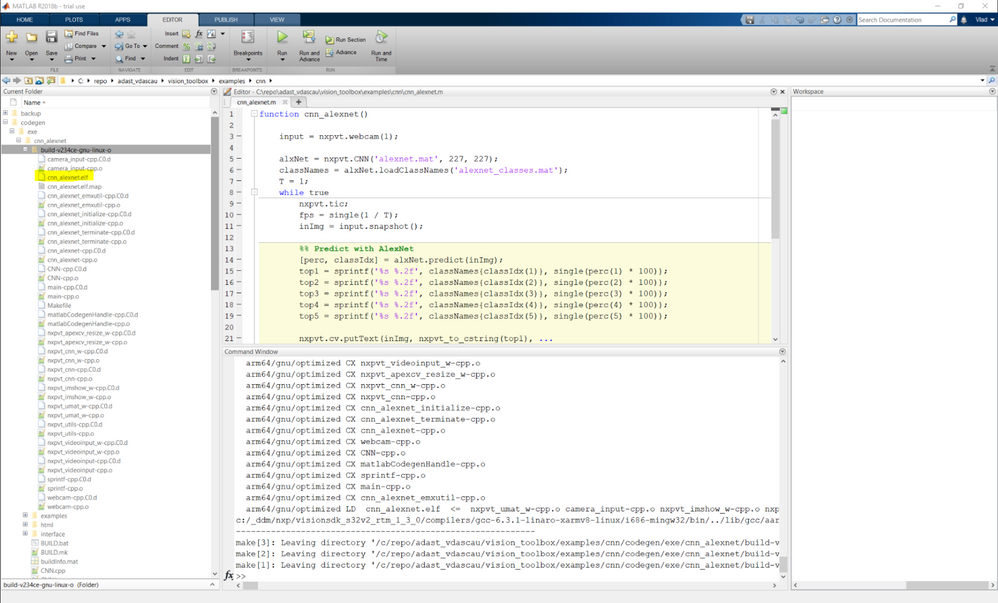

To download an application generated and compiled in MATLAB to the NXP microprocessor, set the board's IP address in the configuration structure and then call the nxpvt_codegen script provided by the NXP Vision Toolbox.

This is just a mechanism for automating deployment to the board. A script can also be compiled without using the Deploy option in the configuration structure. In that case, the resulting .elf file and the binaries that MATLAB generates for the neural network must be copied to the board manually.

The executable (.elf file) can be found in ../codegen/exe/SCRIPT_NAME/build-v234ce-gnu-linux-o if the compilation was done with optimization (config.Optimize = true), or in ../codegen/exe/SCRIPT_NAME/build-v234ce-gnu-linux-d if the compilation was done in debug mode (config.Optimize = false).

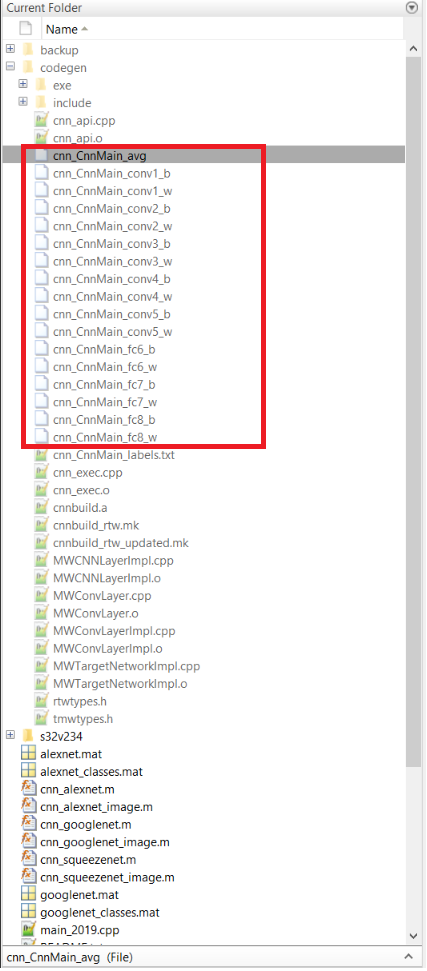
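The folder naming rule above can be sketched in a few lines of MATLAB. This is an illustration rather than toolbox code: script_name and optimize are hypothetical local variables (optimize mirrors config.Optimize), and the path is built relative to the folder that contains codegen, so adjust the prefix to your own layout.

```matlab
script_name = 'cnn_squeezenet';   % compiled script, without the .m extension
optimize    = true;               % same value as config.Optimize

% '-o' marks an optimized build, '-d' a debug build.
if optimize
    suffix = 'o';
else
    suffix = 'd';
end

build_dir = fullfile('codegen', 'exe', script_name, ...
                     ['build-v234ce-gnu-linux-' suffix]);

% List any .elf files found there (the list is empty if nothing was built).
elf_files = dir(fullfile(build_dir, '*.elf'));
for k = 1:numel(elf_files)
    fprintf('%s\n', fullfile(build_dir, elf_files(k).name));
end
```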

After copying the executable, you can find the network binary files directly in the codegen/ folder. The files there include the Makefile used for compilation, the labels of the classes the network supports, and the network implementation together with its layers, weights and biases. The files you need to copy to the board are the weights, biases and average binary files:

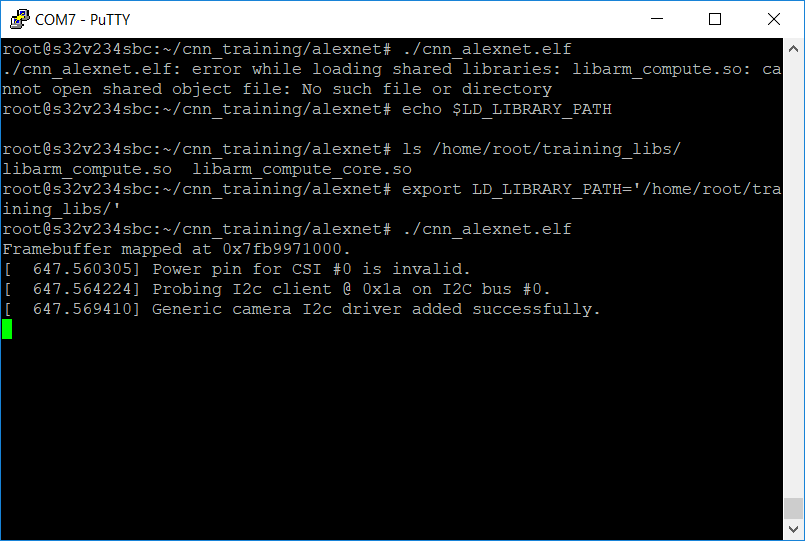

If you use this manual deployment method, you also have to make sure that the loader can find libarm_compute.so and libarm_compute_core.so through the LD_LIBRARY_PATH environment variable. You can either set it to point to a custom folder into which you copied the .so files, or simply copy them to the /lib/ folder.

If this step is omitted, the executable fails with an error stating that the arm_compute shared libraries cannot be found.

Deploying with the nxpvt_codegen script takes care of all the copying for you, and no extra steps are required.

The same 3 ready-to-run examples from the previous course (https://community.nxp.com/docs/DOC-343430) that are available in the NXP Vision Toolbox can be deployed with no changes to the scripts. The pictures below give an idea of how well the networks do in terms of accuracy and performance.

AlexNet Object Detector deployed on the S32V board

GoogLeNet Object Detector deployed on the S32V board

SqueezeNet Object Detector deployed on the S32V board

As expected, SqueezeNet performs best in terms of speed, and it delivers reasonably good accuracy. For a short presentation of these 3 pre-trained networks, see the second course of this tutorial, C2: Introduction to Deep Learning.

4. CODE INSIGHTS

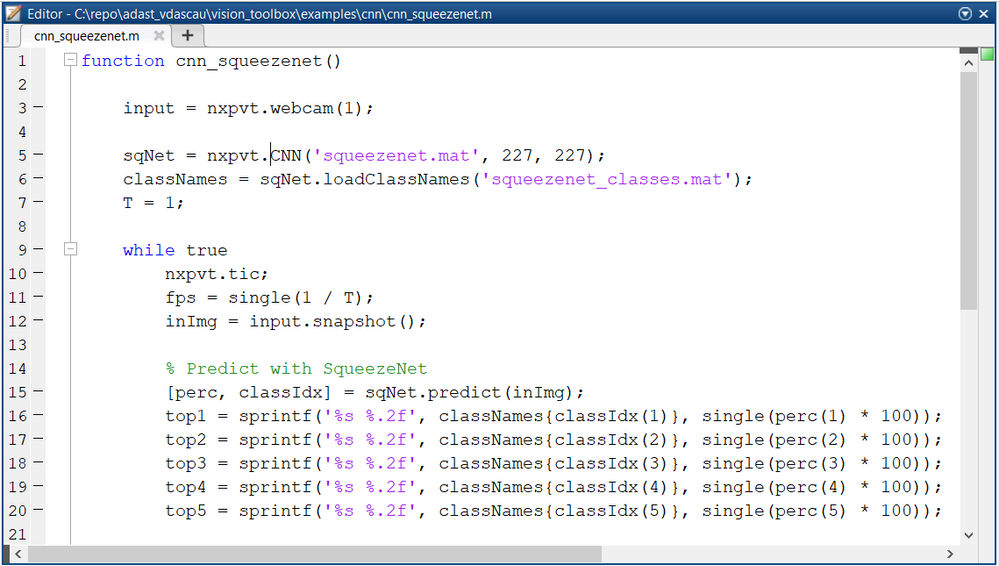

As shown in the previous articles of this course, deploying a neural network to the board takes only 20-something lines of code (including display and annotations), which is the point of bringing the embedded world into MATLAB. We will use cnn_squeezenet.m to show how easy it is to use the toolbox to run things on the S32V:

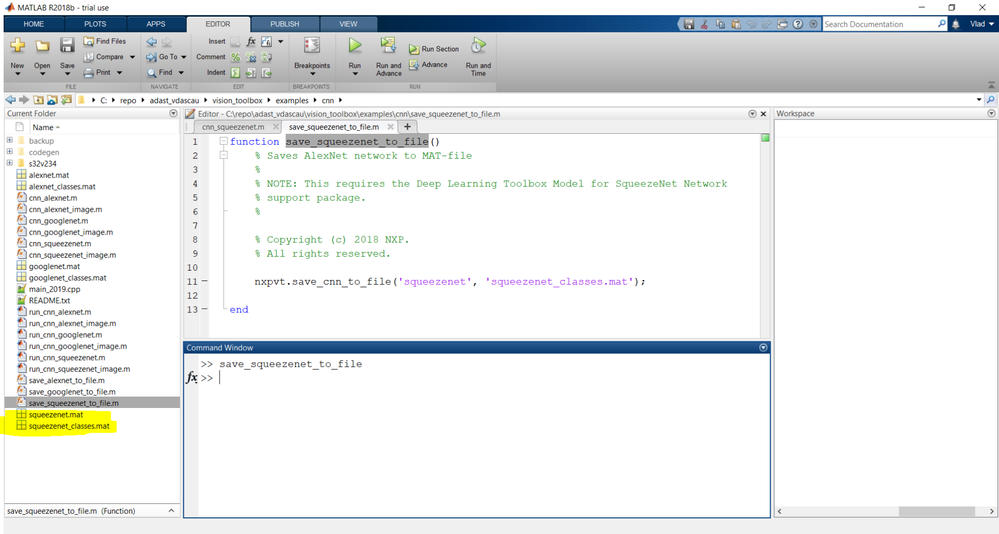

We first need to save the SqueezeNet network object from MATLAB to a .mat file so that code can be generated from it.
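One way to do this, as a minimal sketch: the squeezenet function and save are standard MATLAB (squeezenet needs the Deep Learning Toolbox and its SqueezeNet support package). The file name squeezenet.mat matches the one used by the script below; the variable name net is an assumption made for illustration, so check the toolbox example if it expects a different name.

```matlab
% Load the pre-trained network. This requires the Deep Learning Toolbox
% and the SqueezeNet support package from the Add-On Explorer.
net = squeezenet;

% Store the network object in a .mat file for code generation.
% The variable name 'net' is an assumption made for illustration.
save('squeezenet.mat', 'net');
```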

Then we should go and take a look at the cnn_squeezenet.m script.

We start by creating an input object that reads from the camera attached to MIPI-A, by passing 1 as the parameter to nxpvt.webcam at line 3. We create the CNN with the nxpvt.CNN object by passing it the saved squeezenet.mat, which represents the actual network, and the input size that the network accepts. We load squeezenet_classes.mat using the loadClassNames method of the object. We then loop to get a continuous stream from the camera: we grab each image with the snapshot() method of the nxpvt.webcam object and run predict on it. The predict method returns the classes together with their percentages, in descending order of percentage.

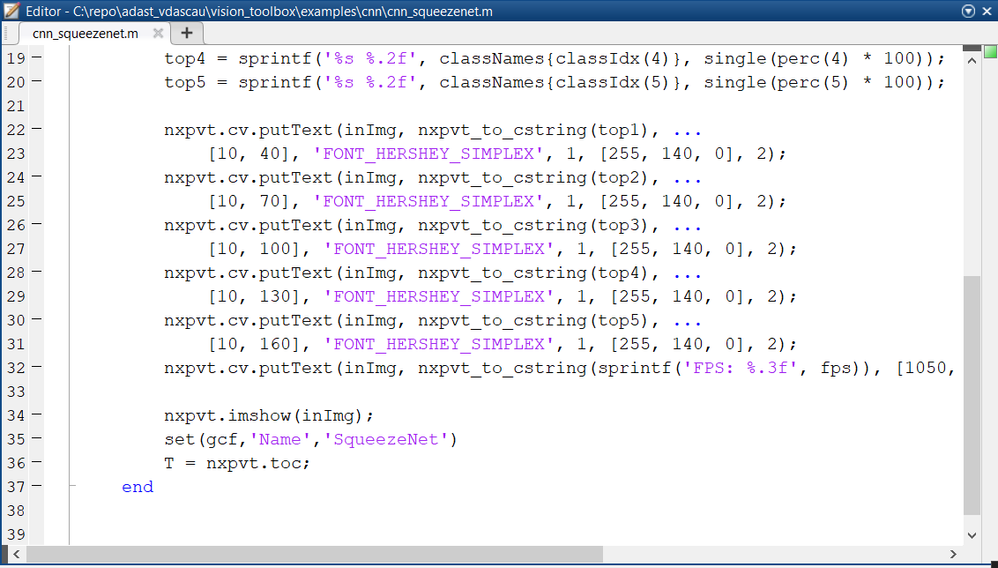

We display the top 5 classes and call nxpvt.toc() to determine how much time was needed to predict and display the image (measured from the matching nxpvt.tic call), in order to compute the frames per second. And we're done!
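The top-5 selection and the frames-per-second calculation described above can be illustrated in plain MATLAB. This sketch does not call the toolbox: it uses the built-in tic and toc in place of nxpvt.tic and nxpvt.toc, and the class names and scores are made-up values standing in for the output of predict.

```matlab
class_names = {'cat', 'dog', 'car', 'tree', 'cup', 'pen'};  % hypothetical labels
scores      = [0.05 0.60 0.10 0.02 0.20 0.03];              % hypothetical scores

t_start = tic;                      % start timing this frame

% Sort scores from highest to lowest and keep at most five entries.
[sorted_scores, order] = sort(scores, 'descend');
top_n = min(5, numel(scores));
for k = 1:top_n
    fprintf('%d. %s (%.1f%%)\n', k, class_names{order(k)}, 100 * sorted_scores(k));
end

elapsed = toc(t_start);             % seconds spent on this frame
fps = 1 / elapsed;                  % frames per second for this frame
fprintf('%.1f frames per second\n', fps);
```

The frame rate is simply the inverse of the time spent on one loop iteration, so anything done between the two timing calls (prediction, annotation, display) counts against it.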

5. CONCLUSIONS

The NXP Vision Toolbox removes the hassle and the extra steps that would otherwise be necessary to deploy Convolutional Neural Networks on the target directly from MATLAB. It also allows custom networks that are supported by MATLAB to be run with ease, providing smooth integration and headache-free execution. In the next course, we will focus extensively on how to retrain networks with Transfer Learning.