AI and Machine Learning Course #3: CNN using MATLAB Simulation Environment


In this article we are going to discuss the following topics:

- how to use a pre-trained CNN in MATLAB

- how to build a simple program that classifies objects using a CNN

- how to compare three CNNs in terms of accuracy and speed

- how to use the ISP camera of NXP's SBC S32V234 Evaluation Board to feed data into MATLAB simulations in real time

1. INTRODUCTION

The NXP Vision Toolbox offers support for integrating:

- MATLAB designed built-from-scratch CNN;

- MATLAB provided pre-trained CNN;

- MATLAB imported CNN from other deep learning frameworks via ONNX format;

...into your final application.

The NXP Vision Toolbox has an intuitive m-script API. Its built-in wrapper enables both simulation and deployment of the CNNs supported by MATLAB on NXP S32V embedded processors, for rapid prototyping and evaluation purposes.

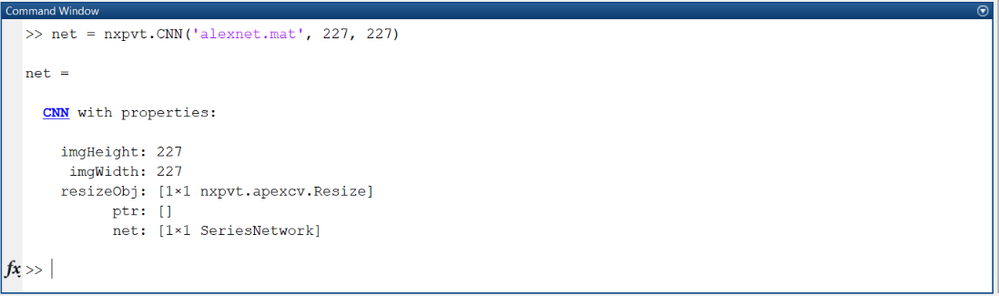

As mentioned at the beginning, the main focus here is on MATLAB simulation of CNN algorithms. The NXP Vision Toolbox API allows users to create a neural network that can then be executed in:

- MATLAB simulation;

- Real-time on NXP S32V hardware;

...using an easy syntax as shown below: nxpvt.CNN(...)

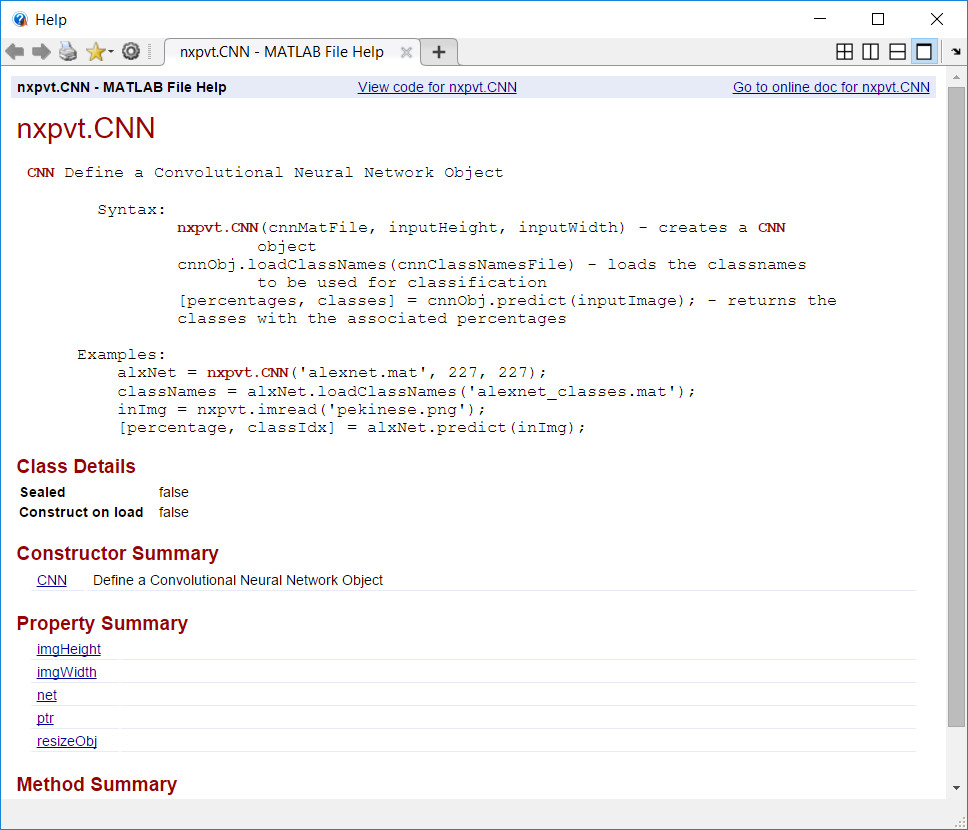

For more information about the nxpvt.CNN object, type help nxpvt.CNN in the MATLAB command line.

Therefore, when you want to create a CNN object from a pre-saved .mat file that contains a MATLAB-formatted and MATLAB-supported Neural Network you just need to specify:

- the .mat file;

- the input size;

You then need to load the class names (still a .mat file with the class names).

Currently, NXP Vision Toolbox supports only 3-channel neural networks, but we are planning to add support for a variable number of channels in the future.

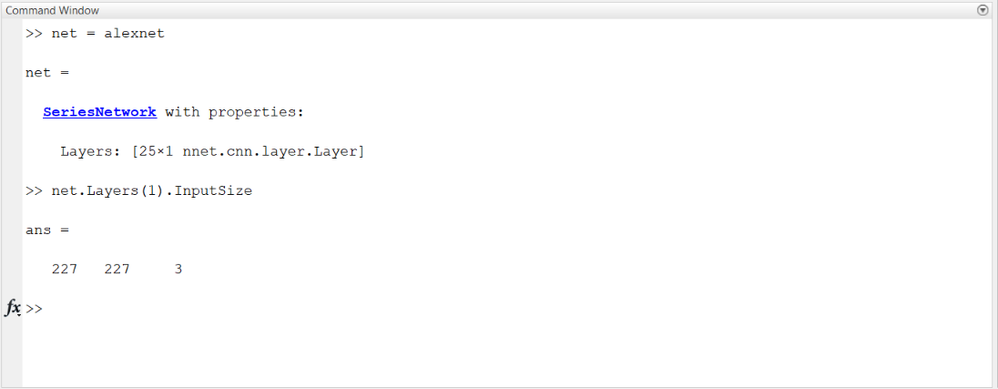

You can find out the input size of a MATLAB CNN very easily by using the command:

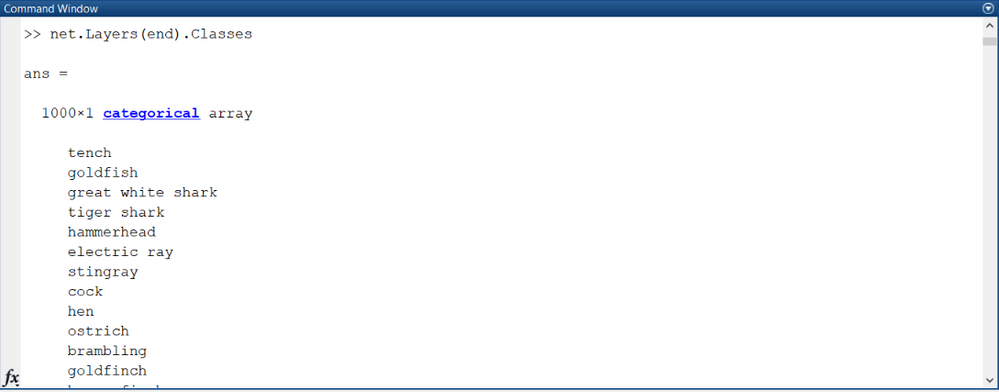
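In text form, such a query looks roughly like the sketch below. It uses the standard Deep Learning Toolbox `alexnet` function and assumes the AlexNet support package is installed; the variable names are illustrative and not taken from the toolbox.

```matlab
% Load the pre-trained AlexNet (assumes the Deep Learning Toolbox
% Model for AlexNet Network support package is installed).
net = alexnet;

% The first layer is the image input layer; InputSize is
% [height width channels].
inputSize = net.Layers(1).InputSize;
disp(inputSize);
```

For AlexNet the input size is 227-by-227-by-3, which matches the 3-channel restriction mentioned above.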

Additional information about the CNN, such as the class names, can be found by looking at the last (classification) layer of the network:

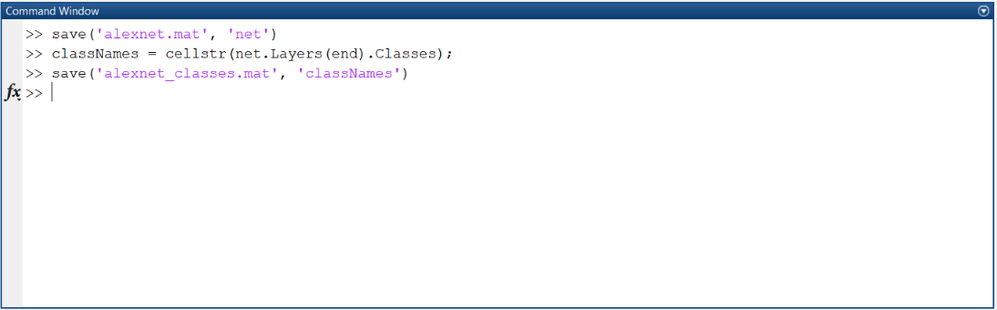

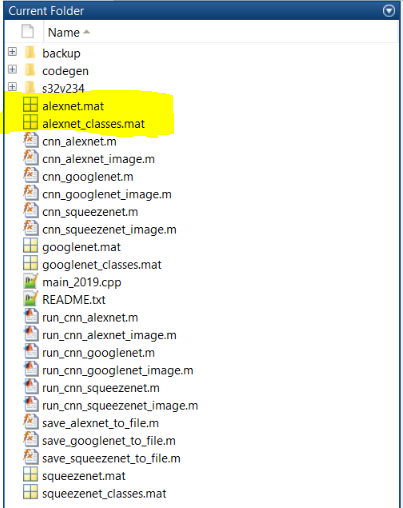

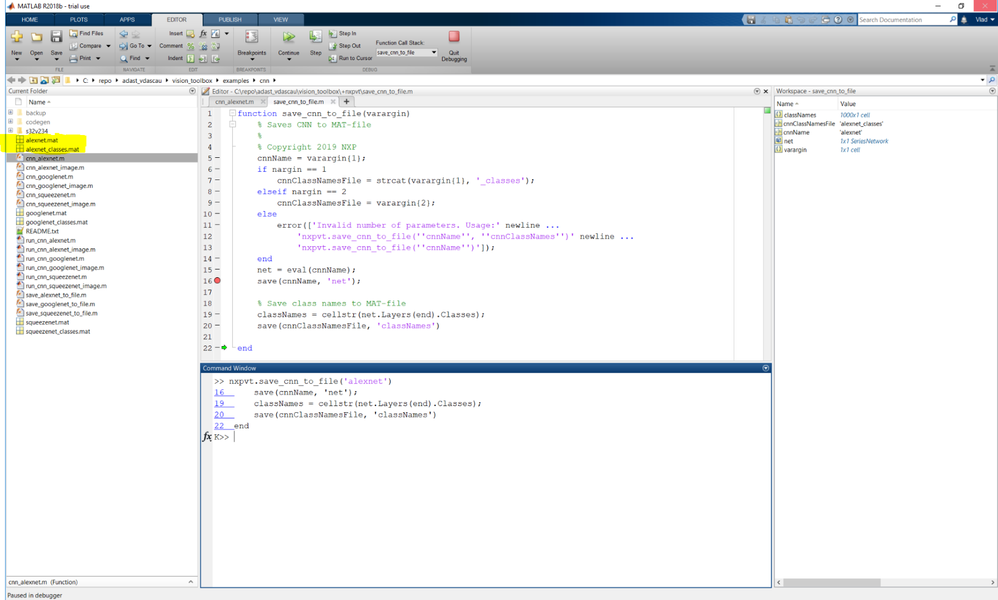

To save the network to a .mat file and to save the class names to a .mat file you should run the following commands:
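A minimal sketch of such commands, using only standard MATLAB and Deep Learning Toolbox calls. The variable names stored inside the two files (`net`, `classNames`) and the cell-array format of the class names are assumptions, not something the toolbox is confirmed to require; the `Classes` property needs R2018b or later.

```matlab
% Load the pre-trained AlexNet (assumes the AlexNet support package
% is installed).
net = alexnet;

% Save the whole network object to alexnet.mat in the current folder.
save('alexnet.mat', 'net');

% Read the class names from the last (classification) layer and
% convert them to a cell array of character vectors.
classNames = cellstr(net.Layers(end).Classes);

% Save the class names to a second .mat file.
save('alexnet_classes.mat', 'classNames');
```

If the toolbox expects a different layout inside the files, the helper functions described in the next section are the safer route.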

The result will be the creation of two .mat files in the current folder named alexnet.mat, which will contain the actual network with all the layers, and alexnet_classes.mat which will contain the class names. As mentioned below, the toolbox also supports helper functions for achieving this.

2. USING PRE-TRAINED MODELS

You can find a number of CNN examples in the vision_toolbox\examples\cnn folder. The NXP Vision Toolbox has three CNN examples that recognize objects in an image, taken either from the webcam on your laptop or from the MIPI-CSI camera attached to the S32V board.

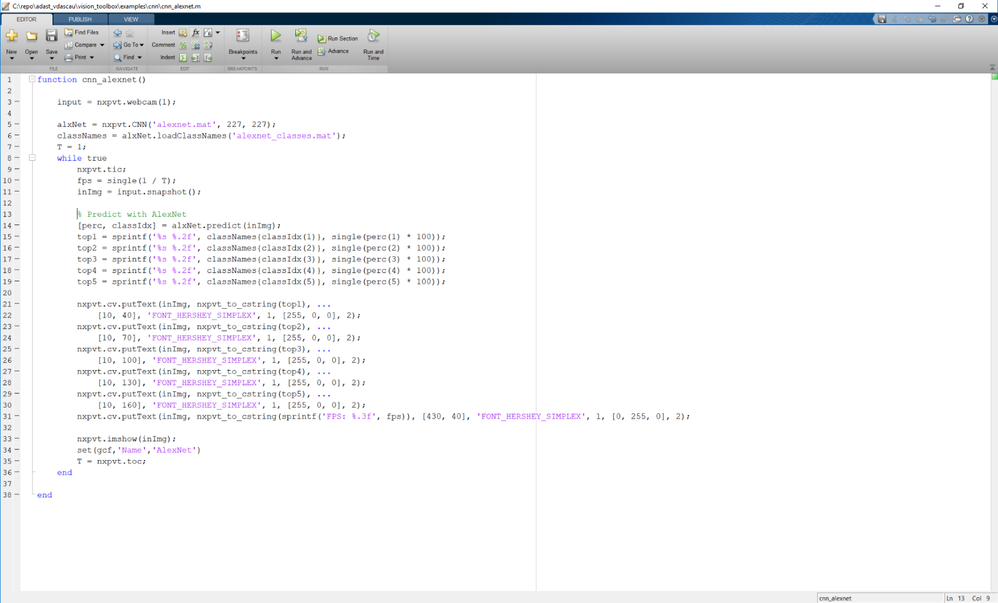

Below is a simple m-script that implements object classification based on the AlexNet CNN. In this example, the input image is obtained from the webcam using the built-in NXP Vision Toolbox wrapper nxpvt.webcam().

Then, a new alxNet object is created based on a pre-trained alexnet CNN provided by MATLAB.

The frames captured from the webcam are then processed one by one in an infinite loop, by feeding each frame to the predict() method of the object created at the beginning of the program.

The first five predictions are then displayed on top of the frame acquired from the webcam using another built-in NXP Vision Toolbox wrapper, nxpvt.imshow. Since this is simulation only, the program could be written without any nxpvt. prefixes, but in preparation for the next module on code generation we think it is better to get used to this notation as soon as possible.
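The "first five predictions" step can be sketched in plain MATLAB as follows. The class names and scores here are made-up illustrative values, and the sketch does not claim to reproduce what the toolbox's predict() method returns; it only shows how the top entries are picked from a score vector.

```matlab
% Illustrative inputs: six made-up class names with made-up scores.
classNames = {'cat', 'dog', 'mug', 'keyboard', 'mouse', 'lamp'};
scores     = [0.05 0.50 0.20 0.10 0.12 0.03];

% Sort the scores in descending order and remember the original indices.
[sortedScores, order] = sort(scores, 'descend');

% Keep at most the five best predictions.
numTop    = min(5, numel(scores));
topScores = sortedScores(1:numTop);
topNames  = classNames(order(1:numTop));

% Print one line per prediction, best first.
for k = 1:numTop
    fprintf('%d. %s (%.2f)\n', k, topNames{k}, topScores(k));
end
```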

These twenty-odd lines are all the code needed to create an AlexNet object, run the algorithm in simulation, and deploy it on the board. Before using the code, you need to obtain the pre-trained alexnet network and save it to a file using the nxpvt.save_cnn_to_file command:

There are also three scripts that do this automatically: save_alexnet_to_file, save_googlenet_to_file, save_squeezenet_to_file.

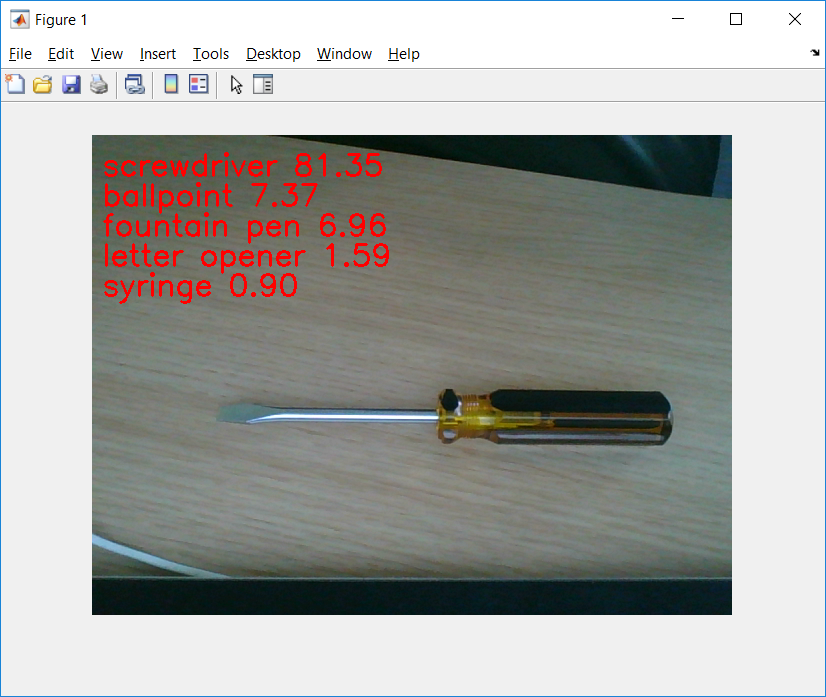

To run the AlexNet CNN example, execute cnn_alexnet.m.

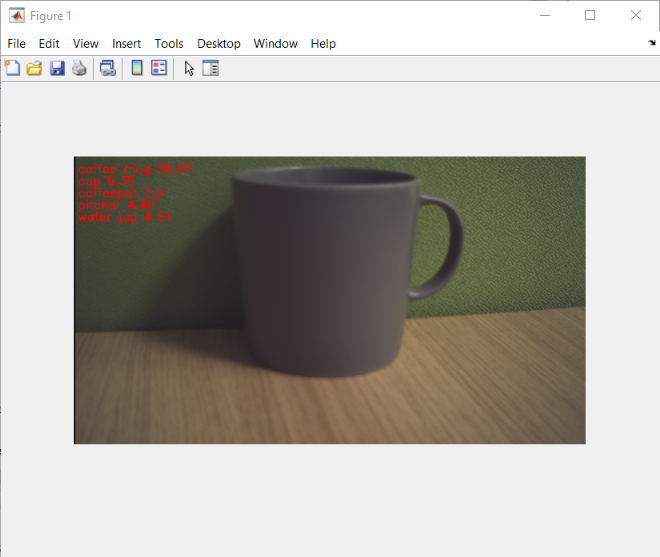

After running the m-script, you should see the following information in your MATLAB figure. As can be seen, the algorithm identifies objects against the background with high confidence.

3. COMPARISON BETWEEN THE MODELS

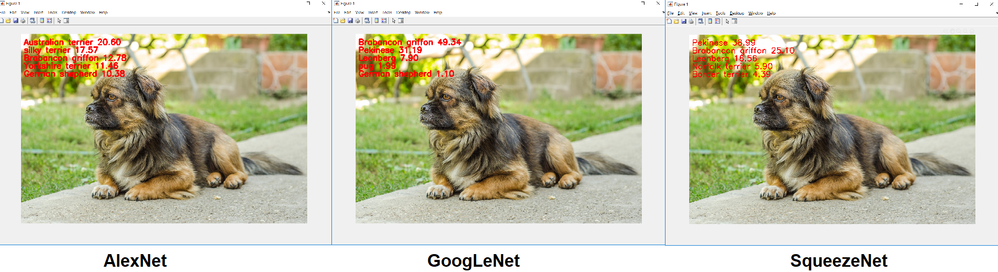

As you can see in the cnn_alexnet.m, cnn_googlenet.m and cnn_squeezenet.m files, the same code is used in all three. Apart from the .mat file passed to the CNN object, which must match the network, everything else is essentially identical.

You can also use cnn_alexnet_image.m, cnn_googlenet_image.m and cnn_squeezenet_image.m. We tested the CNN predictions on our colleague mariuslucianandrei's dog and got different results. All of the networks figured out that it is a dog, but the most accurate one was SqueezeNet, which predicted a Pekingese.

DISCLAIMER: The dog is mixed-breed so we can't really blame any of them :-)

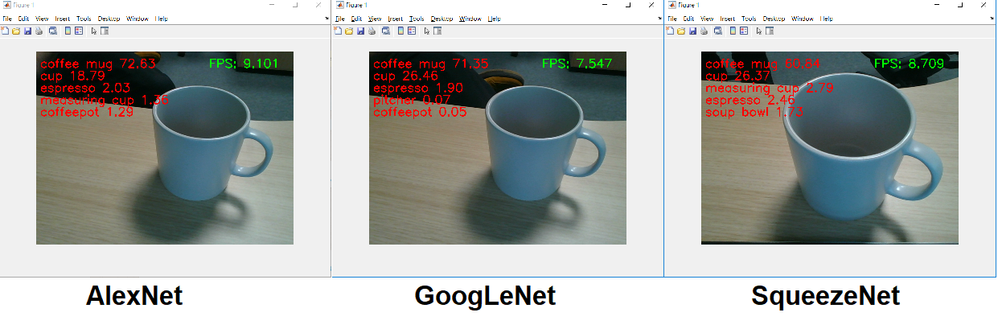

In terms of speed and accuracy on frames from a video captured with the webcam in MATLAB, we observed the following performance:

These performance differences aren't necessarily significant, since the results depend heavily on the lighting and shadows in the images. SqueezeNet was optimized for a small model size, and its performance is comparable to AlexNet's.
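To collect speed figures of this kind yourself, a simple timing harness around the per-frame call is enough. The sketch below is a generic pattern in plain MATLAB, not the procedure used for the numbers in this article; `processFrame` is a hypothetical stand-in that should be replaced with the actual classification call.

```matlab
% Hypothetical stand-in for the per-frame work; replace it with the
% real classification call when measuring a network.
processFrame = @(frame) sum(frame(:));

% Dummy AlexNet-sized frame so that the sketch runs without a camera.
frame     = zeros(227, 227, 3, 'uint8');
numFrames = 50;

% Warm-up call so that one-time setup cost is not measured.
processFrame(frame);

% Time a fixed number of frames.
tStart = tic;
for k = 1:numFrames
    processFrame(frame);
end
elapsed = toc(tStart);

fprintf('%.3f ms per frame, %.1f frames/s\n', ...
        1000 * elapsed / numFrames, numFrames / elapsed);
```

Running the same harness with each network on the same recorded frames gives a fairer comparison than live webcam input, where the scene changes between runs.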

4. PROCESSOR IN THE LOOP SIMULATION

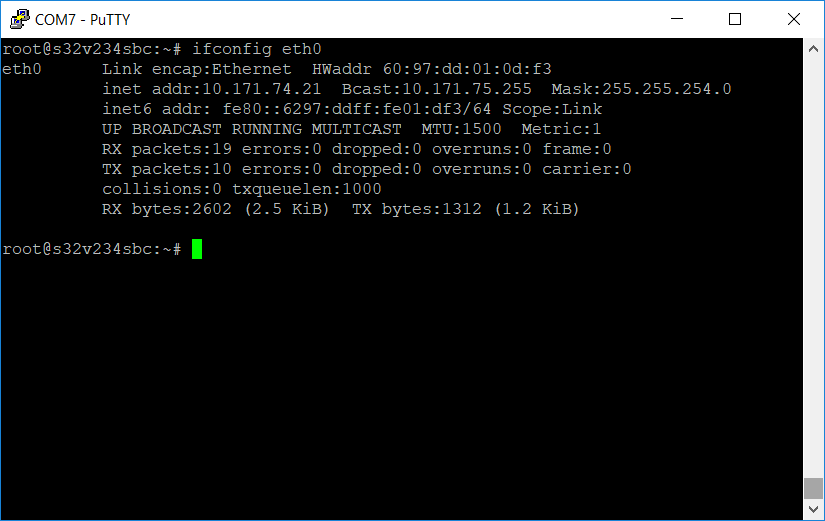

There is also the possibility to get the images from the S32V234 board and run the algorithm in MATLAB. To do so, you have to use the S32V234 connectivity object. An example using SqueezeNet is provided in the vision_toolbox\examples\cnn\s32v234\ folder. To do this, you should get your board's IP Address:

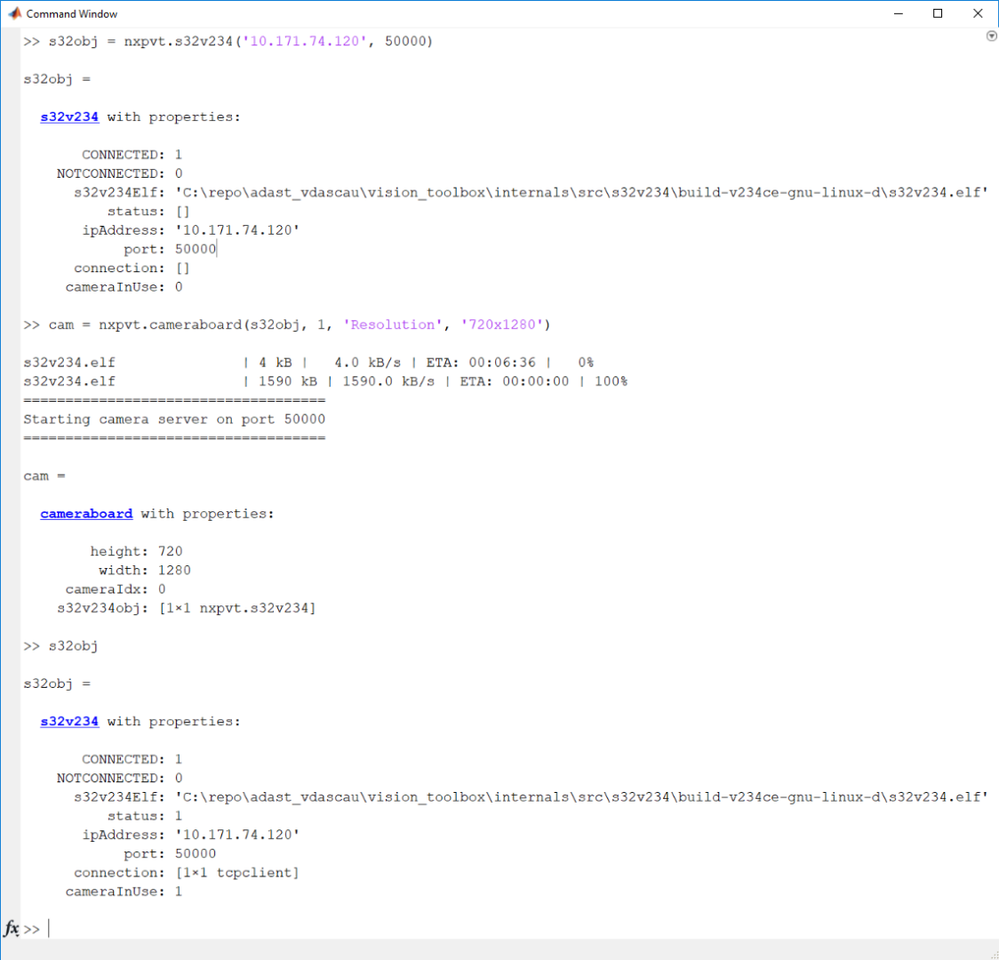

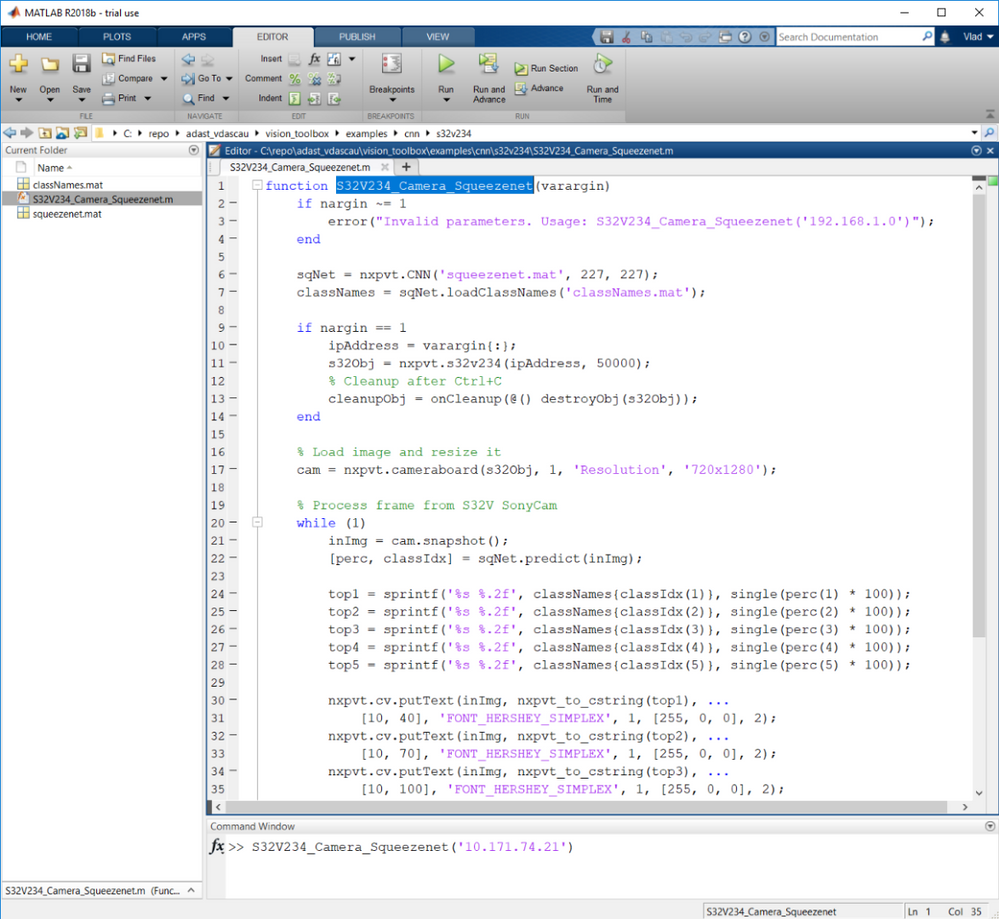

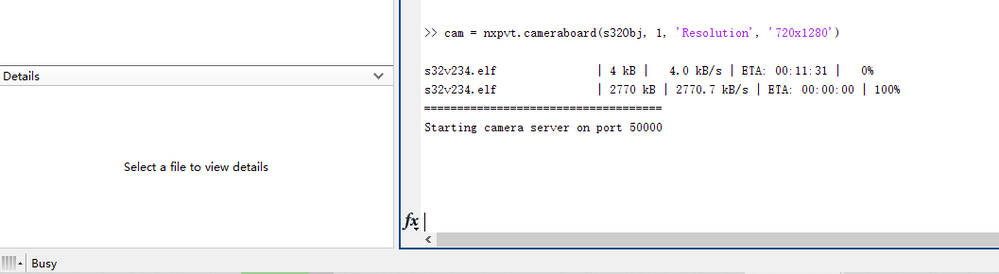

The example uses port 50000 by default, so make sure this port is free. The example runs SqueezeNet by using the nxpvt.CNN API discussed above to wrap the network, and creates an object for communicating with the board through a TCP/IP socket. Getting frames from the camera follows the same approach as the Raspberry Pi MATLAB object, so if you're familiar with that, this should be easy to set up. First, create an nxpvt.s32v234 object that takes an IP address and a port. Then, in the same manner as for the camera board on the Raspberry Pi, create another object that specifies the camera index (1 for the MIPI-A connected camera, 2 for the MIPI-B connected camera) and the resolution, in the following way:

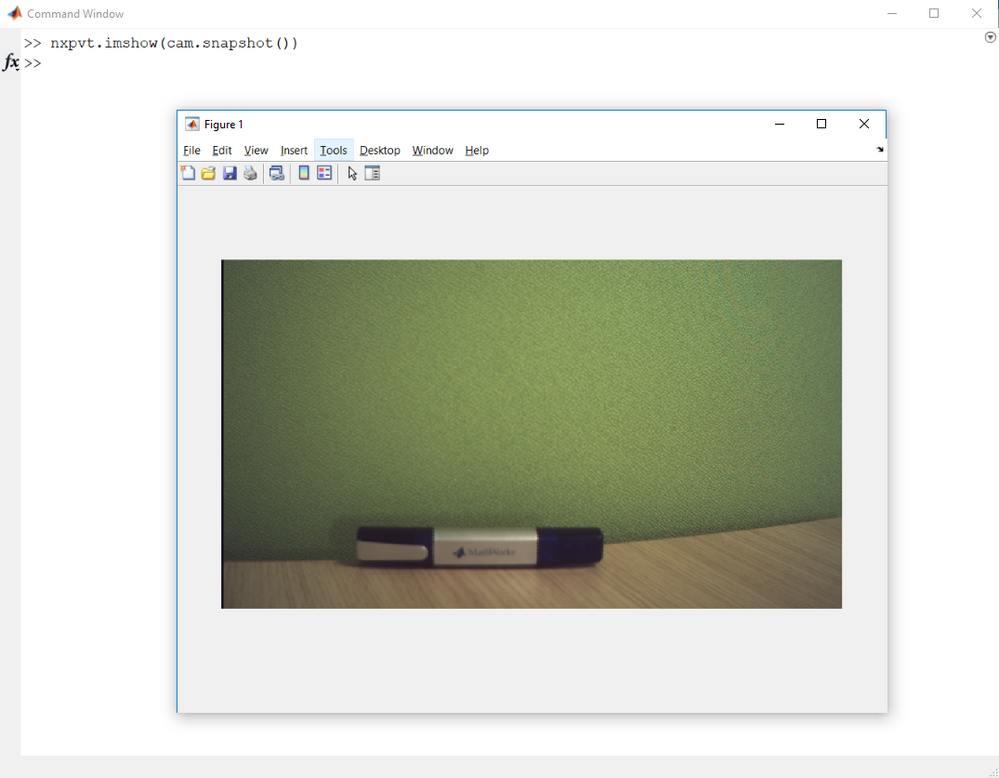

As you can see, s32obj.cameraInUse becomes true after the cameraboard object is connected to the S32V board; initializing and configuring the low-level drivers and the ISP-specific details is done automatically. Getting a frame from the camera is then as easy as calling the snapshot method on the cameraboard object:

This communication object is used in the SqueezeNet demo below, which is running the object detection algorithm in MATLAB with frames from the attached camera:

If we run the above m-script in MATLAB with data from the S32V Sony camera we should obtain:

5. CONCLUSIONS

Using these pre-trained models has the limitation of only being able to classify images based on the predefined classes the models were trained on. However, you can use Transfer Learning, which we will discuss in a future article, to train the network to recognize a set of user-defined custom classes.

In conclusion, simulation in the MATLAB environment with the NXP Vision Toolbox is straightforward. The flexibility that MATLAB provides through the Deep Learning Toolbox and its pre-trained models is integrated into the toolbox, giving MATLAB developers a friendly and familiar environment.


Hello, dumitru-daniel.popa nxf40536

I tried to start the camera, but it is stuck there and the server cannot be started.

This is what it looks like: I can see that MATLAB is busy running, but the board doesn't respond at all.

Could you please give me some suggestions about this problem?

By the way, the Vision SDK I used is version 1.6. Could this be a compatibility problem? And can I install multiple versions of the Vision SDK?

Thank you very much.

-------------------------------------------------

Update

-------------------------------------------------

Besides, when I tried to compile the example 'IO.m' in MATLAB using the SONYCAM from the board, it reported the following errors, where there are many undefined references. Could you please give some suggestions for fixing these problems?

Thank you very much.

---

arm64/gnu/optimized LD IO.elf <= nxpvt_umat_w-cpp.o camera_input-cpp.o nxpvt_imread_w-cpp.o nxpvt_imshow_w-cpp.o nxpvt_videoinput-cpp.o nxpvt_videoinput_w-cpp.o nxpvt_videoreader-cpp.o nxpvt_videoreader_w-cpp.o IO_data-cpp.o IO_initialize-cpp.o IO_terminate-cpp.o IO-cpp.o main-cpp.o /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/apex/acf/build-v234ce-gnu-linux-o/libacf.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/apex/drivers/user/build-v234ce-gnu-linux-o/libapexdrv.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/apex/icp/build-v234ce-gnu-linux-o/libicp.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/apexcv_base/apexcv_common/build-v234ce-gnu-linux-o/apexcv_common.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/utils/common/build-v234ce-gnu-linux-o/libcommon.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/utils/communications/build-v234ce-gnu-linux-o/lib_communications.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/utils/log/build-v234ce-gnu-linux-o/liblog.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/utils/sumat/build-v234ce-gnu-linux-o/libsumat.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/utils/umat/build-v234ce-gnu-linux-o/libumat.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/isp/graphs/mipi_simple/dynamic_mipi_simple/build-v234ce-gnu-linux-o/libdynamic_mipi_simple.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/io/frame_io/build-v234ce-gnu-linux-o/libframe_io.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/io/sdi/build-v234ce-gnu-linux-o/libsdi.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/cam_generic/user/build-v234ce-gnu-linux-o/libcamdrv.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/csi/user/build-v234ce-gnu-linux-o/libcsidrv.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/fdma/user/build-v234ce-gnu-linux-o/libfdmadrv.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/h264dec/user/build-v234ce-gnu-linux-o/libh264decdrv.a 
/c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/h264enc/user/build-v234ce-gnu-linux-o/libh264encdrv.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/jpegdec/user/build-v234ce-gnu-linux-o/libjpegdecdrv.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/sequencer/user/build-v234ce-gnu-linux-o/libseqdrv.a /c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/viu/user/build-v234ce-gnu-linux-o/libviudrv.a

C:/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/io/sdi/build-v234ce-gnu-linux-o/libsdi.a(sdi-cpp.o): In function `sdi_grabber::Boot()':

sdi.cpp:(.text._ZN11sdi_grabber4BootEv+0xd4): undefined reference to `CGL_Config(cgl_SystemExtCfg_t const&)'

C:/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/io/sdi/build-v234ce-gnu-linux-o/libsdi.a(sdi-cpp.o): In function `sdi_grabber::CsiEventHandler(unsigned int)':

sdi.cpp:(.text._ZN11sdi_grabber15CsiEventHandlerEj+0x130): undefined reference to `CGL_StartStr()'

sdi.cpp:(.text._ZN11sdi_grabber15CsiEventHandlerEj+0x160): undefined reference to `CGL_StopStr()'

C:/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/io/sdi/build-v234ce-gnu-linux-o/libsdi.a(sdi-cpp.o): In function `sdi_grabber::ReleasePrv()':

sdi.cpp:(.text._ZN11sdi_grabber10ReleasePrvEv+0xe0): undefined reference to `CGL_Close()'

C:/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/io/sdi/build-v234ce-gnu-linux-o/libsdi.a(sdi-cpp.o): In function `sdi_grabber::Start(unsigned int, unsigned int)':

sdi.cpp:(.text._ZN11sdi_grabber5StartEjj+0xc0): undefined reference to `CGL_StartStr()'

C:/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/io/sdi/build-v234ce-gnu-linux-o/libsdi.a(sdi-cpp.o): In function `sdi_grabber::Stop()':

sdi.cpp:(.text._ZN11sdi_grabber4StopEv+0xc8): undefined reference to `CGL_StopStr()'

C:/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/io/sdi/build-v234ce-gnu-linux-o/libsdi.a(sdi-cpp.o): In function `sdi_grabber::IOsReserve()':

sdi.cpp:(.text._ZN11sdi_grabber10IOsReserveEv+0xbc): undefined reference to `CGL_Open(cgl_System_t const&)'

sdi.cpp:(.text._ZN11sdi_grabber10IOsReserveEv+0xec): undefined reference to `CGL_Close()'

C:/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/io/sdi/build-v234ce-gnu-linux-o/libsdi.a(sdi-cpp.o): In function `sdi_grabber::PreStart(cgl_System_t const*)':

sdi.cpp:(.text._ZN11sdi_grabber8PreStartEPK12cgl_System_t+0x13c): undefined reference to `CGL_Close()'

C:/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/cam_generic/user/build-v234ce-gnu-linux-o/libcamdrv.a(sony_user-cpp.o): In function `SONY_RegConfig(CSI_IDX, unsigned char)':

sony_user.cpp:(.text._Z14SONY_RegConfig7CSI_IDXh+0xb0): undefined reference to `CGL_ExecTable(cgl_CmdTable const&)'

sony_user.cpp:(.text._Z14SONY_RegConfig7CSI_IDXh+0x100): undefined reference to `CGL_Read(cgl_PortIdx_t, cgl_Cmd_t const&, unsigned char*)'

sony_user.cpp:(.text._Z14SONY_RegConfig7CSI_IDXh+0x134): undefined reference to `CGL_Read(cgl_PortIdx_t, cgl_Cmd_t const&, unsigned char*)'

sony_user.cpp:(.text._Z14SONY_RegConfig7CSI_IDXh+0x190): undefined reference to `CGL_Read(cgl_PortIdx_t, cgl_Cmd_t const&, unsigned char*)'

sony_user.cpp:(.text._Z14SONY_RegConfig7CSI_IDXh+0x1c4): undefined reference to `CGL_Read(cgl_PortIdx_t, cgl_Cmd_t const&, unsigned char*)'

sony_user.cpp:(.text._Z14SONY_RegConfig7CSI_IDXh+0x214): undefined reference to `CGL_Read(cgl_PortIdx_t, cgl_Cmd_t const&, unsigned char*)'

C:/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/cam_generic/user/build-v234ce-gnu-linux-o/libcamdrv.a(sony_user-cpp.o):sony_user.cpp:(.text._Z14SONY_RegConfig7CSI_IDXh+0x24c): more undefined references to `CGL_Read(cgl_PortIdx_t, cgl_Cmd_t const&, unsigned char*)' follow

C:/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/cam_generic/user/build-v234ce-gnu-linux-o/libcamdrv.a(sony_user-cpp.o): In function `SONY_GeometrySet(SONY_Geometry&)':

sony_user.cpp:(.text._Z16SONY_GeometrySetR13SONY_Geometry+0xac): undefined reference to `CGL_Write(cgl_PortIdx_t, cgl_Cmd_t const&, unsigned char const*)'

sony_user.cpp:(.text._Z16SONY_GeometrySetR13SONY_Geometry+0x100): undefined reference to `CGL_Write(cgl_PortIdx_t, cgl_Cmd_t const&, unsigned char const*)'

sony_user.cpp:(.text._Z16SONY_GeometrySetR13SONY_Geometry+0x294): undefined reference to `CGL_Write(cgl_PortIdx_t, cgl_Cmd_t const&, unsigned char const*)'

sony_user.cpp:(.text._Z16SONY_GeometrySetR13SONY_Geometry+0x2c8): undefined reference to `CGL_Write(cgl_PortIdx_t, cgl_Cmd_t const&, unsigned char const*)'

sony_user.cpp:(.text._Z16SONY_GeometrySetR13SONY_Geometry+0x314): undefined reference to `CGL_Write(cgl_PortIdx_t, cgl_Cmd_t const&, unsigned char const*)'

C:/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/cam_generic/user/build-v234ce-gnu-linux-o/libcamdrv.a(sony_user-cpp.o):sony_user.cpp:(.text._Z16SONY_GeometrySetR13SONY_Geometry+0x348): more undefined references to `CGL_Write(cgl_PortIdx_t, cgl_Cmd_t const&, unsigned char const*)' follow

C:/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/libs/isp/sequencer/user/build-v234ce-gnu-linux-o/libseqdrv.a(lin_seq_user-cpp.o): In function `SEQ_FwArrDownload(unsigned char const*, SEQ_FwType)':

lin_seq_user.cpp:(.text._Z17SEQ_FwArrDownloadPKh10SEQ_FwType+0xbc): undefined reference to `CSE_IsEnabled()'

lin_seq_user.cpp:(.text._Z17SEQ_FwArrDownloadPKh10SEQ_FwType+0x104): undefined reference to `CSE_Open()'

lin_seq_user.cpp:(.text._Z17SEQ_FwArrDownloadPKh10SEQ_FwType+0x138): undefined reference to `CSE_LoadKey(unsigned char const*, unsigned char const*, unsigned char const*, unsigned char)'

lin_seq_user.cpp:(.text._Z17SEQ_FwArrDownloadPKh10SEQ_FwType+0x160): undefined reference to `CSE_LoadSecRam(unsigned char const*, unsigned char const*, unsigned char const*, unsigned char)'

lin_seq_user.cpp:(.text._Z17SEQ_FwArrDownloadPKh10SEQ_FwType+0x170): undefined reference to `CSE_Close()'

lin_seq_user.cpp:(.text._Z17SEQ_FwArrDownloadPKh10SEQ_FwType+0x1bc): undefined reference to `CSE_Close()'

lin_seq_user.cpp:(.text._Z17SEQ_FwArrDownloadPKh10SEQ_FwType+0x1e8): undefined reference to `CSE_Close()'

lin_seq_user.cpp:(.text._Z17SEQ_FwArrDownloadPKh10SEQ_FwType+0x200): undefined reference to `CSE_LoadSecRam(unsigned char const*, unsigned char const*, unsigned char const*, unsigned char)'

collect2.exe: error: ld returned 1 exit status

make[3]: *** [/c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/build/nbuild/.C0.mk:139: IO.elf] Error 1

make[3]: Leaving directory '/c/Users/tjhua/Documents/MATLAB/Add-Ons/Toolboxes/NXP_Vision_Toolbox_for_S32V234/examples/basic/io/codegen/exe/IO/build-v234ce-gnu-linux-o'

make[2]: *** [/c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/build/nbuild/platforms/coordinator.mk:115: all] Error 2

make[2]: Leaving directory '/c/Users/tjhua/Documents/MATLAB/Add-Ons/Toolboxes/NXP_Vision_Toolbox_for_S32V234/examples/basic/io/codegen/exe/IO/build-v234ce-gnu-linux-o'

make[1]: *** [/c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/build/nbuild/sub.mk:78: allsub] Terminated

make[1]: Leaving directory '/c/Users/tjhua/Documents/MATLAB/Add-Ons/Toolboxes/NXP_Vision_Toolbox_for_S32V234/examples/basic/io/codegen/exe/IO/build-v234ce-gnu-linux-o'

make: *** [/c/NXP/VisionSDK_S32V2_RTM_1_6_0/s32v234_sdk/build/nbuild/platforms/coordinator.mk:115: allsub] Error 2

Warning: Cannot deploy on target. Compilation failed.

---

-------------------------------------------------

Update

-------------------------------------------------

Again, when I tried to compile the CNN demos, the following errors appeared at the end. There seems to be something wrong with the source code. Could you please provide some suggestions about this error? Thank you very much.

Deploying convnet.elf on 192.168.1.10 ...

*** Error in `./convnet.elf': free(): invalid pointer: 0x000000001ed2bc08 ***

../../src/apexcv_pro_resize.cpp (176) L1 - lExtWidth 100 < 104 lTargetWidth

C:/Users/tjhua/Documents/MATLAB/Add-Ons/Toolboxes/NXP_Vision_Toolbox_for_S32V234//internals/src/s32v/nxpvt_apexcv_resize_w.cpp : void* nxpvt::apexcv_resize_constructor() [27]

Cleaning up, please wait ...

Best regards

Chao


Hi thuhc

You should use the 1.4.0 SDK, as there is no support for newer versions at the moment. And yes, you can install multiple versions of the SDK, but keep in mind that you also have to set the S32V234_SDK_ROOT environment variable to point to the installation you want to use when compiling any of the examples. Also, close MATLAB and open it again after you set the environment variable. If you still have issues after installing the 1.4.0 version, I would be more than happy to help.
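A quick way to confirm which SDK installation a MATLAB session actually sees is to read the variable from the command window. This is a minimal sketch using only standard MATLAB functions (`isfolder` needs R2017b or later); it reads the variable and changes nothing.

```matlab
% Read the SDK root as seen by the current MATLAB session.
sdkRoot = getenv('S32V234_SDK_ROOT');

if isempty(sdkRoot)
    % getenv returns an empty value when the variable is not defined.
    disp('S32V234_SDK_ROOT is not set in this MATLAB session.');
elseif ~isfolder(sdkRoot)
    fprintf('S32V234_SDK_ROOT points to a missing folder: %s\n', sdkRoot);
else
    fprintf('S32V234_SDK_ROOT = %s\n', sdkRoot);
end
```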

Thank you,

Vlad Dascau