- Forums

- Product Forums

- General Purpose MicrocontrollersGeneral Purpose Microcontrollers

- i.MX Forumsi.MX Forums

- QorIQ Processing PlatformsQorIQ Processing Platforms

- Identification and SecurityIdentification and Security

- Power ManagementPower Management

- Wireless ConnectivityWireless Connectivity

- RFID / NFCRFID / NFC

- Advanced AnalogAdvanced Analog

- MCX Microcontrollers

- S32G

- S32K

- S32V

- MPC5xxx

- Other NXP Products

- S12 / MagniV Microcontrollers

- Powertrain and Electrification Analog Drivers

- Sensors

- Vybrid Processors

- Digital Signal Controllers

- 8-bit Microcontrollers

- ColdFire/68K Microcontrollers and Processors

- PowerQUICC Processors

- OSBDM and TBDML

- S32M

- S32Z/E

-

- Solution Forums

- Software Forums

- MCUXpresso Software and ToolsMCUXpresso Software and Tools

- CodeWarriorCodeWarrior

- MQX Software SolutionsMQX Software Solutions

- Model-Based Design Toolbox (MBDT)Model-Based Design Toolbox (MBDT)

- FreeMASTER

- eIQ Machine Learning Software

- Embedded Software and Tools Clinic

- S32 SDK

- S32 Design Studio

- GUI Guider

- Zephyr Project

- Voice Technology

- Application Software Packs

- Secure Provisioning SDK (SPSDK)

- Processor Expert Software

- Generative AI & LLMs

-

- Topics

- Mobile Robotics - Drones and RoversMobile Robotics - Drones and Rovers

- NXP Training ContentNXP Training Content

- University ProgramsUniversity Programs

- Rapid IoT

- NXP Designs

- SafeAssure-Community

- OSS Security & Maintenance

- Using Our Community

-

- Cloud Lab Forums

-

- Knowledge Bases

- ARM Microcontrollers

- i.MX Processors

- Identification and Security

- Model-Based Design Toolbox (MBDT)

- QorIQ Processing Platforms

- S32 Automotive Processing Platform

- Wireless Connectivity

- CodeWarrior

- MCUXpresso Suite of Software and Tools

- MQX Software Solutions

- RFID / NFC

- Advanced Analog

-

- NXP Tech Blogs

- Home

- :

- i.MX フォーラム

- :

- i.MXプロセッサ

- :

- eIQ export TFLite model, output format can't got the correct finish the inference

eIQ export TFLite model, output format can't got the correct finish the inference

- RSS フィードを購読する

- トピックを新着としてマーク

- トピックを既読としてマーク

- このトピックを現在のユーザーにフロートします

- ブックマーク

- 購読

- ミュート

- 印刷用ページ

eIQ export TFLite model, output format can't got the correct finish the inference

- 新着としてマーク

- ブックマーク

- 購読

- ミュート

- RSS フィードを購読する

- ハイライト

- 印刷

- 不適切なコンテンツを報告

because we would like to deploy the TF Lite model into our edge device (iMX8M Plus EVK), and we following the guide for the model training, but we cannot show the result successful.

I've used the Model tool to check the in/output result.

also compare with the Google example,

and saw the model from eIQ export was 'MLIR' , but the Google's one was TOCO, not sure it was any impact or how to transfer that.

- 新着としてマーク

- ブックマーク

- 購読

- ミュート

- RSS フィードを購読する

- ハイライト

- 印刷

- 不適切なコンテンツを報告

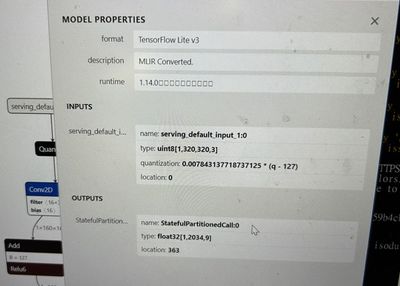

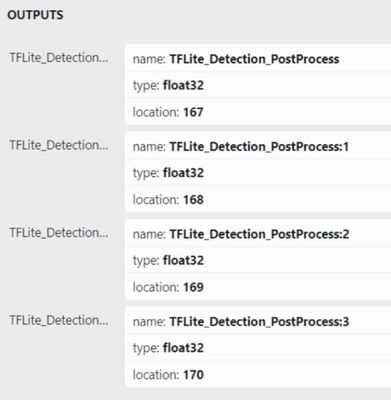

I installed the eIQ(1.8) and used the default model (path: workspace\models\mobilenet_ssd_v3\)

then using the model tool to check the result (in/output)

but I try to used the eIQ training model (tflite) but show different output filed.

- 新着としてマーク

- ブックマーク

- 購読

- ミュート

- RSS フィードを購読する

- ハイライト

- 印刷

- 不適切なコンテンツを報告

Hi @HenryYeh,

Thank you for your reply.

In this case you will need to export the model after the training process.

- 新着としてマーク

- ブックマーク

- 購読

- ミュート

- RSS フィードを購読する

- ハイライト

- 印刷

- 不適切なコンテンツを報告