JN5169 Data transmit request-confirm time


Hi,

I am trying to measure the time interval between a data request and the corresponding data confirm on a single transmitting device, but I am not 100% sure that my timer code is correct, or even whether this can be measured simply by reading the time in the MCPS-DATA.request and MCPS-DATA.confirm handlers. Perhaps there are some delays I am not aware of? I am using JN-AN-1174 as a base.

My timer code looks like this:

    PRIVATE void vTimer0Callback(uint32 u32Device, uint32 u32ItemBitmap)
    {
        tickCount++;
    }

    PUBLIC void vInitTimer()
    {
        tickCount = 0;

        // 16 MHz clock
        // With a 2^4 prescaler: 16 MHz / 2^4 = 1 MHz = 1 000 000 Hz
        // Therefore 1 tick = 1 / 1 000 000 s = 1 us (microsecond)
        vAHI_TimerEnable(E_AHI_TIMER_0, // Using Timer 0 (options 0-4)
                         4,             // Prescale 2^4
                         FALSE,         // Enable interrupt at the beginning of timer (lowToHigh)
                         TRUE,          // Enable interrupt at the end of timer (highToLow)
                         FALSE);        // OutputEnabled: FALSE = Timer mode, TRUE = PWM or Delta-Sigma mode

        vAHI_TimerClockSelect(E_AHI_TIMER_0,
                              FALSE,    // FALSE = use the internal 16 MHz clock, TRUE = use external clock
                              TRUE);

        vAHI_Timer0RegisterCallback(vTimer0Callback);

        vAHI_TimerStartRepeat(E_AHI_TIMER_0,
                              0,
                              60000);   // repeat every 60 ms
    }

    PUBLIC uint32 vGetMicroSeconds()
    {
        return (u16AHI_TimerReadCount(E_AHI_TIMER_0) + (60000 * tickCount));
    }

Inside PRIVATE void vTransmitDataPacket(uint8 *pu8Data, uint8 u8Len, uint16 u16DestAdr), I get the current time:

    /* Request transmit */
    DBG_vPrintf(TRUE, "%d MicroSec | Transmit Request with size %d \n", vGetMicroSeconds(), sMcpsReqRsp.uParam.sReqData.sFrame.u8SduLength);
    vAppApiMcpsRequest(&sMcpsReqRsp, &sMcpsSyncCfm);

and inside PRIVATE void vHandleMcpsDataDcfm(MAC_McpsDcfmInd_s *psMcpsInd), I get the current time again:

    if (psMcpsInd->uParam.sDcfmData.u8Status == MAC_ENUM_SUCCESS)
    {
        DBG_vPrintf(TRUE, "%d MicroSec | Data transmit Confirm \n", vGetMicroSeconds());
    }

Finally, I transmit 3 packets of 4 bytes:

    vTransmitDataPacket(data, 4, 0x0000);
    vTransmitDataPacket(data, 4, 0x0000);
    vTransmitDataPacket(data, 4, 0x0000);

The result:

    20726445 MicroSec | Transmit Request with size 4
    20731540 MicroSec | Transmit Request with size 4
    20736663 MicroSec | Transmit Request with size 4
    20741786 MicroSec | Data transmit Confirm
    20746197 MicroSec | Data transmit Confirm
    20750579 MicroSec | Data transmit Confirm

Random backoff and retries are set to 0:

    // No CSMA backoff retries
    MAC_vPibSetMaxCsmaBackoffs(s_pvMac, 0);
    // No random backoff periods
    MAC_vPibSetMinBe(s_pvMac, 0);

The time between the first request and the first confirm should be no more than 4256 microseconds (data frame transmission time) + 128 microseconds (CCA time), with no random backoff and no ACK, which gives 4384 microseconds for the maximum payload (114 bytes). Instead, my test with only 4 bytes of payload resulted in 15,341 microseconds (20741786 - 20726445). Could anybody point me in the right direction?

Is it the timer code or something else?
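The latencies quoted above can be recomputed from the logged timestamps with a small helper. The sketch below is only an illustration: the function names are hypothetical, and pairing the i-th request with the i-th confirm assumes the confirms arrive in request order. It relies on unsigned 32-bit subtraction, which stays correct across a single wraparound of a microsecond counter (roughly every 71.6 minutes at 1 MHz).

```c
#include <stdint.h>
#include <stddef.h>

/* Elapsed microseconds between two readings of a free-running 32-bit
 * counter. Unsigned subtraction is modulo 2^32, so the result is still
 * right if the counter wrapped once between start and end. */
uint32_t elapsed_us(uint32_t start, uint32_t end)
{
    return end - start;
}

/* Hypothetical helper: pair request i with confirm i (assumes confirms
 * arrive in the same order as the requests) and store each latency. */
void request_confirm_latencies(const uint32_t *req, const uint32_t *cfm,
                               size_t n, uint32_t *out)
{
    for (size_t i = 0; i < n; i++) {
        out[i] = elapsed_us(req[i], cfm[i]);
    }
}
```

Fed with the six timestamps from the log, this gives 15341, 14657 and 13916 us for the three packets under that pairing assumption.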

Hi Alberto,

I hope you are doing great.

Could you please provide more details about your application? What is the reason for getting the time between the data request and data confirmation?

> Could anybody point me in the right direction?
>
> Is it the timer code or something else?

You have to be careful about the time the MCU itself needs to read out the time.

The "if" that checks for MAC_ENUM_SUCCESS is reached only after the data frame transmission has succeeded, and this validation also takes some time.

You could double-check the packets over the air using a sniffer.

Regards,

Mario

Hi Mario,

Thank you very much for your reply.

The reason for measuring the time between the request and the data confirm is to learn the internal delays between the moment we give the order to transmit a packet and the moment the packet has been sent successfully, and also to know when turnarounds occur. The goal is to reproduce the same behavior in simulations.

You are totally right. In my case, the delay came not only from the MCU reading the time and from the validations in the program, but also from DBG_vPrintf itself, which added a huge delay (on the order of 3000 us).

I solved this by capturing the time only at the moments I needed and printing the resulting times at the end.
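A minimal sketch of that "capture now, print later" approach is shown here. It is not the code used in the thread: the type `ts_log_t`, the function names and the capacity of 32 entries are all hypothetical. The idea is that recording a timestamp costs only a couple of stores, so the slow print call stays out of the measured path.

```c
#include <stdint.h>
#include <stddef.h>

#define TS_LOG_CAPACITY 32u  /* hypothetical size; choose it to fit the test run */

typedef struct {
    uint32_t stamp_us[TS_LOG_CAPACITY]; /* captured timestamps in microseconds */
    uint8_t  event_id[TS_LOG_CAPACITY]; /* caller-defined tag, e.g. 0 = request, 1 = confirm */
    size_t   count;                     /* number of valid entries */
} ts_log_t;

/* Empty the log before a measurement run. */
void ts_log_init(ts_log_t *log)
{
    log->count = 0;
}

/* Store one timestamp. Returns 0 on success, -1 if the buffer is full. */
int ts_log_record(ts_log_t *log, uint8_t event_id, uint32_t now_us)
{
    if (log->count >= TS_LOG_CAPACITY) {
        return -1;
    }
    log->stamp_us[log->count] = now_us;
    log->event_id[log->count] = event_id;
    log->count++;
    return 0;
}
```

In the application, `ts_log_record(&log, 0, vGetMicroSeconds())` would replace the DBG_vPrintf call in the request path, and a loop over `log.stamp_us` after the last confirm would print everything in one go.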

I am also measuring the time between data confirms to figure out whether the PHY goes back to PHY_RX_ON between packets. Apparently, it does not.

I tested sending 4 packets and measured the intervals between them:

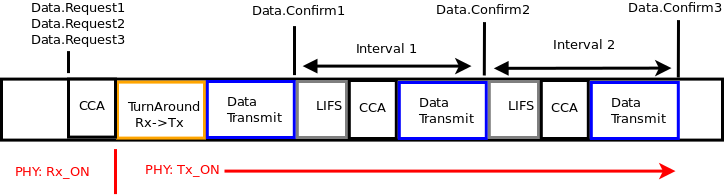

For data transmission in non-beacon mode, with a Backoff Exponent (BE) of 0 (no random backoff delay before CCA), no ACK request, and a payload of 114 bytes (max payload), the timing is:

1 byte = 2 sym

1 sym = 16 us (us = microseconds)

LIFS = 40 sym

114 bytes (data payload) + 13 bytes (MAC header and trailer) + 6 bytes (PHY header) = 133 bytes = 266 sym = 4256 us

Therefore, the interval between data packets of the same payload size should be:

4256 us (data) + 128 us (1 CCA) + 640 us (LIFS) = 5024 us
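The same arithmetic can be written as a short sketch, which makes it easy to repeat for other payload sizes. The constant and function names are hypothetical, the 13-byte MAC overhead is the value assumed in this post, and the interval function always adds LIFS, so it only matches the long-frame case discussed here.

```c
#include <stdint.h>

#define SYMBOL_US          16u /* 1 symbol = 16 us */
#define SYMBOLS_PER_BYTE    2u /* 1 byte = 2 symbols */
#define MAC_OVERHEAD_BYTES 13u /* MAC header and trailer, as assumed in the post */
#define PHY_OVERHEAD_BYTES  6u /* PHY header bytes, as assumed in the post */
#define CCA_SYMBOLS         8u /* one CCA = 128 us */
#define LIFS_SYMBOLS       40u /* long interframe spacing = 640 us */

/* On-air time of one data frame for a given payload size. */
uint32_t frame_airtime_us(uint32_t payload_bytes)
{
    uint32_t total_bytes = payload_bytes + MAC_OVERHEAD_BYTES + PHY_OVERHEAD_BYTES;
    return total_bytes * SYMBOLS_PER_BYTE * SYMBOL_US;
}

/* Expected spacing between back-to-back frames: airtime + one CCA + LIFS.
 * Assumes no random backoff, no ACK and a frame long enough to need LIFS. */
uint32_t packet_interval_us(uint32_t payload_bytes)
{
    return frame_airtime_us(payload_bytes)
         + (CCA_SYMBOLS + LIFS_SYMBOLS) * SYMBOL_US;
}
```

For a 114-byte payload this yields 4256 us of airtime and a 5024 us interval; for the 4-byte payload used in the first test the airtime alone would be 736 us.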

The intervals I measured are slightly above 5024 us, but I think the difference is the overhead you mentioned.

I think the PHY remains in TX_ON after the initial packet and there are no extra turnarounds. Is this assumption correct?

Furthermore, is there a way to know the actual turnaround time (TX->RX and RX->TX) of the JN5169? I know it should not be more than 12 sym (192 us), but that does not mean the value is exactly 12 sym. I also suspect it differs between TX->RX and RX->TX.

Thank you for your time, and sorry for the lengthy post. Please let me know what you think.

Hi Alberto,

> The intervals I measured are slightly above 5024 us, but I think the difference is the overhead you mentioned.
>
> I think the PHY remains in TX_ON after the initial packet and there are no extra turnarounds. Is this assumption correct?

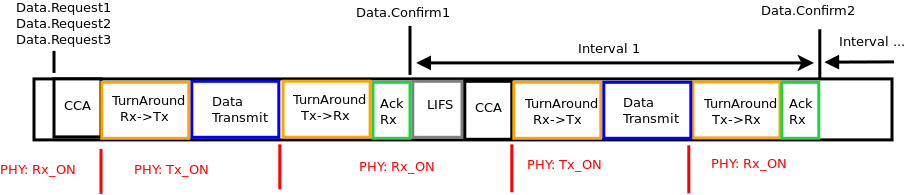

If the transmitter sets the ACK request flag, the PHY stays in RX to wait for the acknowledgment. This turnaround takes around 180 to 204 μs (turnaround time range from the ZB_CSG-ZigBee-IP IEEE 802.15.4 Level Test Specification).

Regards,

Mario

Thank you, Mario. I really appreciate your comments.

Sorry to insist, but as I mentioned, the figure I posted above was for transmission without acknowledgment. So, if I am not mistaken, in this case the PHY remains in TX after the first transmission until the MAC is idle again, correct?

Now, for the case of data transmission with acknowledgment, my mental image is this:

Or at least this should be true, but the NXP data rate calculation document mentions that in real cases the backoff delay overlaps the IFS for energy-saving reasons. What happens if BE = 0 and there is therefore no backoff delay?

In the figure above of data transmission with ACK, and with BE = 0, does the IFS overlap and therefore absorb the CCA and the turnaround (RX->TX) time?

Furthermore, you mention that the turnaround is between 180 and 204 μs. Isn't this above the 192 us that is supposed to be the maximum according to the IEEE 802.15.4-2006 standard?

I would really appreciate it if you could clarify these questions. Thank you for your support.

Sincerely,

Alberto

Hi Alberto,

BE is initialized to the value of macMinBE. So, if macMinBE is set to zero, collision avoidance is disabled during the first iteration of the CSMA-CA algorithm. After each busy CCA, BE is updated as BE = min(BE+1, macMaxBE).
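As a rough illustration of how BE affects the delay, the sketch below follows the IEEE 802.15.4 CSMA-CA rules as I understand them: the random delay is between 0 and 2^BE - 1 unit backoff periods of 20 symbols each, and after a busy CCA the exponent grows by one up to macMaxBE. The function names are hypothetical. Note also that with macMaxCSMABackoffs set to 0, as in the configuration earlier in this thread, the algorithm should report a channel access failure after the first busy CCA, so the incremented BE would never be used.

```c
#include <stdint.h>

#define UNIT_BACKOFF_SYMBOLS 20u /* aUnitBackoffPeriod in symbols */
#define SYMBOL_US            16u /* 1 symbol = 16 us */

/* BE to use after a busy CCA: one higher, capped at macMaxBE. */
uint8_t next_backoff_exponent(uint8_t be, uint8_t mac_max_be)
{
    return (be < mac_max_be) ? (uint8_t)(be + 1u) : mac_max_be;
}

/* Largest possible random delay for a given BE, in microseconds:
 * (2^BE - 1) unit backoff periods. BE = 0 gives no delay at all. */
uint32_t max_backoff_us(uint8_t be)
{
    return ((1u << be) - 1u) * UNIT_BACKOFF_SYMBOLS * SYMBOL_US;
}
```

With BE = 0 the maximum delay is 0 us, which matches the "no random backoff" case measured above; with BE = 3 it would be up to 2240 us.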

Regards,

Mario