How long does an ISELED command take?

Hi,

I am using the ISELED BETA_3.9.0 SDK in combination with SDK RTM 3.0.0 on an S32K144.

I would like to know:

1. What is the formula to calculate the timeout for initializing a strip with N LEDs on it?

2. How long do the digLED_Set_RGB() and digLED_Set_DIM() commands take?

Currently they take over 200 us, which seems too long to me. I read the following in the release notes:

[Compare with the old function (on S32K116, gcc, -O1 optimization):

Flash usage:

Old: 1724 bytes (text + rodata)

New: 432 bytes (text + rodata) -> ~ 3.99x improvement

Run time (DOWNSTREAM):

Old: 21.166ns / iteration

New: 2.144ns / iteration -> ~ 9.86x improvement

Run time (UPSTREAM):

Old: 8.916ns / iteration

New: 2.494ns / iteration -> ~ 3.57x improvement]

Is it only valid for S32K116?

Thank you for your time and help.

Best regards,

Leila

Hello Leila,

1. The INIT sequence duration depends on the number of LEDs (max. 4072) and the propagation delay per LED (the maximum will be defined by Inova but should be approx. 8 μs). The INIT command travels downstream, which takes 4072 × 8 μs, and is processed in every LED (on the order of ns, which can be ignored). After this, the 4072 response frames (50 bits long plus 16 bits of latency) travel upstream, which again adds a propagation delay of 4072 × 8 μs. Due to fabrication tolerances, the maximum bit width is the nominal value (500 ns) plus 30%. The total for 4072 LEDs is approximately 245 ms.

2. All "Set" commands take the same amount of time to transmit. The transmission mode, however, can affect the CPU load during the transmission as follows:
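The arithmetic above can be sketched as a small calculation for a strip of N LEDs. This is only one possible reading of the description, not an official formula: it assumes the response frames are serialized one after another at the worst-case bit width, and the function and parameter names are made up for illustration. With the quoted values this simple model gives roughly 240 ms for 4072 LEDs, in the same range as the approximately 245 ms stated above; the remaining difference is not explained by this model, so a real timeout should include some margin.

```python
def init_time_ms(n_leds, tpd_us=8.0, bit_ns=500.0, tolerance=0.30,
                 frame_bits=50, latency_bits=16):
    """Rough worst-case INIT duration in milliseconds (illustrative model only)."""
    # INIT command propagates downstream through every LED.
    downstream_us = n_leds * tpd_us
    # Responses see the same propagation delay on the way back upstream.
    upstream_us = n_leds * tpd_us
    # Worst-case bit width: nominal value plus fabrication tolerance.
    worst_bit_us = bit_ns * (1.0 + tolerance) / 1000.0
    # Assumption: one response frame per LED, sent back to back.
    responses_us = n_leds * (frame_bits + latency_bits) * worst_bit_us
    return (downstream_us + upstream_us + responses_us) / 1000.0


if __name__ == "__main__":
    for n in (10, 80, 4072):
        print(f"{n:5d} LEDs -> {init_time_ms(n):.3f} ms")
```

In this model the response frames dominate: each one costs about 43 us at the worst-case bit width, compared with 16 us of round-trip propagation delay per LED.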

- Interrupts mode, CRC enabled:

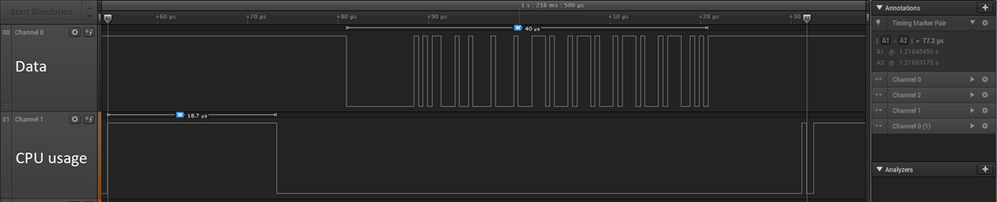

The total time is the interval between the A1 and A2 markers: 68 us. This is the time from the call to digLED_Set_RGB() until digLED_Callback is called with the "TRANSMISSION_COMPLETE" event. The second channel shows the CPU usage during the transmission (frame formatting, CRC calculation, and the transmit interrupts: 18.5 us in total).

- DMA mode single frame, CRC enabled:

The total time is 77 us, with a total CPU load of 18.7 us. DMA mode is not more efficient than interrupts mode for single commands.

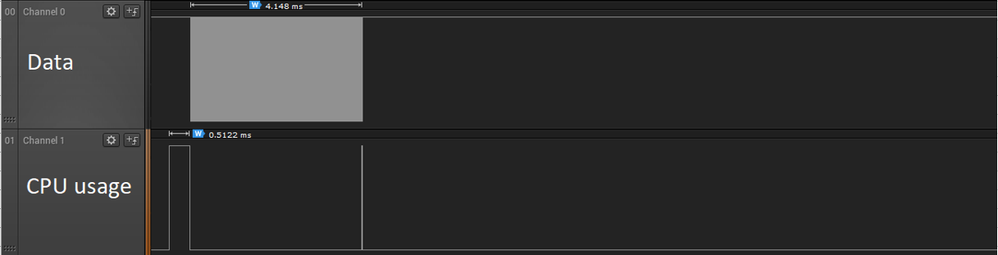

- Block mode DMA, CRC enabled:

This capture shows the block transmission of 80 SET_RGB or SET_DIM commands using a single call to the "digLED_Send_Cmd_Block" function. This is the most efficient way to address every LED in the strip. In this case, all the processing is done before the transmission begins (512 us), after which DMA sends the 80 frames (4.14 ms), so the CPU processing time is about 6.4 us per frame.

The screenshots above were taken on an S32K144 running at 80 MHz with a 40 MHz FlexIO clock and cache enabled.
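To put the three measurements side by side, the following sketch works out the per-frame cost of block mode and compares it with sending the same 80 commands one at a time in interrupts mode. It uses only the figures quoted above; the assumption that single commands could be issued back to back with no gap between them is mine, so the single-command total is a lower bound. All names are illustrative.

```python
# Figures quoted in this reply (S32K144, 80 MHz core, 40 MHz FlexIO clock).
SINGLE_IRQ = {"total_us": 68.0, "cpu_us": 18.5}   # interrupts mode, CRC enabled
SINGLE_DMA = {"total_us": 77.0, "cpu_us": 18.7}   # DMA single frame, CRC enabled
BLOCK_DMA = {"frames": 80, "prep_us": 512.0, "send_us": 4140.0}


def block_cost(block):
    """Total time and per-frame costs for one block transmission."""
    return {
        "total_us": block["prep_us"] + block["send_us"],
        "cpu_per_frame_us": block["prep_us"] / block["frames"],
        "wire_per_frame_us": block["send_us"] / block["frames"],
    }


def repeated_single_cost(single, frames):
    """Lower bound for sending `frames` single commands back to back."""
    return {
        "total_us": single["total_us"] * frames,
        "cpu_us": single["cpu_us"] * frames,
    }


if __name__ == "__main__":
    print("block mode:     ", block_cost(BLOCK_DMA))
    print("80 x interrupts:", repeated_single_cost(SINGLE_IRQ, 80))
    print("80 x single DMA:", repeated_single_cost(SINGLE_DMA, 80))
```

With these numbers, block mode needs 512 us of CPU time for 80 frames, while 80 single commands in interrupts mode need at least 80 × 18.5 us = 1480 us of CPU time.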

The times you are citing from the release notes refer to the CRC calculation time for one frame. They were measured on the S32K116 because it is the least powerful device. Those times are included in the CPU usage shown in the captures above.

Hope this helps.

Best regards,

Dragos