Hi Martin Kovar, Petr Stancik, Stanislav Sliva,

I'm trying to debug the SEMA42 module on the MPC5775K. I'd like one core to communicate with another. Is there any sample code you could share? Thanks.

Regards,

Ron

Hi Ron,

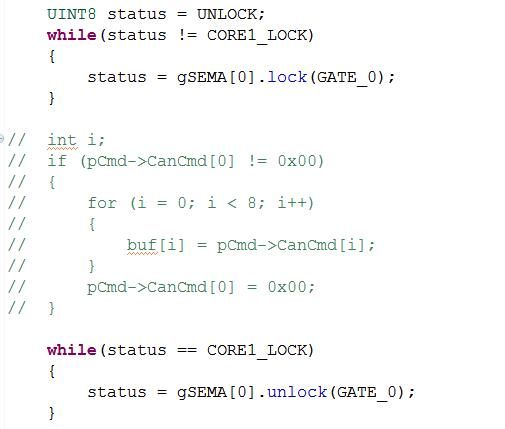

the general (simplified) principle of using semaphores is as follows:

You have two cores, one shared resource (for example, memory) and one gate, which protects the shared resource. If a core wants to access the shared resource, it must first check whether the gate is unlocked. If it is, the core locks the gate and uses the shared resource (this is called the critical section). When the core leaves the critical section (it no longer needs the shared resource), it must unlock the gate (otherwise a deadlock occurs), and any other core can then use it.

If the gate is locked, the core has to wait until the other core unlocks it. A gate can be unlocked only by the core that locked it.
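To make the lock/unlock rule concrete, here is a minimal host-side C model of a single gate. It is a sketch, not SEMA42 driver code: it assumes the SEMA42 convention that a gate reads 0 when unlocked and `core_id + 1` when locked by that core, and that the hardware silently ignores writes that break the rules. The names `sema42_gate_t`, `gate_hw_write`, `gate_try_lock`, `gate_lock` and `gate_unlock` are hypothetical; on the device, the write and read-back would target the SEMA42 gate register instead of a struct field.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical host-side model of one SEMA42 gate register.
 * Assumption: 0 = unlocked, (core_id + 1) = locked by that core. */
typedef struct {
    volatile uint8_t value;
} sema42_gate_t;

/* Emulates the assumed hardware write rules:
 * - a lock write (core_id + 1) takes effect only if the gate is free;
 * - an unlock write (0) takes effect only if it comes from the owner;
 * - every other write is ignored. */
static void gate_hw_write(sema42_gate_t *g, unsigned core_id, uint8_t v)
{
    assert(core_id < 255u);                /* owner tag must fit in 8 bits */
    if (v == (uint8_t)(core_id + 1u) && g->value == 0u) {
        g->value = v;                      /* gate acquired */
    } else if (v == 0u && g->value == (uint8_t)(core_id + 1u)) {
        g->value = 0u;                     /* owner releases the gate */
    }
}

/* Write our tag, then read back: we own the gate only if our tag stuck. */
static bool gate_try_lock(sema42_gate_t *g, unsigned core_id)
{
    gate_hw_write(g, core_id, (uint8_t)(core_id + 1u));
    return g->value == (uint8_t)(core_id + 1u);
}

/* Spin until the gate is ours (on hardware, the other core unlocks it). */
static void gate_lock(sema42_gate_t *g, unsigned core_id)
{
    while (!gate_try_lock(g, core_id)) {
        /* busy-wait */
    }
}

/* Release the gate; ignored unless core_id is the current owner. */
static void gate_unlock(sema42_gate_t *g, unsigned core_id)
{
    gate_hw_write(g, core_id, 0u);
}
```

In this model, an unlock attempt by core 1 on a gate held by core 0 leaves the gate unchanged, which is the "only the locking core can unlock" rule described above.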

Now let's look at the example.

1) What do you mean by status? Which core?

The status variable holds the status of the gate (only gate 0 is used, by both cores). If the gate is unlocked, a core can lock it and execute its critical section (access the shared resource).

2) status = Get_Gate_Status(GATE0 or GATE1)?

Only one gate is used in this example, because there is only one shared resource, so the answer is GATE0. This also answers your next question.

3) Read shared memory here?

Yes. After you lock the gate, you are in the critical section and can use (read/write) the shared resource. Just keep in mind which combinations of operations require mutual exclusion:

- read/read: no mutual exclusion needed; multiple cores can read the shared resource at the same time
- write/read: mutual exclusion
- write/write: mutual exclusion

I don't fully understand the use case you describe, but I suppose you have an array called CanCMD[8]. If core0 wants to write to this array, it should lock gate0, write to the array, and then unlock the gate. Core1 has to wait until gate0 is unlocked. Then core1 locks the gate, reads the data from the array, and unlocks the gate.

Check in the debugger whether the memcpy function copies the correct data to the correct address, and whether pcmd points to the correct address.
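The CanCMD[8] exchange described above could look roughly like the sketch below. It is a host-side model, not SEMA42 driver code: it assumes the SEMA42 convention that gate 0 reads 0 when free and `core_id + 1` when locked, and the names `gate0`, `shared_can_cmd`, `core0_send` and `core1_receive` are hypothetical. On the device, the gate would be a SEMA42 gate register and the buffer would sit in shared RAM that is not cached.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define CAN_CMD_LEN 8u

/* Hypothetical shared state: gate 0 protects the CanCMD buffer. */
static volatile uint8_t gate0;              /* 0 = free, id + 1 = owner */
static uint8_t shared_can_cmd[CAN_CMD_LEN]; /* the shared resource */

static bool gate0_try_lock(unsigned core_id)
{
    if (gate0 == 0u) {          /* SEMA42 does this check-and-set atomically */
        gate0 = (uint8_t)(core_id + 1u);
    }
    return gate0 == (uint8_t)(core_id + 1u);
}

static void gate0_unlock(unsigned core_id)
{
    if (gate0 == (uint8_t)(core_id + 1u)) { /* only the owner may unlock */
        gate0 = 0u;
    }
}

/* Core 0: lock gate 0, copy the command in with memcpy, unlock. */
static bool core0_send(const uint8_t cmd[CAN_CMD_LEN])
{
    assert(cmd != NULL);
    if (!gate0_try_lock(0u)) {
        return false;                       /* busy: caller retries later */
    }
    memcpy(shared_can_cmd, cmd, CAN_CMD_LEN);
    gate0_unlock(0u);
    return true;
}

/* Core 1: lock gate 0, copy the command out with memcpy, unlock. */
static bool core1_receive(uint8_t out[CAN_CMD_LEN])
{
    assert(out != NULL);
    if (!gate0_try_lock(1u)) {
        return false;                       /* core 0 still holds the gate */
    }
    memcpy(out, shared_can_cmd, CAN_CMD_LEN);
    gate0_unlock(1u);
    return true;
}
```

Returning `false` instead of spinning keeps the sketch testable on a host; on the target, the caller would typically retry until the gate is free, as described above.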

Regards,

Martin

Hi Ron,

The problem you describe is caused by the cache. You should either disable the cache or use the SMPU to prevent the shared RAM from being cached.

Regards,

Martin

How do I disable the data cache? I tried calling this function, but I got a lot of errors.

Hi Ron,

in the startup files, comment out the code that enables the cache.

Regards,

Martin

Martin,

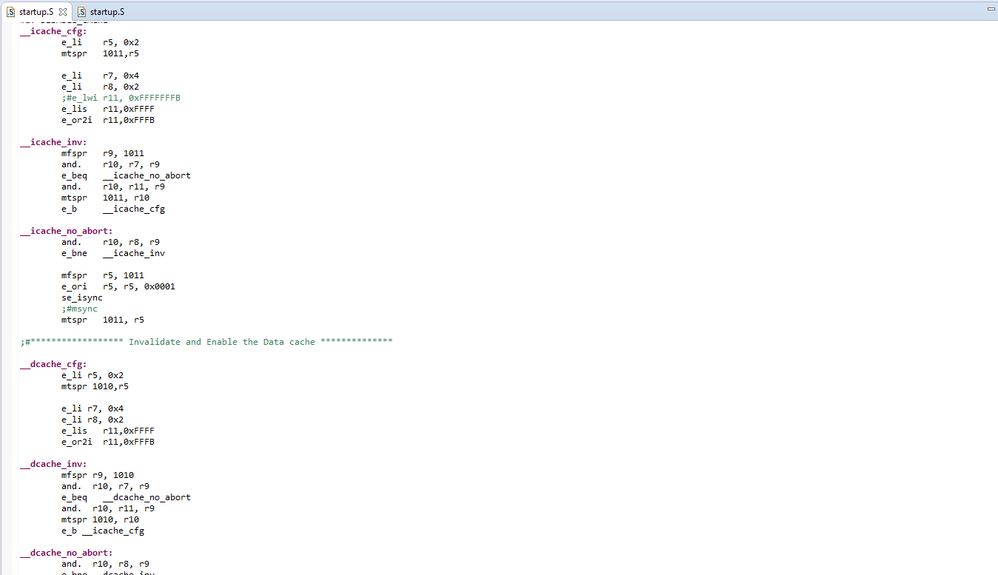

I modified these three startup.s files, but the problem still exists. Is this the right way to comment out the cache-related lines?

Regards,

Ron

Hi Ron,

yes, the way you commented it out is correct, and the cache is now disabled. However, I am worried about the debugger. I tried to debug your project with the PEMicro debug probe and the S32DS debugger, but it was nearly impossible.

When I tried a simpler project (for example, the project I shared here), it worked correctly. Finally, I debugged your project with a Lauterbach debugger, and it worked correctly once the cache was disabled.

Regards,

Martin

Hi, Martin

I met the same problem as ronliu mentioned.

I've tried to disable dcache, and it seems really has effect. Core1 can accesss the shared variable.

But after disable dcache, I found the same operation will take more execute time.

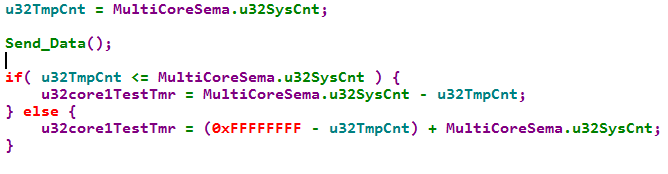

For example, I use below code to test the execute time of function Send_Data(),

when dcache enable, execute time is 27ms.

when dcache disable, execute time is 47ms.

My question is, how can I use the shared ram and keep the execute time at the same time?

Hi,

unfortunately, that is not possible. If memory is shared between cores, it should not be cached. In my view, the best approach is to keep the cache enabled and disable it only for the small region of memory that is shared between the cores. This keeps execution fast for most of your code; only accesses to the shared memory are affected.

If you have any other questions, please feel free to write back.

Regards,

Martin

Hi Martin

Could you show how to keep the cache enabled and disable it only for the shared memory?

Hello Marek,

please see the example below. It was not created for the MPC5775K, but the SMPU module is the same.

https://community.nxp.com/docs/DOC-328346

Regards,

Martin

Thanks for the example, Martin. In fact, I am working with the MPC5748G.

I placed the following code in main():

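```c
SMPU_1.RGD[0].WORD0.R = 0x40000000;      /* region start address */
SMPU_1.RGD[0].WORD1.R = 0x40000FFF;      /* region end address (inclusive) */
SMPU_1.RGD[0].WORD2.FMT0.R = 0xff00fc00; /* bus-master access rights (format 0) */
SMPU_1.RGD[0].WORD3.B.CI = 1;            /* cache-inhibit this region */
SMPU_1.RGD[0].WORD5.B.VLD = 1;           /* mark the region descriptor valid */
```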

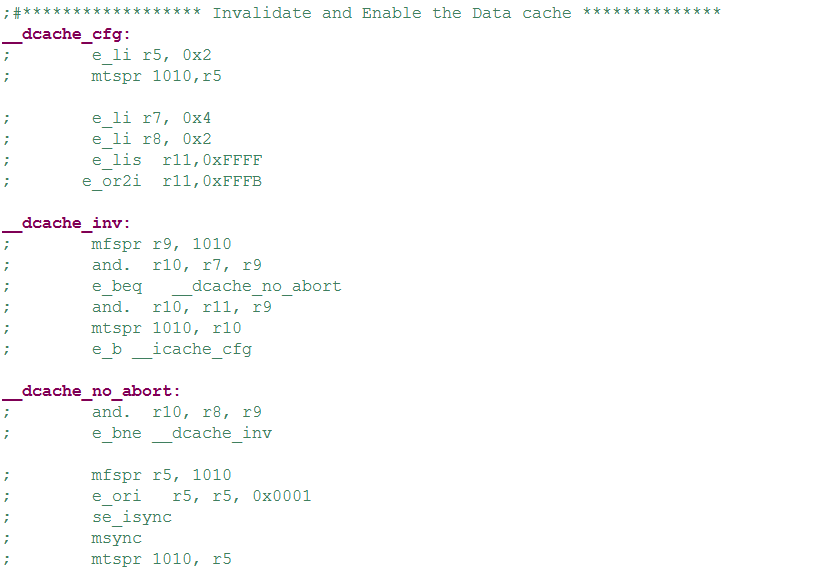

I kept the default code in Startup.S, as below:

```asm
__icache_cfg:
    e_li r5, 0x2
    mtspr 1011,r5
    e_li r7, 0x4
    e_li r8, 0x2
;#e_lwi r11, 0xFFFFFFFB
    e_lis r11,0xFFFF
    e_or2i r11,0xFFFB
__icache_inv:
    mfspr r9, 1011
    and. r10, r7, r9
    e_beq __icache_no_abort
    and. r10, r11, r9
    mtspr 1011, r10
    e_b __icache_cfg
__icache_no_abort:
    and. r10, r8, r9
    e_bne __icache_inv
    mfspr r5, 1011
    e_ori r5, r5, 0x0001
    se_isync
;#msync
    mtspr 1011, r5
#endif

#if defined(D_CACHE)
;#****************** Invalidate and Enable the Data cache **************
__dcache_cfg:
    e_li r5, 0x2
    mtspr 1010,r5
    e_li r7, 0x4
    e_li r8, 0x2
    e_lis r11,0xFFFF
    e_or2i r11,0xFFFB
__dcache_inv:
    mfspr r9, 1010
    and. r10, r7, r9
    e_beq __dcache_no_abort
    and. r10, r11, r9
    mtspr 1010, r10
    e_b __icache_cfg
__dcache_no_abort:
    and. r10, r8, r9
    e_bne __dcache_inv
    mfspr r5, 1010
    e_ori r5, r5, 0x0001
    se_isync
    msync
    mtspr 1010, r5
```

Unfortunately, the cache is still active for the shared memory range (0x40000000-0x40000FFF).

Everything works perfectly when I remove the cache initialization from Startup.S, but in that case I don't need to define a non-cached range at all.

What am I doing wrong?

I would like to keep the cache enabled by default, but disable it for a particular range of memory.

Best regards

Marek

Hello Marek,

if you enable the cache first and then configure the SMPU, you must invalidate the cache. You can do this by writing 1 to the DCINV bit in L1CSR0 for the data cache and 1 to the ICINV bit in L1CSR1 for the instruction cache.

Alternatively, configure the SMPU first and then enable the cache.
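The data-cache part of the Startup.S excerpt quoted earlier in the thread can be transliterated to C as a sketch of the invalidate-then-enable sequence. The bit values L1CSR0[DCE] = 0x1, DCINV = 0x2 and DCABT = 0x4 are read off that assembly (`e_ori r5, r5, 0x0001`, `e_li r5, 0x2`, `e_li r7, 0x4`). The SPR is reached through hypothetical `spr_read_fn`/`spr_write_fn` callbacks so the logic can run on a host against the included mock; on the target they would wrap `mfspr`/`mtspr` on SPR 1010. One deliberate difference: on an abort, the assembly branches back to `__icache_cfg`, while this sketch simply restarts the data-cache invalidation. Calling it after the SMPU regions are configured matches the order suggested above.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* L1CSR0 (SPR 1010) bits, as used by the Startup.S excerpt. */
#define L1CSR0_DCE   0x00000001u  /* data cache enable */
#define L1CSR0_DCINV 0x00000002u  /* invalidate; hardware clears it when done */
#define L1CSR0_DCABT 0x00000004u  /* invalidation was aborted */

/* Hypothetical SPR accessors; on target, wrap mfspr/mtspr 1010. */
typedef uint32_t (*spr_read_fn)(void);
typedef void (*spr_write_fn)(uint32_t value);

/* Invalidate the data cache, retrying on abort, then optionally enable it. */
static void dcache_invalidate(spr_read_fn rd, spr_write_fn wr, int enable)
{
    uint32_t csr;

    assert(rd != NULL && wr != NULL);
    for (;;) {
        wr(L1CSR0_DCINV);             /* start invalidation (also clears DCE) */
        do {
            csr = rd();
            if ((csr & L1CSR0_DCABT) != 0u) {
                break;                /* aborted: handled below */
            }
        } while ((csr & L1CSR0_DCINV) != 0u);  /* wait for DCINV to clear */

        if ((csr & L1CSR0_DCABT) == 0u) {
            break;                    /* invalidation completed */
        }
        wr(csr & ~L1CSR0_DCABT);      /* clear the abort flag and retry */
    }

    if (enable) {
        wr(rd() | L1CSR0_DCE);        /* turn the data cache on */
    }
}

/* Host-side mock of L1CSR0 for exercising the sequence off-target. */
static uint32_t mock_l1csr0;      /* simulated register contents */
static unsigned mock_reads_left;  /* reads until DCINV self-clears */
static unsigned mock_writes;      /* number of SPR writes issued */
static int mock_abort_pending;    /* make the next invalidation abort */

static uint32_t mock_read(void)
{
    if ((mock_l1csr0 & L1CSR0_DCINV) != 0u) {
        if (mock_reads_left == 0u) {
            mock_l1csr0 &= ~L1CSR0_DCINV;  /* invalidation finished */
        } else {
            mock_reads_left--;
        }
    }
    return mock_l1csr0;
}

static void mock_write(uint32_t v)
{
    mock_writes++;
    mock_reads_left = 2u;
    if ((v & L1CSR0_DCINV) != 0u && mock_abort_pending) {
        mock_abort_pending = 0;
        v = (v & ~L1CSR0_DCINV) | L1CSR0_DCABT;  /* simulate an abort */
    }
    mock_l1csr0 = v;
}
```

With one simulated abort, the sequence issues four writes: the aborted invalidation, clearing DCABT, the retried invalidation, and finally setting DCE.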

Hope it helps.

Regards,

Martin

Thanks Martin, it works ☺.

Regards

Marek

Hi Martin,

I will try your method; it seems feasible. Thank you very much for your advice!

I still have one doubt: if I download the firmware instead of using the S32 debugger, will it run correctly?

Hi Ron,

I don't have any experience with the GHS IDE, so I can't give you any relevant information. I also don't know whether the PEMicro debug probe can be used with the GHS IDE.

As for your second question, I'm not sure what you mean by firmware.

However, there is one option that may suit you. S32DS v1.1 should be released at the end of June, and I hope it will fix some debugger bugs and make the debugger more usable for complex projects.

The best way to debug a more complex project is a Lauterbach debugger, but I know it is expensive.

Regards,

Martin

Martin,

Thank you all the same. Do you know whether it is possible to debug a multi-core MPC5775K project in the GHS IDE?

Regards,

Ron

Thanks Martin,

I found that it runs correctly if I only lock and unlock gate 0, as the picture shows.

But if I try to read or write data in the shared memory, the program jumps to IVOR1_Vector(). So I suspect that something is wrong with the linker file configuration.

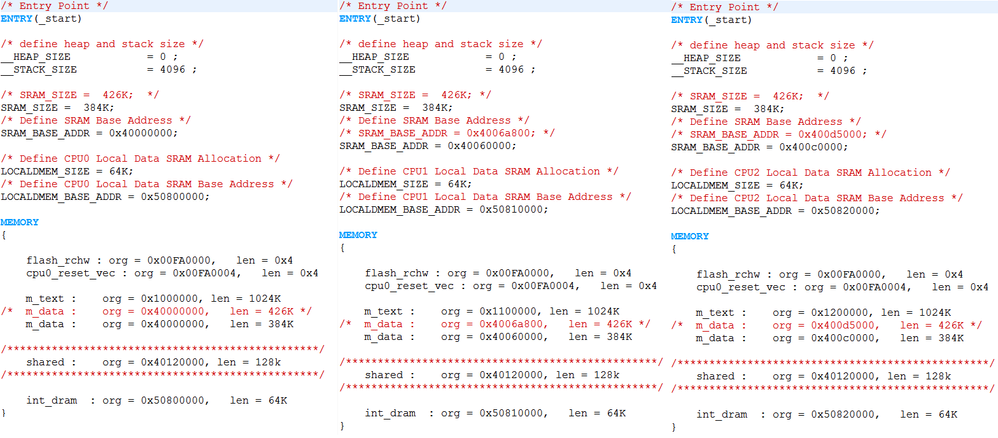

1. This is the mem.ld configuration.

In my project, each core gets 128K * 3 = 384K of RAM. The remaining 128K is used as shared memory.

CORE0 0x40000000 ~ 0x4005FFFF

CORE1 0x40060000 ~ 0x400BFFFF

CORE2 0x400C0000 ~ 0x4011FFFF

SHARED 0x40120000 ~ 0x4013FFFF

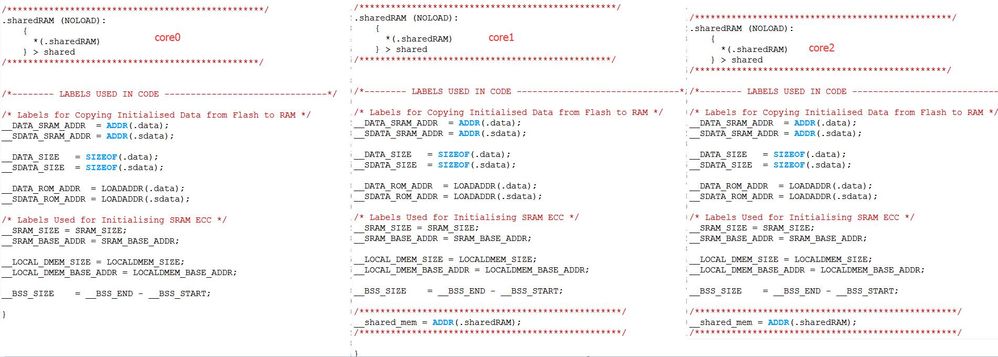

2. I added the parts between the red lines to every sections.ld.

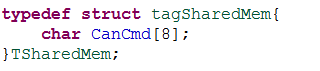

3. The shared memory structure looks like this. It contains 8 bytes. The shared memory region is 128K in total, but I only use 8 bytes for now; I don't think that matters.

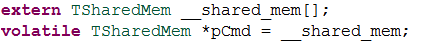
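The memory map listed in item 1 can be checked at compile time, which catches gaps or overlaps between regions before they show up as runtime faults. The addresses below are copied from that map; the macro names and the `in_shared_region` helper are hypothetical, and this is only a consistency check on the numbers, not a replacement for the linker script.

```c
#include <assert.h>
#include <stdint.h>

/* Regions from the mem.ld map above (end addresses are inclusive). */
#define CORE0_START  0x40000000u
#define CORE0_END    0x4005FFFFu
#define CORE1_START  0x40060000u
#define CORE1_END    0x400BFFFFu
#define CORE2_START  0x400C0000u
#define CORE2_END    0x4011FFFFu
#define SHARED_START 0x40120000u
#define SHARED_END   0x4013FFFFu

#define KIB 1024u
#define REGION_SIZE(start, end) ((end) - (start) + 1u)

/* Each private region is 3 x 128 KiB = 384 KiB; the shared one is 128 KiB. */
static_assert(REGION_SIZE(CORE0_START, CORE0_END) == 384u * KIB, "core0 size");
static_assert(REGION_SIZE(CORE1_START, CORE1_END) == 384u * KIB, "core1 size");
static_assert(REGION_SIZE(CORE2_START, CORE2_END) == 384u * KIB, "core2 size");
static_assert(REGION_SIZE(SHARED_START, SHARED_END) == 128u * KIB, "shared size");

/* Regions must be back to back: no gaps and no overlaps. */
static_assert(CORE0_END + 1u == CORE1_START, "core0/core1 boundary");
static_assert(CORE1_END + 1u == CORE2_START, "core1/core2 boundary");
static_assert(CORE2_END + 1u == SHARED_START, "core2/shared boundary");

/* Is an address inside the shared window (the one to keep non-cached)? */
static int in_shared_region(uint32_t addr)
{
    return addr >= SHARED_START && addr <= SHARED_END;
}
```

A helper like `in_shared_region` can also be used in a debug build to check that pointers such as the one used for `_shared_mem[]` really point into the shared window.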

In the first core, I create a new structure in the shared memory and write some new data to 'gCANCmd'.

Then I declare the shared memory in the second core and read the data from '_shared_mem[]'.

Importantly, I do lock and unlock GATE_0 around every read and write.

So why does it go wrong when I use the shared memory? Any ideas?

Regards,

Ron

Hi Ron,

from the screenshots you sent, the linker file seems to be OK. Could you share your project here? I need to check the whole code.

Regards,

Martin