K10 ADC Incorrect Voltage Readings

I've noticed that voltage readings are off by almost half a volt when reading a 0 V channel.

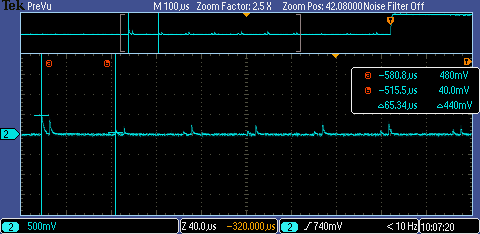

First the channel was set to 8 V and an ADC measurement was made. Then the channel was set to 0 V, allowed to reach steady state, and 10 ADC conversions were made. Both the ADC readings and the oscilloscope show that the 0 V reading is off by ~500 mV on the first read and by ~50 mV on the remaining reads.

Each pair of "spikes" corresponds directly to an ADC read.

It would make sense for the first reading to be off, perhaps due to internal ADC capacitance, but that doesn't quite explain why the subsequent reads are always at ~40 mV. Bottom line: it never reads the true 0 V as it should.

1. The ADC calibration offset is 0 and the ADC calibration gain is ~1.022.

2. The ADC is set to 1 sample in high-speed conversion mode with a conversion time of 6.25 µs.

3. The 0 V channel is connected to a 100 kOhm pull-down resistor.

   - It is also connected in parallel to a 392 kOhm resistor with a MOSFET in the off state to the 8 V channel.

Hello Dane,

These voltage disturbances (voltage drops/peaks) at the ADC input can be reduced by choosing the correct conversion time and external RC components. I recommend checking the following application note, which provides guidelines for achieving optimal performance in these circumstances.

https://www.nxp.com/docs/en/application-note/AN4373.pdf

Best regards,

Felipe

That application note was exactly our issue. Thanks for the help!

Increasing the conversion time solved the issue.
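
For anyone who wants a quick sanity check of why a longer conversion time helps, here is a minimal sketch that estimates the sample time needed for the ADC's internal sample capacitor to settle to within 1/2 LSB through a high source resistance. It uses a simple single-pole RC model. The function name `min_sample_time_s` and the ADC input values in the usage note (5 kOhm input resistance, 10 pF input capacitance, 16-bit resolution) are illustrative assumptions, not figures from this thread; take the real ones from the device data sheet and AN4373.

```c
#include <math.h>

/*
 * Rough estimate of the minimum sampling time (in seconds) for the ADC
 * sample capacitor to settle to within 1/2 LSB of the input voltage.
 *
 * Single-pole RC model: the remaining error decays as exp(-t / tau), and
 * settling to 1/2 LSB of an N-bit result needs t >= tau * ln(2^(N+1)).
 *
 * r_source_ohm    external source resistance seen by the ADC pin
 * r_adin_ohm      ADC internal input resistance (assumed, see data sheet)
 * c_adin_f        ADC internal sample capacitance (assumed, see data sheet)
 * resolution_bits ADC resolution N
 */
double min_sample_time_s(double r_source_ohm, double r_adin_ohm,
                         double c_adin_f, unsigned resolution_bits)
{
    double tau = (r_source_ohm + r_adin_ohm) * c_adin_f;
    return tau * log(2.0) * (double)(resolution_bits + 1u);
}
```

Calling `min_sample_time_s(100e3, 5e3, 10e-12, 16)`, that is, treating the 100 kOhm pull-down as the dominant source resistance, gives a settling time longer than the 6.25 µs conversion time from the original post. Under those assumptions the sample capacitor would still hold some charge from the previous 8 V conversion, which is consistent with increasing the conversion time fixing the readings. An external capacitor at the pin, as discussed in the application note, is the other usual lever.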