
SD interface driver sets incorrect SD clock


I was looking at the SD bus signals on a scope and I noticed the clock speeds were all WAY off the mark.

- Set 400 kHz, get ~118 kHz. Cards don't work. (This must be why the high-level driver enumerates at 20 MHz.)

- Set 20 MHz, get 16.67 MHz. Usually works, but it's slower than it should be.

- Set 50 MHz, get 33.33 MHz. Usually not tolerated.

This looks suspiciously like a divisor being stepped up one notch further than it should be. Going through the SDIF driver, I found that this was exactly the culprit. Look at the function Chip_SDIF_SetClock(); this is how it computes the divisor:

`div = ((clk_rate / speed) + 2) >> 1;`

This is wrong. It should be `+ 1`. Once that's corrected, I get the expected clocks and everything works perfectly. All cards enumerate, high-speed mode works, etc.

This makes your SD controller look much worse than it actually is! When it's properly configured, I can run every SD card I own in high-speed mode and get the full 25 MB/s the controller is capable of, from ancient SD to SDHC to a brand-new SDXC card. The SD controller is really a great piece of work, but I can't say the same for the drivers.
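As a rough check of the 25 MB/s figure, assuming the standard 4-bit SD data bus transferring one bit per data line per clock at the 50 MHz high-speed limit:

$$
50\ \text{MHz} \times 4\ \text{bits} \div 8\ \tfrac{\text{bits}}{\text{byte}} = 25\ \text{MB/s}
$$

With a 51 MHz card clock the same arithmetic gives a theoretical ceiling of 25.5 MB/s.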


Hi Lee Coakley,

Thanks a lot for sharing this information.

Have you also checked whether clk_rate is correct on your side?

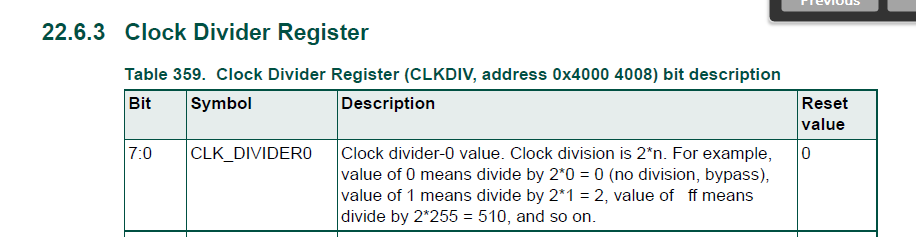

According to the user manual, `div = ((clk_rate / speed) + 2) >> 1;` is correct:

clk_rate / speed = (div * 2 - 2) = (div - 1) * 2

So I think we also need to check whether clk_rate is correct.

Could you help check the following points?

- Set 400 kHz, get ~118 kHz. Cards don't work. (Must be why the high-level driver enumerates at 20 MHz)

- Set 20 MHz, get 16.67 MHz. Usually works but it's slower than it should be.

- Set 50 MHz, get 33.33 MHz. Usually not tolerated.

For each of the 3 cases above, please give me the pSDMMC->CLKDIV value obtained with `div = ((clk_rate / speed) + 2) >> 1;` and with `div = ((clk_rate / speed) + 1) >> 1;`.

Please also share the code that calls Chip_SDIF_SetClock(LPC_SDMMC_T *pSDMMC, uint32_t clk_rate, uint32_t speed), especially the clk_rate and speed values passed in.

Have a great day,

Kerry


Hi Kerry, I gathered the info.

The clock is enabled in Chip_SDIF_Init():

`Chip_Clock_EnableOpts(CLK_MX_SDIO, true, true, 1);`

clk_rate is read using this function, which returns 204 MHz:

`Chip_Clock_GetBaseClocktHz(CLK_BASE_SDIO)`

It is used in this context:

`Chip_SDIF_SetClock(pSDMMC, Chip_Clock_GetBaseClocktHz(CLK_BASE_SDIO), g_card_info->card_info.speed);`

I verified that the speed parameter makes sense. Console output:

`speed = 400000`

`speed = 25000000`

`speed = 50000000`

With the original computation (where I observe the wrong frequencies), the divisors are:

- 400 kHz: div = 256

- 20 MHz: div = 6

- 25 MHz: div = 5

- 50 MHz: div = 3

With my change, each one is one less:

- 400 kHz: div = 255

- 20 MHz: div = 5

- 25 MHz: div = 4

- 50 MHz: div = 2

I also confirmed on the scope that these are the frequencies actually being output.

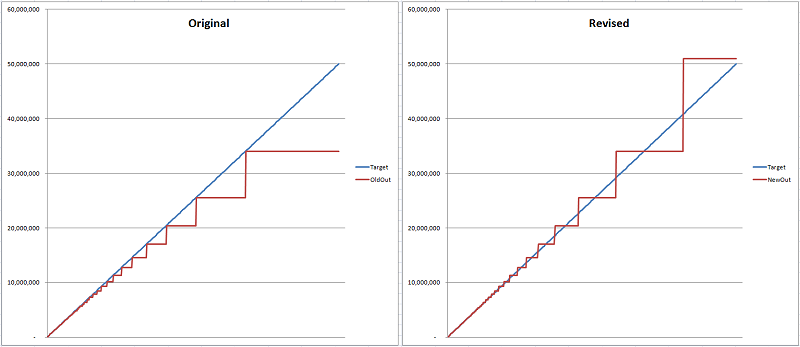

Plugging these divisors into the formula from the reference manual (card clock = clk_rate / (2 * div)), my formula gives the better answer:

Original:

204,000,000 / (2 * 256) = 398,438 (400 kHz target; can't actually be set, since 256 doesn't fit in the 8-bit divider field, so I guess it was really 204 MHz)

204,000,000 / (2 * 6) = 17,000,000 (20 MHz target, 15% error)

204,000,000 / (2 * 5) = 20,400,000 (25 MHz target, 18% error)

204,000,000 / (2 * 3) = 34,000,000 (50 MHz target, 32% error)

Revised:

204,000,000 / (2 * 255) = 400,000 (400 kHz target, 0% error)

204,000,000 / (2 * 5) = 20,400,000 (20 MHz target, 2% error)

204,000,000 / (2 * 4) = 25,500,000 (25 MHz target, 2% error)

204,000,000 / (2 * 2) = 51,000,000 (50 MHz target, 2% error)

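Adding 1 picks the nearest available divisor, giving the best match. Adding 2 always rounds the divisor up, so the output always lands below the requested clock, and whenever clk_rate / speed (after integer division) is even, as in all four cases here, it lands one full divider step past the nearest one.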
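The rounding behaviour can be written out using the card-clock relation from the calculations above, $f_{out} = f_{clk} / (2n)$, where $f_{clk}$ is clk_rate, $f_{req}$ is speed, $n$ is the CLKDIV value, and $r = \lfloor f_{clk}/f_{req} \rfloor$ is the driver's integer division:

$$
\begin{aligned}
\text{original: } n &= \left\lfloor \tfrac{r+2}{2} \right\rfloor = \left\lfloor \tfrac{r}{2} \right\rfloor + 1
  \;\Rightarrow\; 2n \ge r+1 > \tfrac{f_{clk}}{f_{req}}
  \;\Rightarrow\; f_{out} < f_{req} \\
\text{revised: } n &= \left\lfloor \tfrac{r+1}{2} \right\rfloor = \left\lceil \tfrac{r}{2} \right\rceil
  \;\Rightarrow\; 2n = \begin{cases} r & r \text{ even} \\ r+1 & r \text{ odd} \end{cases}
\end{aligned}
$$

Since $f_{clk}/f_{req}$ lies in $[r, r+1)$, the revised $2n$ is the even divide ratio closest to it. The two formulas agree when $r$ is odd and differ only when $r$ is even, where the original picks $r+2$. The trade-off is that the revised formula can slightly exceed the requested clock (51 MHz for a 50 MHz request here), whereas the original never does.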

Here's a plot in 100 kHz increments, with blue as the target and red as the actual output:

Swept over the range, the average error is halved in the revised version.