- NXP Forums

- Product Forums

- General Purpose MicrocontrollersGeneral Purpose Microcontrollers

- i.MX Forumsi.MX Forums

- QorIQ Processing PlatformsQorIQ Processing Platforms

- Identification and SecurityIdentification and Security

- Power ManagementPower Management

- MCX Microcontrollers

- S32G

- S32K

- S32V

- MPC5xxx

- Other NXP Products

- Wireless Connectivity

- S12 / MagniV Microcontrollers

- Powertrain and Electrification Analog Drivers

- Sensors

- Vybrid Processors

- Digital Signal Controllers

- 8-bit Microcontrollers

- ColdFire/68K Microcontrollers and Processors

- PowerQUICC Processors

- OSBDM and TBDML

-

- Solution Forums

- Software Forums

- MCUXpresso Software and ToolsMCUXpresso Software and Tools

- CodeWarriorCodeWarrior

- MQX Software SolutionsMQX Software Solutions

- Model-Based Design Toolbox (MBDT)Model-Based Design Toolbox (MBDT)

- FreeMASTER

- eIQ Machine Learning Software

- Embedded Software and Tools Clinic

- S32 SDK

- S32 Design Studio

- Vigiles

- GUI Guider

- Zephyr Project

- Voice Technology

- Application Software Packs

- Secure Provisioning SDK (SPSDK)

- Processor Expert Software

-

- Topics

- Mobile Robotics - Drones and RoversMobile Robotics - Drones and Rovers

- NXP Training ContentNXP Training Content

- University ProgramsUniversity Programs

- Rapid IoT

- NXP Designs

- SafeAssure-Community

- OSS Security & Maintenance

- Using Our Community

-

- Cloud Lab Forums

-

- Home

- :

- i.MX Forums

- :

- i.MX RT Knowledge Base

- :

- Create a eIQ (TensorFlow Lite library) demo for i.MX RT6xx

Create a eIQ (TensorFlow Lite library) demo for i.MX RT6xx

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Create a eIQ (TensorFlow Lite library) demo for i.MX RT6xx

Create a eIQ (TensorFlow Lite library) demo for i.MX RT6xx

i.MX RT6xx

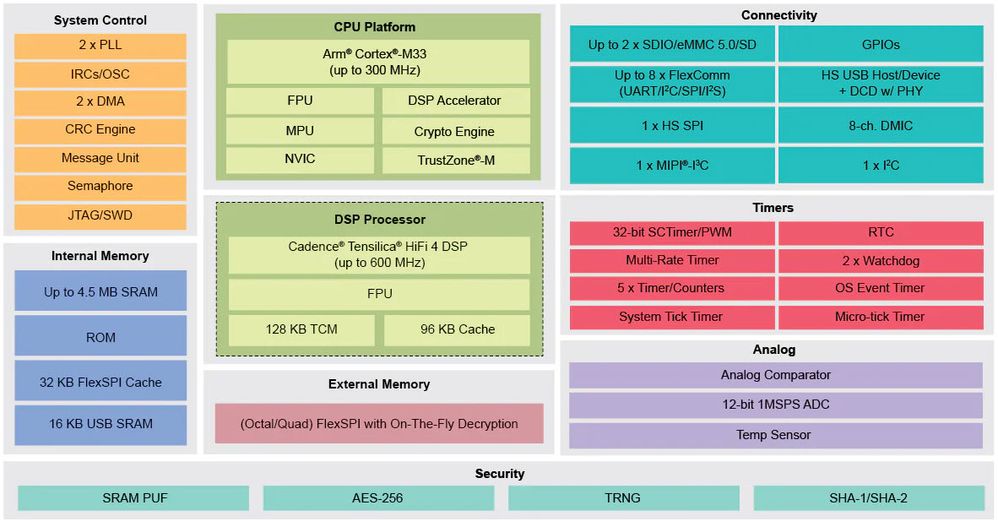

The RT6xx is a crossover MCU family is a breakthrough product combining the best of MCU and DSP functionality for ultra-low power secure Machine Learning (ML) / Artificial Intelligence (AI) edge processing, performance-intensive far-field voice and immersive 3D audio playback applications. Fig 1 is the block diagram for the i.MX RT600.

It consists of a Cortex-M33 core that runs up to 300 MHz with 32KB FlexSPI cache and an optional HiFi4 DSP that runs up to 600MHz with 96KB DSP cache and 128KB DSP TCM. It also contains a cryptography engine and DSP/Math accelerator in the PowerQuad co-processor. The device has 4.5MB on-chip SRAM. Key features include the rich audio peripherals, the high-speed USB with PHY and the advanced on-chip security. There is a Flexcomm peripheral that supports the configuration of numerous UARTs, SPI, I2C, I2S, etc.

Fig 1

Create a eIQ (TensorFlow Lite library) demo

In the latest version of SDK for the i.MX RT600, it still doesn't contain the demos about the Machine Learning (ML) / Artificial Intelligence (AI), so it needs the developers to create this kind of demo by themself.

To implement it, port the eIQ demos cross from i.MX RT1050/1060 to i.MX RT685 is the quickest way. The below presents the steps of creating a eIQ (TensorFlow Lite library) demo.

Greate a new C++ project

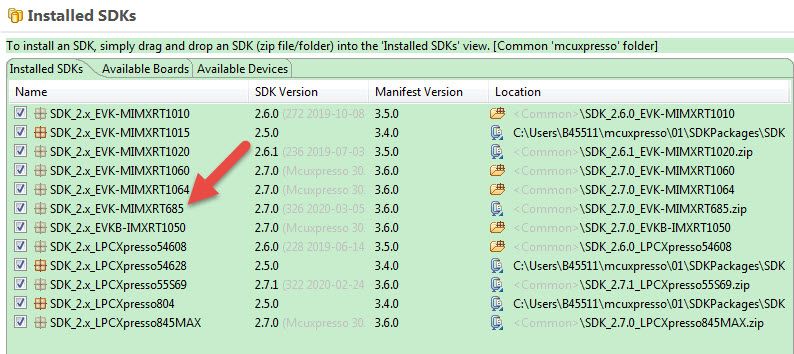

- Install SDK library

Fig 2

- Create a new C++ project using installed SDK Part

In the MCUXpresso IDE User Guide, Chapter 5 Creating New Projects using installed SDK Part Support presents how to create a new project, please refer to it for details

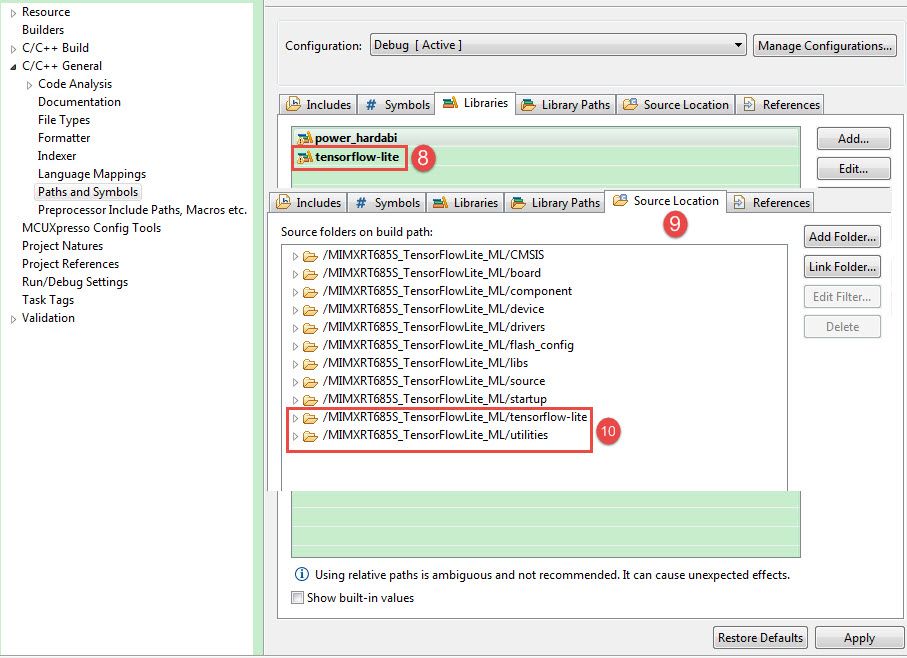

Porting tensorflow-lite

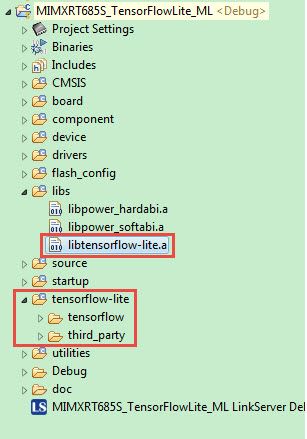

- Copy the tensorflow-lite library to the target project

Copy the TensorFlow-lite library corresponding files to the target project

Fig 3

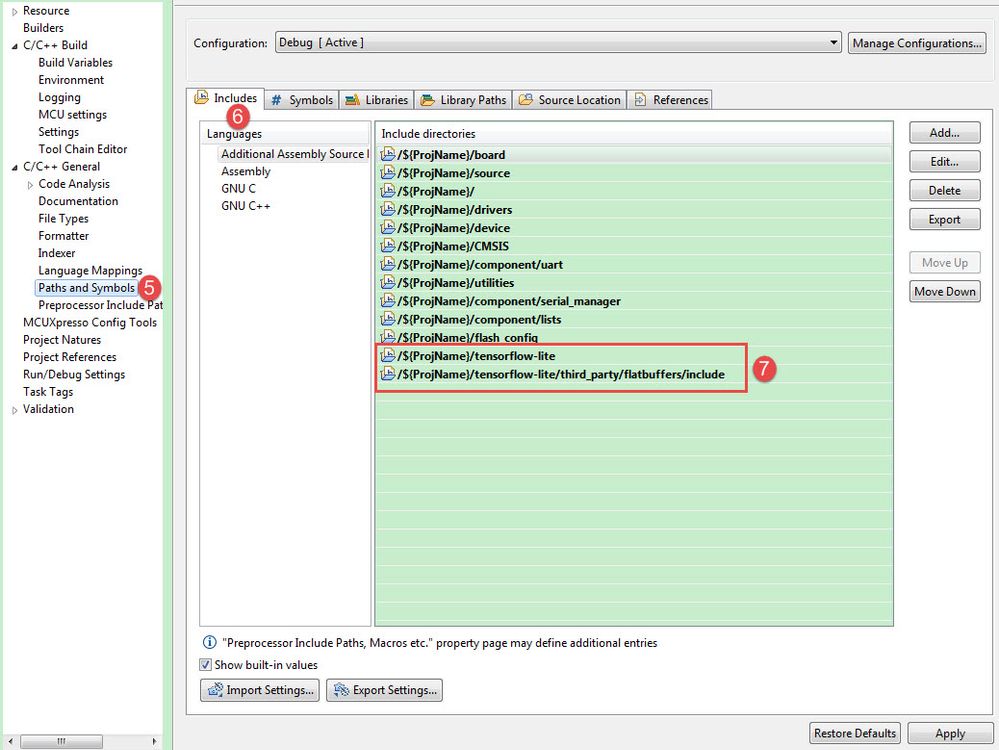

- Add the paths for the above files

Fig 4

Fig 5

Fig 6

Porting main code

The main() code is from the post: The “Hello World” of TensorFlow Lite

Testing

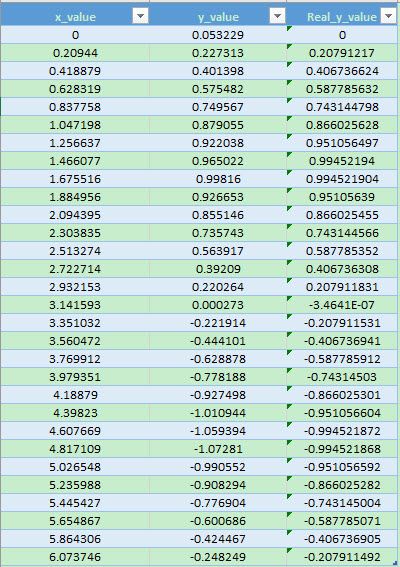

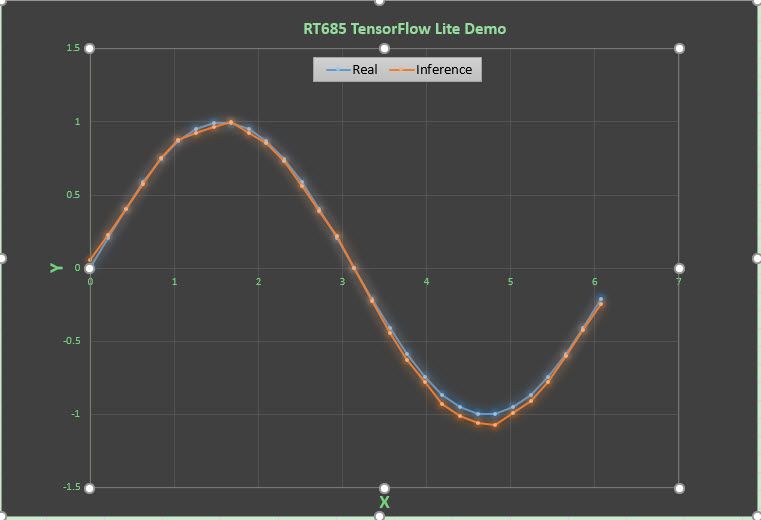

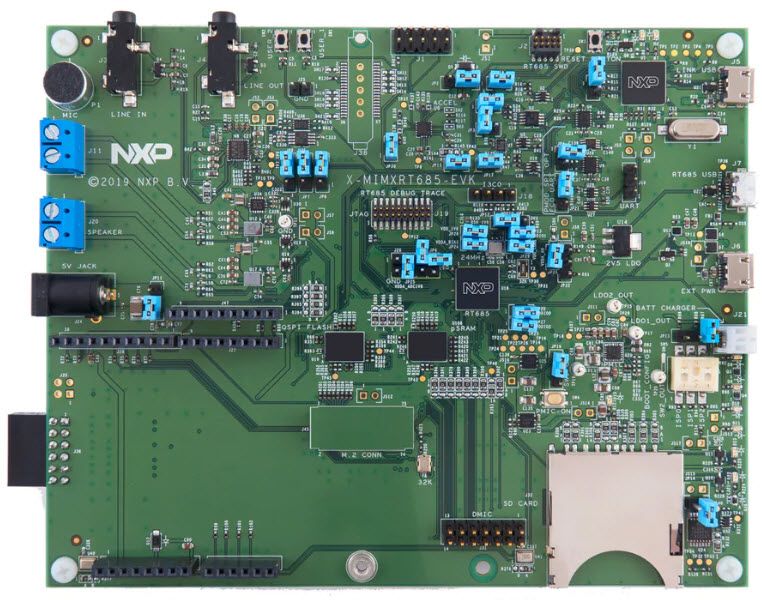

On the MIMXRT685 EVK Board (Fig 7), we record the input data: x_value and the inferenced output data: y_value via the Serial Port (Fig 8).

Fig 8

In addition, we use Excel to display the received data against our actual values as the below figure shows.

Fig 9

In general, In general, it has replicated the result of the The “Hello World” of TensorFlow Lite

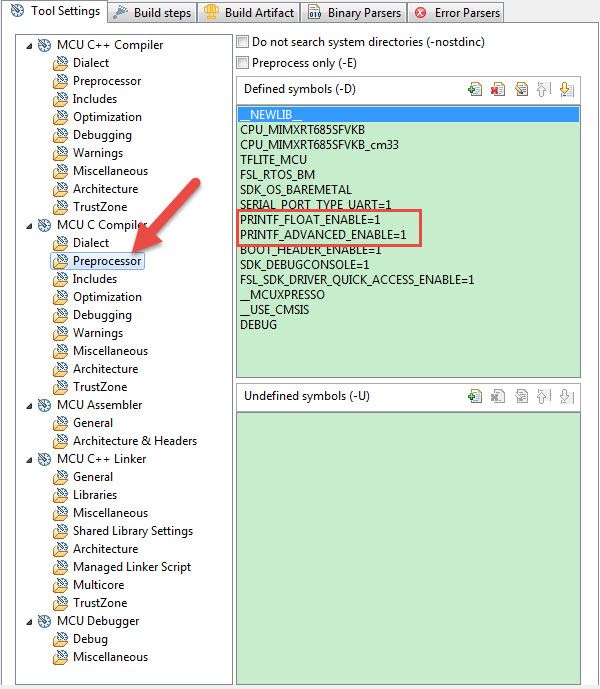

Troubleshoot

- In default, the created project doesn't support print float, so it needs to enable this feature by adding below symbols (Fig 10).

Fig 10

- When a neural network is executed, the results of one layer are fed into subsequent operations and so must be kept around for some time. The lifetimes of these activation layers vary depending on their position in the graph, and the memory size needed for each is controlled by the shape of the array that a layer writes out. These variations mean that it’s necessary to calculate a plan over time to fit all these temporary buffers into as small an area of memory as possible. Currently, this is done when the model is first loaded by the interpreter, so if the area is not big enough, you’ll see a crash event happen.

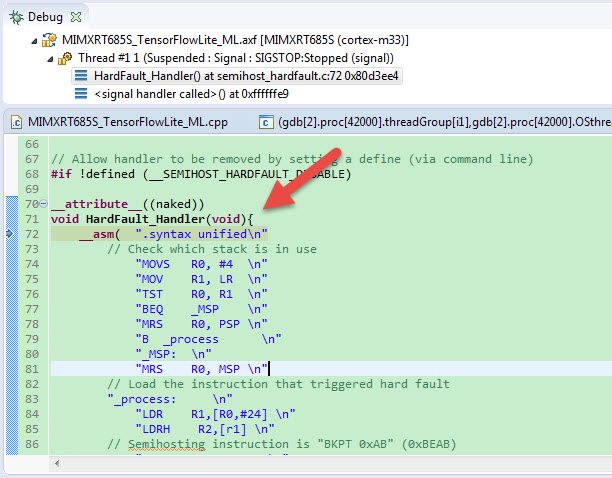

Regard to this application demo, the default heap size is 4 KB, obviously, it's not big enough to store the model’s input, output, and intermediate tensors, as the codes will be stuck at hard-fault interrupt function (Fig 11).

Fig 11

So, how large should we allocate the heap area? That’s a good question. Unfortunately, there’s not a simple answer. Different model architectures have different sizes and numbers of input, output, and intermediate tensors, so it’s difficult to know how much memory we’ll need.

The number doesn’t need to be exact—we can reserve more memory than we need—but since microcontrollers have limited RAM, we should keep it as small as possible so there’s space for the rest of our program.

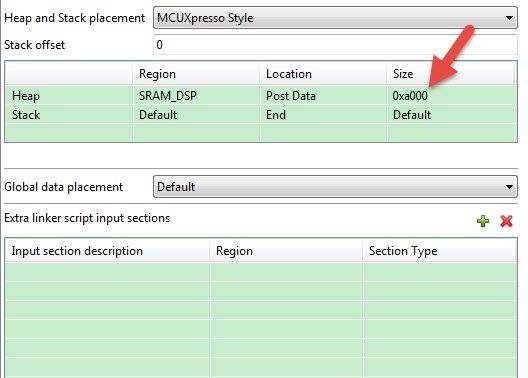

We can do this through trial and error. For this application demo, the code works well after increasing ten times than the previous heap size (Fig 12).

Fig 12