RT1062 run tensorflowlite cifar10 sample code error message.


My platform: EVK RT1062.

Sample code: tensorflow_lite_micro_cifar10

To reproduce: I replaced the inference file (model_data.h) with my own model, converted as follows:

(.h5 file ==> eIQ tool ==> .tflite ==> xxd ==> model_data.h)

Then I got the error messages below (I tried both int8 and int32 quantization).

Do you have any idea what causes this?

int8 model:

```
CIFAR-10 example using a TensorFlow Lite Micro model.
Detection threshold: 60%
Model: cifarnet_quant_int8
Didn't find op for builtin opcode 'QUANTIZE' version '1'
Failed to get registration from op code ADD
Failed starting model allocation.
AllocateTensors() failed
Failed initializing model
```

int32 model:

```
Detection threshold: 60%
Model: cifarnet_quant_int8
Didn't find op for builtin opcode 'RESHAPE' version '1'
Failed to get registration from op code ADD
Failed starting model allocation.
AllocateTensors() failed
Failed initializing model
```

Hello @crist_xu,

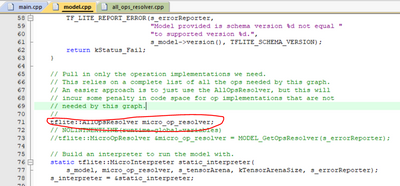

I have a very similar problem (with the SDK 2.10.1 example evkmimxrt1170_tensorflow_lite_micro_label_image_cm7) and made all the changes you suggested, such as:

```cpp
tflite::AllOpsResolver micro_op_resolver;

// tflite::MicroOpResolver &micro_op_resolver = MODEL_GetOpsResolver(s_errorReporter);
```

However, one issue remains, the same one @marcowang mentioned in his first post:

```
Failed to get registration from op code ADD
```

Any thoughts? Thanks in advance!

Steps to reproduce:

- import evkmimxrt1170_tensorflow_lite_micro_label_image_cm7 (SDK 2.10.1)

- download the model https://tfhub.dev/tensorflow/lite-model/ssd_mobilenet_v1/1/metadata/1?lite-format=tflite

- convert it to a .h file with `xxd -i ssd_mobilenet_v1_1_metadata_1.tflite > ssd_mobilenet_v1_1_metadata_1.h`

- place ssd_mobilenet_v1_1_metadata_1.h in /source/model/

- edit ssd_mobilenet_v1_1_metadata_1.h so that it has the same layout as /source/model/model_data.h

- add `#include "ssd_mobilenet_v1_1_metadata_1.h"` to /source/model/model.cpp

- set `s_model = tflite::GetModel(ssd_mobilenet_v1_1_metadata_1_tflite);` in /source/model/model.cpp

Hi @MarcinChelminsk, I couldn't send you a private message; it says I have reached the maximum number of private messages.

Try placing

`s_microOpResolver.AddAdd();`

first, before the other registrations. That's the only difference I can see compared to my code. Also try cleaning the project and building it again.

@Ramson, thank you very much for your comment, but unfortunately it does not work on my end, and I am still looking for a solution. I have also tried changing the resolver to AllOpsResolver (as suggested in the troubleshooting section here: https://community.nxp.com/t5/eIQ-Machine-Learning-Software/eIQ-FAQ/ta-p/1099741 ), with no success so far.

Hi,

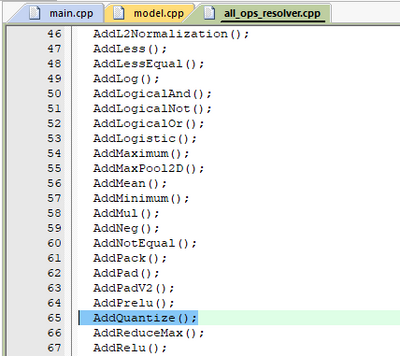

To reduce code size, the newest SDK (SDK 2.10.0) usually registers only the operators (nodes) that the example model needs, so if you change the model, you need to register the operators your own model uses.

So please check that your model.cpp uses line 71, which includes all the op codes in the project, rather than line 73.

Also, please check whether there is a function named AddQuantize() in all_op_resolvers.cpp.

Regards,

Crist

Hi @marcowang,

Thanks for your interest in NXP MIMXRT products; I'm happy to help.

You are using this SDK example:

`SDK_2_10_0_EVK-MIMXRT1060\boards\evkmimxrt1060\eiq_examples\tensorflow_lite_micro_cifar10`

and you replaced its model with your own.

Could you please give me more details about your model, so that I can first try to reproduce the issue?

You mentioned: ( .h5 file ==>EIQ tool==> .tflite ==> xxd ==>.model_data.h)

Please provide the related files so I can reproduce the issue.

Did you follow any document when generating your model? I would like to repeat your steps and check whether I can reproduce the issue.

Best Regards,

kerry