- Forums

- Product Forums

- General Purpose MicrocontrollersGeneral Purpose Microcontrollers

- i.MX Forumsi.MX Forums

- QorIQ Processing PlatformsQorIQ Processing Platforms

- Identification and SecurityIdentification and Security

- Power ManagementPower Management

- MCX Microcontrollers

- S32G

- S32K

- S32V

- MPC5xxx

- Other NXP Products

- Wireless Connectivity

- S12 / MagniV Microcontrollers

- Powertrain and Electrification Analog Drivers

- Sensors

- Vybrid Processors

- Digital Signal Controllers

- 8-bit Microcontrollers

- ColdFire/68K Microcontrollers and Processors

- PowerQUICC Processors

- OSBDM and TBDML

- S32M

-

- Solution Forums

- Software Forums

- MCUXpresso Software and ToolsMCUXpresso Software and Tools

- CodeWarriorCodeWarrior

- MQX Software SolutionsMQX Software Solutions

- Model-Based Design Toolbox (MBDT)Model-Based Design Toolbox (MBDT)

- FreeMASTER

- eIQ Machine Learning Software

- Embedded Software and Tools Clinic

- S32 SDK

- S32 Design Studio

- GUI Guider

- Zephyr Project

- Voice Technology

- Application Software Packs

- Secure Provisioning SDK (SPSDK)

- Processor Expert Software

-

- Topics

- Mobile Robotics - Drones and RoversMobile Robotics - Drones and Rovers

- NXP Training ContentNXP Training Content

- University ProgramsUniversity Programs

- Rapid IoT

- NXP Designs

- SafeAssure-Community

- OSS Security & Maintenance

- Using Our Community

-

- Cloud Lab Forums

-

- Knowledge Bases

- ARM Microcontrollers

- i.MX Processors

- Identification and Security

- Model-Based Design Toolbox (MBDT)

- QorIQ Processing Platforms

- S32 Automotive Processing Platform

- Wireless Connectivity

- CodeWarrior

- MCUXpresso Suite of Software and Tools

- MQX Software Solutions

-

- Home

- :

- i.MX论坛

- :

- i.MX RT 交叉 MCU

- :

- Further serious bugs in LPSPI driver

Further serious bugs in LPSPI driver

Further serious bugs in LPSPI driver

Trying to use LPSPI to interface to a Superior Sensors ND120 shows further serious bugs in the FSL library LPSPI driver.

My fsl_lpspi.h has FSL_LPSPI_DRIVER_VERSION (MAKE_VERSION(2, 4, 0)),

but I verified there's no relevant fix in FSL_LPSPI_DRIVER_VERSION (MAKE_VERSION(2, 6, 6))

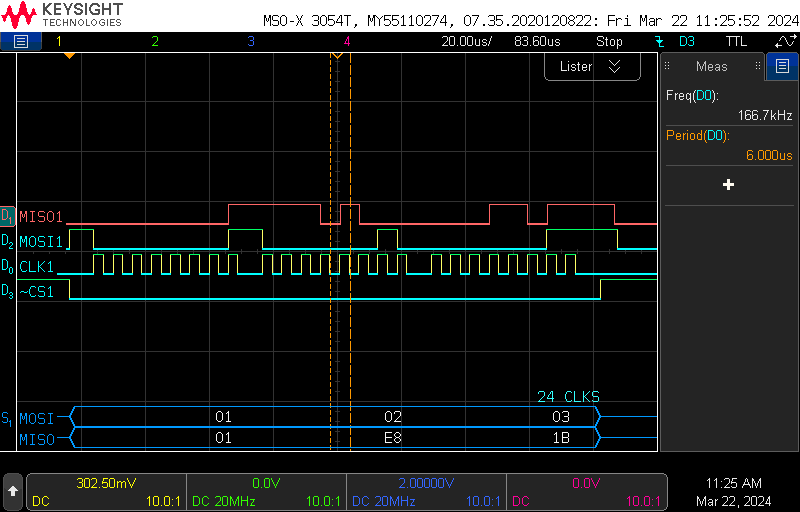

Incorrect delays are generated before, during, and after transfer shown by the logic analyzer for the code below:

// ND120 has *REALLY SLOW* SPI. Two big nuisances:

// - 6uS clock cycle is 166.666kHz clock (48uSec for 8-bit byte)

// - inter-byte delay of 52uSec is needed for minimum byte cycle time 100uSec

// - 100uSec delay required from CS assertion to first clock

// - 20uSec delay required after last clock til de-asserting CS

// LPSPI:

// - can do delay between 8-bit 'frames' with 'continuous CS' but leaves CS asserted at end of operation?

// - DMA sequencer can do all the above, but perhaps FSL API cannot.

static const lpspi_master_config_t ND120_LPSPI_MasterConfig =

{

.baudRate = 166666U, /*!< Baud Rate for LPSPI. 6uS cycle = 166,666Hz */

.bitsPerFrame = 8, /*!< Bits per frame, minimum 8, maximum 4096 */

// Clock SPI mode '01' (CPOL = 0, CPHA = 1)

.cpol = kLPSPI_ClockPolarityActiveHigh, /*!< Clock polarity. */

.cpha = kLPSPI_ClockPhaseSecondEdge, /*!< Clock phase. */

.direction = kLPSPI_MsbFirst, /*!< MSB or LSB data shift direction. */

.pcsToSckDelayInNanoSec = 100000U, /*!< PCS to SCK delay time in nanoseconds. Spec: 100us */

.lastSckToPcsDelayInNanoSec = 20000U, /*!< Last SCK to PCS delay time in nanoseconds. Spec: 20us */

// delay is between *FRAMES*

.betweenTransferDelayInNanoSec = 52000U,/*!< Delay time after SCK in nanoseconds = (100us-8*6us) = 52us. */

.whichPcs = kLPSPI_Pcs0, /*!< Desired Peripheral Chip Select (PCS) - over-ridden in transfer API */

.pcsActiveHighOrLow = kLPSPI_PcsActiveLow,

.pinCfg = kLPSPI_SdiInSdoOut, /*!< Set which pins are used for input and output data

during single bit transfers, in case some moron wired them backwards.*/

.dataOutConfig = kLpspiDataOutTristate, /*!< Is output data is tri-stated between accesses (LPSPI_PCS is negated)? */

/*guess*/.enableInputDelay = false /*true caused infinite loop!!*/, /*!< Enable master to sample the input data on a delayed SCK.

* This can help improve slave setup time. Refer to device data sheet for specific time length. */

};

// FSL LPSPI_MasterInit is buggy: uses inadequate prescale value to support required delays

LPSPI_MasterInit(LPSPI4, &ND120_LPSPI_MasterConfig, BOARD_BOOTCLOCKRUN_LPSPI_CLK_ROOT); // enables clock and LPSI module

// Demonstrate incorrect output on logic analyzer (all delays wrong)

GPIO_PinWrite(BOARD_INITPINS_ND120_RESET_GPIO, BOARD_INITPINS_ND120_RESET_PIN, 0);

vTaskDelay(pdMS_TO_TICKS(1));// 15usec required

GPIO_PinWrite(BOARD_INITPINS_ND120_RESET_GPIO, BOARD_INITPINS_ND120_RESET_PIN, 1);

vTaskDelay(pdMS_TO_TICKS(80));// first response settling time after RESET

uint8_t exampleTx[3] = {1,2,3};

uint8_t exampleRx[3] = {0};

const lpspi_transfer_t transfer =

{

.txData = (uint8_t*)exampleTx, /*!< Send buffer. */

.rxData = (uint8_t*)exampleRx, /*!< Receive buffer. */

.dataSize = (size_t)3, /*!< Transfer bytes. */

.configFlags = kLPSPI_MasterPcs0 | kLPSPI_MasterPcsContinuous,

};

lpspi_transfer_t t1 = transfer; // **bleep**ed-up API requires non-const transfer structure

status_t s1 = LPSPI_MasterTransferBlocking(LPSPI4, &t1);

assert(s1 == kStatus_Success); (void)s1;

Note the incorrect delays in the scope image:

Analyzing the FSL code shows the following bugs:

1) Delays are set to somewhat random values rather than correct results after the FSL code determines it cannot set correct values! In all cases this should generate an error, ideally an assert failure for debug builds. It is unacceptable to silently do the wrong thing!

2) The maximum required delay must be used to establish the minimum prescale value which allows all delay values to fit in the available 8-bit fields. For the example above this prescale value is 5, but the code incorrectly sets 1!

3) In all cases, datasheets (as the one linked above) show minimum required delays and maximum allowable clock speeds. The FSL code goes through a convoluted iteration trying to find the closest value! The correct delay values are the smallest delay above or equal to the specified delays. The correct clock divisor is the fastest possible rate slower than or equal to than the requested clock. These values can be easily calculated with no iteration required!

4) The FSL code uses a lookup table to get 1 raised to a power of 2, rather than a shift operator!

5) Using "continuous" mode here, the bytes are sent in the correct order, unlike previous examples I posted. The driver completely ignores the kLPSPI_MasterByteSwap flag and sends bytes in correct order regardless of setting!

6) In LPSPI_MasterInit, the delay times are set in CCR after enabling the module. In addition, the LPSPI_MasterSetDelayTimes routine has no assertion to verify it is only called when the module is disabled.

7) LPSPI_MasterInit does not reliably re-initialize an LPSPI module. In my case:

- I initialized for sensor A, and IO proceeded correctly.

- I initialized for sensor B, corrected wrong values for CCR and prescale, and IO proceeded correctly.

- I re-initialized for sensor A, and IO proceeded at wrong speed.

Apparently LPSPI_MasterInit does not actually initialize everything...

Has anyone in the community written a working LPSPI driver?

The FSL driver is quite unusable with all these bugs...

Thanks,

Best Regards, Dave

PS: For the example above, here are the correct LPSPI register settings:

// LPSI delays are computed using LPSI_clockHz = (LPSPI_CLK_ROOT/(1<<clockPrescalerExponent)),

// and *NOT* the Clock Control Register CCR divisor used to generate SPI clock signal.

// The SPI clock divisor and delay fields are only 8 bits, so:

// - 255*LPSI_clockHz_Period must be > max desired delay (100uSec here) -> minimum prescale of 5

// - clock signal divisor must also fit -> minimum prescale of 1

// We're using the slowest possible LPSPI_CLK_ROOT of 66MHz (period ~15nSec)

const uint32_t clockPrescalerExponent = 5; // set in transfer control word in FIFO (TCR), not Clock Control Register CCR

const uint32_t LPSI_clockHz = BOARD_BOOTCLOCKRUN_LPSPI_CLK_ROOT / (1<<clockPrescalerExponent); // 66MHz/32 = 2,062,500Hz

const uint32_t LPSI_clockPeriodNsec = 1000000000U/LPSI_clockHz; // 484

const uint32_t clockDivisor = LPSI_clockHz / 166666U; // 166,666Hz for 6uS cycle

const uint32_t LPSI4_CCR_ND120 =

(clockDivisor-1) << LPSPI_CCR_SCKDIV_SHIFT |

(( 52000/LPSI_clockPeriodNsec)+1) << LPSPI_CCR_DBT_SHIFT | // delay between continuous frames = 100uSec - 8*6uSec clock periods

((100000/LPSI_clockPeriodNsec)+1) << LPSPI_CCR_PCSSCK_SHIFT | // delay from CS to first clock

(( 20000/LPSI_clockPeriodNsec)+1) << LPSPI_CCR_SCKPCS_SHIFT ; // delay from last clock to de-asserting CS

LPSPI4->CCR = LPSI4_CCR_ND120;

LPSPI4->TCR |= clockPrescalerExponent<<LPSPI_TCR_PRESCALE_SHIFT;

hi David just started with the EVM seeing similar issues we were going to track this down and see if we can fix the issue, which example did you start with? @davenadler

Hi @davenadler,

Thank you for such an in-depth insight about our LPSPI drivers.

I have reported your findings from this post (along with the previous one you share) with the internal applications team for them to deliberate and fix on a future release of our SDKs. It seems like this issue is well ingrained into the driver of several i.MX RT devices, so if they conclude that a workaround is necessary, the change might still take some time to be fully implemented in all of our SDKs.

Once again, we appreciate the great effort from your side to take the time to investigate this issue and report it.

BR,

Edwin.

Also see partial recoding to work around this problem:

https://community.nxp.com/t5/i-MX-RT/LPSPI-bugs-LPSPI-MasterInit-replacement/m-p/1837996#M29613

Hoping someone at NXP can respond here:

- Have you used the provided example code to replicate and verify the bugs listed?

- Does NXP have any plans to fix this, or is the FSL driver library no longer supported and we should not use iMX.RT parts for new projects?

Thanks,

Best Regards, Dave