
iMX8QM 10G ethernet performance issues


Hello everyone,

I'm having an issue with 10G Ethernet performance on a Toradex Apalis iMX8QM SoM.

We have the SoM and an AQVC107 10G Ethernet controller on our custom carrier board. The SoM interfaces with the controller via a PCIe x2 link and runs a custom Yocto Linux distribution, based on Boot2Qt, with kernel version 4.14.

The device is connected to an Nvidia Pegasus system over 10G Ethernet. I used iperf3 to test the bandwidth. With the iperf3 server running on the device and the iperf3 client running on the Nvidia system in UDP mode, I achieved ~1.6 Gbits/sec with ~38% receiver packet loss.

When I reversed the roles, so that the Nvidia system runs the server and the device runs the client, the results were better: ~2 Gbits/sec with ~0.028% receiver packet loss.

I also tried running the iperf3 client on the Nvidia system with the -w 32k parameter and got ~1.2 Gbits/sec with less than 0.5% packet loss on the device.

Could you help me figure out what is causing these huge packet losses when the device (iMX8QM) is running the iperf3 server? We've checked the HW design and ruled it out as a possible cause; you can find the schematics of the PCIe connection to the controller in the attachment.

Could the problem be in the PCIe driver? Is there perhaps some configuration I'm not aware of?

BR!
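
For anyone who wants to repeat the UDP runs described above in a scripted way, here is a minimal sketch. It assumes iperf3 is installed on both ends and that the other side is already running `iperf3 -s`. The address `192.168.1.10` is a placeholder, and the target bitrate is not stated in the post, so `-b 0` (unlimited) is an assumption. The JSON keys read in `summarize` (`end.sum.bits_per_second`, `end.sum.lost_percent`) are what iperf3's `-J` output uses for UDP as far as I know; please check them against your iperf3 version.

```python
import json
import subprocess


def iperf3_udp_cmd(server, seconds=10, window=None):
    """Build an iperf3 UDP client command line as an argv list.

    "-b 0" (no bitrate cap) is an assumption; the post does not give the value used.
    """
    cmd = ["iperf3", "-c", server, "-u", "-b", "0", "-t", str(seconds), "-J"]
    if window:
        # Socket buffer size, e.g. "32k" as in the third test of the post.
        cmd += ["-w", window]
    return cmd


def summarize(report):
    """Return (throughput in Gbit/s, loss in %) from a parsed iperf3 JSON report."""
    total = report["end"]["sum"]
    return total["bits_per_second"] / 1e9, total["lost_percent"]


def run_test(server, **kwargs):
    """Run one UDP test against `server` and return its summary."""
    result = subprocess.run(
        iperf3_udp_cmd(server, **kwargs),
        capture_output=True, text=True, check=True,
    )
    return summarize(json.loads(result.stdout))
```

Calling `run_test("192.168.1.10")` and then `run_test("192.168.1.10", window="32k")` from the client side would reproduce the first and third tests; swapping which machine runs the script reproduces the second.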




@martin_lovric

Hello,

Please try using iperf2 instead of iperf3:

https://community.nxp.com/t5/i-MX-Processors/TCP-Network-performace-issues/m-p/876395

Regards,

Yuri.


Hello Yuri,

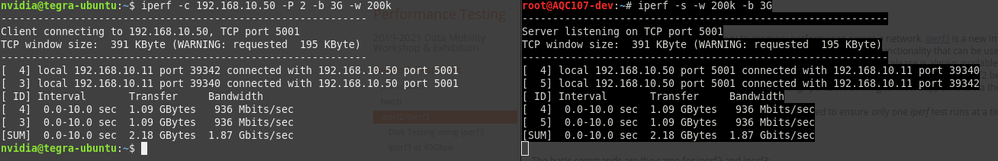

Thanks for this advice, with iperf 2.0.10 I've succeeded to make test using TCP protocol but the maximum speed I've achieved was ~1.8Gbits/sec:

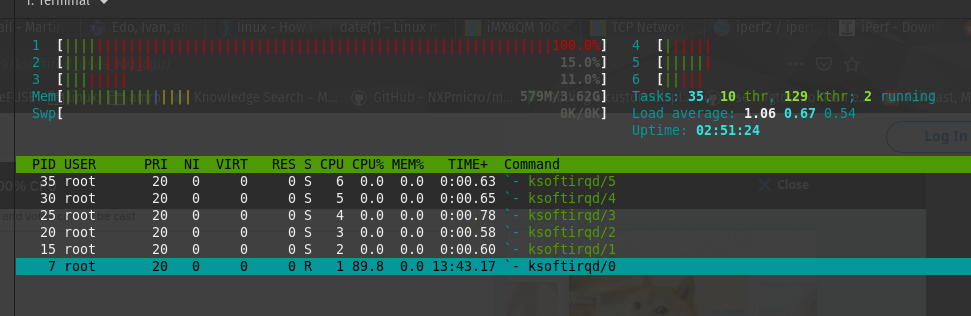

I've noticed when iperf server is handling incoming connection the ksoftirqd/0 has high CPU usage:

Is this normal behavior and could this cause issues with 10G ethernet interface?

Also, do you have any idea what could be causing the 10G ethernet interface to run at only ~1.8Gbits/sec?
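
ksoftirqd/0 is the kernel thread that runs deferred softirq work for CPU 0, so high usage there during a receive test suggests that network receive processing may be concentrated on one core. One way to check this is to look at the `NET_RX` row of `/proc/softirqs`. The sketch below is only an illustration: the function names are made up for this example, and it assumes the usual layout of that file (a header row of CPU names followed by one `NAME: counts...` row per softirq type).

```python
def net_rx_per_cpu(softirqs_text):
    """Return {cpu_name: NET_RX count} parsed from /proc/softirqs content."""
    lines = softirqs_text.strip().splitlines()
    cpus = lines[0].split()  # header row: CPU0 CPU1 ...
    for line in lines[1:]:
        name, _, counts = line.partition(":")
        if name.strip() == "NET_RX":
            return dict(zip(cpus, (int(c) for c in counts.split())))
    raise ValueError("NET_RX row not found")


def busiest_cpu(per_cpu):
    """Return (cpu_name, share of all NET_RX softirqs handled by that CPU)."""
    total = sum(per_cpu.values())
    cpu = max(per_cpu, key=per_cpu.get)
    return cpu, (per_cpu[cpu] / total if total else 0.0)


def read_current():
    """Read the live counters on a Linux target."""
    with open("/proc/softirqs") as f:
        return net_rx_per_cpu(f.read())
```

Taking one snapshot with `read_current()` before the iperf run and one after, then comparing the per-CPU differences, shows whether a single core is handling nearly all receive softirqs.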


@martin_lovric

Hello,

I think your result is not so bad, taking into account i.MX8 PCIe performance:

https://community.nxp.com/t5/i-MX-Processors/PCIe-Bandwidth/m-p/1063269

Regards,

Yuri.


But Gen3 PCIe x2 should be able to reach 8 Gbits/sec of bandwidth, am I correct? Unless there is a limitation on the iMX8QM side that I'm not aware of.

During the UDP test, the kernel thread "ksoftirqd/0" also showed high CPU usage on core 0. I noticed increased UDP packet loss when I ran a CPU-intensive application during the UDP test.

Could the CPU be a bottleneck for PCIe performance? If so, how can we solve this?
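
As a reference point for the bandwidth question, the raw link rate of a PCIe link follows from the per-lane transfer rate and the line encoding of each generation. The short sketch below works through that arithmetic. It covers only the encoding overhead; packet (TLP/DLLP) overhead and host-side limits reduce usable throughput further, and the thread does not state which speed the link actually negotiated (on Linux, the `LnkSta` line of `lspci -vv` reports it).

```python
# Per-lane transfer rate in GT/s and line-encoding efficiency per generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),     # 8b/10b encoding
    2: (5.0, 8 / 10),     # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
}


def pcie_raw_gbps(gen, lanes):
    """Raw link bandwidth in Gbit/s, per direction, after line encoding only."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[gen]
    return rate_gt_s * efficiency * lanes


for gen in PCIE_GENERATIONS:
    print(f"Gen{gen} x2: {pcie_raw_gbps(gen, 2):.2f} Gbit/s")
```

By this calculation, 8 Gbit/s is the raw figure for a Gen2 x2 link, while a Gen3 x2 link comes to about 15.75 Gbit/s, so it is worth confirming the negotiated link speed before comparing measured throughput against either number.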


@martin_lovric

Hello,

Below are my comments:

1) At present, we have only the following i.MX8 PCIe performance estimates:

https://www.nxp.com/webapp/Download?colCode=AN13164

2) i.MX8QM system performance may also be limited by memory, but that data is not provided.

Regards,

Yuri.