
[iMX8MPlus] How to mix two video sources into one with Gstreamer ?


Dear Community,

GStreamer has the notion of composition for outputting multiple video displays, but I need to do the opposite: I would like to mix/combine two H.264-encoded videos (for example, from /dev/video0 and /dev/video1) on the i.MX 8M Plus. Is this possible with GStreamer?

Best regards,

Khang


Khang,

For case 1.b, can you try the following command on your side and let me know whether performance improves?

```shell
root@imx8mpevk:~# export DISPLAY=:0
root@imx8mpevk:~# gst-launch-1.0 -vvv imxcompositor_g2d name=comp \
    sink_0::xpos=0 sink_0::ypos=0 sink_0::width=1440 sink_0::height=1080 \
    sink_1::xpos=0 sink_1::ypos=1080 sink_1::width=1440 sink_1::height=1080 \
    ! queue ! video/x-raw, width=1440, height=2160 ! fpsdisplaysink video-sink="ximagesink" text-overlay=false sync=false \
    v4l2src device=/dev/video2 ! video/x-raw, width=1440, height=1080, framerate=15/1 ! comp.sink_0 \
    v4l2src device=/dev/video3 ! video/x-raw, width=1440, height=1080, framerate=15/1 ! comp.sink_1
```

On my side it looks better than the previous pipeline (I'm using two Basler cameras).

Best regards,

Diana


Hi Khang,

For me this sounds more like a synchronisation issue on the client playback side.

However, one can embed several H.264 elementary streams into a single container (multiplexing) format. If I remember correctly, Matroska (MKV) allows that.

I'm not sure all clients can render this the way you want.

IMHO, you will have more success sending the two H.264 streams separately, each with its own embedded timestamps. The timestamps should share the same relative time base.

A GStreamer client can then play back these two streams in a synchronized manner.

Long story short, I would put the effort on the decoding side and not on the encoder.
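To illustrate what "the same relative time base" means, here is a minimal Python sketch (not from the thread) that maps capture times taken from one shared clock to 32-bit RTP timestamps at the 90 kHz clock rate RTP uses for H.264 video. Each stream starts at its own arbitrary offset, as RTP senders normally do. The sketch assumes the receiver knows each stream's offset; in a real RTP session that mapping comes from RTCP sender reports. The function names are hypothetical.

```python
RTP_CLOCK_RATE = 90_000  # Hz, the RTP clock rate used for H.264 video
RTP_WRAP = 2 ** 32       # RTP timestamps are 32-bit and wrap around
NS_PER_S = 1_000_000_000


def to_rtp_ts(capture_ns: int, initial_offset: int) -> int:
    """Map a capture time (ns since a shared epoch) to a 32-bit RTP timestamp."""
    ticks = capture_ns * RTP_CLOCK_RATE // NS_PER_S
    return (initial_offset + ticks) % RTP_WRAP


def from_rtp_ts(rtp_ts: int, initial_offset: int) -> int:
    """Recover the capture time in ns (valid within one 32-bit wrap period)."""
    ticks = (rtp_ts - initial_offset) % RTP_WRAP
    return ticks * NS_PER_S // RTP_CLOCK_RATE


if __name__ == "__main__":
    # Two hardware-synchronized cameras capture frame index 1 (t = 1/15 s) together.
    capture_ns = NS_PER_S // 15
    offset_cam0, offset_cam1 = 0, RTP_WRAP - 100  # arbitrary per-stream offsets
    ts0 = to_rtp_ts(capture_ns, offset_cam0)
    ts1 = to_rtp_ts(capture_ns, offset_cam1)
    # The raw RTP timestamps differ, but both map back to the same capture instant.
    print(ts0, ts1, from_rtp_ts(ts0, offset_cam0) == from_rtp_ts(ts1, offset_cam1))
```

The raw timestamps of the two streams are not comparable directly; only after removing each stream's offset do frames captured together line up, which is what lets a client pair them for synchronized playback.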


Hi @malik_cisse,

> IMHO, you will have more success with sending the two h264 streams separately and embed their respective timestamps. Timestamps should have same relative time base.

Do you mean that GStreamer could provide the timestamps?

My observation is that even when I launch two separate gst-launch commands (with H.264 encoding) in the background with & on the board as well as on the client machine, the arriving frames are still not in sync.

Best Regards,

Khang


Diana's solution indeed looks promising.

On the other hand, an H.264 elementary stream does not embed timestamps itself; however, RTP (which RTSP sessions also use for transport) and MPEG-TS do.


What do you mean by mixing two videos? Displaying two videos on the same display? An overlay? What's your use case?


Dear @joanxie,

My use case is to read two hardware-synchronized camera sensors (/dev/video0, /dev/video1) as simultaneously as possible, encode them, transfer them over the network, and display them in the same GStreamer window on the client side. Each sensor's resolution is 1440x1080.

By hardware synchronization, I mean that one camera acts as master and the other as slave, which guarantees a common capture period.

Best Regards,

Khang


Hi @khang_letruong,

So you want a GStreamer pipeline that composes your two camera streams and streams the composed data over the network, right?

```shell
gst-launch-1.0 imxcompositor_g2d name=comp \
    sink_0::xpos=0 sink_0::ypos=0 sink_0::width=640 sink_0::height=480 \
    sink_1::xpos=0 sink_1::ypos=480 sink_1::width=640 sink_1::height=480 \
    ! queue ! videoconvert ! v4l2h264enc ! rtph264pay config-interval=1 pt=96 ! udpsink host=169.254.235.127 port=5000 \
    v4l2src device=/dev/video2 ! imxvideoconvert_g2d ! video/x-raw, width=640, height=480 ! queue ! comp.sink_0 \
    v4l2src device=/dev/video3 ! imxvideoconvert_g2d ! video/x-raw, width=640, height=480 ! queue ! comp.sink_1
```
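The pipeline above generalizes to more cameras by giving each compositor sink pad its own `ypos`. A minimal Python sketch that builds the same kind of gst-launch-1.0 command for any number of vertically stacked cameras, using only the elements and pad properties already shown in this thread; the helper name `compositor_pipeline` is hypothetical.

```python
def compositor_pipeline(devices, width, height, sink_chain):
    """Build a gst-launch-1.0 command that stacks camera frames vertically."""
    pad_props, sources = [], []
    for i, dev in enumerate(devices):
        # Camera i is placed i * height pixels below the top of the canvas.
        pad_props.append(
            f"sink_{i}::xpos=0 sink_{i}::ypos={i * height} "
            f"sink_{i}::width={width} sink_{i}::height={height}"
        )
        # Each source is scaled by the G2D converter, then linked to its own sink pad.
        sources.append(
            f"v4l2src device={dev} ! imxvideoconvert_g2d ! "
            f'"video/x-raw, width={width}, height={height}" ! queue ! comp.sink_{i}'
        )
    return " ".join(
        ["gst-launch-1.0 imxcompositor_g2d name=comp", *pad_props, "! queue !", sink_chain, *sources]
    )


if __name__ == "__main__":
    encode_and_send = (
        "videoconvert ! v4l2h264enc ! rtph264pay config-interval=1 pt=96 ! "
        "udpsink host=169.254.235.127 port=5000"
    )
    print(compositor_pipeline(["/dev/video2", "/dev/video3"], 640, 480, encode_and_send))
```

The printed command can be pasted into a shell on the board. The composed canvas is `width` x `len(devices) * height`, so two 640x480 inputs give the 640x960 frame mentioned later in the thread.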

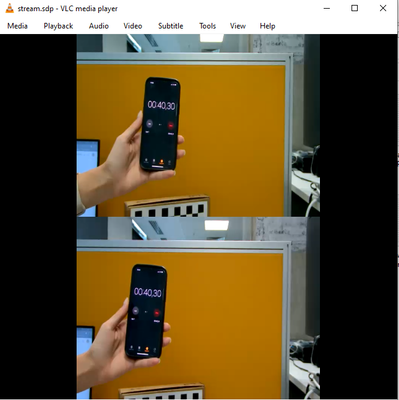

On the client side, the output is displayed with some latency:
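The client-side pipeline is not included in the thread. As an assumption, a receiver for the `rtph264pay pt=96 ! udpsink ... port=5000` sender above would depacketize, decode, and display the RTP stream. The sketch below builds such a command from standard upstream elements (udpsrc, rtpjitterbuffer, rtph264depay, h264parse, avdec_h264, videoconvert, autovideosink). The software decoder avdec_h264 (from gst-libav) is a swapped-in choice for a desktop client, and the jitter-buffer latency is a placeholder to tune. The helper name `receiver_pipeline` is hypothetical.

```python
import shlex


def receiver_pipeline(port: int = 5000, payload: int = 96, latency_ms: int = 200) -> str:
    """Build a gst-launch-1.0 command that receives, decodes and shows RTP/H.264 over UDP."""
    # udpsrc cannot infer RTP caps, so they are set explicitly; payload must match pt= on the sender.
    caps = (
        "application/x-rtp, media=video, clock-rate=90000, "
        f"encoding-name=H264, payload={payload}"
    )
    return (
        f"gst-launch-1.0 udpsrc port={port} caps={shlex.quote(caps)} ! "
        # The jitter buffer reorders packets and smooths arrival; latency is in ms.
        f"rtpjitterbuffer latency={latency_ms} ! rtph264depay ! h264parse ! "
        "avdec_h264 ! videoconvert ! autovideosink"
    )


if __name__ == "__main__":
    print(receiver_pipeline())
```

A smaller `latency_ms` reduces display delay at the cost of less tolerance to network jitter.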

Is this helpful?

Diana


Hi @dianapredescu,

Thanks for your suggestion; it is nearly what I need. Could vpuenc_h264 be used instead of v4l2h264enc, with a resolution of 1440x1080 or 1920x1080 instead of 640x480?

For separate streams, I used:

```shell
gst-launch-1.0 -v v4l2src device=/dev/video1 ! imxvideoconvert_g2d ! "video/x-raw, width=1440, height=1080, framerate=30/1" ! vpuenc_h264 ! rtph264pay pt=96 ! rtpstreampay ! tcpserversink host=192.168.110.8 port=5001 blocksize=512000 sync=false & \
gst-launch-1.0 -v v4l2src device=/dev/video0 ! imxvideoconvert_g2d ! "video/x-raw, width=1440, height=1080, framerate=30/1" ! vpuenc_h264 ! rtph264pay pt=96 ! rtpstreampay ! tcpserversink host=192.168.110.8 port=5000 blocksize=512000 sync=false
```

Best Regards,

K.


Dear @khang_letruong,

Let me try it on my side.

But at first glance, I'm not sure vpuenc_h264 will work in this scenario, because it seems to support only up to 1920x1088. In my case, I would end up with a 1920x2160 stream.

```
SINK template: 'sink'
  Availability: Always
  Capabilities:
    video/x-raw
      format: { (string)NV12, (string)I420, (string)YUY2, (string)UYVY, (string)RGBA, (string)RGBx, (string)RGB16, (string)RGB15, (string)BGRA, (string)BGRx, (string)BGR16 }
      width: [ 64, 1920 ]
      height: [ 64, 1088 ]
      framerate: [ 0/1, 2147483647/1 ]
```
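Why these caps rule out the composed stream: stacking two frames vertically doubles the height, and the composed size must fall inside the sink-pad ranges. A minimal Python sketch; the ranges are copied from the gst-inspect-1.0 output above and the helper names are hypothetical.

```python
# Sink-pad ranges of vpuenc_h264, copied from the gst-inspect-1.0 output above.
VPUENC_H264_WIDTH = (64, 1920)
VPUENC_H264_HEIGHT = (64, 1088)


def stacked_canvas(width: int, height: int, count: int = 2) -> tuple:
    """Size of the composed frame when `count` equal inputs are stacked vertically."""
    return width, height * count


def fits_caps(width: int, height: int,
              w_range=VPUENC_H264_WIDTH, h_range=VPUENC_H264_HEIGHT) -> bool:
    """True if the frame size lies inside both inclusive caps ranges."""
    return w_range[0] <= width <= w_range[1] and h_range[0] <= height <= h_range[1]


if __name__ == "__main__":
    for w, h in [(640, 480), (1440, 1080), (1920, 1080)]:
        cw, ch = stacked_canvas(w, h)
        print(f"2 x {w}x{h} -> {cw}x{ch}: fits vpuenc_h264 = {fits_caps(cw, ch)}")
```

Two 640x480 inputs (640x960) fit, while two 1440x1080 or 1920x1080 inputs (height 2160) exceed the 1088-line maximum.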

Best regards,

Diana


Dear @dianapredescu,

Could you please also explain why you said "... at the end I would have a 1920x2160 stream", given your example?

Thanks in advance and best regards,

Khang


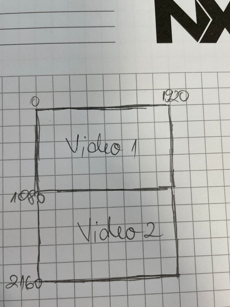

Khang,

My reasoning was that if I compose two 1920x1080 frames (one above the other) before encoding and streaming over the network, I would get a window of 1920x2160 resolution, right? Or were you saying that I can rescale the output window?

Best regards,

Diana


Hi @dianapredescu,

I was referring to your 640x480 example and wondering how you would end up with 1920x2160 from it.

Also, 1920x2160 would exceed the capacity of a single encoder, but not that of two encoders. My question is: do both encoders participate in encoding this composition, or just one?

Best,

K.


Dear @khang_letruong,

Sorry for mixing things up. No: in the case I previously tested with two 640x480 streams, I had a 640x960 video window on the client side. The 1920x2160 window came into the discussion from the scenario of using two 1920x1080 video streams (which is what I'm currently investigating: composition of two FHD streams).

I've seen that in the latest BSP we have modified the VPU capabilities. It looks like with the latest BSPs one can encode more than 1920x1080 with v4l2h264enc ( https://github.com/nxp-imx/linux-imx/blob/lf-5.15.y/drivers/mxc/hantro_v4l2/vsi-v4l2-config.c#L755 ).

So my understanding is that I should be able to encode 1920x2160 with one instance, right?

Best regards,

Diana


Dear @dianapredescu,

Thanks for pointing out the modification of the VPU driver in the latest BSP.

> So I would understand that I should be able to encode 1920x2160 with one instance, right?

Indeed, this is exactly the question I would like NXP, as the provider of the BSP, to answer. Since the i.MX 8M Plus specification states that the maximum encoding resolution is 1080p, I would like to know how many encoders participate in the pipeline of your example.

There is a discussion about the VPU encoding limitation here: https://community.nxp.com/t5/i-MX-Processors/IMX8M-Plus-Hantro-4K-Encoder/m-p/1282890

Best Regards,

Khang


Dear @khang_letruong,

I tried running a few examples with both 1920x1080 and your custom resolution of 1440x1080; here is some feedback.

NXP's commitment for best VPU encoding performance is 1920x1080, as stated in the Reference Manual, even though the IP hardware can support more than 1080p. As seen in the driver, the 8MP's maximum encoding resolution is 1920x8192 (the 8MP introduces a hardware limitation on width). One encoding pipeline occupies only one encoder instance.
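Because the driver maximum constrains width (1920) much more than height (8192), it matters that the compositor pipelines in this thread stack the cameras one above the other rather than side by side. A minimal Python sketch, assuming the 1920x8192 driver maximum quoted above (a driver capability, not NXP's 1080p commitment, and possibly BSP-dependent); the helper names are hypothetical.

```python
# ASSUMPTION: driver maximum for H.264 encode on i.MX 8M Plus, as quoted above.
MAX_ENC_W, MAX_ENC_H = 1920, 8192


def composed_size(width: int, height: int, count: int, layout: str) -> tuple:
    """Size of a frame composed from `count` equal inputs."""
    if layout == "vertical":      # one above the other
        return width, height * count
    if layout == "horizontal":    # side by side
        return width * count, height
    raise ValueError(f"unknown layout: {layout!r}")


def within_encoder_limit(width: int, height: int) -> bool:
    """True if the composed frame does not exceed the assumed driver maximum."""
    return width <= MAX_ENC_W and height <= MAX_ENC_H


if __name__ == "__main__":
    for layout in ("vertical", "horizontal"):
        for w, h in [(1440, 1080), (1920, 1080)]:
            cw, ch = composed_size(w, h, 2, layout)
            print(f"{layout:<10} 2 x {w}x{h} -> {cw}x{ch}: {within_encoder_limit(cw, ch)}")
```

Under this assumption, vertical stacking (1440x2160, 1920x2160) stays within the limit, while side-by-side layouts (2880x1080, 3840x1080) exceed the 1920-pixel width.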

So adapting the pipeline I sent previously to your use case (encoding one 1440x2160 output) appears to be possible, but the performance does not look so good. I suggest trying it on your side and letting me know how you evaluate it.
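One rough way to reason about "possible, but the performance does not look so good" is to compare the pixel rate of the composed stream with the 1080p target. This Python sketch assumes encoder load scales roughly with pixels per second (a simplification) and uses 30 fps as the reference frame rate, which this thread does not state; check the i.MX 8M Plus documentation for the committed figure.

```python
REF_W, REF_H = 1920, 1080  # NXP's committed encode resolution, per this thread
REF_FPS = 30               # ASSUMPTION: reference frame rate; not stated in the thread


def pixel_rate(width: int, height: int, fps: int) -> int:
    """Pixels per second the encoder must process."""
    return width * height * fps


if __name__ == "__main__":
    ref = pixel_rate(REF_W, REF_H, REF_FPS)
    for fps in (15, 30):
        ratio = pixel_rate(1440, 2160, fps) / ref
        print(f"1440x2160@{fps}: {ratio:.2f}x the pixel rate of {REF_W}x{REF_H}@{REF_FPS}")
```

Under these assumptions, 1440x2160 is 0.75x the reference pixel rate at 15 fps (the rate used in the composed pipelines) and 1.5x at 30 fps (the rate used for the separate streams).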

Best regards,

Diana


Hi @dianapredescu ,

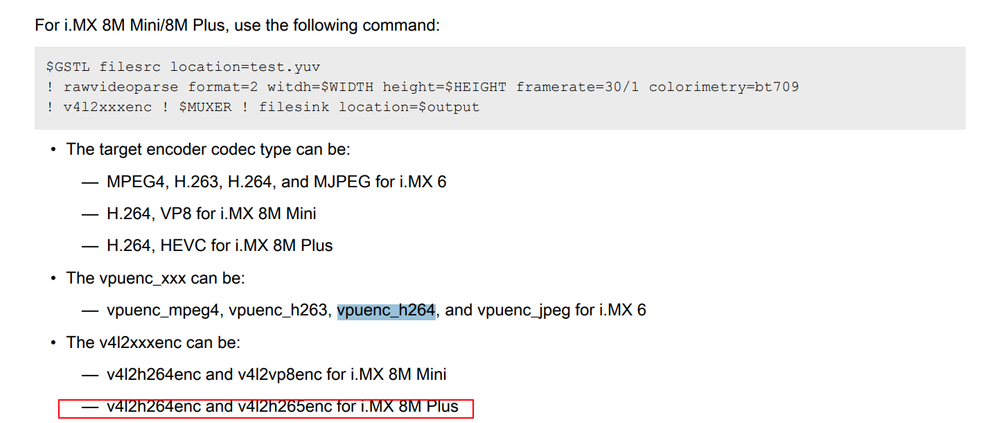

Thanks for your thorough explanation. Another point of confusion was v4l2h264enc vs. vpuenc_h264, as per my earlier question:

> Thanks for your suggestion. It is nearly what I need. I would like to know if vpuenc_h264 could be used instead of v4l2h264enc and the resolution would be 1440x1080 or 1920x1080 instead of 640x480, please?

I had always thought that vpuenc_h264 was the hardware-accelerated H.264 encoding element of the i.MX 8M Plus, but according to the following document it turned out not to be:

Regards,

K.


Dear @khang_letruong,

I understand where the confusion comes from. When we released the 8MP platform, I believe vpudec and vpuenc_h264 were the only codecs we provided. They were hardware accelerated, but we used them because V4L2 software support for the VPU decoder/encoder wasn't ready at that time. Now, NXP recommends using v4l2h264enc and v4l2h264dec on top of the latest VPU drivers (which is why the V4L2 codecs follow the Hantro supported resolution matrix: max. 1920x4096 for H.264 decoding and 1920x8192 for H.264 encoding).

Since we are using the open-source V4L2 framework and expose the full capability, any customer can make use of it. However, NXP's official commitment remains 1080p for both encoding and decoding.

Best regards,

Diana


Hi @dianapredescu,

I didn't know in which BSP v4l2h264dec and v4l2h264enc were introduced; I need to re-check the release notes and associated documents.

Anyway, thanks for everything.

Best,

K.


Hi @dianapredescu,

To confirm: v4l2h264dec and v4l2h264enc have been available since BSP 5.10.35, while I am still stuck with BSP 5.4.70, which only has vpuenc_h264 and vpudec.
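Since the available encoder element depends on the BSP, a script can probe for it instead of hard-coding one. A minimal Python sketch that asks `gst-inspect-1.0 --exists` whether an element is installed and prefers v4l2h264enc, falling back to vpuenc_h264. The helper names are hypothetical, and the preference order simply follows the recommendation above.

```python
import subprocess
from typing import Callable


def gst_element_exists(name: str) -> bool:
    """True if gst-inspect-1.0 reports the element (exit code 0 from --exists)."""
    try:
        result = subprocess.run(["gst-inspect-1.0", "--exists", name], capture_output=True)
    except FileNotFoundError:  # GStreamer command-line tools are not installed
        return False
    return result.returncode == 0


def pick_h264_encoder(exists: Callable[[str], bool] = gst_element_exists) -> str:
    """Return the first available H.264 encoder element, newest API first."""
    # v4l2h264enc is the recommended element on newer BSPs;
    # vpuenc_h264 is what older BSPs such as 5.4.70 provide.
    for element in ("v4l2h264enc", "vpuenc_h264"):
        if exists(element):
            return element
    raise RuntimeError("no hardware H.264 encoder element found")


if __name__ == "__main__":
    try:
        print(pick_h264_encoder())
    except RuntimeError as err:
        print(err)
```

The `exists` parameter makes the selection logic testable without GStreamer installed; the returned name can then be substituted into a pipeline string.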

Regards,

K.


Hi @dianapredescu,

I tried the following command on the Ubuntu-based desktop firmware image (BSP L5.10.72-2.2.0) but got an error:

```shell
user@imx8mpevk:~$ gst-launch-1.0 imxcompositor_g2d name=comp \
    sink_0::xpos=0 sink_0::ypos=0 sink_0::width=1440 sink_0::height=1080 \
    sink_1::xpos=0 sink_1::ypos=1080 sink_1::width=1440 sink_1::height=1080 \
    ! queue ! videoconvert ! v4l2h264enc output-io-mode=dmabuf-import \
    ! rtph264pay config-interval=1 pt=96 ! udpsink host=192.168.0.244 port=5000 \
    v4l2src device=/dev/video0 io-mode=dmabuf ! imxvideoconvert_g2d \
    ! "video/x-raw, width=1440, height=1080, framerate=15/1" ! queue ! comp.sink_0 \
    v4l2src device=/dev/video1 io-mode=dmabuf ! imxvideoconvert_g2d \
    ! "video/x-raw, width=1440, height=1080, framerate=15/1" ! queue ! comp.sink_1
WARNING: erroneous pipeline: no element "v4l2h264enc"
```

It seems that v4l2h264enc/v4l2h264dec are not available; only vpuenc_h264/vpudec are:

```shell
user@imx8mpevk:~$ gst-inspect-1.0 | grep v4l2h264
user@imx8mpevk:~$ gst-inspect-1.0 | grep vpu
vpu: vpuenc_h264: IMX VPU-based AVC/H264 video encoder
vpu: vpuenc_hevc: IMX VPU-based HEVC video encoder
vpu: vpudec: IMX VPU-based video decoder
```

How can I add these elements to the Ubuntu-based desktop firmware image?

Best regards,

Khang


As far as I know, v4l2h264enc/v4l2h264dec are part of the gstreamer1.0-plugins-good or gstreamer1.0-plugins-bad packages.