What about object detection on i.MXRT?

Hi NXP Team!

I am aware that I can import the SDK example called "tensorflow_lite_label_image" (an image classification example). I followed the "eIQ Transfer Learning Lab", which is based on this SDK example (https://community.nxp.com/docs/DOC-343827).

However, my question is: what about object detection on i.MXRT? Do you provide any examples or guides?

Hello,

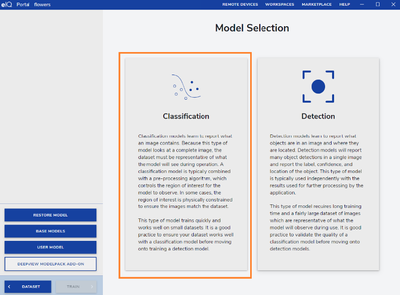

Now that the eIQ Toolkit is available and supports both classification and detection models, could you provide any guidance on the workflow for using detection models and then deploying them on the embedded side?

Hello Marcin,

with eIQ Portal there aren't any huge differences in the flow between classification and object detection. The main difference from a user perspective is labeling the various objects (instead of whole images) in the dataset curator. When your dataset is ready, you just need to choose an object detection model and afterwards the workflow is the same.
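To make the labeling difference concrete, here is a minimal sketch of how the two kinds of annotation differ in shape. It is only an illustration: the class names, fields, and file names below are hypothetical and do not reflect eIQ Portal's actual dataset format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ClassificationLabel:
    """Classification: one label for the whole image (hypothetical structure)."""
    image_path: str
    label: str


@dataclass
class BoundingBox:
    """Detection: one labeled rectangle per object, in pixel coordinates."""
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    def area(self) -> int:
        # Clamp to zero so a malformed box never yields a negative area.
        return max(0, self.x_max - self.x_min) * max(0, self.y_max - self.y_min)


@dataclass
class DetectionLabel:
    """Detection: an image carries a list of boxes, possibly of different classes."""
    image_path: str
    boxes: List[BoundingBox] = field(default_factory=list)


# Same image, annotated for each task (made-up values).
cls_example = ClassificationLabel("img_001.jpg", "cat")
det_example = DetectionLabel(
    "img_001.jpg",
    [
        BoundingBox("cat", 10, 20, 110, 220),
        BoundingBox("dog", 150, 30, 300, 200),
    ],
)
```

In other words, a classification dataset needs one label per image, while a detection dataset needs a box and a label for every object of interest in each image.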

If you have any specific questions, please create a new standalone thread so that we don't keep reviving this old one. Let's discuss everything in a fresh one with a more specific subject so that it's easier to find for others who look for the same information.

Regards,

David

Hi, we are also working on a similar object detection example for TensorFlow Lite and have run into an issue, which I have posted in the forum (https://community.nxp.com/t5/eIQ-Machine-Learning-Software/How-to-add-custom-operators-in-tensorflow...). It would be a great help if you could find a solution, so that we can all benefit. Thanks

Hello Marcin, we do not currently have an object detection example for the i.MX RT devices.