- Forums

- Product Forums

- General Purpose MicrocontrollersGeneral Purpose Microcontrollers

- i.MX Forumsi.MX Forums

- QorIQ Processing PlatformsQorIQ Processing Platforms

- Identification and SecurityIdentification and Security

- Power ManagementPower Management

- Wireless ConnectivityWireless Connectivity

- RFID / NFCRFID / NFC

- Advanced AnalogAdvanced Analog

- MCX Microcontrollers

- S32G

- S32K

- S32V

- MPC5xxx

- Other NXP Products

- S12 / MagniV Microcontrollers

- Powertrain and Electrification Analog Drivers

- Sensors

- Vybrid Processors

- Digital Signal Controllers

- 8-bit Microcontrollers

- ColdFire/68K Microcontrollers and Processors

- PowerQUICC Processors

- OSBDM and TBDML

- S32M

- S32Z/E

-

- Solution Forums

- Software Forums

- MCUXpresso Software and ToolsMCUXpresso Software and Tools

- CodeWarriorCodeWarrior

- MQX Software SolutionsMQX Software Solutions

- Model-Based Design Toolbox (MBDT)Model-Based Design Toolbox (MBDT)

- FreeMASTER

- eIQ Machine Learning Software

- Embedded Software and Tools Clinic

- S32 SDK

- S32 Design Studio

- GUI Guider

- Zephyr Project

- Voice Technology

- Application Software Packs

- Secure Provisioning SDK (SPSDK)

- Processor Expert Software

- Generative AI & LLMs

-

- Topics

- Mobile Robotics - Drones and RoversMobile Robotics - Drones and Rovers

- NXP Training ContentNXP Training Content

- University ProgramsUniversity Programs

- Rapid IoT

- NXP Designs

- SafeAssure-Community

- OSS Security & Maintenance

- Using Our Community

-

- Cloud Lab Forums

-

- Knowledge Bases

- ARM Microcontrollers

- i.MX Processors

- Identification and Security

- Model-Based Design Toolbox (MBDT)

- QorIQ Processing Platforms

- S32 Automotive Processing Platform

- Wireless Connectivity

- CodeWarrior

- MCUXpresso Suite of Software and Tools

- MQX Software Solutions

- RFID / NFC

- Advanced Analog

-

- NXP Tech Blogs

- Home

- :

- Model-Based Design Toolbox (MBDT)

- :

- NXP Model-Based Design Tools Knowledge Base

- :

- Deploying Deep Learning Network to NXP i.MX RT MCUs

Deploying Deep Learning Network to NXP i.MX RT MCUs

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Deploying Deep Learning Network to NXP i.MX RT MCUs

Deploying Deep Learning Network to NXP i.MX RT MCUs

Introduction

With the latest advances in microcontrollers, they are getting faster and more efficient, being able to successfully run complex algorithms in a reasonable time. One important category are Artificial Intelligence algorithms. Using both NXP® and MathWorks® ecosystem, the steps to deploy an AI algorithm to NXP hardware are simplified and straight forward.

The article provides guidance on how to implement a State-of-Charge (SoC) estimation algorithm based on a feedforward deep learning network developed by Mathworks' experts (Battery State of Charge Estimation in Simulink Using Deep Learning Network). The algorithm is then deployed on i.MX RT1060 Evaluation Kit using NXP Model Based Design Toolbox for I.MX RT.

As the main objective of the article is to demonstrate how to run an AI algorithm on NXP evaluation board, the example is ran in Processor-in-the-Loop (PIL) simulation mode. This type of simulation represents an important step in the validation process of an algorithm, corner cases can be easily replicated as the input data can be directly loaded from MATLAB's workspace. The execution of the algorithm is done on the microcontroller. For a more detailed overview of the execution of the algorithm, code profiling options can be enabled to generate a report which details execution times.

What BMS is

Battery Management System (BMS) is a critical component in battery-driven devices, such as electric vehicles. Their main objective is to ensure that the battery pack remains in an optimal and safe operating mode. At the core of the most tasks, the BMS must compute the State-of-Charge (SoC) estimation. To make a precise estimation, the algorithm requires an accurate model of the actual cells, which are difficult to characterize. An alternative to this approach is to create data driven models of the cell using AI methods such as neural networks.

Deep Learning Toolbox

The Deep Learning Toolbox™ developed by Mathworks provides a framework for deep neural networks to be used in algorithms. It enables the user to use convolutional neural networks (ConvNets, CNNs) and long short-term memory (LSTM) networks to perform classification and regression on image, time-series and text data.

The network and layer graphs are not mandatory to be created in MathWorks ecosystem, as other frameworks can be used, such as TensorFlow™ 2, TensorFlow-Keras, PyTorch®.

Prerequisite Software

To create, build and deploy a Simulink Model onto the i.MX RT RT1060 EVK, the following software is required:

Prerequisite hardware

The hardware required for this example is i.MX RT1060 Evaluation Kit. The i.MX RT1060 crossover MCUs are part of the EdgeVerse™ edge computing platform. The core of the MCU is a Arm® Cortex®-M7 core at 600 MHz. The device is fully supported by NXP’s MCUXpresso Software and Tools, a comprehensive and cohesive set of free software development tools.

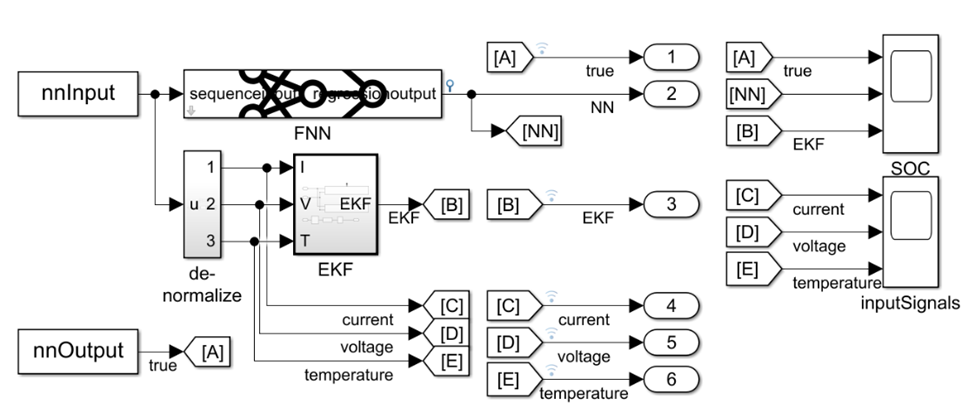

Model - Overview

The BatterySOCSimulinkEstimation model included in the Deep Learning Toolbox computes the SoC estimation using two methods: first method uses a neural network and the second one uses the extended Kalman filter algorithm. By plotting data generated by these two estimations and comparing it to the true values, it is possible to validate that the FNN predicts the SoC with an accuracy of 3 within a temperature range between -10 C and 25 C.

Note! Before making any modification to the model included in the toolbox, it is recommended to create a backup of the example in order to be able to revert to the original state.

For this example, the predictions are done on the i.MX RT1060 evaluation board in PIL mode while the Kalman filter is locally computed on the computer.

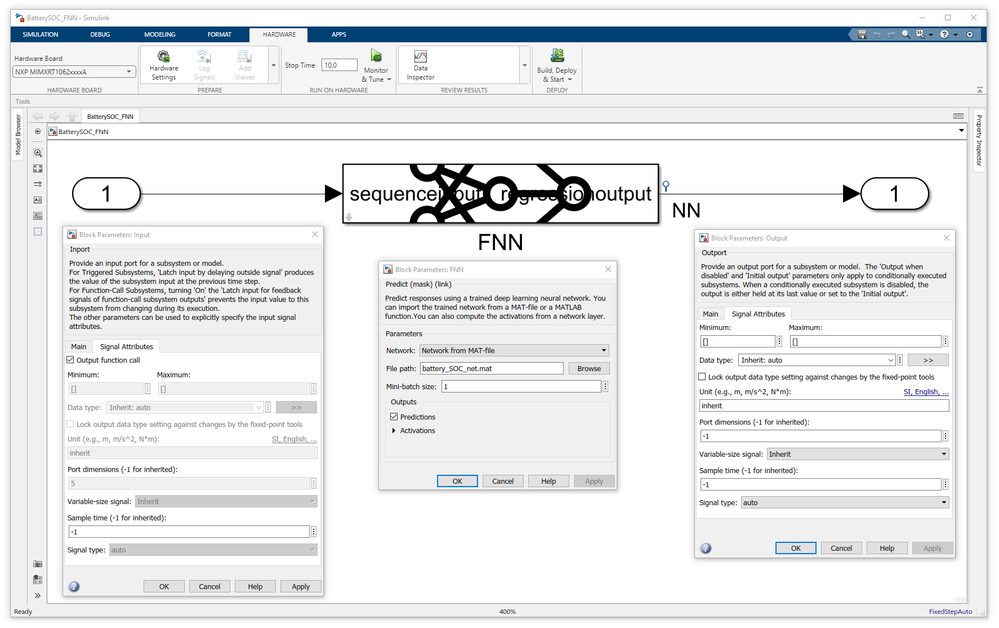

Referenced Model

From the original model, the FNN block must be added in a new blank model. As the newly created model is used in a Referenced model, an input port must be added to received data (make sure the Port dimensions is set to 5), and one output port to return the data computed. The other settings for all these 3 blocks can be left default.

Next, in the Model Settings the following changes must be made:

- Hardware Implementation

- Hardware Board: NXP MIMXRT1062xxxxA

- Target Hardware Resources

- Download

- Type: OpenSDA

- OpenSDA drive: Click on browse and select the partition assigned to the IMXRT1060

- PIL

- Communication interface: Serial Interface

- Hardware UART: LPUART1

- Serial port: The COM port assigned to the board (it can be found by using Device Manager or by running serialportlist command in MATLAB Command Window)

- Baudrate: 115200

- Download

- Code generation

- Verification

- Check Enable portable word sizes

- Verification

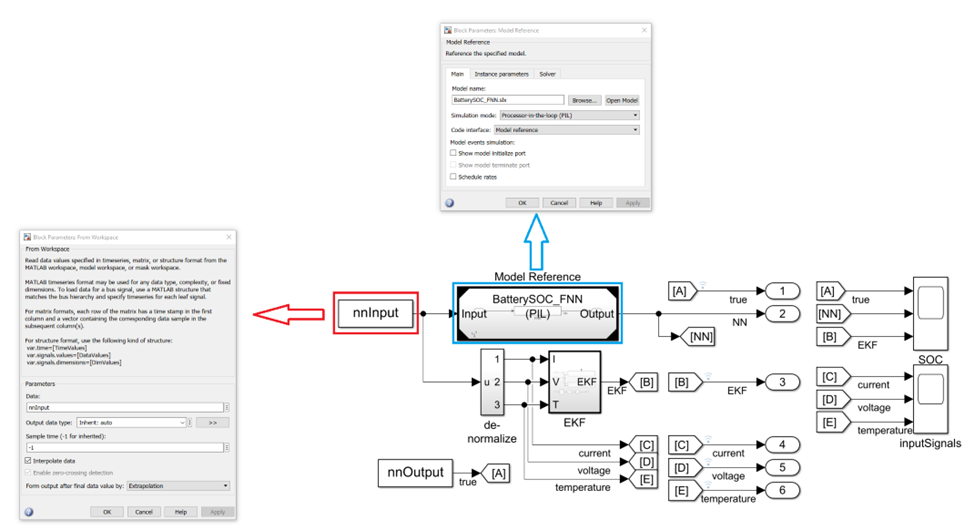

Top model

Based on the BatterySOCSimulinkEstimation model included in the Deep Learning Toolbox, the FNN block must be removed (either deleted or commented). A ModelReference subsystem must be added to the model. In the Block Parameters of the ModelReference subsystem, select the model created and configured above. Simulation Mode must be set to Processor-in-the-Loop (PIL). Another modification that must be done is the sample time of the nnInput Data Read Memory block which must be changed from 0 (continuous) to -1 (inherited).

Next, in the Model Settings the following changes must be made:

- Hardware Implementation

- Hardware Board: NXP MIMXRT1062xxxxA

- Target Hardware Resources

- Download

- Type: OpenSDA

- OpenSDA drive: Click on browse and select the partition assigned to the IMXRT1060

- PIL

- Communication interface: Serial Interface

- Hardware UART: LPUART1

- Serial port: The COM port assigned to the board (it can be found by using Device Manager or by running serialportlist command in MATLAB Command Window)

- Baudrate: 115200

- Download

- Code generation

- Verification

- Check Enable portable word sizes

- Verification

Deployment and validation

Now that both models (top model and referenced model) are configured, SIL/PIL manager can be opened from the APPS tab in Simulink. In the SIL/PIL tab, the simulation must be selected to SIL/PIL only (red rectangle) and the System Under Test to Model Blocks in SIL/PIL mode (blue rectangle).

Before the simulation is started, the BatterySOCSimulinkEstimation_ini.m script must be executed to load the necessary data into MATLAB's workspace. The script can be found next to the Simulink Model included in the Deep Learning Toolbox. From the top model, the SOC scope can be opened to display the generated data. The simulation can be started from the RUN button within the scope.

Note! Diagnostic Viewer can provide important information if there are any errors within the models.

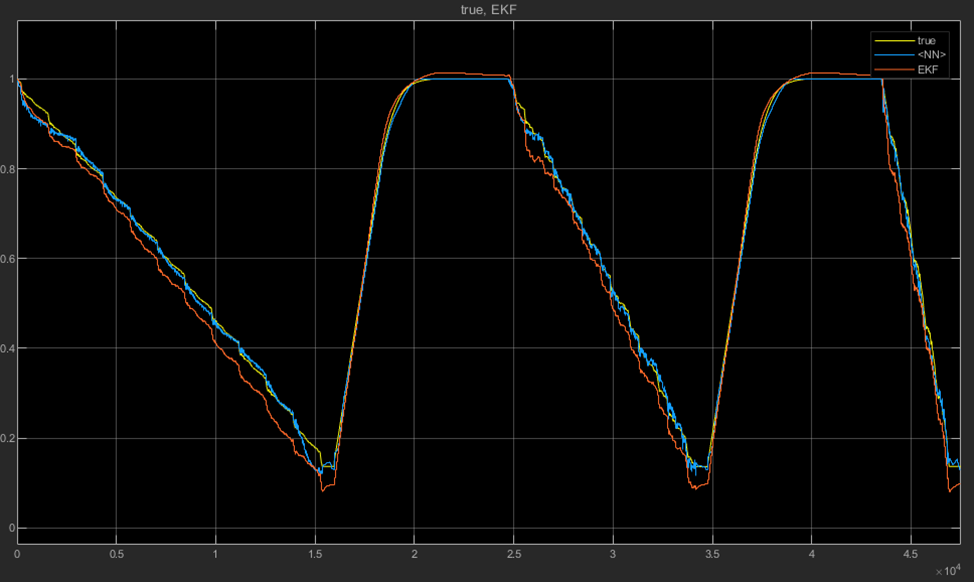

If the simulation is successfully deployed on the target, the data plotted into the scope should look like this:

Code profiling

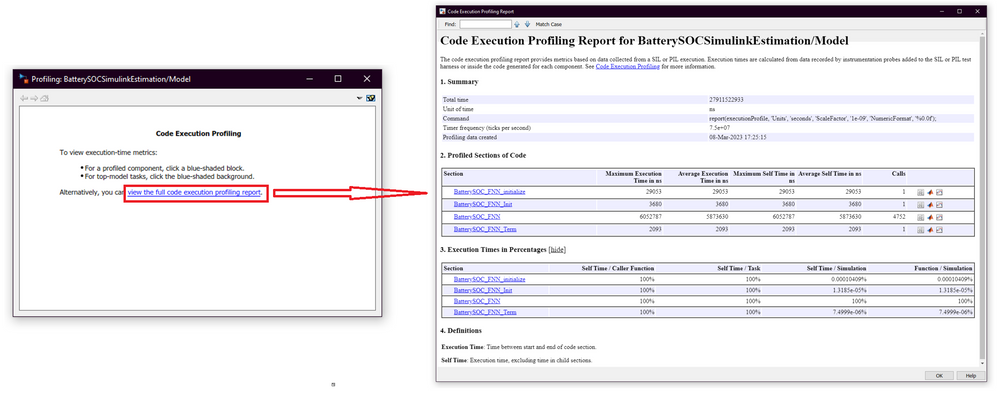

Code profiling is an important tool to validate an algorithm as it provides important information about the execution time. The time is measured by the timer configured in Model Settings -> Hardware Implementation -> Hardware board settings -> Target hardware resources -> Profiling Timers. By default, the PIT timer, channel 0, is used.

The Code generation can be enabled from Model Settings -> Code Generation -> Verification -> Code execution time profiling -> Measure task execution time. The generated report can either be Coarse (referenced models and subsystems only) or Detailed (all function call sites).

When the simulation is completed, a small window is opened. The Profiling report can be opened by clicking on the view the full code execution profiling report.

Conclusion

The NXP and Mathworks ecosystems enable the users to deploy an Artificial Intelligence algorithm onto the NXP hardware.

In the end, I strongly recommend the users interested in BMS and Artificial Intelligence to watch the Deploying a Deep Learning-Based State-Of-Charge (SOC) Estimation Algorithm to NXP S32K3 Microcontrol... webinar hosted by Javier Gazzarri (MathWorks) and Marius Andrei (NXP).

EdgeVerse and NXP are trademarks of NXP B.V. All other product or service names are the property of their respective owners. © 2023 NXP B.V. Arm, Cortex are trademarks and/or registered trademarks of Arm Limited (or its subsidiaries or affiliates) in the US and/or elsewhere. The related technology may be protected by any or all of patents, copyrights, designs and trade secrets. All rights reserved. PyTorch, the PyTorch logo and any related marks are trademarks of The Linux Foundation. MATLAB, Simulink, Stateflow and Embedded Coder are registered trademarks and MATLAB Coder, Simulink Coder, Deep Learning Toolbox are trademarks of The MathWorks, Inc. See mathworks.com/trademarks for a list of additional trademarks. TensorFlow, the TensorFlow logo and any related marks are trademarks of Google Inc.