Bring up Marvell 88E1543 on LX2160ARDB_REV2


Hi NXP,

We use SerDes 1 protocol 0x7 and SerDes 2 protocol 0xc, with two Marvell 88E1543 PHYs connected over SGMII.

Here is my device tree for the SGMII ports:

```dts
&dpmac7 {
	status = "okay";
	phy-handle = <&mdio2_phy1>;
	phy-connection-type = "sgmii";
};

&dpmac8 {
	status = "okay";
	phy-handle = <&mdio2_phy2>;
	phy-connection-type = "sgmii";
};

&dpmac9 {
	status = "okay";
	phy-handle = <&mdio2_phy3>;
	phy-connection-type = "sgmii";
};

&dpmac10 {
	status = "okay";
	phy-handle = <&mdio2_phy4>;
	phy-connection-type = "sgmii";
};

&dpmac11 {
	status = "okay";
	phy-handle = <&mdio2_phy5>;
	phy-connection-type = "sgmii";
};

&dpmac12 {
	status = "okay";
	phy-handle = <&mdio2_phy6>;
	phy-connection-type = "sgmii";
};

&dpmac17 {
	status = "okay";
	phy-handle = <&mdio2_phy7>;
	phy-connection-type = "sgmii";
};

&dpmac18 {
	status = "okay";
	phy-handle = <&mdio2_phy8>;
	phy-connection-type = "sgmii";
};

&emdio2 {
	status = "okay";

	mdio2_phy1: ethernet-phy@4 {
		reg = <0x4>;
	};

	mdio2_phy2: ethernet-phy@5 {
		reg = <0x5>;
	};

	mdio2_phy3: ethernet-phy@6 {
		reg = <0x6>;
	};

	mdio2_phy4: ethernet-phy@7 {
		reg = <0x7>;
	};

	mdio2_phy5: ethernet-phy@c {
		reg = <0xc>;
	};

	mdio2_phy6: ethernet-phy@d {
		reg = <0xd>;
	};

	mdio2_phy7: ethernet-phy@e {
		reg = <0xe>;
	};

	mdio2_phy8: ethernet-phy@f {
		reg = <0xf>;
	};
};
```

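I enabled the Marvell PHY driver in U-Boot and added support for the 88E1543:

```c
/* New 88E1543 entry, reusing the existing Marvell helper routines */
static struct phy_driver M88E1543_driver = {
	.name = "Marvell 88E1543",
	.uid = 0x1410ea2,               /* PHY ID from registers 2 and 3 */
	.mask = 0xffffff0,              /* ignore the 4-bit revision field */
	.features = PHY_GBIT_FEATURES,
	.config = &m88e151x_config,     /* 88E151x configuration routine */
	.startup = &m88e1011s_startup,
	.shutdown = &genphy_shutdown,
	.readext = &m88e1xxx_phy_extread,
	.writeext = &m88e1xxx_phy_extwrite,
};
```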

`mdio list` in U-Boot shows all eight PHYs:

```
=> mdio list
mdio@8b96000:
mdio@8b97000:
4 - Marvell 88E1543 <--> DPMAC7@sgmii
5 - Marvell 88E1543 <--> DPMAC8@sgmii
6 - Marvell 88E1543 <--> DPMAC9@sgmii
7 - Marvell 88E1543 <--> DPMAC10@sgmii
c - Marvell 88E1543 <--> DPMAC11@sgmii
d - Marvell 88E1543 <--> DPMAC12@sgmii
e - Marvell 88E1543 <--> DPMAC17@sgmii
f - Marvell 88E1543 <--> DPMAC18@sgmii
```

When I try to ping through one of them, this error occurs:

```
Error: MC command failed (portal: 000000080c010000, obj handle: 0x27e, command: 0x1681, status: 0x7)
dprc_disconnect() failed dpmac_endpoint
```



Your console log cannot be opened; please attach it again if necessary.

Have you deployed the MC firmware and DPC images on your target board?

Have you applied the DPL file before booting the Linux kernel?

Have you modified the DPC and DPL files for your custom board?

In LSDK 21.08, you can run `flex-builder -c mc_utils` and then find the DPC and DPL source files in components/firmware/mc_utils/config/lx2160a/.


I have verified your DPL file on the LX2160ARDB demo board and got a boot log similar to yours:

```
[    7.510687] fsl_dpaa2_eth dpni.11 (unnamed net_device) (uninitialized): PHY [0x0000000008b96000:02] driver [Qualcomm Atheros AR8035] (irq=POLL)
[    7.524648] fsl_dpaa2_eth dpni.11: Probed interface eth1
[    7.774684] fsl_dpaa2_eth dpni.10 (unnamed net_device) (uninitialized): PHY [0x0000000008b96000:01] driver [Qualcomm Atheros AR8035] (irq=POLL)
```

After booting into Linux, I can ping the server successfully through dpmac.17:

```
root@TinyLinux:~# ls-listni
dprc.1/dpni.11 (interface: eth1, end point: dpmac.18)
dprc.1/dpni.10 (interface: eth2, end point: dpmac.17)
dprc.1/dpni.9 (interface: eth3)
dprc.1/dpni.8 (interface: eth4)
dprc.1/dpni.7 (interface: eth5)
dprc.1/dpni.6 (interface: eth6)
dprc.1/dpni.5 (interface: eth7)
dprc.1/dpni.4 (interface: eth8)
dprc.1/dpni.3 (interface: eth9, end point: dpmac.6)
dprc.1/dpni.2 (interface: eth10, end point: dpmac.5)
dprc.1/dpni.1 (interface: eth11, end point: dpmac.4)
dprc.1/dpni.0 (interface: eth12, end point: dpmac.3)
root@TinyLinux:~# ifconfig eth2 100.1.1.100
root@TinyLinux:~# ping 100.1.1.1
PING 100.1.1.1 (100.1.1.1): 56 data bytes
64 bytes from 100.1.1.1: seq=0 ttl=64 time=0.509 ms
64 bytes from 100.1.1.1: seq=1 ttl=64 time=0.257 ms
64 bytes from 100.1.1.1: seq=2 ttl=64 time=0.234 ms
64 bytes from 100.1.1.1: seq=3 ttl=64 time=0.216 ms
64 bytes from 100.1.1.1: seq=4 ttl=64 time=0.261 ms
```

So your DPL file appears to work. Would you please try pinging through dpmac.17 on your target board?

If that fails, please check whether ping works through dpmac.17 in U-Boot.


I have tested in both U-Boot and Linux, and both fail.

U-Boot:

```
=> ping 10.0.253.1
Error: MC command failed (portal: 000000080c010000, obj handle: 0x27e, command: 0x1671, status: 0x6)
dprc_connect() failed
```

Linux: even though the link comes up (only on DPMAC18@sgmii), I still can't ping out:

```
Settings for eth0:
	Supported ports: [ TP MII ]
	Supported link modes:   10baseT/Full
	                        100baseT/Full
	                        1000baseT/Full
	Supported pause frame use: Symmetric Receive-only
	Supports auto-negotiation: Yes
	Supported FEC modes: Not reported
	Advertised link modes:  10baseT/Full
	                        100baseT/Full
	                        1000baseT/Full
	Advertised pause frame use: Symmetric Receive-only
	Advertised auto-negotiation: Yes
	Advertised FEC modes: Not reported
	Link partner advertised link modes:  10baseT/Half 10baseT/Full
	                                     100baseT/Half 100baseT/Full
	                                     1000baseT/Full
	Link partner advertised pause frame use: Symmetric Receive-only
	Link partner advertised auto-negotiation: Yes
	Link partner advertised FEC modes: Not reported
	Speed: 1000Mb/s
	Duplex: Full
	Port: Twisted Pair
	PHYAD: 15
	Transceiver: internal
	Auto-negotiation: on
	MDI-X: Unknown
	Link detected: yes
root@localhost:~# ping 10.0.253.1
PING 10.0.253.1 (10.0.253.1) 56(84) bytes of data.
From 10.0.253.2 icmp_seq=1 Destination Host Unreachable
From 10.0.253.2 icmp_seq=2 Destination Host Unreachable
From 10.0.253.2 icmp_seq=3 Destination Host Unreachable
From 10.0.253.2 icmp_seq=4 Destination Host Unreachable
From 10.0.253.2 icmp_seq=5 Destination Host Unreachable
From 10.0.253.2 icmp_seq=6 Destination Host Unreachable
```


Please refer to the Marvell 88E1543 PHY driver patches posted to the barebox mailing list:

http://lists.infradead.org/pipermail/barebox/2014-November/021362.html

http://lists.infradead.org/pipermail/barebox/2014-November/021363.html


Hi,

I have used that driver, but it doesn't solve the issue:

```
=> setenv ethact DPMAC7@sgmii
=> setenv ipaddr 10.0.253.2
=> ping 10.0.253.1
DPMAC7@sgmii Waiting for PHY auto negotiation to complete................................ TIMEOUT !
Using DPMAC7@sgmii
ARP Retry count exceeded; starting again
Error: MC command failed (portal: 000000080c010000, obj handle: 0x27e, command: 0x1681, status: 0x7)
dprc_disconnect() failed dpmac_endpoint
ping failed; host 10.0.253.1 is not alive
=> ping 10.0.253.1
DPMAC7@sgmii Waiting for PHY auto negotiation to complete................................ TIMEOUT !
Error: MC command failed (portal: 000000080c010000, obj handle: 0x27e, command: 0x1671, status: 0x6)
dprc_connect() failed
```

How can I solve the "MC command failed" error?

Is something wrong with my DPC or DPL configuration?


Would you please try to ping using DPMAC18@sgmii?

In addition, please read the DPMAC18@sgmii PHY status register (register 1) in U-Boot to check the link status:

```
=> mdio read DPMAC18@sgmii 1
```

I didn't find any problem in your DPC and DPL files.


```
=> mdio read DPMAC18@sgmii 1
Reading from bus mdio@8b97000
PHY at address f:
1 - 0x796d
=> mii dump 0xf 0x1
1.     (796d)                 -- PHY status register --
  (8000:0000) 1.15 =     0    100BASE-T4 able
  (4000:4000) 1.14 =     1    100BASE-X  full duplex able
  (2000:2000) 1.13 =     1    100BASE-X  half duplex able
  (1000:1000) 1.12 =     1    10 Mbps    full duplex able
  (0800:0800) 1.11 =     1    10 Mbps    half duplex able
  (0400:0000) 1.10 =     0    100BASE-T2 full duplex able
  (0200:0000) 1. 9 =     0    100BASE-T2 half duplex able
  (0100:0100) 1. 8 =     1    extended status
  (0080:0000) 1. 7 =     0    (reserved)
  (0040:0040) 1. 6 =     1    MF preamble suppression
  (0020:0020) 1. 5 =     1    A/N complete
  (0010:0000) 1. 4 =     0    remote fault
  (0008:0008) 1. 3 =     1    A/N able
  (0004:0004) 1. 2 =     1    link status
  (0002:0000) 1. 1 =     0    jabber detect
  (0001:0001) 1. 0 =     1    extended capabilities
```

```
=> ping 10.0.253.1
Error: MC command failed (portal: 000000080c010000, obj handle: 0x27e, command: 0x1671, status: 0x6)
dprc_connect() failed
ldpaa_dpmac_bind, DPMAC Type= dpmac
ldpaa_dpmac_bind, DPMAC ID= 3
ldpaa_dpmac_bind, DPMAC State= 0
ldpaa_dpmac_bind, DPNI Type=
ldpaa_dpmac_bind, DPNI ID= 0
ldpaa_dpmac_bind, DPNI State= -1
DPMAC link status: 1 - up
DPNI link status: 0 - down
Using DPMAC18@sgmii device
ARP Retry count exceeded; starting again
DPNI counters ..
DPNI_CNT_ING_ALL_FRAMES= 0
DPNI_CNT_ING_ALL_BYTES= 0
DPNI_CNT_ING_MCAST_FRAMES= 0
DPNI_CNT_ING_MCAST_BYTES= 0
DPNI_CNT_ING_BCAST_FRAMES= 0
DPNI_CNT_ING_BCAST_BYTES= 0
DPNI_CNT_EGR_ALL_FRAMES= 0
DPNI_CNT_EGR_ALL_BYTES= 0
DPNI_CNT_EGR_MCAST_FRAMES= 0
DPNI_CNT_EGR_MCAST_BYTES= 0
DPNI_CNT_EGR_BCAST_FRAMES= 0
DPNI_CNT_EGR_BCAST_BYTES= 0
DPNI_CNT_ING_FILTERED_FRAMES= 0
DPNI_CNT_ING_DISCARDED_FRAMES= 0
DPNI_CNT_ING_NOBUFFER_DISCARDS= 0
DPNI_CNT_EGR_DISCARDED_FRAMES= 0
DPNI_CNT_EGR_CNF_FRAMES= 0
DPMAC counters ..
DPMAC_CNT_ING_BYTE=15
DPMAC_CNT_ING_FRAME_DISCARD=9
DPMAC_CNT_ING_ALIGN_ERR =10
DPMAC_CNT_ING_BYTE=15
DPMAC_CNT_ING_ERR_FRAME=20
DPMAC_CNT_EGR_BYTE =21
DPMAC_CNT_EGR_ERR_FRAME =25
Error: MC command failed (portal: 000000080c010000, obj handle: 0x27e, command: 0x1681, status: 0x8)
dprc_disconnect() failed dpmac_endpoint
ping failed; host 10.0.253.1 is not alive
```

It looks like the DPNI isn't working.


I need to discuss this with the expert team.


Hi,

Sorry, I posted the wrong log earlier. The correct log is:

```
ldpaa_dpmac_bind, DPMAC Type= dpmac
ldpaa_dpmac_bind, DPMAC ID= 17
ldpaa_dpmac_bind, DPMAC State= 0
ldpaa_dpmac_bind, DPNI Type= dpni
ldpaa_dpmac_bind, DPNI ID= 0
ldpaa_dpmac_bind, DPNI State= 0
DPMAC link status: 1 - up
DPNI link status: 1 - up
Using DPMAC17@sgmii device
ARP Retry count exceeded; starting again
DPNI counters ..
DPNI_CNT_ING_ALL_FRAMES= 0
DPNI_CNT_ING_ALL_BYTES= 0
DPNI_CNT_ING_MCAST_FRAMES= 0
DPNI_CNT_ING_MCAST_BYTES= 0
DPNI_CNT_ING_BCAST_FRAMES= 0
DPNI_CNT_ING_BCAST_BYTES= 0
DPNI_CNT_EGR_ALL_FRAMES= 0
DPNI_CNT_EGR_ALL_BYTES= 0
DPNI_CNT_EGR_MCAST_FRAMES= 0
DPNI_CNT_EGR_MCAST_BYTES= 0
DPNI_CNT_EGR_BCAST_FRAMES= 0
DPNI_CNT_EGR_BCAST_BYTES= 0
DPNI_CNT_ING_FILTERED_FRAMES= 0
DPNI_CNT_ING_DISCARDED_FRAMES= 0
DPNI_CNT_ING_NOBUFFER_DISCARDS= 0
DPNI_CNT_EGR_DISCARDED_FRAMES= 0
DPNI_CNT_EGR_CNF_FRAMES= 0
DPMAC counters ..
DPMAC_CNT_ING_BYTE=15
DPMAC_CNT_ING_FRAME_DISCARD=9
DPMAC_CNT_ING_ALIGN_ERR =10
DPMAC_CNT_ING_BYTE=15
DPMAC_CNT_ING_ERR_FRAME=20
DPMAC_CNT_EGR_BYTE =21
DPMAC_CNT_EGR_ERR_FRAME =25
Error: MC command failed (portal: 000000080c010000, obj handle: 0x27e, command: 0x1681, status: 0x7)
dprc_disconnect() failed dpmac_endpoint
ping failed; host 10.0.253.1 is not alive
```

We have two 88E1543 PHYs on our board, configured in SGMII-to-copper mode (4 ports each).

After testing, I found that only two ports can ping successfully:

- SerDes 1 (SGMII): none of the ports can ping (DPMAC7 to DPMAC10).
- SerDes 2 (SGMII): two ports can ping and get an address via DHCP (DPMAC11, DPMAC12); DPMAC17 and DPMAC18 fail.

Here is the log of a successful ping:

```
ldpaa_dpmac_bind, DPMAC Type= dpmac
ldpaa_dpmac_bind, DPMAC ID= 11
ldpaa_dpmac_bind, DPMAC State= 0
ldpaa_dpmac_bind, DPNI Type= dpni
ldpaa_dpmac_bind, DPNI ID= 0
ldpaa_dpmac_bind, DPNI State= 0
DPMAC link status: 1 - up
DPNI link status: 1 - up
Using DPMAC11@sgmii device
DPNI counters ..
DPNI_CNT_ING_ALL_FRAMES= 2
DPNI_CNT_ING_ALL_BYTES= 120
DPNI_CNT_ING_MCAST_FRAMES= 0
DPNI_CNT_ING_MCAST_BYTES= 0
DPNI_CNT_ING_BCAST_FRAMES= 0
DPNI_CNT_ING_BCAST_BYTES= 0
DPNI_CNT_EGR_ALL_FRAMES= 2
DPNI_CNT_EGR_ALL_BYTES= 84
DPNI_CNT_EGR_MCAST_FRAMES= 0
DPNI_CNT_EGR_MCAST_BYTES= 0
DPNI_CNT_EGR_BCAST_FRAMES= 1
DPNI_CNT_EGR_BCAST_BYTES= 42
DPNI_CNT_ING_FILTERED_FRAMES= 0
DPNI_CNT_ING_DISCARDED_FRAMES= 0
DPNI_CNT_ING_NOBUFFER_DISCARDS= 0
DPNI_CNT_EGR_DISCARDED_FRAMES= 0
DPNI_CNT_EGR_CNF_FRAMES= 0
DPMAC counters ..
DPMAC_CNT_ING_BYTE=15
DPMAC_CNT_ING_FRAME_DISCARD=11
DPMAC_CNT_ING_ALIGN_ERR =10
DPMAC_CNT_ING_BYTE=15
DPMAC_CNT_ING_ERR_FRAME=22
DPMAC_CNT_EGR_BYTE =23
DPMAC_CNT_EGR_ERR_FRAME =27
host 10.0.253.1 is alive
```


Please refer to the following suggestion from the expert team:

Try setting EC1_PMUX and EC2_PMUX in the RCW to non-zero values in order to disable RGMII mode on MAC17/MAC18.


Hi,

This is solved, thanks. I have also found the root cause.

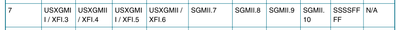

This is the setting we use, but unfortunately its PLL mapping does not match our board.

Our PLLF is 156.25 MHz and our PLLS is 100 MHz.

XFI (the subject of a separate issue) and USXGMII should use PLLF, and SGMII should use PLLS.

But in this option the mapping is reversed. I tested a setting in which the SGMII interfaces use PLLS, and with it the PHY on SerDes 1 pings the network successfully in U-Boot.

So how can I configure the PLL mapping of protocol option 7 as FFFFSSSS? Thanks.