LPC4357, hard fault after reset from application


I found out that my LPC4357-based device (which uses LPCOpen 2.12 and no OS) sometimes ends up in a hard fault after reset. Once it runs, it runs fine and does not crash.

The reset is requested by an external device via communication. Communication is handled by the M0 core, which resets the whole MCU using Chip_RGU_TriggerReset(RGU_CORE_RST).

It takes anywhere from a few to several hundred restarts to reproduce the issue.

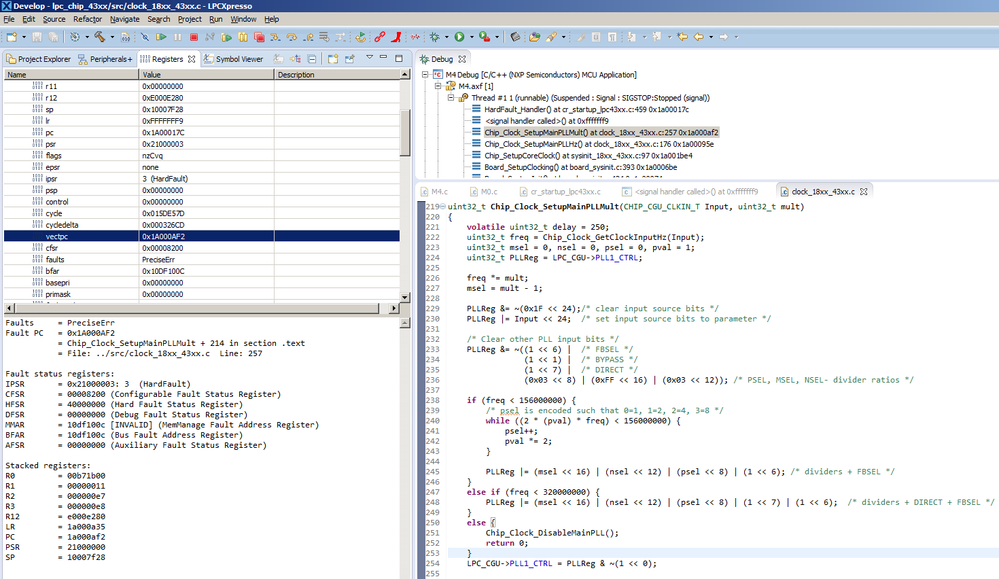

The M4 core ends up in hard fault. The problem happens during setting of clock.

When I repeatedly reset from the user application and connect through JTAG after the crash, I can't get any relevant information from the VECTPC register. If I reset it through the LPCXpresso "Restart" button in an active debug session over JTAG, it shows where the hard fault handler was called.

For this reason I used the restart from LPCXpresso, although I am not 100% sure the behavior is identical.

At the beginning I traced it to the call of Chip_Clock_SetupMainPLLMult():

I thought that the PLL needed more time to stabilize, so I increased the delay.

It actually helped, but eventually it crashed again.

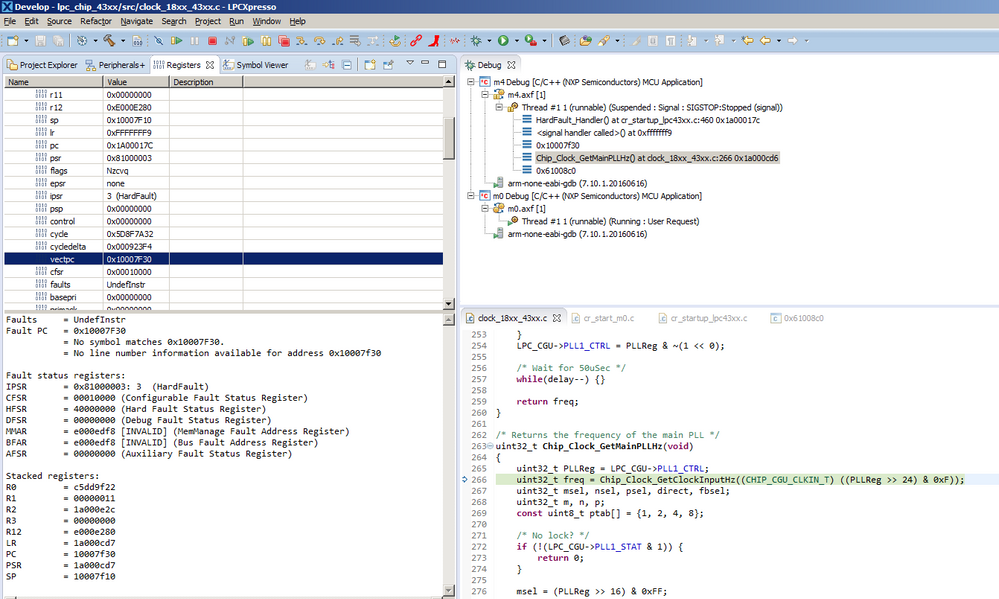

This time it was in the call of Chip_Clock_GetMainPLLHz()

I normally debug at the code level and do not have experience at the instruction level.

Does anybody have any idea how to solve this problem?

I found a thread which describes a similar problem, just with the M0 core...


Can you look at the function "Chip_Clock_EnableCrystal" (assuming you are using a crystal)?

The "stock" value of 1000 is often not enough for many boards. I just powered up an Embedded Artists 4357 board and would randomly get hard faults at boot. I don't think the crystal has stabilized before the PLL kicks in.

I remember having this issue way back in 2013 and forgot that I keep a special version of the chip libraries. On my board, I set it obscenely large (1000000) and never have startup issues.


Hello,

My name is Sabina and I will help you find the root cause of this behavior. To begin with, I'd like to make it clear that these types of errors are complicated to resolve, especially when they are not consistent. As you mentioned, it takes anywhere from several to hundreds of resets to replicate.

I'll guide you through some key points to check in the code and ask for additional information to narrow down the root cause, so I thank you in advance for your patience.

The version of LPCOpen for the LPC4357 that I currently have is 2.19, so my functions do not match yours line by line.

First, I notice that the hard fault in your first image is at line 257 of that function. Can you provide the whole Chip_Clock_SetupMainPLLMult function so I can see what occurs on that line? In addition, can you confirm whether it stops at this same line if you decrease the delay to its original value? If not, please provide the line where it stops.

Next, you mention that increasing the delay seemed to help, but it now stops at Chip_Clock_GetMainPLLHz. Can you confirm whether, with the increased delay, it consistently stops there?

As you mention, it seems to have something to do with the clock. Can you try the following and report the results:

1. Try calling Chip_RGU_TriggerReset(RGU_CREG_RST) before Chip_RGU_TriggerReset(RGU_CORE_RST). The core reset only resets the registers partly, so this forces all of them to reset, and we can verify whether the problem has to do with the RTC.

2. If the above has no effect, please also try disabling the PLL before resetting the core. It is possible that at some point it is being corrupted and prevents you from effectively accessing the core.

Please let me know the results of the above.

Best Regards,

Sabina


Hello Sabina,

thank you for your reply.

I would call the behavior rather consistent.

When I reset from within the application by calling Chip_RGU_TriggerReset(RGU_CORE_RST) and connect to the MCU after it stops resetting itself, it is sometimes in the NMI_Handler() and sometimes in the HardFault_Handler().

In this situation I do not see where the handler was called.

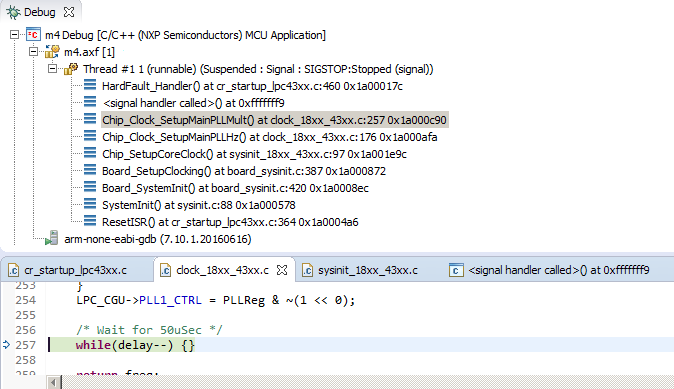

When I reset using the GUI restart button (clicked repeatedly with a click-script tool), it consistently happens on line 257 of clock_18xx_43xx.c.

My version of Chip_Clock_SetupMainPLLMult from LPCOpen 2.12:

    /* Directly set the PLL multipler */
    uint32_t Chip_Clock_SetupMainPLLMult(CHIP_CGU_CLKIN_T Input, uint32_t mult)
    {
        volatile uint32_t delay = 250;
        uint32_t freq = Chip_Clock_GetClockInputHz(Input);
        uint32_t msel = 0, nsel = 0, psel = 0, pval = 1;
        uint32_t PLLReg = LPC_CGU->PLL1_CTRL;

        freq *= mult;
        msel = mult - 1;

        PLLReg &= ~(0x1F << 24); /* clear input source bits */
        PLLReg |= Input << 24;   /* set input source bits to parameter */

        /* Clear other PLL input bits */
        PLLReg &= ~((1 << 6) |   /* FBSEL */
                    (1 << 1) |   /* BYPASS */
                    (1 << 7) |   /* DIRECT */
                    (0x03 << 8) | (0xFF << 16) | (0x03 << 12)); /* PSEL, MSEL, NSEL- divider ratios */

        if (freq < 156000000) {
            /* psel is encoded such that 0=1, 1=2, 2=4, 3=8 */
            while ((2 * (pval) * freq) < 156000000) {
                psel++;
                pval *= 2;
            }
            PLLReg |= (msel << 16) | (nsel << 12) | (psel << 8) | (1 << 6); /* dividers + FBSEL */
        }
        else if (freq < 320000000) {
            PLLReg |= (msel << 16) | (nsel << 12) | (psel << 8) | (1 << 7) | (1 << 6); /* dividers + DIRECT + FBSEL */
        }
        else {
            Chip_Clock_DisableMainPLL();
            return 0;
        }

        LPC_CGU->PLL1_CTRL = PLLReg & ~(1 << 0);

        /* Wait for 50uSec */
        while(delay--) {}

        return freq;
    }

When I change the value of delay from 250 to 1000, it starts to happen (less frequently) at a call of Chip_Clock_GetClockInputHz(), which is called within Chip_Clock_SetupMainPLLMult() and Chip_Clock_SetupMainPLLHz().

It can happen in either of these.

I assume that if I did not change the delay, it could still happen at the call of Chip_Clock_GetClockInputHz(). It is just more probable that it happens on line 257, so it happens there first.

I do not really understand why the debugger shows that the problem happens during the delay countdown. I would expect that it should happen after the delay decrements to 0 and the following instruction is processed.

- Calling Chip_RGU_TriggerReset(RGU_CREG_RST) unfortunately did not change the behavior; it still crashes at about the same rate. Of course I can test that only when I call the reset from the application, not from the GUI.

- How shall I disable the PLL without crashing the MCU? It provides the clock for the core and all peripherals. When I call Chip_Clock_DisableMainPLL(), it crashes.
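For anyone following along, the field packing done by the Chip_Clock_SetupMainPLLMult() listing above can be checked on a PC without touching the hardware. The following is only a sketch: the function name `pll1_ctrl_value` and its parameters are made up for illustration, the input frequency is passed in directly instead of being read through Chip_Clock_GetClockInputHz(), and the only things taken from the thread are the bit positions and the 156 MHz / 320 MHz thresholds in the quoted code.

```c
#include <assert.h>
#include <stdint.h>

/* Host-side model of the PLL1_CTRL packing in the quoted listing.
 * Hypothetical helper, not part of LPCOpen. Unlike the original, which
 * disables the PLL and returns 0 for freq >= 320 MHz, this model simply
 * asserts that the request is in range. */
uint32_t pll1_ctrl_value(uint32_t old_reg, uint32_t input_sel,
                         uint32_t input_hz, uint32_t mult)
{
    uint32_t freq = input_hz * mult;
    uint32_t msel = mult - 1, nsel = 0, psel = 0, pval = 1;
    uint32_t reg = old_reg;

    assert(mult >= 1u && input_sel <= 0x1Fu && freq < 320000000u);

    reg &= ~(0x1Fu << 24);          /* clear input source bits */
    reg |= input_sel << 24;         /* select the new input source */
    reg &= ~((1u << 6) | (1u << 1) | (1u << 7) |   /* FBSEL, BYPASS, DIRECT */
             (0x03u << 8) | (0xFFu << 16) | (0x03u << 12)); /* PSEL, MSEL, NSEL */

    if (freq < 156000000u) {
        /* psel is encoded such that 0=1, 1=2, 2=4, 3=8 */
        while ((2u * pval * freq) < 156000000u) {
            psel++;
            pval *= 2u;
        }
        reg |= (msel << 16) | (nsel << 12) | (psel << 8) | (1u << 6);
    } else {
        reg |= (msel << 16) | (nsel << 12) | (psel << 8) | (1u << 7) | (1u << 6);
    }
    return reg & ~(1u << 0);        /* bit 0 cleared, as in the listing */
}
```

With a 12 MHz input, `pll1_ctrl_value(0, 6, 12000000u, 9)` gives the word for 108 MHz and a multiplier of 17 gives the word for 204 MHz, which takes the DIRECT branch. The input selector 6 is an assumed value for the crystal input; check the CHIP_CGU_CLKIN_T enum in your LPCOpen version.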


Hello,

I wanted to follow up on your question. Have you been able to resolve this?

Do you have any new observations you can share after trying the recommendations provided by bernhardfink?

Also, can you confirm whether the while(delay--) loop on line 257 runs to completion, or does it crash before delay reaches 0? What is the value of delay when it goes into the hard fault?

Best Regards,

Sabina


Hello Sabina and Bernhard,

thank you both for your answers and suggestions.

I was on a business trip this week and haven't had the chance to continue the tests yet (in particular, I still need to learn how to do parts of what was suggested).

In the application (an industrial sensor) I use GPIO, SGPIO, Timers, EMC (external SDRAM), DMA, CAN, Ethernet.

I got rid of most of those for the test.

I will try further disabling the interrupts using

__disable_irq();

Can you please give me a hint how to switch "the clock tree from the PLL output to the 12MHz IRC"?

Is there a LPCopen function to do that?

The same question about the flash wait states...

All that runs before the crash is basically LPCopen and code derived from examples from Embedded Artists (which seem to be very similar to the Keil examples for the LPC4357).

My application code starts later.

I will try and see the value of the delay variable. Good idea.


Hello quex,

I apologize for the delayed response. There are step-by-step instructions on how to properly change the clock in

Chapter 13: LPC43xx/LPC43Sxx Clock Generation Unit (CGU).

Let me know if you have questions on the above, as well as any new observations you have made.

Best Regards,

Sabina


Hello Sabina,

thank you for the reply and I also apologize for the delay.

So far I have made the following tests:

- The value of the "delay" variable, which starts at 250, is usually 244 after the crash (in 5 tests it ended at 244 four times and at 240 once). I decreased the starting value to 220 and it again counted down only by 6 before the MCU ended in hard fault. The same happened when I increased the starting value to 260.

What is really strange is that when I increase the initial value to 300, the program does not crash on this line any more.

This is a mystery to me. Why should increasing the value help? It counts down only by 6 or 10 before the crash, so the actual value should not matter as long as it is larger than 10...

- I got rid of the interrupt sources on the M4 and disabled interrupts using "__disable_irq();" on the M0 immediately before the M0 executes the reset. Unfortunately that did not help.

- I tried switching the clock source of the M4 and M0 cores from PLL1 to the IRC using:

    LPC_CGU->BASE_CLK[CLK_BASE_MX] = CLKIN_IRC;
    LPC_CGU->BASE_CLK[CLK_BASE_PERIPH] = CLKIN_IRC;

executed by the M0.

(The user guide describes the steps for switching of the clock source to high frequency PLL1. If I understand it right, switching back to IRC should be simple, no waiting for a lock, no clock dividers)

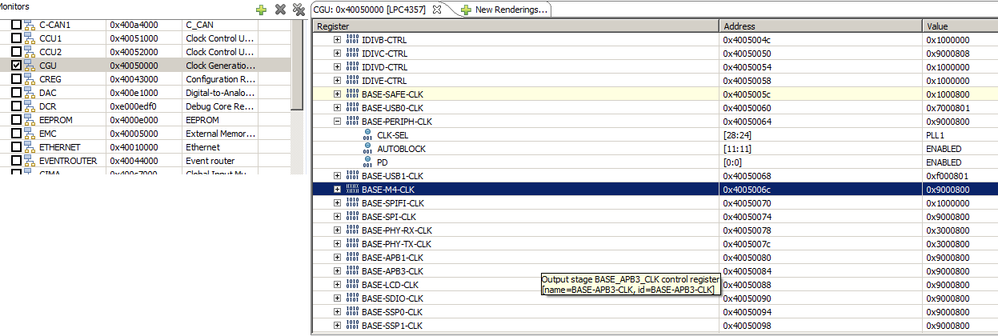

Unfortunately, when I stop the controller after executing that and look into the CGU registers, the core and peripheral clocks are still set to PLL1.

When I change all the BASE-XXX-CLK registers which have PLL1 in them to IRC through LPCXpresso while the MCU is at a breakpoint, the MCU crashes when I restart it and I lose the JTAG connection.


That's a fuzzy topic, so I think you need to try different things to find a solution.

Switching the clock to 12MHz IRC source:

    // Switch BASE_M4_CLOCK from whatever clock to IRC
    LPC_CGU->BASE_M4_CLK = (0x01 << 11) | (0x01 << 24); // Autoblock Enable, Set clock source to IRC

    LPC_CGU->PLL1_CTRL |= 1; // Disable PLL1
    LPC_CGU->PLL0USB_CTRL |= 1;
    LPC_CGU->PLL0AUDIO_CTRL |= 1;

    // Further preparations
    LPC_CREG->FLASHCFGA |= 0x0000F000; // Set maximum wait states for internal flash
    LPC_CREG->FLASHCFGB |= 0x0000F000;

This can be done on the fly, the switchover point between the high frequency and the 12MHz is controlled internally. Doing this under debugger control may not work (as you can see).
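The word written to BASE_M4_CLK above packs two fields: the clock source selector starting at bit 24 and the autoblock enable at bit 11. A small host-side sketch of that packing follows. The helper names `base_clk_value` and `base_clk_source` are invented for illustration, and the 5-bit width of the selector is an assumption based on the 0x1F mask used for the PLL1 input source elsewhere in this thread.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define CLK_SRC_IRC 0x01u   /* selector value used for the IRC in the snippet above */

/* Build a BASE_x_CLK word from a source selector and the autoblock flag.
 * Hypothetical helper for checking values on a PC, not an LPCOpen API. */
uint32_t base_clk_value(uint32_t clk_sel, bool autoblock)
{
    assert(clk_sel <= 0x1Fu);   /* assumed 5-bit selector field */
    return (clk_sel << 24) | ((autoblock ? 1u : 0u) << 11);
}

/* Extract the source selector back out of a BASE_x_CLK word. */
uint32_t base_clk_source(uint32_t reg)
{
    return (reg >> 24) & 0x1Fu;
}
```

This also shows why a plain selector value is not enough: if CLKIN_IRC is simply 1, then `base_clk_source(1u)` is 0, so writing the enum unshifted does not select the IRC, whereas `base_clk_value(CLK_SRC_IRC, true)` yields 0x01000800, the same word as in the snippet above. If I recall correctly, LPCOpen also has a Chip_Clock_SetBaseClock() helper that does this packing, but please check the clock header of your version before relying on it.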

What's really interesting is this point with delay = 300. I can't explain this. In the past I have seen strange effects from the compilers, sometimes with high optimization level O3 things were completely optimized away.

It's also very important where the code resides, when it's executed. A loop is sometimes so short that it completely fits into the buffer of the flash accelerator, you can call it cache. Sometimes I have surrounded such a delay by GPIO on/off commands to see with an oscilloscope how long the loop really is.

You should do a test without code optimization and maybe also with a different delay implementation:

    for (uint32_t d = 0; d < delay; d++)
    {
        __NOP(); // that's for ARM CC, this can't be optimized away
        __NOP();
    }

Before you write the reset bit, let the M4 core and the bus system finish everything:

    __ISB();
    __DSB();
    // Write reset bit

For MCUXpresso with the GCC compiler the instructions look this way:

    __asm volatile ("nop\n");
    __asm volatile ("isb\n");
    __asm volatile ("dsb\n");

If you perform the chip reset from the M0APP core, then you should stall the M4, maybe like this:

1) Issue an interrupt to the Cortex-M4 side

2) In the ISR on the M4 side perform the ISB() and the DSB() and then go into a while(1);

3) Wait long enough on the M0 side to let the M4 do this and then write on the CORE_RST reset register bit

In general you should carefully think about the things which can happen to the other side if you perform a system kill action from one core. The two cores execute asynchronously, but using the same bus resources. In principle it would be better to delegate this chip reset task to the M4 core, because you can keep the M0APP in reset state while you're working on the wind back and the CORE_RST.

Regards,

Bernhard.


Hello Bernhard,

thank you a lot for this reply, it gave me a valuable insight into what is going on in there.

I guess that your clock switch code is CMSIS compliant.

The LPC_CGU structure looks different in LPCopen, but I understand the procedure now.

One more question: is switching of the BASE_M4_CLK clock (CLK_BASE_MX in LPCopen) to IRC sufficient before disabling the PLL1?

What about the other clocks still having PLL1 as their clock source?

And you are right, at the moment the project compiles with -O3 optimization level.

I will try the behavior with different settings.

Letting the M4 perform the reset and stopping the M0 looks also quite sensible. I am going to try that. It will require some code changes though.

At the moment I have to take care of a more critical problem, but I will definitely post a follow-up on this once I finish the tests.

Thank you again.


>> What about the other clocks still having PLL1 as their clock source?

That's a generic issue when switching such a central clock source to another clock frequency or even off. What are the IP blocks doing with the other frequency or with even no frequency? Nothing or dreadful things?

You can solve this individually (which results in badly maintainable code) or the generic way (which includes overhead). This is what I call "wind back": you bring everything down to the level shortly after reset.

Best style for preparation of deep power down and software reset is: (also check chapter 12.2.4 in the UM)

- Re-base everything to 12MHz IRC

- When 12MHz is not possible (Ethernet, USB etc) disable the IP block and then disconnect it from the clock source

- Take care of the performance mode. If I remember correctly (I'm a little bit out of these MCU topics), there is a power API in LPCOpen using code in ROM, which controls the voltage levels of the internal regulators. If you are working on the lowest performance level and then you perform a reset or a wake-up from deep power down mode, then this low voltage level is taken over (it's in the CREG block, but not documented). The ROM bootcode will enable the PLL and switch it to 96MHz and then the chip crashes. So before you make a reset, check that this level is on "normal".

- Stall the second core

Regards,

Bernhard.


I finally got the time to focus on this problem again, sorry for the delay.

First I tried moving everything to the M4 core. The M4 core did not start the M0 core, the M0 stayed in reset.

Unfortunately it still was crashing at about the same rate. The sharing of resources between the cores was not causing the problem.

The next step was reducing the optimization level from (-O3) to (-O0). Yet it still crashed. Less often, but it always did sooner or later.

There are several steps during clock configuration after reset:

The main clock source after restart is the 12 MHz IRC.

The function Chip_SetupCoreClock() [lpc_chip_43xx\sysinit_18xx_43xx.c] first sets the PLL1 to 108 MHz and then configures the PLL1 as the main clock source.

In the next step it bumps its frequency up to 204 MHz by changing the clock multiplier from 9 to 17.

And this was the part of the code where it crashed.

I tried setting a lower frequency (180 MHz) and it stopped crashing.

I could not give up the full frequency, so I tried setting it first to an intermediate value of 180 MHz and then, after some delay, up to the full 204 MHz.

Long story short, it worked.

I assume that what Bernhard wrote about the internal voltage regulators could be a key to the problem. The sudden increase of energy consumption after the increase of frequency could perhaps occasionally cause the crash if the regulators would not react fast enough. Unfortunately I haven't found any way to control them. The power management only supports putting the core to deep sleep, power-down etc.

I consider the problem solved by this workaround, but not fully explained.
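The staged ramp described above (108 MHz, then 180 MHz, then 204 MHz from a 12 MHz input, with a pause after each step) could be sketched roughly like this. It is not my actual code: `pll_mult_for`, `ramp_pll` and the two callbacks are hypothetical names, and on the target the callbacks would wrap something like Chip_Clock_SetupMainPLLMult() and a delay.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Multiplier needed to reach target_hz from input_hz,
 * or 0 if the target is not an exact multiple of the input. */
uint32_t pll_mult_for(uint32_t input_hz, uint32_t target_hz)
{
    if (input_hz == 0u || (target_hz % input_hz) != 0u) {
        return 0u;
    }
    return target_hz / input_hz;
}

/* Walk through the target frequencies in order, letting the clock settle
 * after each step. set_mult and settle are hypothetical hooks and may be
 * NULL when only the plan is being checked on a host.
 * Returns the number of steps applied; stops at the first invalid target. */
size_t ramp_pll(uint32_t input_hz, const uint32_t *targets_hz, size_t n,
                void (*set_mult)(uint32_t mult), void (*settle)(void))
{
    size_t done = 0;
    for (size_t i = 0; i < n; i++) {
        uint32_t mult = pll_mult_for(input_hz, targets_hz[i]);
        if (mult == 0u) {
            break;
        }
        if (set_mult) {
            set_mult(mult);
        }
        if (settle) {
            settle();
        }
        done++;
    }
    return done;
}
```

For the frequencies in this post the multipliers come out as 9, 15 and 17. How long each settle step needs to be is something I only found by trial, so treat any fixed delay as board-specific.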

For higher optimization levels I had to replace the delay loop in the Chip_Clock_SetupMainPLLMult() function.

As @Bernhard Fink explained, the while(delay--) {} loop is not guaranteed to survive higher optimization levels. I had to replace it with his version:

    for (int d = 0; d < delay; d++) {
        __NOP(); // that's for ARM CC, this can't be optimized away
        __NOP();
    }

Bernhard, is there a more elegant way to handle the change of frequency? Could you please share a description of the power control API?


I have seen that the power API has changed since I was actively working on this MCU topic, so here is a description of the discrete settings you could make on the regulator VD1 for the core and the bus system in the LPC1800/4300:

    // Specify the voltage settings for VD1 (VDDCORE)
    // Default is 3, the other values may have side effects
    #define VALUE_VD1 3 // 7 = 1.4V 3 = 1.2V 2 = 1.0V

    uint32_t temp1;

    // Change voltage VD1/2/3 for test purposes
    // This register is in the CREG domain.
    // 7 = 1.4V 3 = 1.2V 2 = 1.0V 1 = Low Power 0 = Off
    // Bit 15 enables power control
    // Bit[2:0] = VD3 Bit[5:3] = VD2 Bit[8:6] = VD1 (Core)
    temp1 = *(volatile uint32_t *)0x40043008;
    *(volatile uint32_t *)0x40043008 = (temp1 & 0xFFFFFE00) | ((VALUE_VD1 << 6) | (3 << 3) | (3 << 0) | (1 << 15));

If I remember correctly, the setting for high performance mode (180 - 204MHz operation) requires 1.4V, please check if this is the case when you run on this frequency.

Regards,

Bernhard.


Hello Bernhard,

the value on that address is 0x3230DB and does not change with the frequency.

All the VDx have the same value.
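To show how I read that value, here is a small host-side sketch that splits the register word into the fields Bernhard listed (VD3 in bits 2:0, VD2 in bits 5:3, VD1 in bits 8:6, enable in bit 15) and rebuilds the word the way his snippet does. The struct and function names are made up for this post, and since the register is undocumented, the layout is only as reliable as the description above.

```c
#include <assert.h>
#include <stdint.h>

/* Fields of the undocumented regulator word, per the layout given above. */
typedef struct {
    uint32_t vd1;      /* bits 8:6, core */
    uint32_t vd2;      /* bits 5:3 */
    uint32_t vd3;      /* bits 2:0 */
    int      enabled;  /* bit 15, power control enable */
} vd_fields;

/* Split a raw register value into its fields (hypothetical helper). */
vd_fields vd_decode(uint32_t reg)
{
    vd_fields f;
    f.vd3 = reg & 0x7u;
    f.vd2 = (reg >> 3) & 0x7u;
    f.vd1 = (reg >> 6) & 0x7u;
    f.enabled = (int)((reg >> 15) & 1u);
    return f;
}

/* Rebuild the word as in the snippet above: keep the upper bits,
 * set VD1 to the requested code, VD2 = VD3 = 3, and set bit 15. */
uint32_t vd_encode(uint32_t old_reg, uint32_t vd1)
{
    assert(vd1 <= 7u);
    return (old_reg & 0xFFFFFE00u) | (vd1 << 6) | (3u << 3) | (3u << 0) | (1u << 15);
}
```

Decoding 0x3230DB this way gives VD1 = VD2 = VD3 = 3 (1.2V according to the table above) with bit 15 clear, and `vd_encode(0x3230DBu, 7u)` would produce 0x32B1DB for the 1.4V setting. I have only computed these on a PC, not written them to the chip.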


Before performing a planned reset, I would

- stop/disable peripherals, especially the ones which could generate interrupts (or alternatively globally disable interrupts)

- switch the clock tree from the PLL output to the 12MHz IRC

- disable the PLL

- set flash wait states to the maximum

- disable the watchdog

- initiate the reset

Because the CORE_RST is not as complete as an external hardware reset or a POR reset, you should also check in detail what your sysinit looks like:

- is there anything which relies on a "default" value after reset?

- are all variables initialized with a defined value?

Even if these things may not be absolutely required, it is "good style" to do it this way and maybe one of the points above causes the problem.

Regards,

Bernhard.