
I2C Slave on MCF51QE having problems running @ 100KHz


Hello, I am using an MCF51QE64 with its I2C module set up as a slave. The single master is an FPGA running an Opencores I2C controller at a rate of 100KHz.

The issue is that the FPGA master controller reports an arbitration loss error while writing successive bytes to the Coldfire I2C module. The cause appears to be that the Coldfire, after an ACK, holds both SCL and SDA low for an extended period (~2uS), which extends the SCL low time. Since the time the Coldfire holds SDA low overlaps with the master trying to drive SDA high (for the next byte), an arbitration loss error occurs. I know that SCL is being held low because the clock pulse after the ACK goes from a 4uS high time to a 2uS high time. Every once in a while the error doesn't happen, which tells me it is some kind of timing issue.

The following is the Coldfire register setup

ICSC1 => 0x06 (using internal clock generator)

ICSC2 => 0x00

ICSSC => 0x50 (DCO set to mid level of 32-40MHz)

ICSTRM => this is left at the default setting which is 0x97

IIC1F => 0x0B (we've tried various settings here which seem to change the scope captures but not fix the problem)

IICC1 => 0xC0

If I slow down the Master I2C baud rate to around 50-60KHz everything works without problem. We tried increasing the DCO frequency to the hi-level (48-60MHz) and that increased the probability of success but did not completely fix the problem.

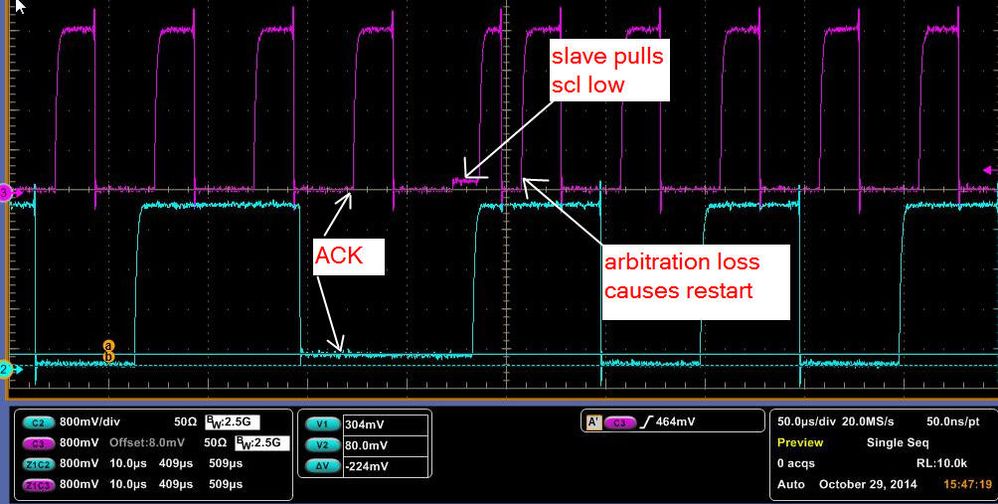

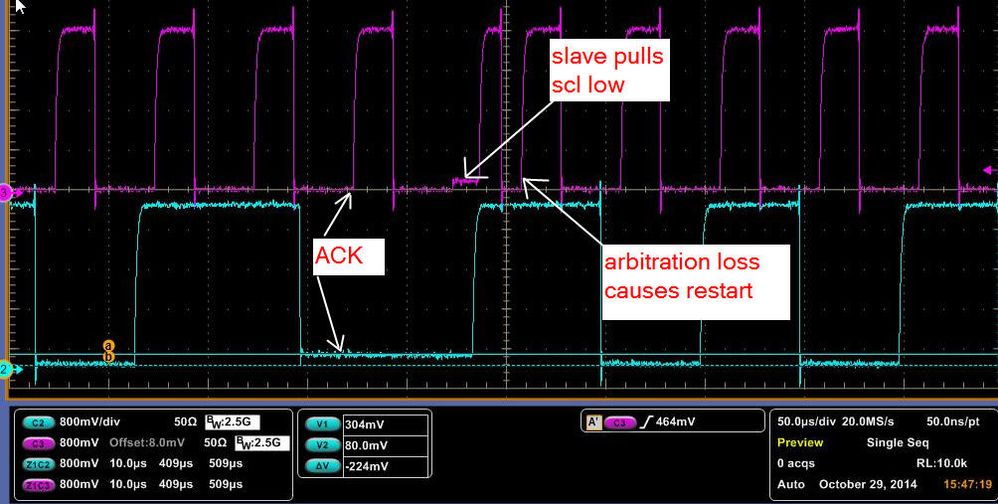

Here is a scope capture. 100 ohm series resistors were added to accentuate the slave pulldown.




I thought that pulling SCL down was a legitimate part of the protocol. It is the way the slave generates "flow control" back to the master.

The obvious place...

I²C - Wikipedia, the free encyclopedia

If your FPGA can't handle this then maybe it should be fixed so it does.

Tom


Tom, the problem is not due to clock stretching. Yes, I agree, a slave can hold SCL low if it wants to slow things down, but the problem is that the Coldfire slave is also holding SDA low (which is NOT part of the clock stretching protocol). When the master tries to make SDA go high for the next bit and it doesn't because the line is being pulled low, the master flags an arbitration loss and stops the current transaction. So the question is: why is the Coldfire holding SDA low after the ACK, causing the arbitration loss error? The FPGA is the only master on the bus, so this should not happen.

FYI, the Opencores I2C controller has been around for many years, has probably been used on many FPGAs, and does support clock stretching (when done correctly).

Thanks,

Heath


> Tom, the problem is not due to clock stretching.

I replied very quickly while trying to get home on Friday evening, so I may have jumped to a wrong conclusion. I've now had time to read your report properly and read up on the protocol again.

> Since this extended time that the Coldfire holds SDA low overlaps when the master is

> trying to drive SDA high(for the next byte), an arbitration loss error occurs.

I've just read through Wikipedia's I2C article, NXP's I2C documentation and the MCF51QE's Reference Manual, and I still think the FPGA is buggy. Either that or your bus needs the resistors changed.

Nice oscilloscope trace. Congrats on using the series resistors to work out what is driving when.

I have to assume that the Oscilloscope Trace above is "typical". In it I can see that the Slave is releasing SDA and SCL at the end of the "Clock Stretch" at almost the same time, but with SDA looking like it is released first by about 500ns. I'm reading SDA at 3.5us before the vertical axis mark, and SCL at 3.0us before.

Yes the Master is trying to release SDA so it goes high, but that does not constitute a collision. I2C requires that the data be stable while the clock is high, and changes when it is low, so when would you expect the slave to release SDA? My reading of the specs is that the slave can release the data pin any time after the I2C Standard Hold Time for Standard (100kHz) speed (which is 5uS) and any time before the Setup Time (which is 250ns).

So the slave seems to be obeying the specifications. It could release SDA earlier but is not required to.

The NXP Spec states on Arbitration that "Arbitration proceeds bit by bit. During every bit, while SCL is HIGH, each master checks to see if the SDA level matches what it has sent.".

If the Master is checking SDA before SCL rises then it isn't obeying I2C.
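As a rough illustration of the rule quoted above, here is a small Python sketch of a master that only compares SDA against what it sent while the external SCL is high. It is not taken from the OpenCores sources; the function name and the sample format are made up for this example.

```python
def arbitration_lost(samples, sda_sent):
    """Return True if SDA differs from the bit the master sent while SCL is high.

    samples  -- list of (scl, sda) levels read back from the bus pins
                (hypothetical format, one tuple per sample instant)
    sda_sent -- level (0 or 1) the master is trying to put on SDA for this bit
    """
    for scl, sda in samples:
        # Only a mismatch seen while the *external* SCL is high counts.
        if scl == 1 and sda != sda_sent:
            return True
    return False


# Slave still holds SDA and SCL low after the ACK, then releases SDA
# before SCL rises: no arbitration loss for a master sending a 1.
stretched = [(0, 0), (0, 0), (0, 1), (1, 1), (1, 1)]
print(arbitration_lost(stretched, sda_sent=1))  # False

# Something holds SDA low while SCL is high: a genuine loss.
contested = [(0, 0), (1, 0), (1, 0)]
print(arbitration_lost(contested, sda_sent=1))  # True
```

A master that compared SDA in the first two samples of `stretched` (while SCL is still low) would report a loss that the bus never actually had.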

It would be possible to have the wrong pullups so that SDA rose slower than SCL did, but your trace doesn't show that.

> the Opencores I2C controller

Do you have the source code? Can you read it and see how and when it is checking for arbitration?

> and does support clock stretching.(when done correctly)

When done differently perhaps. It may not work at all edges of the timing allowed by the I2C specification.

Tom


There's another apparent fault with the FPGA I2C implementation. I'm reading this specification:

http://www.nxp.com/documents/user_manual/UM10204.pdf

The specification for I2C at 100KHz has the high clock time being a minimum of 4us. Looking at your trace I can see the clock is being driven high for 4us and low for 6us normally (min spec 4.7us).

Except for just after the MCF stretches the clock. The high time is then about 2.5us. That's a timing violation. The next low-clock is then about 2us wide, way less than the minimum.
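To make that comparison explicit, here is a minimal Python sketch that checks a measured SCL pulse against the two 100kHz minimums quoted above (4us high, 4.7us low). The function name is invented for the example, and the measurements are the approximate values read off the trace.

```python
T_HIGH_MIN_US = 4.0  # minimum SCL high time at 100 kHz, as quoted above
T_LOW_MIN_US = 4.7   # minimum SCL low time at 100 kHz, as quoted above


def scl_violations(high_us, low_us):
    """List which of the two SCL minimums a measured clock pulse violates."""
    problems = []
    if high_us < T_HIGH_MIN_US:
        problems.append("high")
    if low_us < T_LOW_MIN_US:
        problems.append("low")
    return problems


print(scl_violations(4.0, 6.0))  # normal clock in the trace: []
print(scl_violations(2.5, 2.0))  # clock after the stretch: ['high', 'low']
```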

So did the FPGA notice the clock stretch at all, or is it running "blindly"? If it didn't notice at all it would have driven the clock low again 10us after the previous one. It drove it low about 11us later, so it has taken some sort of notice.

Or maybe not. I can't compare that with a "good" trace, so it is possible it always takes 11us after the ACK, and it hasn't noticed the clock stretch at all. Could you please check this?

> FYI, the Opencores I2C controller

OK, which one? There are four of them listed there.

Is it this one?

Dangerous stuff, VHDL. It makes hardware designers think they're programmers living in a perfect world with no timing variations, no metastable states and no limits on the amount of "code" they can type in. I've looked through the above "code" and can't find even a single-rank synchroniser, let alone a dual-rank one. I'm guessing I've clicked on the wrong one because the slave doesn't work in that version. So I'm guessing you're using this one:

http://opencores.org/project,i2c

It won't let me download it without creating an account, so I can't be bothered today.

I suggest you check the arbitration logic and make sure it is waiting until the EXTERNAL SCL goes high before checking the value on SDA. Make sure it isn't using its INTERNAL SCL signal as the qualifying clock. You could also try and see why it is generating those short clocks after an apparent arbitration failure.

Tom


Assuming you're using the OpenCores I2C controller and have the latest version, then we should be looking at the same VHDL sources.

I'm quite impressed with it. It is well structured, well written and I can't see any obvious mistakes in there.

From the logic, whenever it detects an Arbitration problem it resets everything, so I'd expect those short clocks, except I'd still say it shouldn't be doing that.

The external SDA and SCL signals go through a proper two-rank synchroniser (in "capture_scl_sda") generating the vectors cSDA and cSCL. They then go through more vectors fSDA and fSCL in "filter_scl_sda" clocked at 4 times the bit rate (unless your input clock is too low - check this) and those vectors are then majority-sampled to create sSCL and sSDA, then clocked again to create dSCL and dSDA.
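The effect of that input path can be sketched in Python. This is only a behavioural model under simplifying assumptions: the filter is taken to be a 3-tap majority vote, both stages are clocked at the same rate (the post says the real filter runs on a slower clock), and all names are invented for the example.

```python
def majority3(a, b, c):
    """Majority vote of three 0/1 samples."""
    return 1 if (a + b + c) >= 2 else 0


def filter_line(raw):
    """Pass raw 0/1 pin samples through a two-rank synchroniser and a majority filter.

    All registers start at 1 (bus idle, pulled high). One list entry is one
    sample clock; the different clock rates of the real stages are ignored.
    """
    sync = [1, 1]      # two-rank synchroniser (cSCL/cSDA in the post)
    taps = [1, 1, 1]   # filter register (fSCL/fSDA); 3 taps is an assumption
    out = []
    for level in raw:
        # Update like registers on a clock edge: each stage takes the
        # *old* value of the stage in front of it.
        taps = [sync[1]] + taps[:2]
        sync = [level, sync[0]]
        out.append(majority3(*taps))
    return out


raw = [1, 0, 1, 1, 0, 0, 0, 0, 0, 0]  # one-sample glitch, then a real falling edge
print(filter_line(raw))  # [1, 1, 1, 1, 1, 1, 1, 0, 0, 0]
```

The glitch at index 1 never reaches the output, and the real falling edge at index 4 shows up three samples later. That built-in delay is why a working stall mechanism should visibly lengthen every bit.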

sSCL is then used to create "slave_wait", which should be asserted every time the Master tries to drive SCL high, and should stay that way until the much-delayed sSCL eventually goes high.

While that's happening, "gen_clken" is decrementing "cnt" to create the bit-clock "clk_en". And whenever "slave_wait" is asserted, the counter decrement is blocked for that clock cycle.

Because of the filtering delays, I'd expect to see the results of the counters being stalled in the timing. I'd expect the 10us bit rate to "stretch slightly" by at least 1us (two master clocks plus two filter clocks) due to these delays. I'm not seeing that in your trace and it worries me that "slave_wait" isn't working as expected.

Anyway, you're lucky. You have the sources so you can put "virtual CRO probes" on any signal in the FPGA and drive them out to a spare pin or three. I'd suggest you connect "slave_wait", "sda_chk" and "al" for a start and see what they're doing when it works and when it doesn't.

What is your I2C input clock and what do you have the divider "clk_cnt" set to? If you're not overclocking it fast enough then that may be violating some of the internal design assumptions.

I think the "filter clock" and the "bit clock" aren't synchronised. It looks like they'll slip relative to each other, and can therefore be in four different relative phases, generating four distinct sets of internal delays. That might be part of why the problem is intermittent.

Tom


Here's an (unfortunately) unresolved Bug Report on the I2C OpenCore that seems to match your problem:

http://opencores.org/bug,view,2421

The poster tried to send a fix, but it didn't make it into the system, and then didn't follow up.

You could add a bug report, but there's been no activity or responses since April, so the project might not be "actively supported" any more.

Here's a previous Arbitration problem in 2010 where author fixed it by "moved SDA checking to wr_c state":

http://opencores.org/bug,view,512

Make sure you're running the latest version that has this fix.

Are you using the VHDL or the Verilog version? They should be the same, but I can't prove that by reading the sources.

The documentation hasn't been updated since 2003, but the code has changed since then. It says "the core uses a 5*SCL clock internally" when it may need a minimum 20*SCL clock due to the filter. It may need a higher clock than that to work properly. You should probably simulate the core with your external signals and see what's going on.

Tom


Hi Tom, thanks so much for looking into this. We did see the bug report last week, thanks. FYI we are running the Opencores module @ 25MHz with a 0x31 pre-scale.

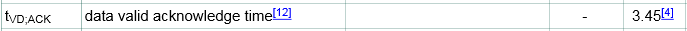

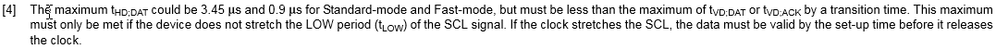

I was convinced the problem was with the Coldfire and now I'm not so sure. I thought that the max hold time for Tvd ACK was 3.45uS but as you point out it may be permissible for the slave to hold on to ACK up to the setup time before the rising edge of the clock.

As you pointed out on the FPGA side it is much easier to debug... we were able to run SignalTap and bring out all of the internal signals with the master controller. From our captures it was apparent that AL (arbitration loss) was occurring before clock stretching was being recognized. From the Opencores code though, I can see that it only checks for clock stretching when the internal counter(cnt) reaches 0 or once every 10uS so a clock stretching event needs to be long enough to be recognized by the master. Holding SCL for only 2uS isn't long enough for the master to recognize it.

As for the AL logic, as you correctly pointed out, the code does not check to see that SCL actually went high before issuing the arbitration loss. Maybe this could be a possible fix. I would have thought though that this fix would have been implemented a long time ago.

I'm also still looking at the Freescale side to see if this behavior is typical. I have some Freescale folks looking at it now.

Thanks Tom,

Heath


> From the Opencores code though, I can see that it only checks for clock stretching when the internal counter(cnt) reaches 0

Could you go through the details of that please? From my reading, the only time it WON'T recognise clock-stretch is when "cnt" is zero:

This bit generates dscl_oen one master (undivided) clock behind iscl_oen, which is driven when the state machine tries to drive SCL high (lets it go to pull high):

    elsif (clk'event and clk = '1') then
        dscl_oen <= iscl_oen;

"slave_wait" is generated when "iscl_oen" goes high here:

    elsif (clk'event and clk = '1') then
        slave_wait <= (iscl_oen and not dscl_oen and not sSCL) or (slave_wait and not sSCL);

From my reading, that means that slave_wait should be set on every assertion of "iscl_oen" and should latch on until "sSCL" gets back through the synchronisers and filters, and then clear.
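That set-and-latch behaviour can be stepped through with a few lines of Python. This is just the slave_wait equation quoted above evaluated once per clock; the start values of the registers and the input waveforms are assumptions chosen for the example.

```python
def slave_wait_trace(iscl_oen, sscl):
    """Clock the slave_wait equation from the post once per sample.

    iscl_oen -- master's SCL output enable per clock (1 = SCL released)
    sscl     -- filtered SCL level read back from the pin per clock
    """
    dscl_oen = 1    # one-clock-delayed copy of iscl_oen (start value assumed)
    slave_wait = 0  # start value assumed
    trace = []
    for oen, scl in zip(iscl_oen, sscl):
        # Registered logic: the next value uses the values from before this edge.
        next_wait = int((oen and not dscl_oen and not scl)
                        or (slave_wait and not scl))
        dscl_oen, slave_wait = oen, next_wait
        trace.append(slave_wait)
    return trace


# Master releases SCL at clock 2; the slave keeps SCL low until clock 6.
iscl_oen = [0, 0, 1, 1, 1, 1, 1, 1]
sscl = [0, 0, 0, 0, 0, 0, 1, 1]
print(slave_wait_trace(iscl_oen, sscl))  # [0, 0, 1, 1, 1, 1, 0, 0]
```

slave_wait goes high on the clock where SCL is released but still reads low, stays latched while the slave holds the line, and clears once the filtered SCL finally reads high.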

This code is clocked by the master clock and generates the 5-times-bit-rate clock:

    gen_clken: process(clk, nReset)
    ...
        elsif (clk'event and clk = '1') then
            if ((rst = '1') or (cnt = 0) or (ena = '0') or (scl_sync = '1')) then
                cnt    <= clk_cnt;
                clk_en <= '1';
            elsif (slave_wait = '1') then
                cnt    <= cnt;
                clk_en <= '0';
            else
                cnt    <= cnt - 1;
                clk_en <= '0';
            end if;

That counter is meant to be STALLED (and stop counting) when "slave_wait" is true. So that should be checked on every clock EXCEPT when "cnt" is zero.

That's probably a bug. The test against slave_wait should take precedence over the test for (cnt = 0).
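The difference the branch order makes can be seen in a small Python model of that counter. This is a sketch, not the core itself: rst, ena and scl_sync are assumed inactive and left out, and the `wait_first` option models a hypothetical reordering that has not been verified against the real VHDL.

```python
def run_counter(clk_cnt, slave_wait, wait_first):
    """Model the gen_clken counter; return the cnt value after each clock.

    clk_cnt    -- reload value (the prescale setting)
    slave_wait -- per-clock 0/1 list for the slave_wait signal
    wait_first -- False: branch order as posted (cnt = 0 tested first)
                  True:  hypothetical reordering, slave_wait tested first
    """
    cnt = clk_cnt
    history = []
    for wait in slave_wait:
        if wait_first and wait:
            pass             # stalled: hold cnt
        elif cnt == 0:
            cnt = clk_cnt    # reload; this is where clk_en pulses
        elif wait:
            pass             # stalled: hold cnt
        else:
            cnt -= 1
        history.append(cnt)
    return history


# slave_wait is asserted for clocks 2..5, covering the moment cnt sits at 0.
wait = [0, 0, 1, 1, 1, 1, 0, 0]
print(run_counter(2, wait, wait_first=False))  # [1, 0, 2, 2, 2, 2, 1, 0]
print(run_counter(2, wait, wait_first=True))   # [1, 0, 0, 0, 0, 0, 2, 1]
```

With the posted order the counter reloads (and clk_en pulses) at clock 2 even though slave_wait is high. With slave_wait tested first, the reload is held off until the wait clears at clock 6.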

Does my analysis match up with what you're seeing with SignalTap?

> As for the AL logic, as you correctly pointed out, the code does not check to see that SCL actually went high before issuing the arbitration loss.

I think it does. The AL logic only checks for the problem when "sda_chk" is high and that only happens during the third part of a write cycle.

Eight Write cycles are run for the data byte being written, then a Read cycle runs to read the Ack. Then it runs another Write cycle which is where the Arb Failure is happening. So it does this, and note each state transition is caused by the above code generating "clk_en" at the decimated rate:

    wr_a    SCL Low     Write cycle writing last bit of a byte
    wr_b    SCL High
    wr_c    SCL High    sda_chk=1
    wr_d    SCL Low
    idle    SCL Low
    rd_a    SCL Low     Read cycle reading the ACK
    rd_b    SCL High
    rd_c    SCL High
    rd_d    SCL Low
    idle    SCL Low
    wr_a    SCL Low     Write cycle for next byte
    wr_b    SCL High
    wr_c    SCL High    sda_chk=1
    wr_d    SCL Low

At the start of the last "wr_b" SCL is driven high, and "slave_wait" should be set. HOWEVER this is where the MCF stretches the clock, so it should be in more detail:

    wr_a    SCL Low     Write cycle for next byte
            "cnt" has to go 10, 9, 8, ... 4, 3, 2, 1, 0
    wr_b    SCL High
            "cnt" goes 10, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 8, ... 4, 3, 2, 1, 0, stuck at "9" while waiting for "slave_wait" to go away
    wr_c    SCL High    sda_chk=1   NOW the code checks for Arbitration Lost.
            "cnt" has to go 10, 9, 8, ... 4, 3, 2, 1, 0
    wr_d    SCL Low

So it should work. From your SignalTap view, why isn't the above working like that?

> I would have thought though that this fix would have been implemented a long time ago.

It has been reported at least once and then not followed up. I would guess that most people use the I2C core to connect small memory, temperature or time devices to FPGAs that never stretch the clock. So most people don't run into this, and anybody that has had the problem hasn't put in the time to understand it and fix it.

The whole point of Open Projects is that the users have an obligation to find, report, and sometimes even fix the bugs... don't you?

I think you have enough information to file a detailed bug report on this at OpenCores.org, including a pointer back to this discussion and your oscilloscope traces.

(Edit) My registration on OpenCores has come through so I've already put in a short description and pointer to here:

Arb Lost due to Clock Stretch detection failure :: Bugtracker :: OpenCores

> I'm also still looking at the Freescale side to see if this behavior is typical. I have some Freescale folks looking at it now.

Why? The OpenCore is clearly broken. It doesn't handle Clock Stretching. The Freescale part you're using has to clock stretch, and is doing it legally. Fix the I2C Core or use something else.

Tom


Hi Tom, again thank you for your analysis, you have been very helpful & insightful.

I believe I've discovered the problem. The reason what I'm seeing and what you are seeing differ is that we have an older version of the I2C controller. First a disclaimer... I am not the design owner, I am only helping out, so I had always assumed that what we were running was the latest version downloaded from Opencores. Not the case.

Turns out the version that we were using (v1.10) did not assert slave_wait correctly (as I mentioned, it was only being looked at when cnt = 0). When we run with the latest version of the I2C controller (which fixes slave_wait) all is well.

Sorry for all the run around. I have certainly learned a lot more about I2C :)

Thanks,

Heath


> Turns out the version that we were using (v1.10) did not assert slave_wait correctly

Well done. We fixed the problem and both learned a lot about I2C and (for me) VHDL all over again.

This project doesn't seem to have a "project version number" at all. The latest versions of all the different parts are:

- i2c_master_top.vhd: 1.7
- i2c_master_byte_ctrl.vhd: 1.5
- i2c_master_bit_ctrl.vhd: 1.17
- I2C.VHD: unversioned

Then there's the Verilog ones:

- i2c_master_top.v: 1.12
- i2c_master_byte_ctrl.v: 1.8
- i2c_master_bit_ctrl.v: 1.14
- i2c_master_defines.v: 1.3
- timescale.v: unversioned

The latest "package" is defined by the Subversion sequence number, which is "76".

The latest document version is "i2c_specs.pdf 0.9" from 2003, and doesn't detail any of the consequences of recent changes.

Version 1.10 of what? Was it version 1.10 of "i2c_master_bit_ctrl.vhd", dated 27 Feb 2004 and updated on 7 May 2004? Or version 1.10 of "i2c_master_bit_ctrl.v", dated 9 Aug 2003 and updated on 7 May 2004? Or version 1.10 of "i2c_master_top.v"? I don't see how you can easily track which version of this core you're using when it is like this.

Tom


Tom, it's the version of "i2c_master_bit_ctrl.v". I see what you mean about version control and how confusing that can be.

On to the next problem.

Thanks again,

Heath


I wrote a few messages back:

> Because of the filtering delays, I'd expect to see the results of the counters being stalled in the timing.

> I'd expect the 10us bit rate to "stretch slightly" by at least 1us (two master clocks plus two filter clocks)

> due to these delays. I'm not seeing that in your trace and it worries me that "slave_wait" isn't working as expected.

That was true. It wasn't working.

Now that it IS working, how much slower than 100kHz is it running? I'd expect the clock edge after the ACK (when the MCF legitimately stretches the clock) to be delayed by that amount, which I remember was up to 4us in some of your tests. As well as that, I'd expect EVERY rising edge to be delayed by two fast clocks for the dual-rank synchroniser, plus another two half-clocks before it gets through the filter. With your divider I'd expect to see a 30 to 40 clock delay, which at 25MHz is 1.2 to 1.6us.

So I'd expect to see 11.2 to 11.6us/bit or 86 to 89kHz instead of 100kHz. Running 10% to 15% slower than the expected (and standard and normal) rates might cause problems in some applications.
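The estimate above is simple enough to reproduce in a few lines of Python. The 25MHz clock, the 10us nominal bit time and the 30 to 40 clock delay are the figures from this thread; the function name is invented for the example.

```python
CLK_HZ = 25e6          # FPGA clock feeding the core, from the thread
NOMINAL_BIT_US = 10.0  # bit time at 100 kHz


def slowed_rate_khz(delay_clocks):
    """SCL rate in kHz if every bit is lengthened by delay_clocks cycles of CLK_HZ."""
    delay_us = delay_clocks / CLK_HZ * 1e6
    return 1000.0 / (NOMINAL_BIT_US + delay_us)


for clocks in (30, 40):
    print(clocks, "clocks ->", round(slowed_rate_khz(clocks), 1), "kHz")
# 30 clocks -> 89.3 kHz
# 40 clocks -> 86.2 kHz
```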

Are you seeing this or have I got my calculations wrong?

Tom


Hi Tom, yes you are correct, the clock rate is reduced from nominal... I think it was around 90-92 KHz. I'll double check and get back to you.


Tom, with a 25MHz input clock and the prescale set to 0x31, I am seeing the I2C clock rate at 90.9KHz.


Thank you for taking that measurement. I'm writing a bug report at OpenCores now.

90.9kHz is an 11us bit time. You're feeding the core a 25MHz/50 or a 500kHz (2us) clock. It must be stretching the positive phase of SCL from 4us to 5us.
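For anyone checking the numbers, here is the same arithmetic as a short Python sketch. It assumes the core divides its input clock by (prescale + 1) and uses five of the resulting ticks per SCL period, which is how the "5*SCL clock" statement in the core's documentation reads; treat that as an assumption, not a verified property of the current code.

```python
CLK_HZ = 25_000_000
PRESCALE = 0x31                 # register value from the thread (decimal 49)

tick_hz = CLK_HZ / (PRESCALE + 1)     # 25 MHz / 50
tick_us = 1e6 / tick_hz
nominal_bit_us = 5 * tick_us          # assumes five ticks per SCL period
measured_bit_us = 1e6 / 90_900        # 90.9 kHz as measured

print(tick_hz)                        # 500000.0
print(tick_us)                        # 2.0
print(nominal_bit_us)                 # 10.0
print(round(measured_bit_us, 2))      # 11.0
```

The measured bit time is about 1us longer than nominal, which matches the SCL high phase growing from 4us to 5us.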

Could you please mark this post "Answered" as the original problem has been solved.

Tom


Some more info on the Coldfire side...

With the Coldfire debugger, I can go in and change the DCO clock speed and/or the TRIM value and make the problem go away. When I increase the DCO speed setting to the hi-range, or if I decrease the TRIM value, what I see is the Coldfire holding on to SDA for shorter durations after ACK.

Measuring from falling edge of SCL to where Coldfire releases SDA after an ACK…

DCO low range -> 13uS

DCO mid range -> 4-8uS

DCO hi range -> 4uS (I can also reduce the TRIM value from 0x97 down to ~ 0x30 to get the same behavior)

With our master I2C controller... as long as the slave doesn’t hold ACK more than 4uS after falling edge of SCL everything works fine. If SDA is held longer than 4uS then we get an arbitration loss error. I know above I mentioned that we tried increasing the DCO to hi-range and it didn't make the problem go away... I'm not quite sure we actually changed the DCO because in the lab now when I try running at the hi-range DCO, it works 100% of the time where I don't ever see the ACK hold go for longer than 4uS.


> where Coldfire releases SDA after an ACK

I would guess the CF has to force a clock-stretch until the code running on the CF has responded to the interrupt (or status poll) and has read the data, making the SCL hardware ready to receive the next byte. You make the master clock faster, you make the code faster. But how repeatable is the CF code? Is it doing anything else that might delay an I2C register read? Does it have any timer interrupts, ADC interrupts, any other peripherals in use at all, either polled or interrupt driven? What sort of code execution variation (showing up as a longer clock stretch) could that cause?

> as long as the slave doesn’t hold ACK more than 4uS after falling edge of SCL everything works fine.

You can't guarantee that. You can't guarantee that a future MCF code change won't break any assumptions you've made. You've got multiple clocks in the two devices running at different (and variable) phases. You've got synchronisers that might slip an extra clock every now and then. It may work on the bench, but these things have a habit of coming undone in the field.

Tom