Interfacing Depth Sensors on NXP i.MX6 boards


About this document

This document describes how to interface, install, program (at a basic level) and test depth cameras on i.MX6QDL-based boards running Ubuntu as the root file system.

Supported NXP HW boards:

- i.MX 6QuadPlus SABRE-SD Board and Platform

- i.MX 6Quad SABRE-SD Board and Platform

- i.MX 6DualLite SABRE-SD Board

- i.MX 6Quad SABRE-AI Board

- i.MX 6DualLite SABRE-AI Board

Depth sensors tested: Microsoft Kinect, ASUS Xtion, PrimeSense Carmine.

Software: GCC, Ubuntu 14.04, OpenNI, Python, ROS.

1. Depth Sensor

Depth sensors are 3D vision sensors, used mainly in 3D vision applications, motion gaming and robotics. In this document we connect a Microsoft Kinect and an Asus Xtion to i.MX6 processor boards and capture 3D images, which are converted into a set of points called a point cloud.
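As an illustration of how a depth image becomes a point cloud, the following Python 3.7+ sketch back-projects each depth pixel through the standard pinhole camera model. The intrinsic values (fx, fy, cx, cy) are placeholders, not calibrated parameters for the Kinect, Xtion or Carmine. The class and function names are hypothetical. Depth is assumed to be in millimetres, with 0 meaning "no reading".

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass
class Intrinsics:
    """Pinhole camera parameters in pixels (hypothetical placeholder values)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point, x
    cy: float  # principal point, y


def depth_pixel_to_point(u: int, v: int, depth_mm: int, k: Intrinsics) -> Optional[Point]:
    """Back-project pixel (u, v) with depth in millimetres to (x, y, z) in metres.

    Returns None for depth 0, which is treated here as "no reading".
    """
    if depth_mm <= 0:
        return None
    z = depth_mm / 1000.0
    x = (u - k.cx) * z / k.fx
    y = (v - k.cy) * z / k.fy
    return (x, y, z)


def depth_image_to_cloud(depth: List[List[int]], k: Intrinsics) -> List[Point]:
    """Convert a depth image given as a list of rows into a list of 3D points."""
    cloud: List[Point] = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            point = depth_pixel_to_point(u, v, d, k)
            if point is not None:
                cloud.append(point)
    return cloud


# Placeholder intrinsics for a 640x480 image; replace with your own calibration.
K_PLACEHOLDER = Intrinsics(fx=525.0, fy=525.0, cx=319.5, cy=239.5)
```

With a real sensor, `depth` would be a 640x480 depth map from OpenNI or libfreenect. The loop is kept simple for readability rather than speed.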

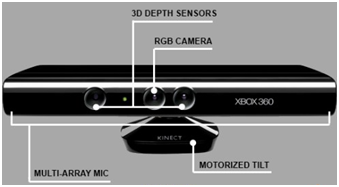

a) Microsoft Kinect

The Kinect has an IR camera, an IR projector and an RGB camera. The IR camera and the projector together generate the 3D point cloud of the surroundings. The Kinect also has a microphone array and a motorized tilt for moving the sensor up and down.

The Kinect's video (RGB) camera and depth camera both have a 640 x 480-pixel resolution and run at 30 FPS (frames per second). The RGB camera captures 2D color images, whereas the depth camera captures monochrome depth images. The Kinect has a depth sensing range of 0.8 m to 3.5 m.
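The resolution and frame rate above determine how much data the board has to handle every second. Here is a minimal Python 3.7+ sketch of the arithmetic. It assumes 2 bytes per depth pixel (the size of OpenNI's 16-bit depth values) and 3 bytes per RGB pixel. These are uncompressed figures; the number of bytes actually sent over USB can differ, depending on how each sensor encodes its streams. The range check uses the 0.8 m to 3.5 m figures quoted above. Both function names are hypothetical.

```python
def stream_bytes_per_second(width: int, height: int, fps: float, bytes_per_pixel: int) -> float:
    """Uncompressed data rate of one video stream, in bytes per second."""
    return width * height * bytes_per_pixel * fps


def in_sensing_range(z_m: float, near_m: float = 0.8, far_m: float = 3.5) -> bool:
    """True if a depth reading in metres lies inside the quoted sensing range."""
    return near_m <= z_m <= far_m


# 640 x 480 at 30 FPS; 2 bytes per depth pixel and 3 bytes per RGB pixel are assumptions
depth_rate = stream_bytes_per_second(640, 480, 30, 2)
rgb_rate = stream_bytes_per_second(640, 480, 30, 3)
print(f"depth: {depth_rate / 1e6:.2f} MB/s, rgb: {rgb_rate / 1e6:.2f} MB/s")
# depth: 18.43 MB/s, rgb: 27.65 MB/s
```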

b) Asus Xtion:

Another alternative to the Kinect is the Asus Xtion Pro, a 3D sensor designed for motion-sensing applications. It does depth sensing only and has no audio input. It has an infrared projector and a monochrome CMOS sensor that captures the infrared data. The Xtion can be powered from USB alone and can sense depth from 0.8 m to 3.5 m from the sensor.

c) PrimeSense Carmine:

The PrimeSense team (Apple bought the company in November 2013) developed the 3D vision technology of the Microsoft Kinect and later developed their own 3D vision sensor, the Carmine. It also works with an IR projector, an RGB CMOS sensor and a depth CMOS sensor. All the sensors are connected to a system-on-chip, and the device is powered through USB. The Carmine captures 640x480 at 30 FPS and can sense from 0.35 m to 3 m. Developers can program the device using OpenNI and its wrapper libraries.

All these sensors (Kinect, Carmine and Xtion) work with the same software, so no special programming is needed for general usage. They connect to the i.MX processor through its USB 2.0 interface and can be programmed using OpenNI and OpenCV.

2. Installation on Ubuntu

To install Ubuntu Trusty (14.04) on your i.MX6 board, follow:

https://community.freescale.com/docs/DOC-330147

Install the dependencies:

$ sudo apt-get install -y g++ git python libusb-1.0-0-dev libudev-dev freeglut3-dev doxygen graphviz openjdk-6-jdk libxmu-dev libxi-dev

Create a devel folder in your home directory:

$ mkdir -p ~/devel

$ cd ~/devel

Get OpenNI and the drivers:

$ git clone https://github.com/OpenNI/OpenNI.git -b unstable

$ git clone https://github.com/ph4m/SensorKinect.git

$ git clone https://github.com/PrimeSense/Sensor.git -b unstable

Set the compile flags to build for the i.MX:

$ nano OpenNI/Platform/Linux/CreateRedist/Redist_OpenNi.py

From: MAKE_ARGS += ' -j' + calc_jobs_number()

To: MAKE_ARGS += ' -j2'

You must also change the ARM compiler settings for this distribution:

$ nano OpenNI/Platform/Linux/Build/Common/Platform.Arm

From: CFLAGS += -march=armv7-a -mtune=cortex-a8 -mfpu=neon -mfloat-abi=softfp #-mcpu=cortex-a8

To: CFLAGS += -mtune=arm1176jzf-s -mfpu=vfp -mfloat-abi=hard

Then build and install OpenNI:

$ cd OpenNI/Platform/Linux/CreateRedist/

$ ./RedistMaker.Arm

$ cd ../Redist/OpenNI-Bin-Dev-Linux-Arm-v1.5.x.x

$ sudo ./install.sh

Also edit the CFLAGS parameters in the Sensor and SensorKinect makefiles:

$ cd ~/devel/

$ nano Sensor/Platform/Linux/Build/Common/Platform.Arm

$ nano SensorKinect/Platform/Linux/Build/Common/Platform.Arm

In both files, change:

From: CFLAGS += -march=armv7-a -mtune=cortex-a8 -mfpu=neon -mfloat-abi=softfp #-mcpu=cortex-a8

To: CFLAGS += -mtune=arm1176jzf-s -mfpu=vfp -mfloat-abi=hard

Then edit the Sensor and SensorKinect redistribution scripts:

$ nano Sensor/Platform/Linux/CreateRedist/RedistMaker

$ nano SensorKinect/Platform/Linux/CreateRedist/RedistMaker

In both, change:

From: make -j$(calc_jobs_number) -C ../Build

To: make -j2 -C ../Build
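The From/To edits in this section can also be applied in one pass with a small Python 3.7+ script. This is convenient on a fresh checkout; run it before the first RedistMaker step. The sketch assumes each original line appears in the files exactly as quoted in this guide. If a line is missing or was already edited, the script stops with an error at that file instead of silently doing nothing. The names `EDITS`, `replace_exactly` and `apply_edits` are hypothetical.

```python
from pathlib import Path
from typing import List, Tuple

# Original and replacement CFLAGS lines, copied from the steps above
OLD_CFLAGS = ("CFLAGS += -march=armv7-a -mtune=cortex-a8 -mfpu=neon "
              "-mfloat-abi=softfp #-mcpu=cortex-a8")
NEW_CFLAGS = "CFLAGS += -mtune=arm1176jzf-s -mfpu=vfp -mfloat-abi=hard"

# (file relative to ~/devel, original text, replacement)
EDITS: List[Tuple[str, str, str]] = [
    ("OpenNI/Platform/Linux/CreateRedist/Redist_OpenNi.py",
     "MAKE_ARGS += ' -j' + calc_jobs_number()",
     "MAKE_ARGS += ' -j2'"),
]
for project in ("OpenNI", "Sensor", "SensorKinect"):
    EDITS.append((f"{project}/Platform/Linux/Build/Common/Platform.Arm",
                  OLD_CFLAGS, NEW_CFLAGS))
for project in ("Sensor", "SensorKinect"):
    EDITS.append((f"{project}/Platform/Linux/CreateRedist/RedistMaker",
                  "make -j$(calc_jobs_number) -C ../Build",
                  "make -j2 -C ../Build"))


def replace_exactly(text: str, old: str, new: str) -> str:
    """Replace old with new; fail loudly if old is absent (missing or already patched)."""
    if old not in text:
        raise ValueError(f"text not found: {old!r}")
    return text.replace(old, new)


def apply_edits(devel: Path) -> None:
    """Apply every entry of EDITS to the checkouts under devel (e.g. ~/devel)."""
    for rel_path, old, new in EDITS:
        path = devel / rel_path
        path.write_text(replace_exactly(path.read_text(), old, new))
        print(f"patched {path}")
```

For example, `apply_edits(Path.home() / "devel")` patches all six files under ~/devel.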

Then create the redistributables: Sensor (for the Xtion and PrimeSense Carmine) and SensorKinect (for the Kinect):

$ cd Sensor/Platform/Linux/CreateRedist/

$ ./RedistMaker Arm

$ cd ~/devel/

$ cd SensorKinect/Platform/Linux/CreateRedist/

$ ./RedistMaker Arm

$ cd ~/devel/

Then install the PrimeSense and Kinect drivers:

$ cd Sensor/Platform/Linux/Redist/Sensor-Bin-Linux-Arm-v5.1.x.x

$ sudo ./install.sh

$ cd ~/devel/SensorKinect/Platform/Linux/Redist/Sensor-Bin-Linux-Arm-v5.1.2.x

$ sudo ./install.sh

3. Testing Installation:

Connect the sensor power supply, then connect the Kinect to a USB port on the NXP board. Check with lsusb that it is detected. On my board:

Imx6q@imx6q:~/devel$ lsusb

Bus 001 Device 022: ID 045e:02ae Microsoft Corp. Xbox NUI Camera

Bus 001 Device 021: ID 045e:02ad Microsoft Corp. Xbox NUI Audio

Bus 001 Device 019: ID 045e:02c2 Microsoft Corp. Kinect for Windows NUI Motor
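This check can be scripted. The Python 3.7+ sketch below scans lsusb output for the three Kinect USB IDs shown above (camera, audio and motor). Only these IDs come from this guide. IDs for the Xtion or Carmine would have to be added from your own lsusb output. The function names are hypothetical.

```python
import re
import subprocess
from typing import Dict

# Kinect USB IDs (vendor:product) from the lsusb output shown above
KINECT_IDS = {
    "045e:02ae": "camera",
    "045e:02ad": "audio",
    "045e:02c2": "motor",
}

LSUSB_LINE = re.compile(r"^Bus (\d+) Device (\d+): ID ([0-9a-fA-F]{4}:[0-9a-fA-F]{4})")


def find_kinect_parts(lsusb_output: str) -> Dict[str, str]:
    """Map each detected Kinect function (camera/audio/motor) to 'bus/device'."""
    found: Dict[str, str] = {}
    for line in lsusb_output.splitlines():
        match = LSUSB_LINE.match(line.strip())
        if match:
            usb_id = match.group(3).lower()
            if usb_id in KINECT_IDS:
                found[KINECT_IDS[usb_id]] = f"{match.group(1)}/{match.group(2)}"
    return found


def run_lsusb() -> str:
    """Return the text printed by lsusb (needs the usbutils package)."""
    return subprocess.run(["lsusb"], capture_output=True, text=True, check=True).stdout
```

On the board, `find_kinect_parts(run_lsusb())` should report all three entries for a Kinect connected as above.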

Now edit the global Kinect settings:

$ sudo nano /usr/etc/primesense/GlobalDefaultsKinect.ini

Uncomment the UsbInterface line and set its value to 1 instead of 2, so that it reads:

UsbInterface=1
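The same edit can be scripted. This Python 3.7+ sketch rewrites the UsbInterface entry whether or not it is commented out. It assumes that comments in the .ini file start with ';' and that the file is read with Python's default universal-newline handling. The function name is hypothetical.

```python
import re

# Matches 'UsbInterface=N', optionally commented out with ';' (assumed comment marker)
USB_INTERFACE_LINE = re.compile(
    r"^[ \t]*;?[ \t]*UsbInterface[ \t]*=[ \t]*\d+[ \t]*$", re.MULTILINE
)


def set_usb_interface(ini_text: str, value: int) -> str:
    """Uncomment the UsbInterface entry (if needed) and set it to value."""
    new_text, count = USB_INTERFACE_LINE.subn(f"UsbInterface={value}", ini_text)
    if count == 0:
        raise ValueError("no UsbInterface line found")
    return new_text
```

For example, as root and with `from pathlib import Path`: `p = Path("/usr/etc/primesense/GlobalDefaultsKinect.ini"); p.write_text(set_usb_interface(p.read_text(), 1))`.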

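Unload the gspca_kinect kernel module (the in-kernel V4L2 driver for the Kinect camera) so that it does not hold the device:

$ sudo modprobe -r gspca_kinect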

Then blacklist the module so that it is not loaded automatically at boot:

$ sudo sh -c 'echo "blacklist gspca_kinect" > /etc/modprobe.d/blacklist-kinect.conf'
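To confirm that both steps took effect, a small Python 3.7+ sketch can read /proc/modules (one loaded module per line, name first) and the blacklist file created above. The function names are hypothetical. After a reboot, gspca_kinect should show as not loaded and blacklisted.

```python
from pathlib import Path


def module_loaded(proc_modules_text: str, name: str) -> bool:
    """True if `name` is listed in /proc/modules content (first field of each line)."""
    return any(line.split()[0] == name
               for line in proc_modules_text.splitlines() if line.strip())


def module_blacklisted(conf_text: str, name: str) -> bool:
    """True if a modprobe.d file contains a 'blacklist <name>' directive."""
    for line in conf_text.splitlines():
        if line.lstrip().startswith("#"):
            continue  # modprobe.d comment line
        if line.split() == ["blacklist", name]:
            return True
    return False


def check_gspca_kinect() -> None:
    """Print the current state of gspca_kinect on this machine."""
    loaded = module_loaded(Path("/proc/modules").read_text(), "gspca_kinect")
    conf = Path("/etc/modprobe.d/blacklist-kinect.conf")
    listed = conf.exists() and module_blacklisted(conf.read_text(), "gspca_kinect")
    print(f"gspca_kinect loaded: {loaded}, blacklisted: {listed}")
```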

Then run the OpenNI sample:

$ cd ~/devel/OpenNI/Platform/Linux/Bin/Arm-Release

$ sudo ./Sample-NiSimpleRead

You should get something like:

Reading config from: '../../../../Data/SamplesConfig.xml'

…

Frame 40 Middle point is: 5050. FPS: 30.771788

Frame 41 Middle point is: 5050. FPS: 30.866173

Frame 42 Middle point is: 5050. FPS: 30.850958

Frame 43 Middle point is: 5050. FPS: 30.779032

Frame 44 Middle point is: 5050. FPS: 30.767746

Frame 45 Middle point is: 5050. FPS: 30.800463

Frame 46 Middle point is: 5050. FPS: 30.653118

Frame 47 Middle point is: 5050. FPS: 30.741659

Frame 98 Middle point is: 5050. FPS: 30.339321
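If the sample output is saved to a text file, a short Python 3.7+ sketch can summarize it. The sketch parses the frame lines in the format shown above and reports the mean FPS and how many frames printed a middle-point value of 0; with OpenNI, a value of 0 usually means no depth reading at that pixel. The function names are hypothetical.

```python
import re
from statistics import mean
from typing import List, Tuple

# Matches lines such as "Frame 40 Middle point is: 5050. FPS: 30.771788"
FRAME_LINE = re.compile(r"Frame (\d+) Middle point is: (\d+)\. FPS: ([0-9.]+)")


def parse_simple_read(output: str) -> List[Tuple[int, int, float]]:
    """Return (frame number, middle-point value, FPS) for every frame line."""
    return [(int(frame), int(middle), float(fps))
            for frame, middle, fps in FRAME_LINE.findall(output)]


def summarize(samples: List[Tuple[int, int, float]]) -> str:
    """One-line summary: frame count, mean FPS and how many frames read 0."""
    if not samples:
        return "no frame lines found"
    zero = sum(1 for _, middle, _ in samples if middle == 0)
    avg = mean(fps for _, _, fps in samples)
    return f"{len(samples)} frames, mean FPS {avg:.2f}, {zero} with middle point 0"
```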

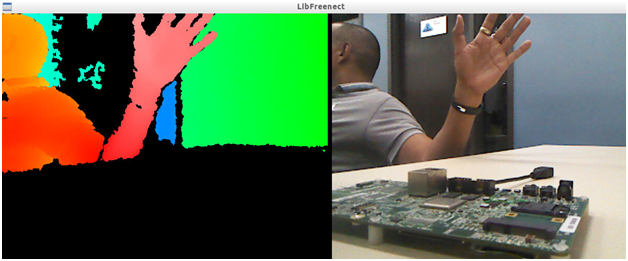

libfreenect:

$ cd ~/devel/

$ git clone https://github.com/OpenKinect/libfreenect.git

$ cd libfreenect

$ mkdir build

$ cd build

$ cmake .. -L -DBUILD_AUDIO=ON

$ make

$ sudo make install

Connect the Kinect power supply and connect the Kinect to a USB port on the NXP board. Then run one of the samples, for example:

$ sudo freenect-glview

Note: If freenect-glview reports a shared library error, refresh the ldconfig cache. The easiest way to do this is to create a file named usr-local-libs.conf (or any name you wish) in your home directory, containing the line:

/usr/local/lib

Then move the file to /etc/ld.so.conf.d/usr-local-libs.conf and update the ldconfig cache as root:

$ sudo mv ~/usr-local-libs.conf /etc/ld.so.conf.d/usr-local-libs.conf

$ sudo /sbin/ldconfig -v
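After running ldconfig, you can verify the fix from Python 3.7+. This sketch checks whether /usr/local/lib is listed under /etc/ld.so.conf.d. It also checks whether the standard-library function `ctypes.util.find_library` can locate libfreenect. The other function names are hypothetical. The library name "freenect" assumes the default libfreenect install.

```python
import ctypes.util
from pathlib import Path


def lists_directory(conf_text: str, directory: str = "/usr/local/lib") -> bool:
    """True if an ld.so.conf-style file lists `directory` on a non-comment line."""
    wanted = directory.rstrip("/")
    for line in conf_text.splitlines():
        entry = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if entry and entry.rstrip("/") == wanted:
            return True
    return False


def local_lib_configured(conf_dir: Path = Path("/etc/ld.so.conf.d")) -> bool:
    """True if any *.conf file in conf_dir lists /usr/local/lib."""
    return any(lists_directory(p.read_text()) for p in conf_dir.glob("*.conf"))


def freenect_found() -> bool:
    """True if ctypes can locate libfreenect (on Linux it consults the ldconfig cache first)."""
    return ctypes.util.find_library("freenect") is not None
```

If `local_lib_configured()` is True but `freenect_found()` is False, the cache probably still needs refreshing with `sudo /sbin/ldconfig` after `sudo make install`.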
