What is the output signature of the ssd_mobilenet_v3 object detection model?

Hi team,

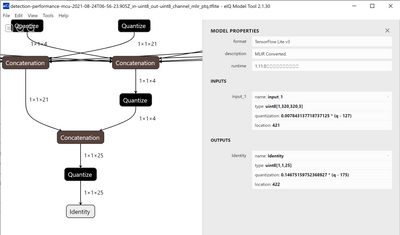

We are using the eIQ Toolkit to train an object detection model on the VOC dataset. We selected the performance model and trained it. After training, we exported the model and were able to visualize it with the eIQ Model Tool.

The output tensor (Identity) of the model has shape 1x1x25. But as far as we know, the output of an object detection model (https://www.tensorflow.org/lite/examples/object_detection/overview) contains bounding box locations and confidences.

Can anyone please explain the output tensor signature of this model developed in eIQ Portal? How should the 1x1x25 output tensor be interpreted?

Thanks for the feedback @Ramson ,

Assuming one object per image for the performance detection model, "[5-7] - Scores" does not make sense: shouldn't we get only one score for the one detected class?

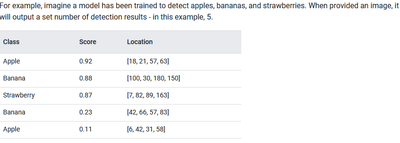

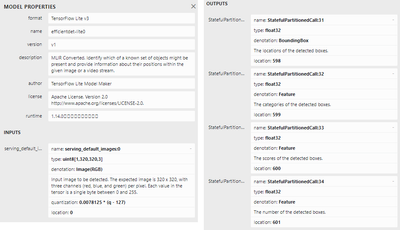

Based on https://www.tensorflow.org/lite/examples/object_detection/overview#output_signature and this image

we should get something like [1,1,6]=[0-3] locations, [4] class, [5] score.

I do not think that printing those values can be helpful, as I am getting the error described above:

IndexError: list index out of range

However, I have prepared a piece of code using SSD MobileNet v3 in its TFLite version, downloaded from the TensorFlow Model Zoo.

The repo is available here at my GitHub.

At the end, you can see how to interpret the model's outputs (box locations, classes, scores, number of detections). I hope it will be helpful somehow.

Therefore, it is a bit strange how the model from eIQ Portal looks and how it should be interpreted.

Hi @MarcinChelminsk ,

As we can see there is only one output tensor and not 4. Just try to get the output tensor with index 0 as shown below.

boxes = get_output_tensor(interpreter, 0)

Then print the values in boxes. It will have shape [1,1,8], so print those 8 values accordingly. If you are still getting an index error while printing, attach the code you are using to print them.

Regards,

Ramson Jehu K

there you go:

# Get all outputs from the model

boxes = get_output_tensor(interpreter, 0)

print("Printing boxes locations\n", boxes)

print(boxes.shape, boxes.dtype)

np.savetxt("boxes_locations.txt", boxes, delimiter=' ')

The results are in the attached *.txt file. The shape and dtype of the array are:

(2034, 8)

float32
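With 2034 rows of 8 values, one way to look for a single prediction is to pick the row whose best class score is highest. The sketch below is only an illustration in plain Python: `best_row` is a hypothetical helper, and `score_start=5` follows the row layout guessed elsewhere in this thread (4 box values, 1 class value, then one score per class), which NXP has not confirmed. The two sample rows are made up.

```python
def best_row(rows, score_start=5):
    """Return (row_index, class_index, score) for the highest-scoring row.

    ASSUMPTION: columns from score_start onward are per-class scores.
    Adjust score_start once the real layout of the tensor is known.
    """
    best = None
    for i, row in enumerate(rows):
        scores = [float(v) for v in row[score_start:]]
        # Index of the largest score within this row
        class_index = max(range(len(scores)), key=scores.__getitem__)
        candidate = (i, class_index, scores[class_index])
        if best is None or candidate[2] > best[2]:
            best = candidate
    return best


# Made-up data: 2 rows instead of 2034
rows = [
    [0.1, 0.1, 0.5, 0.5, 0.0, 0.2, 0.7, 0.1],
    [0.2, 0.2, 0.6, 0.6, 0.0, 0.9, 0.05, 0.05],
]
print(best_row(rows))  # (1, 0, 0.9)
```

The same function should also accept the squeezed NumPy array from `get_output_tensor`, since it only iterates over rows and slices them.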

Hi @MarcinChelminsk ,

I see that you mentioned previously:

"After these changes, in case of my model received after training and exporting from eIQ Portal (model is for detecting 3 categories of fruits, output signature is [1,1,8], I do not know why these values),"

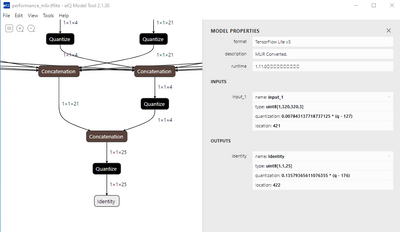

But now the output signature seems to be [2034,8]; it should have been [1,8]. Did you use the TOCO optimizer when exporting your model? I have seen this difference between TOCO and MLIR optimizations for the same model. Refer below:

This is output when TOCO is used:

And this is when MLIR is used:

(Note: ignore the [1,1,25], which is because I have 20 classes. In your case it would be [1,1,8].)

If this is the case, try exporting with the MLIR optimization and printing those values, so that we get [1,8], which would be easier to interpret than [2034,8].

I used the default settings when exporting, and the exported file name is detection-performance-mcu-2021-09-01T13-55-40.335Z_mlir.tflite (so I believe it refers to the MLIR converter option).

[1,1,8] was read from the eIQ Model Tool when I opened the exported model. Here is how it looks in my case:

[2034,8] comes from the code I attached earlier.

I have also tried the TOCO converter, see below:

So it looks different compared to your outputs.

@MarcinChelminsk , the int and float difference is because I used quantization.

From the image you shared, with the MLIR-converted model you are getting a [1,1,8] output tensor, right? Then in code it should also be [1,8] and not [2034,8]. Try printing those values as you did before. We will try to infer something from that.

It is totally clear what you are saying. Could you try this code on your end (please change model_path)?

# MLIR

print("* * * * * MLIR * * * * *")

# Load the TFLite model

model_path = '/content/detection-performance-mcu-2021-09-01T13-55-40.335Z_mlir.tflite'

interpreter = tf.lite.Interpreter(model_path=model_path)

interpreter.allocate_tensors()

# Get input and output tensors BEFORE interpreter.invoke()

input_details = interpreter.get_input_details()

print("INPUT DETAILS", input_details)

output_details = interpreter.get_output_details()

print("OUTPUT DETAILS", output_details)

interpreter.invoke()

# Get input and output tensors AFTER interpreter.invoke()

input_details = interpreter.get_input_details()

print("INPUT DETAILS", input_details)

output_details = interpreter.get_output_details()

print("OUTPUT DETAILS", output_details)

# TOCO

print("* * * * * TOCO * * * * *")

# Load the TFLite model

model_path = '/content/detection-performance-mcu-2021-09-01T13-55-40.335Z_toco.tflite'

interpreter = tf.lite.Interpreter(model_path=model_path)

interpreter.allocate_tensors()

# Get input and output tensors BEFORE interpreter.invoke()

input_details = interpreter.get_input_details()

print("INPUT DETAILS", input_details)

output_details = interpreter.get_output_details()

print("OUTPUT DETAILS", output_details)

interpreter.invoke()

# Get input and output tensors AFTER interpreter.invoke()

input_details = interpreter.get_input_details()

print("INPUT DETAILS", input_details)

output_details = interpreter.get_output_details()

print("OUTPUT DETAILS", output_details)

In my case, the MLIR model reports a different output tensor shape after calling interpreter.invoke() than before calling it (compare [1,1,8] with [1,2034,8]):

OUTPUT DETAILS [{'name': 'Identity', 'index': 407, 'shape': array([1, 1, 8], dtype=int32), 'shape_signature': array([-1, -1, 8], dtype=int32), 'dtype': <class 'numpy.float32'>, 'quantization': (0.0, 0), 'quantization_parameters': {'scales': array([], dtype=float32), 'zero_points': array([], dtype=int32), 'quantized_dimension': 0}, 'sparsity_parameters': {}}]

OUTPUT DETAILS [{'name': 'Identity', 'index': 407, 'shape': array([ 1, 2034, 8], dtype=int32), 'shape_signature': array([-1, -1, 8], dtype=int32), 'dtype': <class 'numpy.float32'>, 'quantization': (0.0, 0), 'quantization_parameters': {'scales': array([], dtype=float32), 'zero_points': array([], dtype=int32), 'quantized_dimension': 0}, 'sparsity_parameters': {}}]

In the case of TOCO, they are the same.
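Both dumps show a `shape_signature` of `[-1, -1, 8]`. In TensorFlow Lite, -1 in `shape_signature` marks a dimension whose size is not fixed in the model, which would be consistent with the `shape` field changing once `invoke()` has run. A minimal sketch for spotting such axes from one entry of `get_output_details()`; `dynamic_axes` and `describe_output` are hypothetical helper names, and the dictionary below is a trimmed copy of the dump above with plain lists in place of NumPy arrays.

```python
def dynamic_axes(detail):
    """Return the axes that shape_signature marks as dynamic (-1)."""
    return [axis for axis, dim in enumerate(detail["shape_signature"])
            if int(dim) == -1]


def describe_output(detail):
    """Build a one-line summary of an output tensor's current shape."""
    shape = [int(d) for d in detail["shape"]]
    axes = dynamic_axes(detail)
    if axes:
        return f"{detail['name']}: shape {shape}, dynamic axes {axes}"
    return f"{detail['name']}: shape {shape}, fully static"


# Trimmed copy of the 'Identity' entry printed before invoke()
detail = {"name": "Identity", "shape": [1, 1, 8], "shape_signature": [-1, -1, 8]}
print(describe_output(detail))
```

If an output has dynamic axes, it seems safer to read its shape from the tensor returned by `interpreter.get_tensor(...)` after `invoke()` rather than from the details printed beforehand.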

@MarcinChelminsk , I'm sorry, I'm not able to run my model since I'm facing this issue: https://community.nxp.com/t5/eIQ-Machine-Learning-Software/Reshape-error-in-ssd-mobilent-v3-object-d...

Hi @MarcinChelminsk ,

I think the performance object detection model detects only one object per image.

The output signature [1,1,8] can be interpreted as follows:

[0-3] - Locations

[4] - Class

[5-7] - Scores

Since you have 3 classes, there are three score values. In my case it was [1,1,25] because I had 20 classes.

The order might differ; I am not sure of this answer. We need clarification from NXP.
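The proposed split can be written down as a small helper, which also makes the arithmetic explicit: 4 + 1 + 3 = 8 for 3 classes and 4 + 1 + 20 = 25 for 20 classes. This is only a sketch of the guess above, not a confirmed description of the eIQ model; `decode_row` is a hypothetical name and the sample row is made up.

```python
def decode_row(row, labels):
    """Split one output row using the layout GUESSED in this thread:
    [0-3] locations, [4] class, [5...] one score per class (unconfirmed).
    """
    expected = 5 + len(labels)
    if len(row) != expected:
        raise ValueError(f"expected {expected} values, got {len(row)}")
    return {
        "box": [float(v) for v in row[0:4]],
        "class": float(row[4]),
        "scores": {label: float(s) for label, s in zip(labels, row[5:])},
    }


labels = ["Apple", "Banana", "Orange"]            # classes used in this thread
row = [0.1, 0.2, 0.6, 0.7, 1.0, 0.2, 0.7, 0.1]    # made-up values
result = decode_row(row, labels)
print(result["box"], result["class"], result["scores"])
```

The length check is there so that a mismatch between the number of labels and the tensor width shows up immediately instead of silently mislabeling scores.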

Can you run your model through this tutorial: https://www.tensorflow.org/lite/tutorials/model_maker_object_detection ?

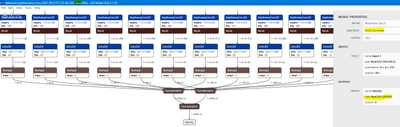

As a first step before running your own model, I suggest clicking Run in Google Colab and then Runtime->Run All. This creates a model.tflite file (for detecting 5 classes of food). Opening this reference model in eIQ Model Tool shows the following:

This confirms the info you attached above: the output will have locations, classes, scores, and number of detections (https://www.tensorflow.org/lite/examples/object_detection/overview#output_signature).

This info is used in notebook code:

# Get all outputs from the model

boxes = get_output_tensor(interpreter, 0)

classes = get_output_tensor(interpreter, 1)

scores = get_output_tensor(interpreter, 2)

count = int(get_output_tensor(interpreter, 3))
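For a model that does expose these four outputs, the usual next step is to keep only the detections whose score passes a threshold. A minimal sketch in plain Python with made-up values; `filter_detections` is a hypothetical name and the result keys are chosen for illustration, so this is similar in spirit to the notebook rather than a copy of it.

```python
def filter_detections(boxes, classes, scores, count, threshold):
    """Keep the first `count` detections whose score is >= threshold."""
    results = []
    for i in range(count):
        if scores[i] >= threshold:
            results.append({
                "bounding_box": boxes[i],
                "class_id": classes[i],
                "score": scores[i],
            })
    return results


# Made-up outputs for two candidate detections
boxes = [[0.1, 0.1, 0.5, 0.5], [0.2, 0.2, 0.6, 0.6]]
classes = [0.0, 2.0]
scores = [0.9, 0.3]
count = 2

kept = filter_detections(boxes, classes, scores, count, threshold=0.6)
print(kept)
```

This only works when the model has the four separate outputs; it does not apply as-is to a model with a single Identity output.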

Now, import your model into Google Colab by adding this piece of code (by default it should go to the /content directory):

from google.colab import files

uploaded = files.upload()

for fn in uploaded.keys():
    print('User uploaded file "{name}" with length {length} bytes'.format(
        name=fn, length=len(uploaded[fn])))

Afterwards, look at the section "(Optional) Test the TFLite model on your image" and change a few lines of code:

- change model path to your model path

- change classes to your classes

model_path = '/content/detection-performance-mcu-2021-09-01T13-55-40.335Z_mlir.tflite'

# model_path = 'model.tflite'

# Load the labels into a list

classes = ['Apple', 'Banana', 'Orange']

# classes = ['???'] * model.model_spec.config.num_classes

# label_map = model.model_spec.config.label_map

# for label_id, label_name in label_map.as_dict().items():

# classes[label_id-1] = label_name

And final changes here:

- search the Internet for an example image containing the objects you want to detect (in my case some fruits) and update INPUT_IMAGE_URL

- DETECTION_THRESHOLD up to you

#@title Run object detection and show the detection results

INPUT_IMAGE_URL = "https://messalonskeehs.files.wordpress.com/2013/02/screen-shot-2013-02-06-at-10-50-37-pm.png" #@param {type:"string"}

# INPUT_IMAGE_URL = "https://storage.googleapis.com/cloud-ml-data/img/openimage/3/2520/3916261642_0a504acd60_o.jpg" #@param {type:"string"}

DETECTION_THRESHOLD = 0.6 #@param {type:"number"}

After these changes, with my model trained in and exported from eIQ Portal (the model detects 3 categories of fruits and its output signature is [1,1,8]; I do not know why these values), I am getting an error:

---------------------------------------------------------------------------

IndexError Traceback (most recent call last)

<ipython-input-48-497a12c43559> in <module>()

20 TEMP_FILE,

21 interpreter,

---> 22 threshold=DETECTION_THRESHOLD

23 )

24

2 frames

<ipython-input-47-ce04f95b8354> in run_odt_and_draw_results(image_path, interpreter, threshold)

79

80 # Run object detection on the input image

---> 81 results = detect_objects(interpreter, preprocessed_image, threshold=threshold)

82

83 # Plot the detection results on the input image

<ipython-input-47-ce04f95b8354> in detect_objects(interpreter, image, threshold)

51 # Get all outputs from the model

52 boxes = get_output_tensor(interpreter, 0)

---> 53 classes = get_output_tensor(interpreter, 1)

54 scores = get_output_tensor(interpreter, 2)

55 count = int(get_output_tensor(interpreter, 3))

<ipython-input-47-ce04f95b8354> in get_output_tensor(interpreter, index)

38 def get_output_tensor(interpreter, index):

39 """Retur the output tensor at the given index."""

---> 40 output_details = interpreter.get_output_details()[index]

41 tensor = np.squeeze(interpreter.get_tensor(output_details['index']))

42 return tensor

IndexError: list index out of range

This comes after calling:

classes = get_output_tensor(interpreter, 1)

We are trying to read an output tensor at an index that does not exist. The model has only one output, called Identity, as far as I can understand.

So I am wondering now: how should an object detection model exported from eIQ Portal be handled?
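Until the layout is clarified, one way to make the failure easier to read is to check how many outputs the model exposes before indexing into them. A minimal sketch: `get_output_detail` is a hypothetical helper built on the interpreter's `get_output_details()` method, and `FakeInterpreter` is only a stand-in so the example runs without TensorFlow or the model file.

```python
def get_output_detail(interpreter, index):
    """Return the output detail at index, with a readable error if missing."""
    details = interpreter.get_output_details()
    if not 0 <= index < len(details):
        names = [d["name"] for d in details]
        raise ValueError(
            f"model exposes {len(details)} output tensor(s) {names}, "
            f"so output index {index} does not exist"
        )
    return details[index]


class FakeInterpreter:
    """Stand-in for tf.lite.Interpreter (illustration only)."""

    def get_output_details(self):
        # Mirrors the single 'Identity' output reported in this thread
        return [{"name": "Identity", "index": 407, "shape": [1, 1, 8]}]


interpreter = FakeInterpreter()
print(get_output_detail(interpreter, 0)["name"])
try:
    get_output_detail(interpreter, 1)
except ValueError as err:
    print(err)
```

With a real interpreter, the same check would report a single Identity output instead of raising a bare IndexError at index 1.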