Hi, we are trying to deploy the open-source object detection model (https://www.tensorflow.org/lite/examples/object_detection/overview) on the i.MX RT1176 EVK kit. We imported the tensorflow_lite_label_image example from SDK v2.9.0, converted the object detection model's .tflite file to a C array using xxd, and replaced the model data and model length in the model_data.h file.
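For readers repeating the conversion step: `xxd -i` emits an `unsigned char` array and an `unsigned int` length. One quick sanity check after pasting them into model_data.h is that bytes 4 to 7 of the array spell the TF Lite FlatBuffer file identifier `TFL3`. The following is only a minimal sketch of that check. The names `model_data`, `model_data_len` and `LooksLikeTfliteModel` are placeholders chosen for illustration (the SDK example may use different names), and the stub bytes are not a real model.

```cpp
#include <cstddef>
#include <cstring>

// Placeholder standing in for `xxd -i` output; a real model is far larger.
// Bytes 0..3 hold the FlatBuffer root offset, bytes 4..7 the file identifier.
alignas(16) const unsigned char model_data[] = {
    0x1c, 0x00, 0x00, 0x00, 'T', 'F', 'L', '3',
    0x00, 0x00, 0x00, 0x00};
const unsigned int model_data_len = sizeof(model_data);

// Returns true if the buffer carries the "TFL3" identifier at offset 4.
// This only catches a mangled or truncated array, not an unsupported model.
bool LooksLikeTfliteModel(const unsigned char* data, std::size_t len) {
  return len >= 8 && std::memcmp(data + 4, "TFL3", 4) == 0;
}
```

Calling `LooksLikeTfliteModel(model_data, model_data_len)` once at startup, before the model is handed to the interpreter, is enough to rule out a broken copy and paste.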

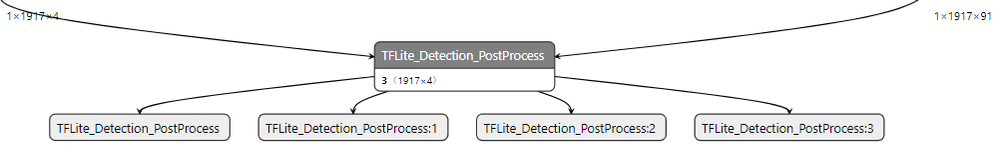

The object detection model contains the custom operator TFLite_Detection_PostProcess, as shown in the image above.

How do we add the custom operator to the tflite::MutableOpResolver?

Since it is not added, we are getting the following error:

ERROR: Encountered unresolved custom op: TFLite_Detection_PostProcess.

ERROR: Node number 63 (TFLite_Detection_PostProcess) failed to prepare.

Failed to allocate tensors!

Failed initializing model

Please help. Thanks in advance.

Regards,

Ramson

Hello Ramson,

My first suggestion would be to move to SDK 2.10, as it contains all of the latest updates. With 2.10 we moved from TF Lite to TF Lite Micro, which is better optimized for MCUs. The inference engine still supports TF Lite models; only the computations and the library are specifically optimized for ARM MCUs.

Next, switch to the AllOpsResolver to make sure the operation is actually supported by TF Lite (Micro). If that works, you can open the ops .cpp file in the source/model folder, register all the required ops, and remove the unnecessary ones.

If that fails, the only option left would be to implement the operation in the TensorFlow Lite library, or to port an existing implementation into it.
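To illustrate why the choice of resolver matters: the interpreter looks up every operator of the model in the resolver, and a custom operator is matched by the name string stored in the model. The sketch below is a toy model of that lookup, not the TF Lite or TF Lite Micro API. `ToyRegistration` and `ToyOpResolver` are hypothetical names used only for illustration.

```cpp
#include <map>
#include <string>

// Hypothetical stand-in for a kernel registration; not TfLiteRegistration.
struct ToyRegistration {
  std::string kernel_name;
};

// Toy resolver: custom ops are found by the exact name stored in the model.
class ToyOpResolver {
 public:
  void AddCustom(const std::string& name, const ToyRegistration* reg) {
    custom_ops_[name] = reg;
  }

  // Returns nullptr when the name was never registered, which corresponds
  // to the "unresolved custom op" situation from the first post.
  const ToyRegistration* FindCustom(const std::string& name) const {
    auto it = custom_ops_.find(name);
    return it == custom_ops_.end() ? nullptr : it->second;
  }

 private:
  std::map<std::string, const ToyRegistration*> custom_ops_;
};
```

In this toy model, a resolver that registers everything never returns `nullptr` for a supported op, which is why trying the full resolver first separates "op not registered" from "op not implemented at all".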

Regards,

David

Suggested solution from me, for the same setup as in the first post, i.e. i.MXRT1170-EVK, SDK 2.9.0, tensorflow_lite_label_image, model from https://www.tensorflow.org/lite/examples/object_detection/overview:

- In source/model/model_mobilenet_ops.cpp, add:

#include "tensorflow/lite/kernels/custom_ops_register.h"

and register the custom operator with the resolver, straight after the existing resolver.AddBuiltin() calls:

resolver.AddCustom("TFLite_Detection_PostProcess", tflite::ops::custom::Register_TFLite_Detection_PostProcess());

- In eiq/tensorflow-lite/tensorflow/lite/kernels/custom_ops_register.h, add:

TfLiteRegistration* Register_DETECTION_POSTPROCESS();

TfLiteRegistration* Register_TFLite_Detection_PostProcess() {

  return Register_DETECTION_POSTPROCESS();

}
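One design note on the alias function above: a function defined in a header is usually marked `inline`, so that including the header from more than one .cpp file does not cause multiple-definition linker errors. The following is a minimal, self-contained sketch of that pattern under stated assumptions. `ToyKernelRegistration` is a hypothetical stand-in for `TfLiteRegistration`, and the body of `Register_DETECTION_POSTPROCESS` here is a placeholder, not the library's kernel registration.

```cpp
// Hypothetical stand-in for TfLiteRegistration, for illustration only.
struct ToyKernelRegistration {
  const char* custom_name;
};

// Placeholder for the kernel's existing registration function.
inline ToyKernelRegistration* Register_DETECTION_POSTPROCESS() {
  static ToyKernelRegistration r = {"TFLite_Detection_PostProcess"};
  return &r;
}

// Alias defined in a header: `inline` lets several .cpp files include it
// without multiple-definition linker errors.
inline ToyKernelRegistration* Register_TFLite_Detection_PostProcess() {
  return Register_DETECTION_POSTPROCESS();
}
```

Both functions return the same registration object, so the alias only adds a second name for the resolver call and no extra state.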
