Glow vs TFLM performance benchmarks on i.MX RT1060?

I was reading NXP's blog on Glow compiler for PyTorch at https://medium.com/pytorch/glow-compiler-optimizes-neural-networks-for-low-power-nxp-mcus-e095abe149...

The post says the i.MX RT1060 was used to run both the Glow and TensorFlow Lite for Microcontrollers (TFLM) frameworks. Are there any recorded performance benchmarks (inference time, memory consumption, supported operations, etc.) comparing Glow and TFLM on the same device? If so, could you please share them?

Hello @ramkumarkoppu_p,

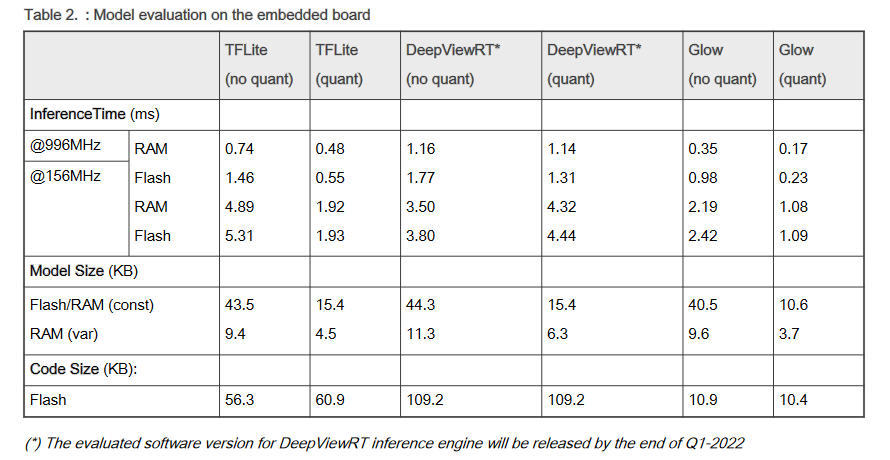

One such comparison can be found in AN13562.

However, the results are very model dependent. In my experience, Glow has generally outperformed TF Lite Micro slightly, but there have also been cases where the situation was reversed. It all depends on the specific layers used in the model, which versions are supported, and so on. Ultimately, it comes down to experimentation.

Since it's possible to convert TensorFlow models to ONNX and then compile them with Glow, you can always train your model in TensorFlow, deploy it both through TF Lite and through Glow, compare the performance, and decide for yourself which suits your project better.
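If you collect per-inference timings from the board for both builds, a small host-side script can summarize them. This is only a sketch: the function name `compare_latency` and the result keys are hypothetical, and it assumes you have already gathered latency samples in milliseconds yourself (for example by logging a timer delta around each inference call on the target).

```python
from statistics import median


def compare_latency(glow_ms, tflm_ms):
    """Summarize per-inference latencies (in ms) measured on the same board.

    Both arguments are lists of timings you collected yourself; nothing here
    talks to the target or to either framework.
    """
    if not glow_ms or not tflm_ms:
        raise ValueError("need at least one measurement per framework")

    # The median is less sensitive than the mean to a slow first (cold) run.
    glow_med = median(glow_ms)
    tflm_med = median(tflm_ms)
    if glow_med <= 0 or tflm_med <= 0:
        raise ValueError("latencies must be positive")

    if glow_med < tflm_med:
        faster = "glow"
    elif tflm_med < glow_med:
        faster = "tflm"
    else:
        faster = "tie"

    return {
        "glow_median_ms": glow_med,
        "tflm_median_ms": tflm_med,
        "faster": faster,
        # A ratio above 1.0 means the Glow build was faster by that factor.
        "tflm_over_glow": tflm_med / glow_med,
    }
```

Feed it the same model, the same input data, and the same clock and memory settings for both builds; otherwise the ratio says more about the setup than about the frameworks.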

As for supported operations, that depends on the versions of TensorFlow and Glow currently supported in the SDK. Please have a look at the user guides in the doc folder of the SDK.

I also found this article: https://towardsdatascience.com/tflite-micro-vs-glow-aot-6524be02ba2a which explains the difference between Glow and TF Lite Micro pretty nicely. It's almost a year old, so the data might be outdated at this point, but the general idea presented in the article still applies.

Best Regards,

David

Hi David,

I have a TF Lite model that is targeted to run on the Google Coral Dev Board. If I run the same TF Lite model on the ARM Ethos-U65 microNPU, which will be available on the i.MX 93 application processor, will there be a performance degradation? Has NXP done any tests with the ARM Ethos-U65 microNPU in terms of inference time, operator availability, and power usage?

Hello @ramkumarkoppu_p,

I am not able to comment on future releases, sorry. The i.MX 93 is still in preproduction and all its specifications are still subject to change without notice, as stated on its NXP page.

Also, since this is a thread about Glow vs TFLM on i.MX RT1060, could you please open a new thread if you have questions that don't relate to the topic here? It helps keep the community easy to navigate. (And since I'm focused on RT devices, I am not able to provide proper support for i.MX application processors anyway.)

Best Regards,

David

Thanks, David, for the response. My main issue is that I would like to use research models (not yet standard) created with PyTorch, but I could not find a PyTorch counterpart to TF Lite Micro, that is, an inference engine that runs on Cortex-M. The closest I have found is Glow, which seems to have PyTorch support. But I don't know whether Glow can take advantage of NPUs like ARM Ethos, which NXP is also planning to include in new versions of its MCUs.

I see. In that case, I would definitely recommend you give Glow a try. As for accelerator support, you could have a look at the RT685, which offers neural network acceleration using the HiFi4 DSP and is fully supported by Glow.

We do plan on supporting NPUs in future devices with our eIQ enablement and will be sharing more details on that in the future.