T2080 10GbE network has low speed

Hi,

I tried using the 10GbE (copper) networking on the T2080RDB-PC board, but I cannot get the connection to run at 10 Gbit/s. I'm getting around 1 Gbit/s, which is way below our requirements.

Do you have any suggestions on how to reach 10 Gbit/s?

The lack of this fast networking is blocking us from using the board in this project.

Thanks!

I'm attaching some configuration of the system:

U-Boot setup

=> print bootcmd

bootcmd=setenv bootargs root=/dev/mmcblk0p1 rw rootwait console=$consoledev,$baudrate; ext2load mmc 0:1 $loadaddr /boot/$bootfile; ext2load mmc 0:1 $fdtaddr /boot/$fdtfile; bootm $loadaddr - $fdtaddr

=> print fdtfile

fdtfile=uImage-t2080rdb-usdpaa.dtb

=> print bootfile

bootfile=uImage

Linux setup

root@t2080rdb:~# fmc --pdl /etc/fmc/config/hxs_pdl_v3.xml --pcd /etc/fmc/config/private/t2080rdb/RRFFXX_P_66_15/policy_ipv4.xml --config /etc/fmc/config/private/t2080rdb/RRFFXX_P_66_15/config.xml

root@t2080rdb:~# ethtool fm1-mac1

Settings for fm1-mac1:

Supported ports: [ ]

Supported link modes: 10000baseT/Full

Supported pause frame use: Symmetric Receive-only

Supports auto-negotiation: No

Advertised link modes: 10000baseT/Full

Advertised pause frame use: Symmetric Receive-only

Advertised auto-negotiation: No

Speed: 10000Mb/s

Duplex: Full

Port: MII

PHYAD: 0

Transceiver: external

Auto-negotiation: on

Supports Wake-on: d

Wake-on: d

Current message level: 0xffffffff (-1)

drv probe link timer ifdown ifup rx_err tx_err tx_queued intr tx_done rx_status pktdata hw wol 0xffff8000

Link detected: yes

root@t2080rdb:~# iperf -s

------------------------------------------------------------

Server listening on TCP port 5001

TCP window size: 85.3 KByte (default)

------------------------------------------------------------

[ 4] local 9.4.113.152 port 5001 connected with 9.4.113.46 port 53618

[ ID] Interval Transfer Bandwidth

[ 4] 0.0-10.0 sec 1.39 GBytes 1.19 Gbits/sec

root@t2080rdb:~# hostname

t2080rdb

root@t2080rdb:~# uname -a

Linux t2080rdb 3.12.37-rt51+g43cecda #11 SMP Wed Mar 30 17:11:05 CEST 2016 ppc64 GNU/Linux

root@t2080rdb:~# cat /proc/cmdline

root=/dev/mmcblk0p1 rw rootwait console=ttyS0,115200

root@t2080rdb:~# cat /etc/lsb-release

LSB_VERSION="core-4.1-noarch:core-4.1-powerpc64"

DISTRIB_ID=fsl-qoriq

DISTRIB_RELEASE=1.9

DISTRIB_CODENAME=fido

DISTRIB_DESCRIPTION="QorIQ SDK (FSL Reference Distro) 1.9"

Original Attachment has been moved to: t2080-linux-config.zip

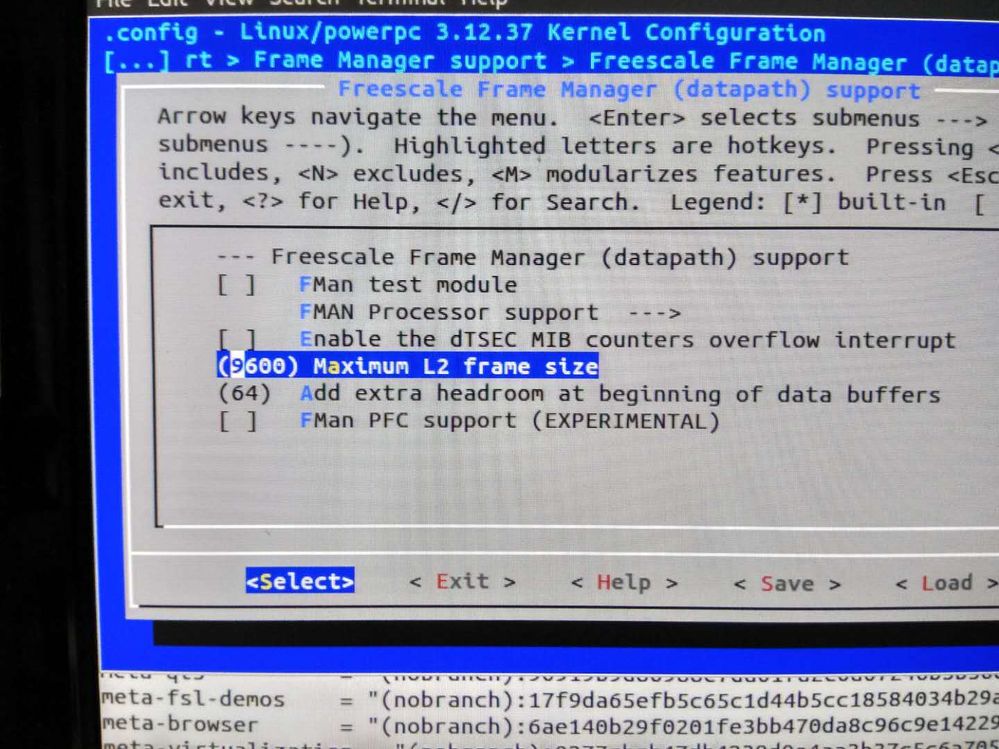

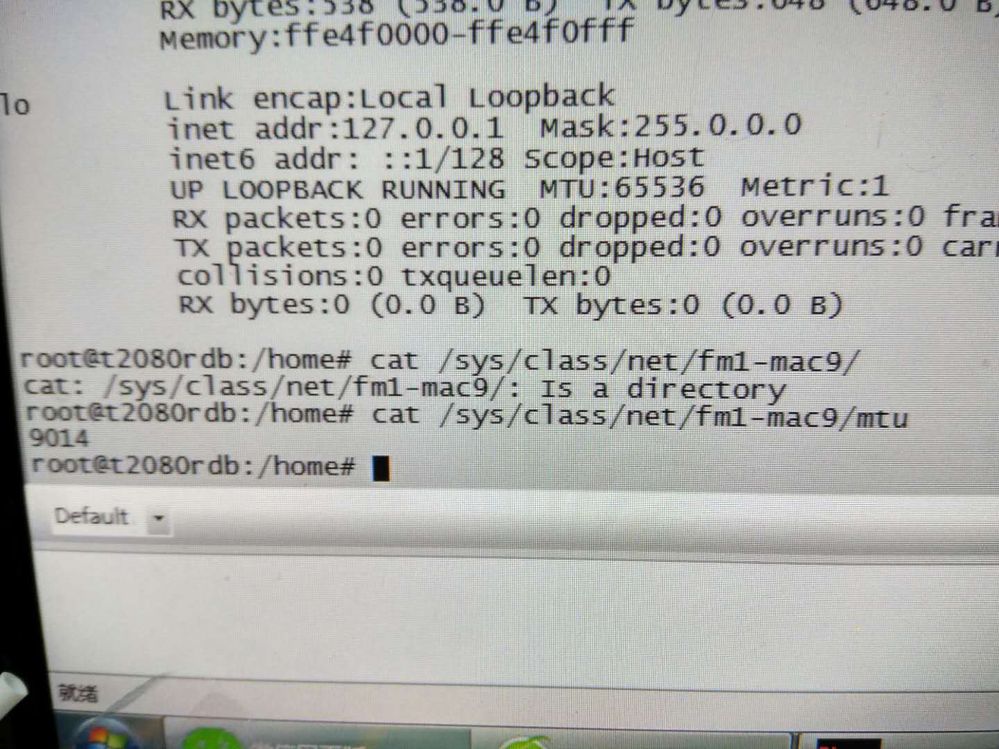

Hello, have you solved this problem? I am running into it now as well. I have set MAXFRM = 9600 and MTU = 9014. Transmit throughput now reaches 700 MB/s, but receive throughput is only 200 MB/s.

@小贱 牛

As the previous poster said, set the device MTU to 9600, and make sure that your peer and any routers or switches in the path also support an MTU of 9600. You have evidently identified the wrong network interface, which is why none of the earlier posters wanted to answer such a basic question.

Hello Sasa Tomic,

It seems that you did not boot with the dts for USDPAA: the Ethernet port fm1-mac1 is visible in Linux, and it would not be visible under Linux in a USDPAA environment.

Regarding the performance problem, I checked your kernel configuration file and suspect that it is caused by an improper setting of the parameter CONFIG_FSL_FM_MAX_FRAME_SIZE.

The default MAXFRM is 1522, which allows MTUs up to 1500; in your kernel config file it is set to 9600.

MAXFRM directly influences the partitioning of FMan's internal MURAM among the available Ethernet ports, because it determines the value of an FMan internal parameter called "FIFO Size".

The DPAA Ethernet driver conservatively allocates all new skbuffs large enough to accommodate MAXFRM, plus some DPAA-private room. This wastes a lot of memory: when the actual MTU is smaller (e.g. 1500) but MAXFRM is jumbo-sized (e.g. 9600), there is high pressure on the buffer pools, possibly leading to memory exhaustion. So please adjust MAXFRM and MTU together so that they suit your system.
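The MAXFRM and MTU pairing described above can be sanity-checked with a small shell sketch. It assumes that the 22-byte gap implied by the default pair (MAXFRM 1522, MTU 1500) also applies to jumbo values; the function names are hypothetical helpers, not part of the SDK.

```shell
#!/bin/sh
# Largest MTU that fits under a given MAXFRM.
# Assumption: the 22-byte gap of the default pair (1522 -> 1500)
# carries over unchanged to larger MAXFRM values.
max_mtu_for_maxfrm() {
    echo $(( $1 - 22 ))
}

# Compare an interface MTU ($2) against the limit implied by MAXFRM ($1).
check_mtu() {
    limit=$(max_mtu_for_maxfrm "$1")
    if [ "$2" -gt "$limit" ]; then
        echo "mtu $2 exceeds limit $limit"
    else
        echo "mtu $2 fits, headroom $(( limit - $2 ))"
    fi
}

check_mtu 9600 9014   # prints: mtu 9014 fits, headroom 564
check_mtu 9600 1500   # prints: mtu 1500 fits, headroom 8078
```

A large headroom, as in the second call, corresponds to the case described above where the MTU is small but MAXFRM is jumbo-sized.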

Have a great day,

Yiping

-----------------------------------------------------------------------------------------------------------------------

Note: If this post answers your question, please click the Correct Answer button. Thank you!

-----------------------------------------------------------------------------------------------------------------------

Dear Yiping,

thanks for your answer. I had started to think that I would get no answer at all.

I made a small mistake in my original post: I did not mention that I tried using both uImage-t2080rdb-usdpaa-shared-interfaces.dtb and uImage-t2080rdb-usdpaa.dtb.

If I boot with uImage-t2080rdb-usdpaa.dtb I see no network interfaces and my target application needs to have regular network interfaces to operate. Having to use non-standard network interfaces would be a show-stopper for this project.

Could you please describe how to obtain regular network interfaces if I boot with uImage-t2080rdb-usdpaa.dtb fdtfile? Also, could you please describe how to test the network speed if I boot with the same usdpaa dtb file?

I wanted to use iperf or netperf because these are the tools I know and they are very similar to the target application.

I have only seen instructions to run netperf with USDPAA on P4080DS, but I was not able to reproduce the same setup for T2080. Would you be able to point me to a good reference setup that would work for me on T2080RDB?

In summary, I need:

* 2x10G interfaces (or better), with regular Linux interfaces visible in ifconfig. I would eventually bond these interfaces to get a faster virtual interface.

* Support for jumbo packets would be a plus, since without this our other networking equipment might not be able to take advantage of the full 10G speed.

Can T2080 provide me with this?

Thanks,

Hello Sasa Tomic,

The shared MAC Ethernet interface is shared by the Linux kernel and USDPAA. From your requirements, it seems that you want to assign some of the Ethernet ports to the Linux kernel and others to USDPAA.

If you only want to assign the FMan MACs at 0xfe4e0000 and 0xfe4e2000 to the Linux kernel, so that they are visible in Linux as fm1-mac1 and fm1-mac2, please modify the dts file arch/powerpc/boot/dts/t2080rdb-usdpaa.dts and delete the following Ethernet port definitions for USDPAA. The normal Linux kernel Ethernet ports are already defined in t2080rdb.dts, which is included by t2080rdb-usdpaa.dts. Please refer to the attachment.

ethernet@0 {/* 10G */

compatible = "fsl,t2080-dpa-ethernet-init", "fsl,dpa-ethernet-init";

fsl,bman-buffer-pools = <&bp7 &bp8 &bp9>;

fsl,qman-frame-queues-rx = <0x90 1 0x91 1>;

fsl,qman-frame-queues-tx = <0x98 1 0x99 1>;

};

ethernet@1 {/* 10G */

compatible = "fsl,t2080-dpa-ethernet-init", "fsl,dpa-ethernet-init";

fsl,bman-buffer-pools = <&bp7 &bp8 &bp9>;

fsl,qman-frame-queues-rx = <0x92 1 0x93 1>;

fsl,qman-frame-queues-tx = <0x9a 1 0x9b 1>;

};

If you want your system to support a jumbo-frame networking environment, it is correct to set CONFIG_FSL_FM_MAX_FRAME_SIZE to 9600, but please also configure a large MTU value for the Ethernet port.

For example:

# ifconfig fm1-gb0 mtu 9014
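After raising the MTU, it can help to confirm that jumbo frames actually pass end to end. The sketch below only prints the commands rather than running them. It assumes an iputils `ping` (the `-M do` option, which forbids fragmentation, is not available in every ping implementation), and it uses the interface name and client address from the first post as placeholders; the function names are hypothetical.

```shell
#!/bin/sh
# ICMP payload that exactly fills one IPv4 packet of the given MTU:
# MTU minus 20 bytes of IPv4 header and 8 bytes of ICMP header.
icmp_payload_for_mtu() {
    echo $(( $1 - 28 ))
}

# Print (do not run) the commands for a jumbo-path check.
# $1 = interface, $2 = MTU, $3 = peer address.
jumbo_check_cmds() {
    echo "ip link set dev $1 mtu $2"
    echo "ping -M do -c 3 -s $(icmp_payload_for_mtu "$2") $3"
}

jumbo_check_cmds fm1-mac1 9014 9.4.113.46
# prints:
#   ip link set dev fm1-mac1 mtu 9014
#   ping -M do -c 3 -s 8986 9.4.113.46
```

If the ping fails while a smaller payload such as 1472 succeeds, some device in the path is probably not passing jumbo frames.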

Have a great day,

Yiping

Hi Yiping,

actually, our requirement is a completely regular ifconfig network interface (i.e. no USDPAA) that gives us 10 Gbit/s with conventional applications. I only started playing with USDPAA because I was told that I have to use it to get high speed. Let me say it again: I do not need USDPAA at all. I just started playing with it out of desperation.

Could you please confirm whether it is possible to get 2x10 Gbit/s with regular Linux applications (e.g. NFS, ceph, or something similar) over regular ifconfig network interfaces on T2080? A one-time configuration with fmc would be perfectly fine, as would some kernel tweaks.

After many weeks/months of searching, I still haven't been able to find a reference setup that gives me the target speed.

Thanks!

Hello Sasa Tomic,

In a USDPAA environment, the networking interfaces are not assigned to the Linux kernel, and networking applications must be developed following the USDPAA framework.

If you don't need USDPAA, please just use the dtb t2080rdb.dtb; with it, all the networking interfaces are visible in Linux.

As I mentioned previously, in a jumbo-frame networking system, please configure a large MTU value to get better performance.

In 8-core 20G jumbo-frame netperf TCP stream testing (normal Linux environment) on the T2080 1.0 platform, we could get about 12 Gbit/s throughput (Sys Clock_DDR_CCB_FMAN1 1533/800/600/700).

So the throughput you reported previously is too low; please adjust your test environment.
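The 12 Gbit/s figure above was measured with 8 cores, whereas the iperf run at the top of the thread shows a single TCP connection. As a rough sketch, the shell helpers below divide the quoted aggregate evenly across the cores and print an iperf client command with parallel streams. One stream per core and the 30-second duration are assumptions for illustration, not the procedure used for the quoted measurement; `-P` and `-t` are standard iperf client options, and the function names are hypothetical.

```shell
#!/bin/sh
# Average per-stream rate in Mbit/s when an aggregate rate ($1, Mbit/s)
# is split evenly across $2 streams.
per_stream_mbit() {
    echo $(( $1 / $2 ))
}

# Print (do not run) an iperf client command with parallel TCP streams.
# $1 = server address, $2 = number of streams.
iperf_parallel_cmd() {
    echo "iperf -c $1 -P $2 -t 30"
}

per_stream_mbit 12000 8            # prints: 1500
iperf_parallel_cmd 9.4.113.152 8   # prints: iperf -c 9.4.113.152 -P 8 -t 30
```

An even split gives about 1.5 Gbit/s per stream, which is the same order as the 1.19 Gbit/s single-stream result reported earlier, so a multi-stream run may be a fairer comparison. This is only arithmetic on the quoted numbers, not a measured result.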

If you need further assistance, please send me your test console log so that I can investigate further.

Have a great day,

Yiping

Hi Yiping,

I have the same problem as the original poster: the 10GbE (copper) networking on the T2080RDB is slow.

I also tried the settings you mentioned:

1. MAXFRM = 9600

2. mtu = 9014

But the network is still slow. Is there anything else I can try?