Questions about Cache in the MPC5777C

Dear Everyone,

I am using the MPC5777C in my application.

Our OEM wants to disable the cache for SRAM, that is, to mark SRAM as cache-inhibited via the MMU TLB configuration.

I suppose their intent with cache inhibition is to ensure data coherency between core 0 and core 1.

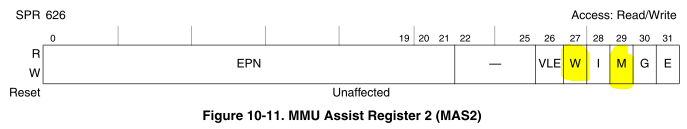

But I think this can also be achieved with the data coherency bit in MAS2, can't it?

In other words, even with the cache enabled for SRAM, data corruption between the cores is prevented if the data coherency bit is set.

I implemented a data copy routine from core 1 to core 0. The data is fairly large: the copy takes about 100 µs when the memory is cacheable and approximately 230 µs when the cache is disabled.

So I think I can get better performance with the cache.

If there are any other side effects, please let me know.

Hi,

Yes, the Platform Coherency Unit (PCU) can be used to maintain coherency between the cores and also the DMA. The MMU pages must be configured with the M and W bits set:

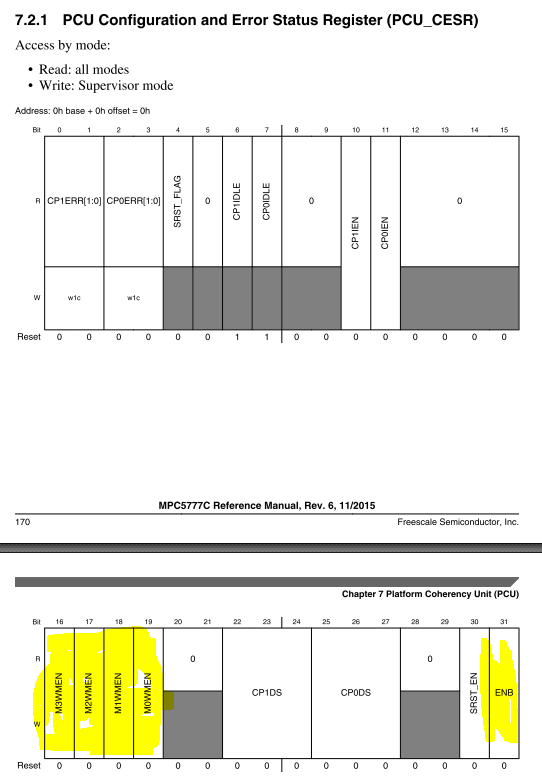
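As a rough illustration of the page attributes described above, here is a minimal sketch that builds a MAS2 value with W and M set and I left clear. The bit masks follow the usual Book E WIMGE encoding and are an assumption here; please check them against the e200z7 core reference manual. The function name `mas2_coherent_page` and the 4 KB alignment check are hypothetical and only serve the example.

```c
#include <assert.h>
#include <stdint.h>

/* MAS2 attribute bits in the usual Book E WIMGE encoding.
 * Assumption: verify these masks against the e200z7 core reference manual. */
#define MAS2_W 0x00000010u /* write-through required */
#define MAS2_I 0x00000008u /* cache inhibited */
#define MAS2_M 0x00000004u /* memory coherence required */
#define MAS2_G 0x00000002u /* guarded */
#define MAS2_E 0x00000001u /* little-endian */

/* Build a MAS2 value for a cacheable, write-through, coherent page.
 * This sketch requires a 4 KB aligned effective page address so that the
 * address cannot overlap the attribute bits. */
static uint32_t mas2_coherent_page(uint32_t epn)
{
    assert((epn & 0x00000FFFu) == 0u);
    return epn | MAS2_W | MAS2_M; /* I stays clear, so the page remains cacheable */
}
```

For example, `mas2_coherent_page(0x40000000u)` gives `0x40000014`, where `0x40000000` is used only as an example page address. On the target, the value would be written to MAS2 together with the other MAS registers before the TLB entry is written.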

The PCU can then be enabled in the PCU_CESR register by setting the MnWMEN bits (to enable monitoring of individual masters) and ENB (to globally enable PCU monitoring).

This should help to achieve higher performance.
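The PCU_CESR setup described above could be sketched roughly as follows. The thread does not give the bit positions of MnWMEN and ENB, so the code takes them from a caller-supplied layout structure; `pcu_cesr_layout` and `pcu_cesr_enable` are hypothetical names, and the real positions have to come from the MPC5777C reference manual.

```c
#include <assert.h>
#include <stdint.h>

/* HYPOTHETICAL description of the PCU_CESR fields used here.
 * Fill in the bit positions from the MPC5777C reference manual. */
typedef struct {
    unsigned enb_bit;      /* bit position of ENB (global enable) */
    unsigned mwmen_bit[4]; /* bit positions of M0WMEN..M3WMEN */
} pcu_cesr_layout;

/* Return the PCU_CESR value with monitoring enabled for the masters selected
 * in master_mask (bit n selects master n) and with ENB set. */
static uint32_t pcu_cesr_enable(uint32_t cesr, unsigned master_mask,
                                const pcu_cesr_layout *layout)
{
    assert(layout != 0);
    assert(master_mask < 16u);
    for (unsigned m = 0u; m < 4u; ++m) {
        if (master_mask & (1u << m)) {
            cesr |= (uint32_t)1u << layout->mwmen_bit[m];
        }
    }
    return cesr | ((uint32_t)1u << layout->enb_bit);
}
```

On the target, the caller would read PCU_CESR, pass the value through this function, and write the result back. A `master_mask` of `0x3` selects masters 0 and 1 only, which would be the two cores if masters 2 and 3 are the DMA masters.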

Regards,

Lukas

Hi Lukas,

Is setting M2WMEN and M3WMEN required if only coherency between core 0 and core 1 is needed?

In the simple case where cache coherency is required only between core 0 and core 1, is it necessary to check for snooping errors (CP1ERR[1:0], CP0ERR[1:0]) and to enable and handle the snooping error interrupt?

Thanks,

Francesco

First, an overflow error is not expected to occur. There are safeguard mechanisms in place to prevent the queues from overflowing. Specifically, when the queues fill to a certain capacity, the PCU notifies the cores and DMAs to temporarily stall issuing any stores to shared space until the queue level drops below the threshold. This should prevent the queue from ever overflowing. The overflow error condition was added as a backup mechanism for the unlikely event that the DMAs and/or cores do not respond to the stall request.

Yes, the user is supposed to check the error flags CP1ERR[1:0] and CP0ERR[1:0]. In case of a snooping error, a core can get non-coherent data. In that case, the user can invalidate the data cache and reset the PCU via SRST_EN. Before doing so, the user can read more details about the error from the error registers. How to react to such an error is rather application dependent.
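The recovery order described above (read the error details, invalidate the data cache, reset the PCU) might be sketched as a small planning function. This is only an illustration: it assumes that a non-zero CP0ERR or CP1ERR field indicates a snooping error, and the names `pcu_step` and `pcu_plan_recovery` are hypothetical. Whether this sequence is appropriate depends on the application.

```c
#include <assert.h>
#include <stddef.h>

/* Recovery steps in the order they are meant to be carried out. */
typedef enum {
    PCU_STEP_READ_ERROR_DETAILS, /* read the PCU error registers first */
    PCU_STEP_INVALIDATE_DCACHE,  /* discard possibly non-coherent cache lines */
    PCU_STEP_RESET_PCU           /* reset the PCU via SRST_EN */
} pcu_step;

/* cp0err and cp1err are the 2-bit CP0ERR[1:0] and CP1ERR[1:0] fields, already
 * extracted from the status register. ASSUMPTION: non-zero means an error.
 * Fills steps[] and returns the number of steps to perform (0 or 3). */
static size_t pcu_plan_recovery(unsigned cp0err, unsigned cp1err,
                                pcu_step steps[3])
{
    assert(steps != NULL);
    assert(cp0err < 4u && cp1err < 4u);
    if (cp0err == 0u && cp1err == 0u) {
        return 0u; /* no snooping error reported */
    }
    steps[0] = PCU_STEP_READ_ERROR_DETAILS;
    steps[1] = PCU_STEP_INVALIDATE_DCACHE;
    steps[2] = PCU_STEP_RESET_PCU;
    return 3u;
}
```

The caller would then carry out each returned step with its own register and cache routines, for example from the snooping error interrupt handler.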

Regards,

Lukas

Dear Lukas,

According to your explanation above, data writers are stalled if the PCU's cache synchronization queue gets full. For repeated writes to a cache-coherent memory area, this means that the effective memory bandwidth becomes the minimum of the memory throughput and the queue throughput. It seems likely that the queue's throughput is significantly lower than the normal memory throughput, which would mean that the memory throughput is reduced to that of the queue.

Is this correct?

If yes, then the question arises: to what extent is the normal memory throughput higher than that of the queue?

Is setting the "cache inhibited" property of a memory area in the MMU of both cores an equivalent way of ensuring data coherence (apart from timing)?

My final, resulting question: if we want to use bursts of data writes for cross-core communication (e.g. a memcpy of a few hundred bytes at once), would it be better to use cache-inhibited memory instead of cached memory with PCU-maintained cache coherence? Is the PCU mechanism intended more for occasional writes?

Regards

Peter

Hi Francesco,

It is not necessary to configure M2WMEN and M3WMEN; they are for DMA only.

I'm not really sure about the error handling. It is not clear from the RM. Let me check. I guess it will take some time...

Regards,

Lukas