K22: Calibrating ADC with VDDA = 3.3V, VREFH = 2.5V?

Hi there,

I have found one discussion about using dual 3.3V supplies for VDDA and VREFH, and whether or not this was a good idea. I have searched further, but couldn't find anything else on this subject.

My device: MK22FN256VLL12

I need to use a 2.5V external, high precision voltage reference for the ADC, so that is connected at the VREFH pin.

However, I also want to ensure that the performance/gain/offset of the ADC is as good as possible, so I am implementing the calibration procedure as described in AN3949.

AN3949 states that VREFH and VDDA should be above 3V. The Reference manual for my device states that VREFH = VDDA. I meet neither of those requirements.

- What would your recommendation be in such a case?

- Can I just multiply the sum of CLP and CLM registers by 3.3/2.5 or something?

I'd rather not spend money on a DC switch that can be controlled by a GPIO, if this can be avoided.

Thanks for the reply, it is much appreciated.

The "VREFH = VDDA" I agree is not a requirement, but it seems to be "highly suggested" during ADC calibration.

For the MCU I'm using, it is described under "33.4.6 Calibration function", pg. 740 in the reference manual:

For best calibration results:

• Set hardware averaging to maximum, that is, SC3[AVGE]=1 and SC3[AVGS]=11 for an average of 32

• Set ADC clock frequency fADCK less than or equal to 4 MHz

• VREFH=VDDA

• Calibrate at nominal voltage and temperature
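For reference, the settings in that list plus the gain computation from the calibration procedure could be sketched roughly as below. This is only a sketch under assumptions: the SC3 bit positions are written from memory of the Kinetis ADC16 register layout and should be checked against the device header, and the helper names (`adc_cal_sc3_value`, `adc_pick_adiv`, `adc_gain_from_cal`) are hypothetical, not SDK functions. The register reads/writes themselves are left out so the arithmetic can be tried on a PC.

```c
#include <stdint.h>

/* SC3 bit positions: assumed from the Kinetis ADC16 register
   description; verify against your device header before use. */
#define ADC_SC3_CAL      (1u << 7)              /* start calibration      */
#define ADC_SC3_CALF     (1u << 6)              /* calibration failed     */
#define ADC_SC3_AVGE     (1u << 2)              /* hardware average on    */
#define ADC_SC3_AVGS(x)  ((uint32_t)(x) & 0x3u) /* 0b11 = 32 samples      */

/* Hypothetical helper: SC3 value that starts a calibration with
   hardware averaging at its maximum (AVGE=1, AVGS=11). */
static uint32_t adc_cal_sc3_value(void)
{
    return ADC_SC3_CAL | ADC_SC3_AVGE | ADC_SC3_AVGS(3u);
}

/* Hypothetical helper: pick the smallest ADIV setting (divide by
   1, 2, 4 or 8) that brings fADCK to 4 MHz or below.
   Returns the ADIV field value 0..3, or -1 if even /8 is too fast. */
static int adc_pick_adiv(uint32_t input_clk_hz)
{
    for (int adiv = 0; adiv <= 3; ++adiv) {
        if ((input_clk_hz >> adiv) <= 4000000u) {
            return adiv;
        }
    }
    return -1;
}

/* Gain value from the six calibration results of one side
   (CLP0..CLP4 and CLPS, or the CLMx equivalents): sum them in a
   16-bit variable, divide by two, set the MSB. The result is what
   gets written to PG (or MG). */
static uint16_t adc_gain_from_cal(const uint16_t cal[6])
{
    uint16_t sum = 0;
    for (int i = 0; i < 6; ++i) {
        sum = (uint16_t)(sum + cal[i]);
    }
    return (uint16_t)((sum >> 1) | 0x8000u);
}
```

On the target, the flow would be: write the SC3 value, wait for conversion complete, check CALF, then read the CLPx/CLMx registers and store the two gain values.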

And thanks for the circuit - instead of the 3.0V VREFH, I'm running 2.5V, so we're somewhat in the same boat regarding unequal VREFH and VDDA. :)

I'd really appreciate some more details on why, for calibration, VREFH should be at VDDA potential, e.g. what happens internally during calibration, which voltage references are used, and how this affects the calibration result, but I've been unable to find any information on this.

The end result of this might be a test setup where I measure different voltages between 0 and 2.5V with and without calibration, but with only a few samples to try this on, that might not tell the true story.

I don't think 'for best results' means anything more than that, given a fixed internal noise level, the maximum ENOB is achieved with maximum Vref. The specs are all given at 3V; I chose 3V as a 'near maximum I could regulate' while ensuring it stays <= my 3.3V supply.
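To put a rough number on that: assuming the internal noise is a fixed voltage $v_n$ that does not depend on the reference (a simplifying assumption, not a datasheet figure), the LSB size scales with the reference, so the same noise covers more LSBs at a lower reference. A sketch of the arithmetic for an $N$-bit conversion:

```latex
\begin{aligned}
\text{LSB} &= \frac{V_{REFH}}{2^{N}}, \qquad
\text{noise in LSBs} = \frac{v_n}{\text{LSB}} = \frac{v_n \, 2^{N}}{V_{REFH}} \\[4pt]
\Delta\text{ENOB} &\approx \log_2\!\left(\frac{V_{REFH,1}}{V_{REFH,2}}\right) \\[4pt]
3.0\,\text{V} \to 2.5\,\text{V}: &\quad \log_2(1.2) \approx 0.26\ \text{bit} \\
3.3\,\text{V} \to 2.5\,\text{V}: &\quad \log_2(1.32) \approx 0.40\ \text{bit}
\end{aligned}
```

So under this assumption, running a 2.5V reference instead of 3.0V costs about a quarter of a bit of effective resolution.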

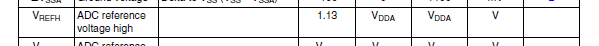

I'm not sure where you get the 'VrefH = Vdda' requirement. The datasheets I look at say this:

So, anything from 1.13V to Vdda. Naturally enough, accuracy specs reduce at lower VrefH.

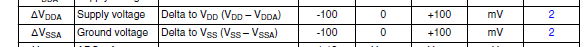

Now I DO agree that at ALL times Vdda must be within 100mV of Vdd:

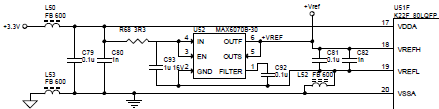

so I can't see the logic in 'dual, analog and digital supplies'. I run a 3V accurate reference myself:

The calibration procedure should relate itself directly to the VrefH you are giving. I wouldn't change any results.
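In other words, the calibration values stay as the ADC produced them, and the 2.5V reference only shows up when converting a result code to a voltage. A minimal sketch of that conversion, assuming single-ended mode with VREFL at 0V; the function name `adc_code_to_uv` is hypothetical:

```c
#include <stdint.h>

/* Convert a raw single-ended ADC result to microvolts.
   code     : raw conversion result
   vrefh_uv : VREFH in microvolts (e.g. 2500000 for a 2.5V reference)
   bits     : conversion resolution (e.g. 16)
   Assumes VREFL = 0V. The result is truncated to whole microvolts. */
static uint32_t adc_code_to_uv(uint32_t code, uint32_t vrefh_uv, unsigned bits)
{
    /* 64-bit intermediate so code * vrefh_uv cannot overflow. */
    return (uint32_t)(((uint64_t)code * vrefh_uv) >> bits);
}
```

For example, `adc_code_to_uv(32768, 2500000, 16)` gives 1250000, i.e. mid-scale reads as 1.25V with the 2.5V reference.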