
How to pass DMA buffers from a Driver thru GStreamer 'v4l2src' plugin to Freescale IPU/VPU plugins


Intro

Most hardware nowadays can perform DMA transfers on large amounts of data. Notably, camera capture drivers (or any driver that delivers data from an outside source to memory) can request a DMA-capable buffer and put data into it.

While the V4L2 architecture allows any driver to configure its DMA memory usage, it does not facilitate exporting that information to clients. Specifically, GStreamer plugin implementations suffer terribly from the need to push an incredible number of bytes around in user space in order to pass buffer data from plugin to plugin. The resulting performance penalty is enormous!

The implementation I worked on involved the TW6869 camera capture chip, which is DMA-capable; the captured RGB (or YUV) buffer had to be converted and compressed before further processing. Naturally, the IPU's mirroring capabilities and the VPU's compression capabilities determined the pipeline configuration. GStreamer was the natural pipeline builder, and with it came the performance penalty mentioned above.

Hence, the goal is to connect the "v4l2src" plugin with the FSL plugins in a way that allows DMA buffers to be recognized and used, thus avoiding memcpy of the data altogether.

Freescale's plugins employ an internal mechanism for passing DMABLE info to each other, but in addition to it, these plugins also use a more universal method to detect a DMA address.

The methods employed by the Freescale plugins require patches in both the "Video4Linux2" subsystem and GStreamer's "libgstvideo4linux2.so" library.

Credit is due to Peng Zhou from Freescale for providing me with to-the-point information regarding Freescale's DMA implementation.

V4L2 patch

V4L2 uses the "v4l2_buffer" structure (videodev2.h) to communicate with its clients. This structure, however, does not natively tell the client whether the buffer just captured has a DMA address.

Two members in the structure need attention:

```c
__u32 flags;
__u32 reserved;
```

For "flags", a new flag has to be defined:

#define V4L2_BUF_FLAG_DMABLE 0x40000000 /* buffer is DMA-mapped, 'reserved' contains DMA phy address */

Accordingly, the driver has to set the flag and fill in the "reserved" member in both its "vidioc_querybuf" and "vidioc_dqbuf" implementations.

With this done, all V4L2 clients will get the DMA information from the driver for each of its buffers.
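A minimal sketch of both ends of this contract, assuming a 32-bit physical address (as on i.MX6; "reserved" is only 32 bits wide). The struct is a hypothetical stand-in holding just the two members discussed above; real code would use struct v4l2_buffer from the kernel headers, and the helper names mark_dmable() and dmable_phys_addr() are illustrative only, not part of any API.

```c
#include <assert.h>
#include <stdint.h>

/* Flag value proposed above, guarded the same way as the workaround later in this thread. */
#ifndef V4L2_BUF_FLAG_DMABLE
#define V4L2_BUF_FLAG_DMABLE 0x40000000u
#endif

/* Hypothetical stand-in for the two struct v4l2_buffer members discussed above. */
struct v4l2_buf_subset {
    uint32_t flags;
    uint32_t reserved;
};

/* Driver side: call from both the VIDIOC_QUERYBUF and VIDIOC_DQBUF handlers.
 * Assumes the physical address fits in 32 bits. */
static void mark_dmable(struct v4l2_buf_subset *b, uint64_t phys)
{
    assert(phys <= UINT32_MAX);   /* 'reserved' cannot hold a wider address */
    b->flags |= V4L2_BUF_FLAG_DMABLE;
    b->reserved = (uint32_t)phys;
}

/* Client side: physical address of the buffer, or 0 if the driver did not mark it. */
static uint32_t dmable_phys_addr(const struct v4l2_buf_subset *b)
{
    return (b->flags & V4L2_BUF_FLAG_DMABLE) ? b->reserved : 0;
}
```

Returning 0 for "not DMABLE" keeps the client check to a single branch, at the cost of treating physical address 0 as invalid.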

GST plugin patch

This patch is more difficult to implement. It is not enough to acquire the DMA address from the V4L2 driver; that information must also be passed along to the next plugin in the pipeline.

Plugins communicate with each other using the "GstBuffer" structure, which, like its V4L2 cousin, has no provision for passing DMA information.

Naturally, in a pipeline where FSL plugins are involved, an FSL-compatible method should be implemented. These are the macros used in the FSL plugins:

```c
#define IS_DMABLE_BUFFER(buffer)
#define DMABLE_BUFFER_PHY_ADDR(buffer)
```

The most FSL-compatible way to implement DMABLE would be to use the "_gst_reserved" extension in the structure. If maximum compatibility with FSL is the goal, take a closer look at the "gstbufmeta.c" and "gstbufmeta.h" files in the "gst-fsl-plugins-3.0.7/libs/gstbufmeta/" folder. Alternatively, GST_BUFFER_FLAG_LAST and GST_BUFFER_OFFSET may be used (the flag marks the buffer as DMABLE, and the offset carries the physical address).
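The "_gst_reserved" approach can be sketched as below, modeled on what Alexander's tvsrc patch later in this thread does: a small meta object carrying the physical address is hung off the last slot of the buffer's reserved pointer array. Everything here is a hypothetical stand-in (struct layouts, padding size, helper names); the real types are GstBuffer and GstBufferMeta, presumably declared in the gstbufmeta.h mentioned above, and that header remains the authority on their layout.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical padding size; the real GstBuffer defines its own. */
#define SKETCH_PADDING 4

/* Hypothetical stand-ins for GstBufferMeta and the reserved slots of a GstBuffer. */
struct buf_meta {
    uintptr_t physical_data;      /* physical (DMA) address of the frame */
};

struct gst_buf_subset {
    void *gst_reserved[SKETCH_PADDING];
};

static size_t last_slot(void)
{
    return SKETCH_PADDING - 1;    /* mirrors G_N_ELEMENTS(_gst_reserved) - 1 */
}

/* Producer side: attach a meta carrying the physical address. Returns 0 on success. */
static int attach_phys_meta(struct gst_buf_subset *b, uintptr_t phys)
{
    struct buf_meta *m;

    assert(phys != 0);            /* 0 means "not DMABLE" in buffer_phys_addr() */
    m = malloc(sizeof *m);
    if (m == NULL)
        return -1;
    m->physical_data = phys;
    b->gst_reserved[last_slot()] = m;
    return 0;
}

/* Consumer side: a buffer counts as DMABLE if the last slot holds a meta. */
static uintptr_t buffer_phys_addr(const struct gst_buf_subset *b)
{
    const struct buf_meta *m = b->gst_reserved[last_slot()];
    return m != NULL ? m->physical_data : 0;
}

/* Release the meta when the buffer is finalized. */
static void detach_phys_meta(struct gst_buf_subset *b)
{
    free(b->gst_reserved[last_slot()]);
    b->gst_reserved[last_slot()] = NULL;
}
```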

The sources of GStreamer's "libgstvideo4linux2.so" need patches in several places. First, right after the calls to VIDIOC_QUERYBUF and VIDIOC_DQBUF, the information from "v4l2_buffer" must be put into the "GstBuffer" instance. Next, the "need_copy" and "always_copy" flags have to be overridden whenever GST_BUFFER_FLAG_LAST is set. For reasons I cannot explain, the macro PROP_DEF_ALWAYS_COPY in the GST sources is set to TRUE, and it is the default for "always_copy"! The final, and absolutely essential, patch is to prevent the GST_BUFFER_OFFSET member from being overwritten with the sequential buffer number. Very! Bad! Things! will happen to the system if an improper value is passed along as a physical address! This last fix can be made conditional on the presence of GST_BUFFER_FLAG_LAST.
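The three v4l2src changes can be modeled in a few lines. This is a sketch under stated assumptions, not the actual patch: the structs are hypothetical stand-ins for v4l2_buffer, GstBuffer and the source element's state, and SKETCH_FLAG_DMABLE plays the role that GST_BUFFER_FLAG_LAST plays in the text.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#ifndef V4L2_BUF_FLAG_DMABLE
#define V4L2_BUF_FLAG_DMABLE 0x40000000u
#endif

/* Hypothetical marker standing in for GST_BUFFER_FLAG_LAST. */
#define SKETCH_FLAG_DMABLE (1u << 0)

/* Hypothetical stand-ins for v4l2_buffer, GstBuffer and the v4l2src element state. */
struct v4l2_buf_subset { uint32_t flags; uint32_t reserved; };
struct gst_buf_subset  { uint32_t flags; uint64_t offset; };
struct src_state       { bool always_copy; bool need_copy; };

/* Patch 1: right after VIDIOC_QUERYBUF / VIDIOC_DQBUF, carry the DMA info into the GstBuffer. */
static void import_dma_info(struct gst_buf_subset *gb, const struct v4l2_buf_subset *vb)
{
    if (vb->flags & V4L2_BUF_FLAG_DMABLE) {
        gb->flags |= SKETCH_FLAG_DMABLE;
        gb->offset = vb->reserved;    /* offset now carries the physical address */
    }
}

/* Patch 2: a DMABLE buffer is pushed as-is, overriding always_copy and need_copy. */
static bool should_copy(const struct src_state *s, const struct gst_buf_subset *gb)
{
    if (gb->flags & SKETCH_FLAG_DMABLE)
        return false;
    return s->always_copy || s->need_copy;
}

/* Patch 3: only stamp the sequence number into offset for non-DMABLE buffers. */
static void set_sequence_offset(struct gst_buf_subset *gb, uint64_t seq)
{
    if (!(gb->flags & SKETCH_FLAG_DMABLE))
        gb->offset = seq;
}
```

Making patch 3 conditional, as suggested above, keeps the stock behavior for drivers that never set the flag.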

The outcome

Once these patches are in place, the pipeline is ready to roll, and roll it will, really fast! My measurements, made by injecting timing traces into FSL's "vpuenc" plugin, showed that the time to acquire a GST input buffer dropped on average from 3+ milliseconds to 3-5 microseconds; and this improvement does not even include the savings from avoiding the copy inside "libgstvideo4linux2.so" caused by the "always_copy" flag!
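For scale, the ratio implied by these figures (taking the lower bound of 3 ms, so the real factor is at least this large):

$$\frac{3\ \text{ms}}{5\ \mu\text{s}} = \frac{3000\ \mu\text{s}}{5\ \mu\text{s}} = 600, \qquad \frac{3\ \text{ms}}{3\ \mu\text{s}} = \frac{3000\ \mu\text{s}}{3\ \mu\text{s}} = 1000$$

That is, buffer acquisition became roughly 600 to 1000 times faster.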

Universally DMABLE?

The most important conclusion/question from the implementation above ought to be:

"Isn't it time for both V4L2 and GST to enable DMA buffer recognition and physical pointer passing?"

It is my firm belief that such a feature would result in great performance improvements for all kinds of video/audio streaming.

Ilko Dossev

Qualnetics, Inc.


Hi @Sasamy,

I was able to use your driver with kernel 4.19 on i.MX8QXP to get the TW6869 channels via DMA.

But when I built it for i.MX8QXP with kernel 5.4, the video channels did not appear and I got errors registering the video devices. Is there a version for kernel 5.4, or what do I have to change for kernel 5.4?


Hello everyone,

I am trying to use the tw68 driver with the TW6816. I have already tried many pipelines; the latest one is:

gst-launch-1.0 --gst-debug-level=3 imxv4l2videosrc input=0 ! imxipuvideosink use-vsync=true

I have added debug prints to the functions in tw68-video.c, and the device seems to be working (it detects when no video input is connected, gets the video standard, etc.). DMA also seems to be configured: it sets up 3 buffers for DMA.

When I run the GStreamer pipeline above, there is only a black screen. When I tried a filesink instead of imxipuvideosink, the file always had 0 bytes. It seems that GStreamer is getting no data from the TW6816.

Does anyone have any tips on debugging DMA? I read in the original post "And, accordingly, the Driver has to set the flag and fill in the "reserved" member in both its "vidioc_querybuf" and "vidioc_dqbuf" implementations." but I have no idea how to find out which DMA address to put in "reserved", for instance.

Any tips are appreciated.

Best regards,

Leonardo


Doesn't disabling PCI MSI in the kernel help you at all? Passing pci=nomsi on the kernel command line should work if there is no problem with your hardware and memory allocation.


Hi,

I am also trying to capture and encode 8 D1 videos from a TW6869 at 25 fps.

Which driver are you using for the TW6869? Did you modify the driver so that it sends the DMA address?

I tried to modify the mfw_v4L2src plugin to work with the TW6869, but GStreamer crashes in "mfw_gst_ipu_core_start_convert" (in mfw_gst_ipu_csc.c) because the DMA address is NULL.

Have you made a patch for the v4l2src plugin and the driver?

Thanks in advance.

Fabien


Nice work! Is there a patch for this? Any plans for rolling this into the Freescale GStreamer and kernel repositories?


The only patch really necessary is for GST v4l2src plugin.

It will be posted shortly, right after I am done with my current & very urgent project. :smileyhappy:

Regarding "videodev2.h" -- it also needs the definition, but for now a workaround like this will be sufficient in any source file:

```c
#ifndef V4L2_BUF_FLAG_DMABLE
#define V4L2_BUF_FLAG_DMABLE 0x40000000
#endif
```


Hi Ilko Dossev,

As you said, we have to pass DMA-able information from the PCIe capture card through V4L2 to its clients. I am using ffmpeg for encoding/decoding. I am keen to know more about what you mentioned in one of your posts:

----------------------------------------------------------------------------------------------------------------------------------------------------------------

This is the currently working implementation:

CamCap => [RGB DMA buffer] => IPU => [YUV420 DMA buffer] => GStreamer => [YUV420 DMA buffer] => VPU => [AVC DMA buffer] => GStreamer => final sink

To my greatest chagrin, GStreamer operations involve a lot of memcpy from and to DMA buffers, which is performance killer.

A useful practical implementation would be this:

CamCap => [RGB DMA buffer] => IPU => [YUV420 DMA buffer] => VPU => [AVC DMA buffer] => GStreamer => final sink

Feeding IPU output directly to VPU input would save a ton of memcpy operations, leaving only one at the end when the compressed data are passed to GStreamer.

Ideal dream-stream would be setting up IPU and VPU to work in sequence, passing data internally:

CamCap => [RGB DMA buffer] => IPU => [...] => VPU => [AVC DMA buffer] => GStreamer => final sink

There is one catch here, though -- because the time for processing a frame is about 2.5 milliseconds, the combined IPU/VPU team should deliver within this limit!

(A total of 360 FPS for the whole system leaves less than 3 milliseconds processing time for each processing step.)

I moved a lot of source code from "libvpu" library to my Kernel Driver, and I am able to initialize VPU properly from my KO.

-------------------------------------------------------------------------------------------------------------------------------------------------------------------
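The per-frame budget quoted above follows directly from the aggregate frame rate:

$$t_{\text{frame}} = \frac{1}{360\ \text{frames/s}} = \frac{1000\ \text{ms}}{360} \approx 2.78\ \text{ms}$$

So a stage that needs about 2.5 ms per frame leaves only about 0.28 ms of headroom.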

1. Do we need something between the IPU and V4L2 to read the PCIe-captured frames and feed them to the IPU, since currently only the parallel and MIPI camera interfaces are supported on the i.MX6 SoC?

2. Is it necessary to move sources from libvpu to kernel space to make point 1 work properly?

Regards,

Varun


Ilko,

I have users asking about using TW6869 with IMX6 as well. Will you share your patches with the community?

Regards,

Tim


Hi Tim,

We are currently working on prototype boards based on the TW6869. The driver uses the vb2 API, but it is also compatible with the Freescale gst-fsl-plugins through a small, transparent hack.

In the Linux kernel driver:

```diff
+static int buffer_init(struct vb2_buffer *vb)
+{
+    struct tw6869_vbuffer *vbuf = to_tw6869_vbuffer(vb);
+
+    vbuf->dma = vb2_dma_contig_plane_dma_addr(vb, 0);
+    INIT_LIST_HEAD(&vbuf->list);
+    { /* this hack to make gst-fsl-plugins happy */
+        u32 *cpu = vb2_plane_vaddr(vb, 0);
+        *cpu = vbuf->dma;
+    }
+    return 0;
+}
```

In the tvsrc plugin:

```diff
@@ -220,22 +221,19 @@ mfw_gst_tvsrc_start_capturing (MFWGstTVS
         GST_BUFFER_SIZE (v4l_src->buffers[i]),
         PROT_READ | PROT_WRITE, MAP_SHARED,
         v4l_src->fd_v4l, GST_BUFFER_OFFSET (v4l_src->buffers[i]));
-    memset (GST_BUFFER_DATA (v4l_src->buffers[i]), 0xFF,
-        GST_BUFFER_SIZE (v4l_src->buffers[i]));
     {
       gint index;
       GstBufferMeta *meta;
       index = G_N_ELEMENTS (v4l_src->buffers[i]->_gst_reserved) - 1;
       meta = gst_buffer_meta_new ();
-      meta->physical_data = (gpointer) (buf->m.offset);
+      meta->physical_data =
+          (gpointer)(*(unsigned int *)(GST_BUFFER_DATA (v4l_src->buffers[i])));
       v4l_src->buffers[i]->_gst_reserved[index] = meta;
     }
+    memset (GST_BUFFER_DATA (v4l_src->buffers[i]), 0xFF,
+        GST_BUFFER_SIZE (v4l_src->buffers[i]));
```
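The hack above is a handshake through the first word of each capture buffer: the driver writes the 32-bit DMA address there in buffer_init(), and the plugin reads it back before clearing the buffer, which is why the memset() had to move below the read. A standalone sketch of that ordering follows; the helper names are hypothetical, memcpy() replaces the u32 pointer cast to sidestep alignment and aliasing concerns, and, like the original, it assumes the address fits in 32 bits.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Driver side (as in buffer_init): stash the DMA address in the first word of the plane. */
static void stash_dma_addr(void *plane, uint32_t dma)
{
    memcpy(plane, &dma, sizeof dma);
}

/* Plugin side (as in start_capturing): read the address back first,
 * and only then fill the buffer with 0xFF, as the patched plugin does. */
static uint32_t take_dma_addr(void *plane, size_t size)
{
    uint32_t dma;

    assert(size >= sizeof dma);
    memcpy(&dma, plane, sizeof dma);
    memset(plane, 0xFF, size);    /* doing this before the read would destroy the address */
    return dma;
}
```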

The current version of the patch and the test scripts are attached.

Known issues:

1. Synchronization of audio and video in the encoding pipeline (probably a bug in the alsasrc plugin in GStreamer 0.10).

2. Sometimes the encoding pipeline stalls for a considerable time (0.5 to 1 second).

Alexander


Alexander,

This is great - thank you very much for sharing your work.

Have you ever looked into using the IMX6 hardware (IPU/VPU/GPU) to composite multiple streams into one so as to efficiently take the 4 (or more) streams into a single stream prior to sending to vpuenc? I have a user asking specifically for this.

Regards,

Tim


Hi Tim,

I think this is not necessary, because the VPU "can encode or decode multiple video clips with multiple standards simultaneously". However, some frames are discarded even in one simple pipeline, so I think the real problem is slow color space conversion (UYVY -> I420 or NV12, as required by the VPU) and/or bugs in the software. In any case, we have no plans to dive deeper into these issues.
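To give a sense of the per-pixel work involved if that conversion were done on the CPU, here is a plain C sketch of UYVY to I420, one of the conversions named above. It is not taken from any FSL or GStreamer plugin; it assumes even width and height and tightly packed rows, and it averages the chroma of each pair of rows to go from 4:2:2 to 4:2:0.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Convert packed UYVY (4:2:2, bytes U0 Y0 V0 Y1 per pixel pair) to planar I420 (4:2:0).
 * y must hold width*height bytes; u and v must each hold (width/2)*(height/2) bytes. */
static void uyvy_to_i420(const uint8_t *src, size_t width, size_t height,
                         uint8_t *y, uint8_t *u, uint8_t *v)
{
    size_t stride = width * 2;    /* bytes per UYVY row */

    assert(width % 2 == 0 && height % 2 == 0);

    /* Luma: copy every Y sample into the Y plane. */
    for (size_t row = 0; row < height; row++) {
        const uint8_t *s = src + row * stride;
        for (size_t x = 0; x < width; x += 2) {
            y[row * width + x]     = s[2 * x + 1];
            y[row * width + x + 1] = s[2 * x + 3];
        }
    }

    /* Chroma: average U and V of two vertically adjacent rows (rounded). */
    for (size_t row = 0; row < height; row += 2) {
        const uint8_t *s0 = src + row * stride;
        const uint8_t *s1 = s0 + stride;
        for (size_t x = 0; x < width; x += 2) {
            size_t c = (row / 2) * (width / 2) + x / 2;
            u[c] = (uint8_t)((s0[2 * x] + s1[2 * x] + 1) / 2);
            v[c] = (uint8_t)((s0[2 * x + 2] + s1[2 * x + 2] + 1) / 2);
        }
    }
}
```

Every output byte costs at least one load and one store, which adds up quickly at D1 resolution across several channels.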

Alexander.


Alexander,

Right, the VPU 'can' encode multiple streams simultaneously, but what I'm interested in is combining multiple sources (think videobox) before encoding them into a single stream. I haven't had time to integrate your patches yet to see whether an IMX6DL or IMX6Q has enough CPU to combine 4x of these sources via a non-VPU-enhanced videobox and keep up with 30fps. I also want to take a look at the gstreamer-imx plugins for GStreamer 1.x. I don't see any documentation on them, but it looks like there are a lot more options available.

Regards,

Tim

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

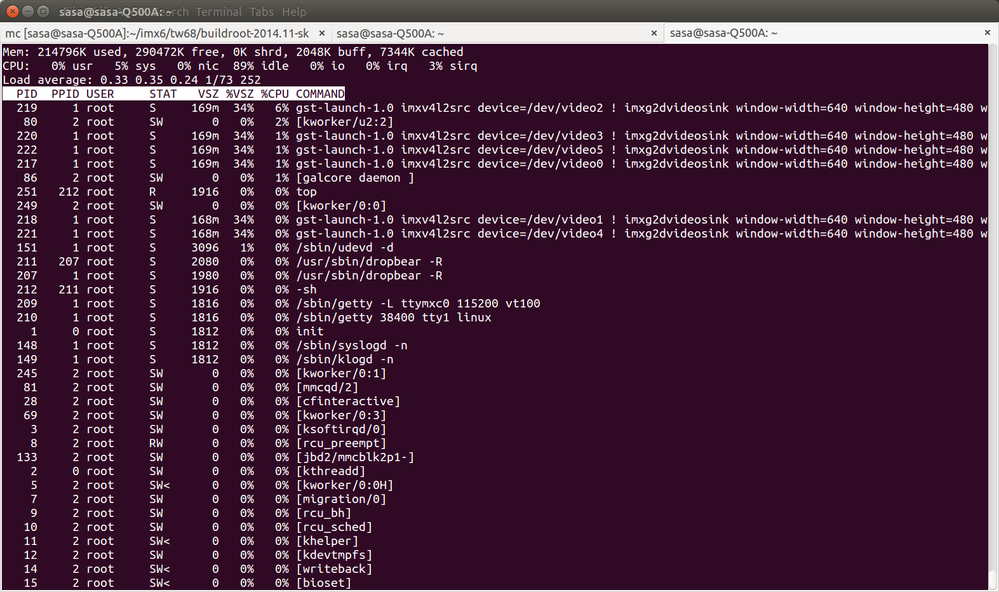

Hi Tim,

I think blitting on the CPU (videobox + videomix) is very inefficient on embedded processors. gstreamer-imx has very good plugins that use gal2d; for example, on an i.MX 6Solo with 6 streams I did not see frame dropping with imxg2dvideosink. Unfortunately, gal2d has an important limitation: "RGB and YUV formats can be set in source surface, but only RGB format can be set in destination surface".

Alexander

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

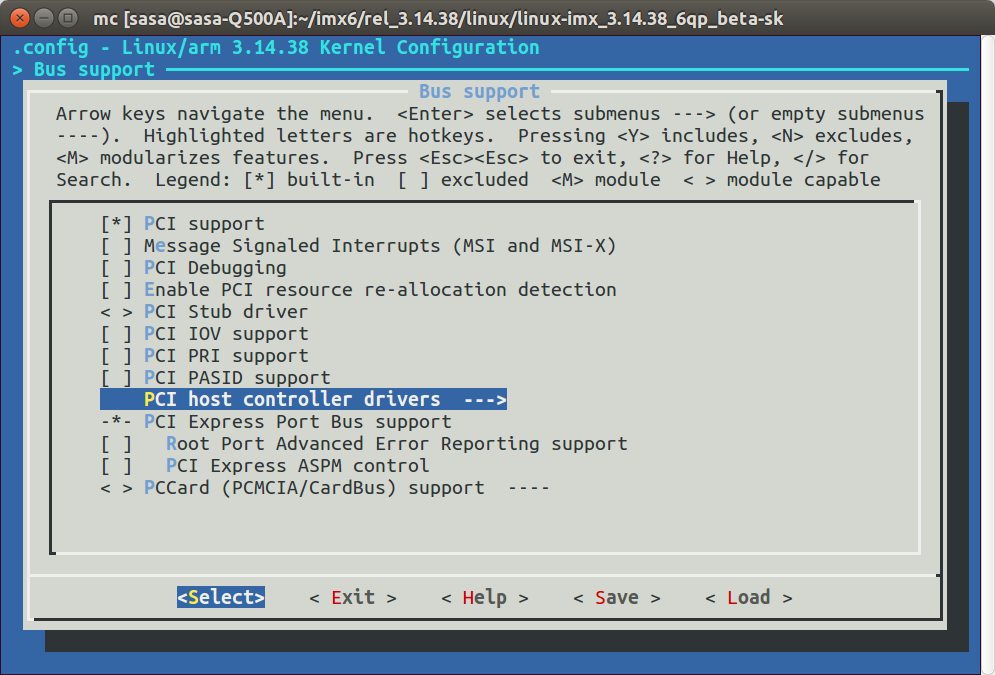

Hello, the new version of the driver is attached:

1. For the gstreamer-imx plugins (master branch), the patch/hack isn't required any more:

https://github.com/Freescale/gstreamer-imx

2. MSI support should be disabled in the kernel config.

3. FSL Linux kernels 3.14.xx require a patch to support legacy interrupts:

https://community.freescale.com/thread/353000

https://dev.openwrt.org/browser/trunk/target/linux/imx6/patches-3.14?rev=41004&order=name

0055-ARM_dts_imx_fix-invallid-#address-cells-value.patch

4. Theoretically, the driver should work with any kernel 3.10+.

Alexander


Hi,

I've added an alias to the driver so that it loads automatically when the device is detected on the PCI bus. I just added this to the bottom of the file:

MODULE_ALIAS("pci:v*00001797d00006869sv*sd*bc*sc*i*");

Sven


Hi Sven,

The kernel provides the MODULE_DEVICE_TABLE macro for generating such information:

```c
static const struct pci_device_id tw6869_pci_tbl[] = {
    {PCI_DEVICE(PCI_VENDOR_ID_TECHWELL, PCI_DEVICE_ID_6869)},
    {PCI_DEVICE(PCI_VENDOR_ID_TECHWELL, PCI_DEVICE_ID_6865)},
    { 0, }
};

MODULE_DEVICE_TABLE(pci, tw6869_pci_tbl);
```
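For reference, each PCI_DEVICE(vendor, device) entry in such a table ends up as an alias of the same shape as the one Sven wrote by hand, with subvendor, subdevice and class left as wildcards. This reflects my understanding of what the modpost alias generation emits, so treat the exact format as an assumption. The hypothetical helper below just formats that string, here for vendor ID 0x1797 and device ID 0x6869 taken from Sven's alias.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: format the modalias pattern for a PCI_DEVICE(vendor, device) entry.
 * Returns the string length, or -1 if the buffer is too small. */
static int pci_modalias(char *buf, size_t len, unsigned vendor, unsigned device)
{
    int n;

    assert(buf != NULL && len > 0);
    n = snprintf(buf, len, "pci:v%08Xd%08Xsv*sd*bc*sc*i*", vendor, device);
    return (n < 0 || (size_t)n >= len) ? -1 : n;
}
```

For 0x1797/0x6869 this yields pci:v00001797d00006869sv*sd*bc*sc*i*. Sven's hand-written pattern has an extra `*` right after `v`, which still matches that string.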

I forgot about it. Thanks.

Alexander


Hi Alexander,

Thanks for all your work on this. I'm curious, where are you getting these new drivers? Is there a git tree somewhere that we can view?

- Pushpal
