How to generate microsecond delay with SysTick on KL25


The SysTick timer is part of the Cortex-M0+ core, so it is not chip-specific; for details of the Cortex core you generally need to refer to the ARM documentation. For SysTick, see: http://infocenter.arm.com/help/index.jsp?topic=/com.arm.doc.dai0179b/ar01s02s08.html

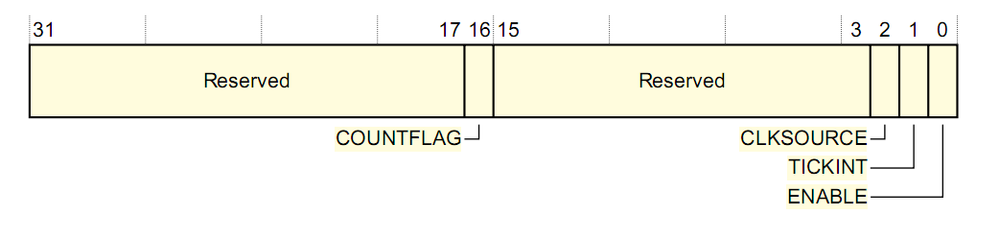

In summary, the SysTick is configured through four registers:

1. SysTick Control and Status (CSR): basic control of the SysTick, e.g. enable, clock source, interrupt or polling.

COUNTFLAG: count-down flag. It is set to 1 when the counter counts down to 0 and reads as 0 otherwise; reading the register clears it.

CLKSOURCE: 1 selects the internal core clock; 0 selects the external reference clock.

TICKINT: setting this bit to 1 enables the SysTick interrupt.

ENABLE: setting this bit to 1 enables the counter.

2. SysTick Reload Value (RVR): the value loaded into the Current Value register when the counter reaches 0.

3. SysTick Current Value (CVR): the current value of the down counter.

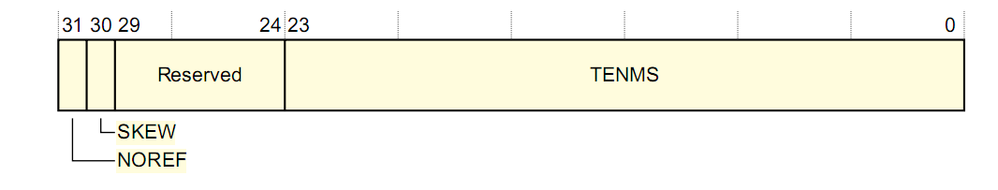

4. SysTick Calibration Value (CALIB): contains the number of ticks needed to generate a 10 ms interval, plus other implementation-dependent information.

TENMS: tick count for 10 ms.
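The register list above can be sketched in C as a memory-mapped structure with bit masks. This is only an illustrative sketch: the names `systick_regs_t`, `STK_*` and `stk_csr_value` are hypothetical and not taken from any NXP header. The base address and bit positions are those given in the ARM documentation for the Cortex-M SysTick.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical register map for the Cortex-M SysTick block. */
typedef struct {
    volatile uint32_t CSR;   /* offset 0x00: Control and Status          */
    volatile uint32_t RVR;   /* offset 0x04: Reload Value                */
    volatile uint32_t CVR;   /* offset 0x08: Current Value               */
    volatile uint32_t CALIB; /* offset 0x0C: Calibration Value (read-only) */
} systick_regs_t;

#define STK_BASE_ADDR        0xE000E010u  /* SysTick base in the system control space */

#define STK_CSR_ENABLE       (1u << 0)    /* counter enabled when 1                   */
#define STK_CSR_TICKINT      (1u << 1)    /* interrupt enabled when 1                 */
#define STK_CSR_CLKSOURCE    (1u << 2)    /* 1 = core clock, 0 = external reference   */
#define STK_CSR_COUNTFLAG    (1u << 16)   /* 1 if the counter reached 0 since last read */

#define STK_COUNT_MASK       0x00FFFFFFu  /* RVR and CVR are 24 bits wide             */
#define STK_CALIB_TENMS_MASK 0x00FFFFFFu  /* TENMS field of CALIB                     */

/* Build a CSR value that enables the counter with the chosen options. */
static inline uint32_t stk_csr_value(int use_core_clock, int enable_irq)
{
    uint32_t csr = STK_CSR_ENABLE;
    if (use_core_clock) {
        csr |= STK_CSR_CLKSOURCE;
    }
    if (enable_irq) {
        csr |= STK_CSR_TICKINT;
    }
    return csr;
}
```

On the target, the structure would be accessed through `((systick_regs_t *)STK_BASE_ADDR)`. The CMSIS core headers already provide an equivalent `SysTick` definition (with members `CTRL`, `LOAD`, `VAL` and `CALIB`), which is normally preferable to a hand-written one.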

To configure the SysTick, load the SysTick Reload Value register with the interval required between SysTick events. The timer interrupt or the COUNTFLAG bit is activated on the transition from 1 to 0, so with a reload value of n it activates every n+1 clock ticks. For example, if a period of 100 ticks is required, 99 should be written to the SysTick Reload Value register.
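The n+1 rule can be illustrated with a small helper that turns a required period into a reload value. This is a sketch under assumptions, not the attached code: the function names are hypothetical, and the 48 MHz core clock used in the usage note is only an example value.

```c
#include <stdbool.h>
#include <stdint.h>

#define STK_MAX_RELOAD 0x00FFFFFFu /* SysTick is a 24-bit counter */

/*
 * Compute the reload value for a period of `period_ticks` clock ticks.
 * The event fires on the 1 -> 0 transition, so the reload value is the
 * period minus one. A reload value of 0 has no effect, so the shortest
 * usable period is 2 ticks. Returns false if the period is out of range.
 */
bool stk_reload_for_ticks(uint32_t period_ticks, uint32_t *reload)
{
    if (period_ticks < 2u || period_ticks > STK_MAX_RELOAD + 1u) {
        return false;
    }
    *reload = period_ticks - 1u;
    return true;
}

/* Same calculation, starting from a core clock in Hz and a delay in us. */
bool stk_reload_for_us(uint32_t core_hz, uint32_t delay_us, uint32_t *reload)
{
    /* 64-bit intermediate avoids overflow of core_hz * delay_us */
    uint64_t ticks = ((uint64_t)core_hz * delay_us) / 1000000u;
    if (ticks > (uint64_t)STK_MAX_RELOAD + 1u) {
        return false;
    }
    return stk_reload_for_ticks((uint32_t)ticks, reload);
}
```

For example, assuming a 48 MHz core clock, `stk_reload_for_us(48000000u, 10u, &reload)` gives a reload value of 479 (480 ticks), while a request for a full second is rejected because it does not fit in 24 bits.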

See attached code on how to generate microsecond delay.


Hi

The problem with using the SYSTICK as a software delay is that it is often already in use as a TICK interrupt generator (which is what it is intended for).

The code below (donated from the uTasker project) shows how to do the same when the SYSTICK is also being used for its normal interrupt function.

Regards

Mark

```c
// This delay ensures at least the requested delay in us
// - it may be extended by interrupts occurring during its us wait loop that take longer than 1us to complete
// - its main use is for short delays to ensure respecting minimum hardware stabilisation times and such
//
extern void fnDelayLoop(unsigned long ulDelay_us)
{
    #define CORE_US (CORE_CLOCK/1000000)                     // the number of core clocks in a us
    register unsigned long ulPresentSystick;
    register unsigned long ulMatch;
    register unsigned long _ulDelay_us = ulDelay_us;         // ensure that the compiler puts the variable in a register rather than work with it on the stack
    if (_ulDelay_us == 0) {                                  // minimum delay is 1us
        _ulDelay_us = 1;
    }
    (void)SYSTICK_CSR;                                       // clear the SysTick reload flag
    ulMatch = ((SYSTICK_CURRENT - CORE_US) & SYSTICK_COUNT_MASK); // first 1us match value (SysTick counts down)
    do {
        while ((ulPresentSystick = SYSTICK_CURRENT) > ulMatch) { // wait until the us period has expired
            if ((SYSTICK_CSR & SYSTICK_COUNTFLAG) != 0) {    // if we missed a reload (that is, the SysTick was reloaded with its reload value after reaching zero)
                (void)SYSTICK_CSR;                           // clear the SysTick reload flag
                break;                                       // assume a single us period expired
            }
        }
        ulMatch -= CORE_US;                                  // set the next 1us match
        ulMatch &= SYSTICK_COUNT_MASK;                       // respect SysTick 24 bit counter mask
    } while (--_ulDelay_us != 0);                            // one us period has expired so count down the requested periods until zero
}
```
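For readers following the idea of measuring time on a SysTick that keeps running for its tick interrupt, the core arithmetic can be sketched separately. This is not the uTasker code: `stk_elapsed_ticks` is a hypothetical helper, and it assumes the counter was reloaded at most once between the two reads.

```c
#include <stdint.h>

/*
 * Number of ticks elapsed between two reads of a down counter that
 * reloads to `reload` after reaching 0 (so one full cycle is reload + 1
 * ticks). `start` is the earlier read, `now` the later one.
 * Assumption: at most one reload happened between the two reads.
 */
uint32_t stk_elapsed_ticks(uint32_t start, uint32_t now, uint32_t reload)
{
    if (now <= start) {
        return start - now;             /* no reload in between */
    }
    return start + (reload + 1u) - now; /* counter passed 0 and was reloaded */
}
```

With a reload value of 999, a first read of 5 and a second read of 995 correspond to 10 elapsed ticks: 5 ticks down to 0, one tick for the reload to 999, and 4 more ticks down to 995.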


Mark, I do not understand this code.

Why is ulPresentSystick constantly being reassigned and not otherwise used?


Hi Bob

I suspect that the variable may have been used a second time in a previous version, but it can of course be done with

while (SYSTICK_CURRENT > ulMatch) {

Nevertheless, there is no practical difference between the two styles (apart from the superfluous variable name in the code text itself) because the variable is declared as a register. If you compile the code with or without it, you get an identical binary, because there is in fact no additional overhead for "assigning" the unused variable. In practice the SYSTICK current value is read into a register and the register content is then compared, just as the code verbosely represents it.

I'll remove the register variable to avoid confusion though.

Regards

Mark