
NXP Forums › Software Forums › eIQ Machine Learning Software

AllocateTensors() fails for bigger models (never returns)


Hello,

I am trying to run TensorFlow Lite image recognition on the i.MX RT1060 EVK board (with the i.MX RT1062).

My client provided me with a trained TensorFlow model that is 12 MB.

When I try to initialize inference with this model, the program hangs after entering the AllocateTensors() function. Here is a snippet (taken from the tensorflow_lite_label_image example in the SDK):

```cpp
#include <memory>
#include <vector>

#include "tensorflow/lite/interpreter.h"
#include "tensorflow/lite/kernels/register.h"
#include "tensorflow/lite/model.h"

// LOG(), my_model and my_model_len come from the example project (not shown).

void InferenceInit(std::unique_ptr<tflite::FlatBufferModel> &model,
                   std::unique_ptr<tflite::Interpreter> &interpreter,
                   TfLiteTensor** input_tensor, bool isVerbose)
{
    model = tflite::FlatBufferModel::BuildFromBuffer(my_model, my_model_len);
    if (!model)
    {
        LOG(FATAL) << "Failed to load model.\r\n";
        return;
    }

    tflite::ops::builtin::BuiltinOpResolver resolver;
    tflite::InterpreterBuilder(*model, resolver)(&interpreter);
    if (!interpreter)
    {
        LOG(FATAL) << "Failed to construct interpreter.\r\n";
        return;
    }

    int input = interpreter->inputs()[0];
    const std::vector<int> inputs = interpreter->inputs();
    const std::vector<int> outputs = interpreter->outputs();

    // With the 12 MB model, execution never returns from this call.
    if (interpreter->AllocateTensors() != kTfLiteOk)
    {
        LOG(FATAL) << "Failed to allocate tensors!\r\n";
        return;
    }

    // (...) rest of InferenceInit omitted
}
```

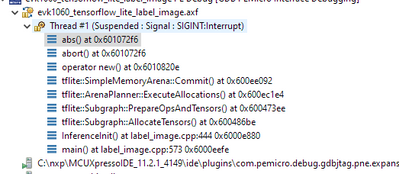

(...)if I pause debugger after that, I see:

I can run the same project with the 0.5 MB MobileNet model without any problems.

Does anybody have an idea what is going on?

Solved!


Hello,

What are your memory settings? Can you share your code? Or did you simply take the label image example and replace the model with the 12 MB one without any other changes?

Best Regards,

David


Hi David,

thank you for your answer!

I was eventually able to overcome this issue by increasing the heap size from 8 MB to 13 MB.

(Yes, it was just the example project with the model swapped.)

This is quite a large amount of memory, though, and in the next stages of the project we would like to move to a custom PCB that will likely not have additional RAM on board. Could you point me to some materials about better memory management when using TensorFlow Lite?


Hello Zu,

if you want to optimize memory consumption, I suggest you look into model quantization. TensorFlow supports various quantization methods.

Furthermore, NXP supports Glow and TF Lite Micro, both of which allow for optimizing memory consumption and performance.

Keep in mind that memory consumption depends heavily on the model you use in your application. The model has to be stored in flash, and all intermediate results and weights must be stored in RAM during inference.

If your original issue was resolved, please mark this thread as resolved. If you run into new problems or have new questions, feel free to create a new thread.

Good luck with your development,

David


Hi David,

thank you for your response and thank you for your suggestion about memory optimization.

Have a great day!

Zuza