Facial Recognition Application in Co-Simulation mode for IMXRT117X

Introduction

This article shows how to use the IMXRT117X board in co-simulation mode to run a Deep Learning facial recognition application.

Given that a development board has limited resources, a Deep Learning application should be deployed as efficiently as possible. Since the latest version of MATLAB (2021a) does not offer support for CNN optimization on Arm Cortex-M processors, we restrict ourselves for now to developing the application in co-simulation mode. This means that the data is provided by the target board, while the actual processing takes place in the MATLAB environment. Running in co-simulation mode lets us exploit both the hardware and the MATLAB software capabilities.

The goal of this article is for the user to be able to:

- use a pre-trained CNN model from Matlab;

- use the MBDT IMXRT Board Object for co-simulation;

- develop a standard facial recognition application;

Hardware overview

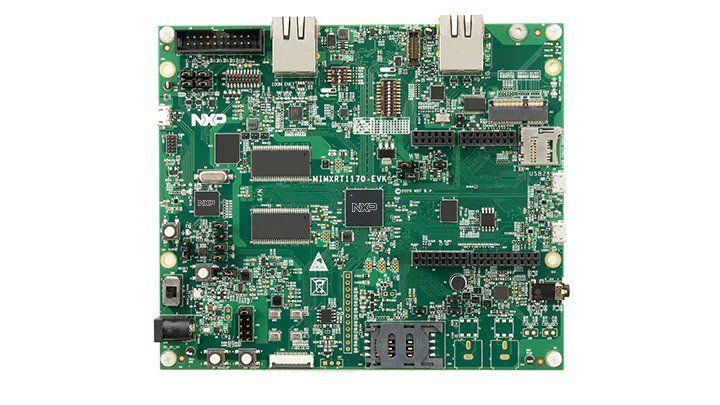

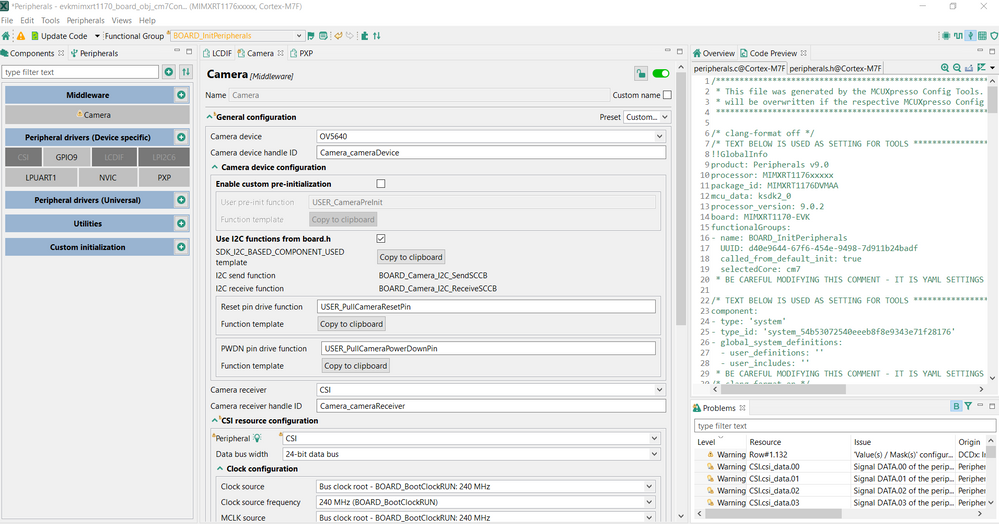

For the development of this application, the i.MX RT1170 Evaluation board was used with a MIMXRT1176 CPU and the attached OmniVision OV5640 camera. Additionally, the RK055AHD091 LCD panel can be used to visualize the frames from the camera.

The i.MX RT1170 EVK provides a high-performance solution in a highly integrated board. It consists of a 6-layer PCB with through hole design for better EMC performance at a low cost, and it includes key components and interfaces.

Some of the i.MX RT1170 EVK main features are:

- MIPI camera sensor connector and MIPI LCD connector

- JTAG connector and On-board DAP-Link debugger

- Connectivity: 2x Micro-USB OTG connectors, Ethernet (10/100/1000M) connector, Ethernet (10/100M) connector, SIM card slot, CAN transceivers

- 5V Power Supply connector

For a more comprehensive view and a more detailed description regarding hardware specifications and user guides, please check the documentation here.

For this example, we are going to use the following peripherals and components, so make sure you have all of them available and are familiar with their intended purpose:

- OmniVision OV5640 camera, used for capturing the frames on the board;

- CAT-5 Ethernet cable, used for sending video frames from the IMXRT on-board camera to MATLAB for processing;

- Mini/micro USB cable for downloading the executable on the target;

- (Optional, if LCD is used) 5V power supply

Software overview

- Software delivered by MathWorks:

- MATLAB (version 2021a) (we assume you have already installed and configured it). As a hint, please make sure MATLAB is installed in a path without spaces;

- MATLAB Embedded Coder is the key component that allows us to generate the C code that will be cross-compiled to be executed on the target;

- Deep Learning Toolbox allows us to use pre-trained networks or re-train and re-purpose them for other scenarios;

- Image Processing Toolbox for preprocessing the images before training the network;

- Computer Vision Toolbox for performing face detection using the Viola-Jones algorithm;

- Software delivered by NXP:

- NXP MBDT IMXRT Toolbox as IMXRT embedded target support and plug-in for MATLAB environment to allow code generation and deployment. Make sure you install this toolbox in MATLAB 2021a.

Deep Learning – Facial recognition

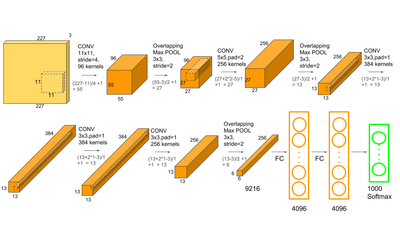

Facial recognition is the process of verifying a person’s identity through their facial features in order to grant access to a service, application, or system. Recognition is performed in two steps: 1) face detection, which identifies and isolates the face area in an image, and 2) face recognition, which analyzes the features of that area and classifies them. In this example, face detection is performed with the CascadeObjectDetector from the Matlab Computer Vision Toolbox, which is based on the Viola-Jones algorithm, and face recognition is performed with AlexNet, a pre-trained neural network for object classification.

1. Datasets

In order to train the network for our application, custom datasets will need to be generated containing the faces of the people we want to recognize.

One challenge regarding the dataset is that a person’s appearance changes: mood can vary during the day, which affects facial expressions; the lighting of the room can enhance or diminish facial features; and appearance also varies with make-up, hairstyle, accessories, facial hair, etc. This needs to be taken into consideration when creating the dataset used in training. For better performance, we recommend taking samples of a person's appearance several times during the day and in different lighting conditions.

Another challenge is dealing with the category of unknown people since it is impossible to have and use a picture of every unknown person. A possible approach is to use a threshold value for classifying the pictures so that a person is accepted as “known” only over a certain probability. Another solution would be to create an additional dataset for the “Unknown” category, which should contain various faces of different people. This way the model should be able to generalize and differentiate between known and unknown people.

One of the datasets that can be used for this purpose is the Flickr-Faces-HQ Dataset (FFHQ) which contains over 70,000 images with a high variety in terms of ethnicity, age, emotions, and background.

For this example, 6 classes were used, 5 of them representing known people and 1 for unknown people.
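The threshold approach mentioned above could look roughly like the sketch below. It assumes the classifier produces one score per class (as the second output of `classify` does). The function name `labelWithThreshold`, the example names, and the 0.8 cut-off are illustrative assumptions, not values from this article.

```matlab
% Example scores for three hypothetical classes
classNames = {'Alice', 'Bob', 'Unknown'};
confident  = labelWithThreshold([0.92 0.05 0.03], classNames, 0.8);  % 'Alice'
uncertain  = labelWithThreshold([0.55 0.40 0.05], classNames, 0.8);  % 'Unknown'
fprintf('%s / %s\n', confident, uncertain);

function label = labelWithThreshold(scores, classNames, threshold)
% Return the best-scoring class name, or 'Unknown' when the best
% score does not reach the threshold.
    [bestScore, idx] = max(scores);
    if bestScore >= threshold
        label = classNames{idx};
    else
        label = 'Unknown';
    end
end
```

A threshold and a dedicated “Unknown” class are not mutually exclusive: the threshold can act as a second check on top of the six-class model.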

To generate a dataset using the attached OV5640 camera, the Matlab client connects to the Board Object on the target, requests frames from the camera, and saves them as BMP files.

%% Establish communication with the target board

board_imxrt = nxpmbdt.boardImxrt('162.168.0.102', 'Video', 'GET');

%% Request frames from the camera and save them as bmp images

count = 0;

while (1)

% Camera snapshot

img = board_imxrt.step();

imwrite(img, [num2str(count) '.bmp']);

% Advance the counter so each frame gets its own file name

count = count + 1;

end

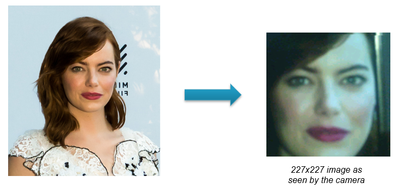

The next step is to extract the face area from an image and resize it to 227x227x3 so that it can be given as input to AlexNet. For that, we can create a directory with all the images that we want to train/test the network with and run the following script, which will perform face detection, then crop and resize the images to the desired dimensions. All the scripts and code snippets presented are also included in the attached archive.

% Create Face Detector Object

faceDetector = vision.CascadeObjectDetector;

inputFolder = 'datastorage/to_process';

outputFolder = 'datastorage/processed/';

pictures = dir(inputFolder);

% Keep only files; this also skips the '.' and '..' entries

pictures = pictures(~[pictures.isdir]);

for j = 1 : numel(pictures)

imgName = pictures(j).name;

img = imread([inputFolder '/' imgName]);

% Perform face detection

bboxes = step(faceDetector, img);

if ~isempty(bboxes)

detectedFace = imcrop(img, bboxes(1, :));

% Resize image to fit AlexNet requirements

detectedFace = imresize(detectedFace, [227 227]);

imwrite(detectedFace, [outputFolder imgName]);

end

end
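Because the training step labels images by folder name, the processed faces need to be sorted into one subfolder per class. A quick way to check the layout is sketched below, assuming a root folder with one subfolder per person. The helper name `countImagesPerClass` and the restriction to BMP files are assumptions made for illustration.

```matlab
% Usage (hypothetical root folder with one subfolder per class):
% counts = countImagesPerClass('datastorage');
% keys(counts), values(counts)

function counts = countImagesPerClass(rootFolder)
% Return a containers.Map from class (subfolder) name to the number of
% BMP files directly inside that subfolder.
    counts = containers.Map('KeyType', 'char', 'ValueType', 'double');
    entries = dir(rootFolder);
    for k = 1:numel(entries)
        name = entries(k).name;
        if entries(k).isdir && ~any(strcmp(name, {'.', '..'}))
            files = dir(fullfile(rootFolder, name, '*.bmp'));
            counts(name) = numel(files);
        end
    end
end
```

Strongly unbalanced counts are worth fixing before training, since a class with very few images is easy for the network to ignore.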

2. Training the network

For training the network, we need to modify the output layer of the AlexNet network to match the number of classes we use, in this example 6. To improve the results for the case where we might not have enough input data, we can augment the images, and for that, we use the imageDataAugmenter from Matlab, where we set the images to be flipped horizontally and/or vertically, and rotated with a random angle between -90 and 90 degrees. This will help the model to avoid overfitting and generalize better.
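The training listing passes a variable named `layers` to `trainNetwork`, but its construction is not shown in this article. The sketch below is one common way to build it, following the usual MATLAB transfer-learning pattern. It assumes the AlexNet support package for Deep Learning Toolbox is installed, and the learn-rate factors are illustrative assumptions rather than the author's settings.

```matlab
% Assumption: Deep Learning Toolbox and the AlexNet support package are installed
net = alexnet;
numClasses = 6;   % 5 known people + 1 "Unknown" class, as in this example

% Keep everything except the last three layers (the final fully connected
% layer, the softmax layer and the classification output layer), which are
% specific to the original 1000-class task
layersTransfer = net.Layers(1:end-3);

% Append new final layers sized for our classes; the higher learn-rate
% factors (assumed values) let the new layer adapt faster than the
% pre-trained ones
layers = [
    layersTransfer
    fullyConnectedLayer(numClasses, ...
        'WeightLearnRateFactor', 20, 'BiasLearnRateFactor', 20)
    softmaxLayer
    classificationLayer];
```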

allImages = imageDatastore('datastorage', 'IncludeSubfolders', true,...

'LabelSource','foldernames');

% Split into train and validation sets

[imgSetTrain, imgSetTest] = splitEachLabel(allImages, 0.7, 'randomized');

augmenter = imageDataAugmenter('RandXReflection', true,...

'RandYReflection', true,...

'RandRotation', [-90 90]);

augimds_train = augmentedImageDatastore([227 227], imgSetTrain,...

'DataAugmentation', augmenter);

augimds_test = augmentedImageDatastore([227 227], imgSetTest,...

'DataAugmentation', augmenter);

Set the training options. These can be configured as needed; for this example, we used a learning rate of 0.001 and a batch size of 64, and trained the model for 7 epochs.

opts = trainingOptions('sgdm', 'InitialLearnRate', 0.001,...

'Shuffle', 'every-epoch', ...

'MaxEpochs', 7, 'MiniBatchSize', 64,...

'ValidationData', augimds_test, ...

'Plots', 'training-progress');

trained = trainNetwork(augimds_train, layers, opts);

save('trained_50_50_ep7_6classes', 'trained');

Save the trained network into a .mat file. It will be used for classifying the frames received from the camera.

3. Setup
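Before using the saved network, it can help to check how well it separates the classes on the held-out images. The sketch below covers only the bookkeeping: given predicted and true labels as categorical arrays, it returns the overall and per-class accuracy. The function name `summarizePredictions` and the example labels are assumptions; in this workflow the predictions would presumably come from calling `classify` on the validation images.

```matlab
% Example labels (hypothetical); in practice 'actual' would be the labels of
% the validation set and 'predicted' the output of classify on those images
actual    = categorical({'Alice', 'Alice', 'Bob', 'Unknown'});
predicted = categorical({'Alice', 'Bob',   'Bob', 'Unknown'});

[acc, perClass] = summarizePredictions(predicted, actual);
fprintf('Overall accuracy: %.2f\n', acc);

function [acc, perClass] = summarizePredictions(predicted, actual)
% acc      - fraction of samples whose predicted label matches the true one
% perClass - accuracy per true class, in the order of categories(actual)
    acc = mean(predicted == actual);
    classes = categories(actual);
    perClass = zeros(numel(classes), 1);
    for k = 1:numel(classes)
        mask = (actual == classes{k});
        perClass(k) = mean(predicted(mask) == classes{k});
    end
end
```

The per-class figure for the “Unknown” class is the one to watch here, since a low value means strangers are being accepted as known people.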

For the installation and usage of the MBDT IMXRT Toolbox please refer to this article.

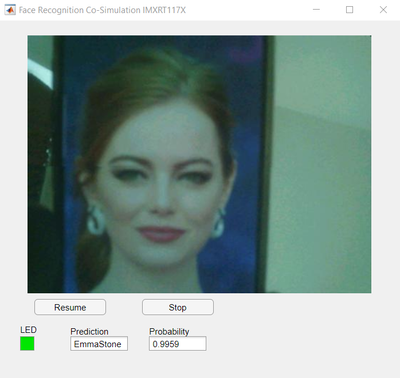

Running in co-simulation mode means that the target board communicates with the host (in this case, Matlab) through the TCP/IP protocol, thus exploiting both the hardware and the Matlab software capabilities. The logic of the application is presented in the following figure: the attached camera sends the captured frames to the computer through an Ethernet connection; there, each frame is processed by a Matlab script, which performs face detection and passes the detected face to the trained AlexNet classifier, which returns a label and the probability of that label being correct. Then, based on whether the person was recognized or not, a signal is sent to the IMXRT board to toggle the USER LED.

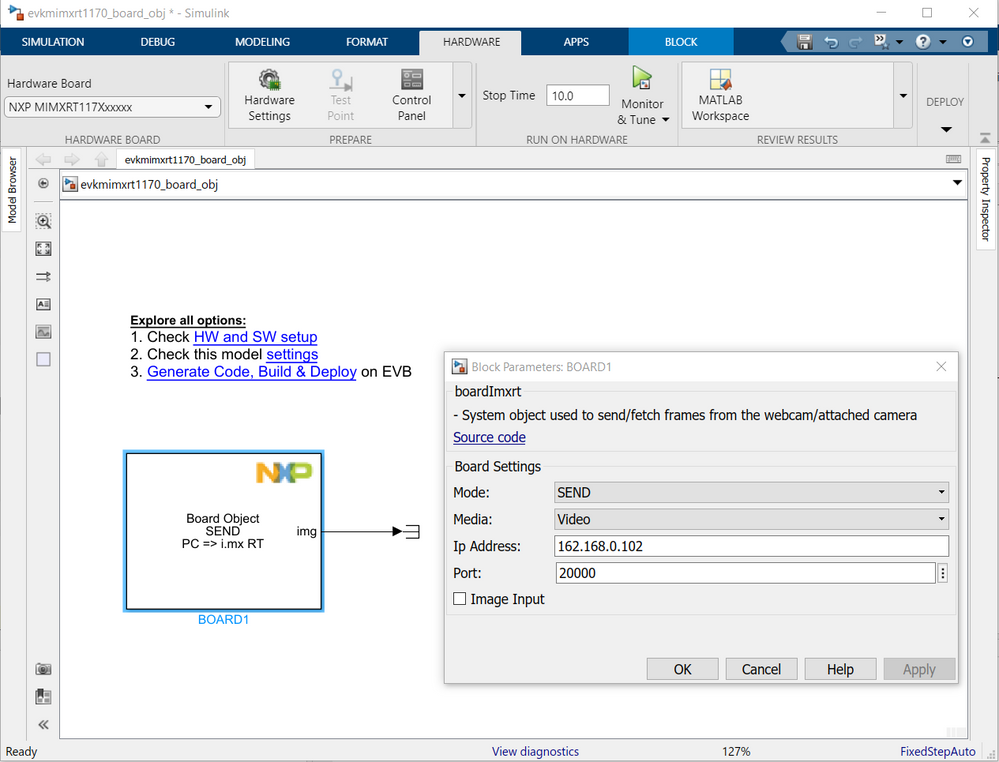

In order to create the executable that will act as the server part of our application, we will create a new Simulink model containing the IMXRT Board Object.

For this example, the Board Object alone is sufficient for creating the co-simulation environment and running the application, because it provides both the Camera and the LWIP functionality.

The next step would be to configure the Board Object in order to be able to communicate with the host: open the Block Parameters window and input the IP address and port number you want to use. In the case of the server, it does not matter whether it is configured in SEND or GET mode, because it will wait for commands from the client.

Then the model’s Ethernet parameters need to be configured. Open the Configuration Parameters window from Simulink, select Hardware Implementation from the left menu and then go to the Ethernet tab: here insert the values of the board IP address, subnet mask, and the gateway address.

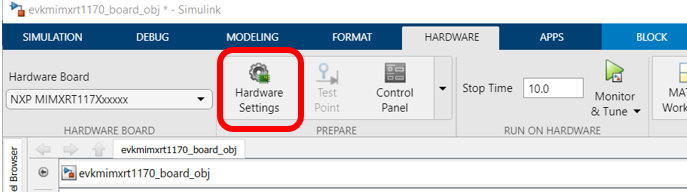
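For the TCP/IP link to work over a direct Ethernet cable, the host PC's network adapter generally has to be on the same subnet as the board. A small sketch of that check follows. The host address and subnet mask are hypothetical examples, and only the board address is taken from the scripts in this article.

```matlab
boardIp = '162.168.0.102';   % board address used in the scripts in this article
hostIp  = '162.168.0.10';    % hypothetical host adapter address
mask    = '255.255.255.0';   % hypothetical subnet mask

if sameSubnet(boardIp, hostIp, mask)
    disp('Host and board are on the same subnet.');
else
    disp('Host and board are on different subnets; check the adapter settings.');
end

function tf = sameSubnet(ipA, ipB, mask)
% True when both IPv4 addresses share the same network part under the mask.
    tf = isequal(bitand(ipToBytes(ipA), ipToBytes(mask)), ...
                 bitand(ipToBytes(ipB), ipToBytes(mask)));
end

function bytes = ipToBytes(ip)
% Convert a dotted-quad string such as '10.0.0.1' to a 1x4 uint8 vector.
    bytes = uint8(sscanf(ip, '%d.%d.%d.%d'))';
end
```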

Connect the board through USB to the computer. Go to the Download tab and select the drive you want to download the executable to. More information about this process is presented here. Also, please make sure that you have selected the NXP MIMXRT117Xxxxxx Hardware Board.

The selection of the MIMXRT117X Hardware Board should copy the default ConfigTool MEX file which you can modify, but you can also create or use another MEX file as long as it has the name <model_name>_<core>Config.mex.

The MEX file configures the peripherals and pin settings, in this case the Camera peripheral and the GPIO for the USER LED. For better performance, we recommend using a low resolution for the Camera.

Build the model and the generated executable will be copied on the target board. Restart the board.

The next step is to connect to the target board from Matlab and start receiving frames from the attached camera. For this, we will use a Matlab script that creates a board object configured to request frames from the target.

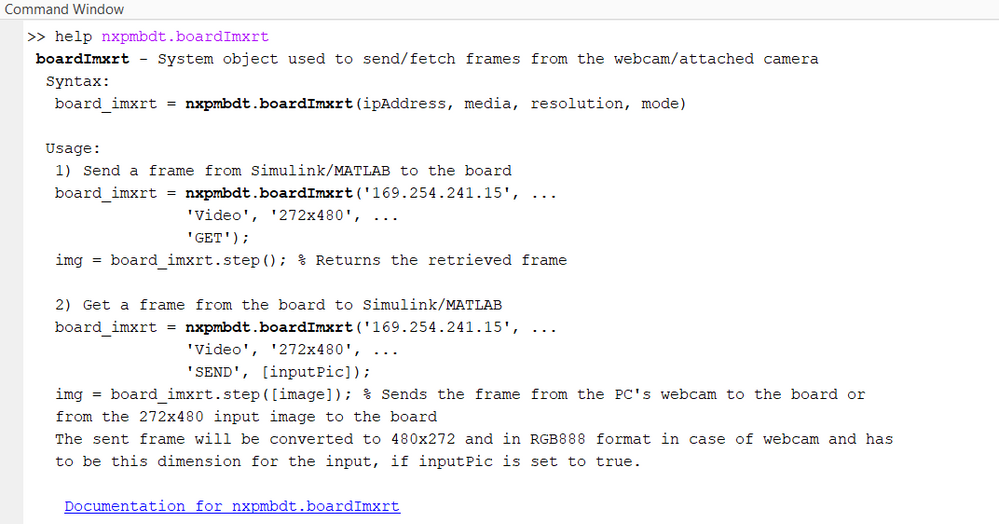

The nxpmbdt.boardImxrt object is the same type as the one used in the previous Simulink model: here we can use it as a function call in a script, so we need to configure it accordingly. For information about how to use the Board Object, use the following command:

The Matlab script that will run in co-simulation and will connect to the target board:

load trained_50_50_ep7_6classes.mat trained;

faceDetector = vision.CascadeObjectDetector;

board_imxrt = nxpmbdt.boardImxrt('162.168.0.102', ...

'Video', 'GET');

while (1)

img = board_imxrt.step();

bboxes = step(faceDetector,uint8(img));

if ~isempty(bboxes)

es = imcrop(img, bboxes(1,:));

es = imresize(es, [227 227]);

[label, ~] = classify(trained, es);

if ~strcmp(char(label), 'Unknown')

board_imxrt.led_on();

else

board_imxrt.led_off();

end

else

board_imxrt.led_off();

end

end

When running in simulation mode from a Matlab script, please make sure that the configuration structure (the script mscripts\target\imxrt_code_gen_config) is configured for the desired hardware.

After the application has received the camera frame from the board, it can start the preprocessing required for classification. We create a face detector object using the CascadeObjectDetector, to which we will input the camera frame and we receive in exchange the margins of the box where it detected a face. In the case a face was detected, we crop the image and resize it to 227x227x3, which is the size the AlexNet network requires, and we classify the image using the loaded model.
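The script takes the first bounding box returned by the detector. When several faces are in view, one possible variation (not part of the original script) is to keep the largest box, on the assumption that the person closest to the camera is the one to identify. The helper name `largestBox` is hypothetical. Boxes are rows of `[x y width height]`, the format the cascade detector returns.

```matlab
% Example detections, one row per face: [x y width height]
bboxes = [10 20 50 50; 100 40 120 130; 5 5 30 30];
best = largestBox(bboxes);   % second row, which has the largest area
disp(best);

function box = largestBox(bboxes)
% Return the row of bboxes with the largest area, or [] if there are none.
    if isempty(bboxes)
        box = [];
        return;
    end
    areas = bboxes(:, 3) .* bboxes(:, 4);
    [~, idx] = max(areas);
    box = bboxes(idx, :);
end
```

In the co-simulation loop, the result would replace `bboxes(1,:)` in the call to `imcrop`.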

The USER LED on the board will be switched on if the person was identified. The script also provides a Matlab UI Figure for better visualization.

Conclusions

The NXP MBDT Toolbox provides a solution for developing Computer Vision applications targeted at Arm Cortex-M processors. The co-simulation approach is intended as a temporary measure until Matlab adds the support required for generating CNN-optimized C code.