eIQ Sample Apps - Object Recognition using OpenCV DNN

This lab (Lab 3) explains how to get started with the OpenCV DNN application demos on an i.MX8 board using the eIQ™ ML Software Development Environment.

Get the source code from Code Aurora: https://source.codeaurora.org/external/imxsupport/eiq_sample_apps

OpenCV Inference

OpenCV offers a unified solution for both neural network inference (the DNN module) and classic machine learning algorithms (the ML module). It also includes many computer vision functions, which makes it easier to build complex machine learning applications quickly and without depending on other libraries.

The OpenCV DNN module is an inference engine only; it does not provide any model training capabilities. For training, use a dedicated machine learning framework. The inference engine supports a wide set of input model formats, including TensorFlow, Caffe, and Torch/PyTorch.

Comparison with Arm NN

Arm NN is a library focused solely on neural networks. It offers acceleration for Arm Neon, while Vivante GPUs are not currently supported. Arm NN does not support classical non-neural machine learning algorithms.

OpenCV is a broader library focused on computer vision. Besides image- and vision-specific algorithms, it supports both neural network inference and traditional non-neural machine learning algorithms. OpenCV is the best choice when your application needs a neural network inference engine together with other computer vision functionality.

Setting Up the Board

Step 1 - Create the following folders and grant them permissions as follows:

root@imx8mmevk:# mkdir -p /opt/opencv/model

root@imx8mmevk:# mkdir -p /opt/opencv/media

root@imx8mmevk:# chmod 777 /opt/opencv

Step 2 - To easily deploy the demos to the board, get the board's IP address using the ifconfig command, then set the IMX_INET_ADDR environment variable on the host as follows:

$ export IMX_INET_ADDR=<imx_ip>

Step 3 - On the target device, export the required variables:

root@imx8mmevk:~# export LD_LIBRARY_PATH=/usr/local/lib

root@imx8mmevk:~# export PYTHONPATH=/usr/local/lib/python3.5/site-packages/

Setting Up the Host

Step 1 - Download the application from eIQ Sample Apps.

Step 2 - Get the models. The following commands create the folder structure needed by the demos and retrieve the required model files:

$ mkdir -p model

$ wget -qN https://github.com/diegohdorta/models/raw/master/caffe/MobileNetSSD_deploy.caffemodel -P model/

$ wget -qN https://github.com/diegohdorta/models/raw/master/caffe/MobileNetSSD_deploy.prototxt -P model/

Step 3 - Deploy the files to the board:

$ scp -r src/* model/ media/ root@${IMX_INET_ADDR}:/opt/opencv

OpenCV DNN Applications

This application was based on:

1 - OpenCV DNN example: File-Based

The folder structure must be:

├── file.py
├── camera.py
├── media
│   └── ...
├── model
│   ├── MobileNetSSD_deploy.caffemodel
│   └── MobileNetSSD_deploy.prototxt

This example runs on a single picture by default, but you can process as many pictures as you want by saving them inside the media/ folder. The application tries to recognize all the objects in each picture.

Step 1 - Copy new images to the media/ folder:

root@imx8mmevk:/opt/opencv/media# cp <path_to_image> .

Step 2 - Run the example:

root@imx8mmevk:/opt/opencv# ./file.py

NOTE: If a GPU is available, the example prints: [INFO:0] Initialize OpenCL runtime

This demo runs inference with a Caffe model to recognize a few types of objects in all the images inside the media/ folder. It labels each recognized object in the input images. The processed images are saved in the media-labeled/ folder.
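As a rough illustration of the inference step described above, the sketch below shows how detections from a Caffe MobileNet-SSD model are typically decoded with the OpenCV DNN module. It is not the demo's actual file.py. The function names are hypothetical, and the label list, the 300x300 input size, and the scale/mean values are the usual MobileNet-SSD conventions, assumed here rather than taken from the demo.

```python
# Labels commonly shipped with the Caffe MobileNet-SSD model (PASCAL VOC).
# Assumption: the demo's model uses this 21-entry label order.
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat", "bottle",
           "bus", "car", "cat", "chair", "cow", "diningtable", "dog",
           "horse", "motorbike", "person", "pottedplant", "sheep", "sofa",
           "train", "tvmonitor"]


def decode_detections(rows, image_width, image_height, min_confidence=0.5):
    """Turn SSD output rows into (label, confidence, box) tuples.

    Each row is [image_id, class_id, confidence, x1, y1, x2, y2], with the
    box corners normalized to the 0..1 range.
    """
    results = []
    for _, class_id, confidence, x1, y1, x2, y2 in rows:
        if confidence < min_confidence:
            continue  # skip weak detections
        box = (int(x1 * image_width), int(y1 * image_height),
               int(x2 * image_width), int(y2 * image_height))
        results.append((CLASSES[int(class_id)], float(confidence), box))
    return results


def detect_objects(image_path, prototxt, caffemodel):
    """Run the model on one image (requires OpenCV built with the DNN module)."""
    import cv2  # imported lazily so decode_detections works without OpenCV

    net = cv2.dnn.readNetFromCaffe(prototxt, caffemodel)
    image = cv2.imread(image_path)
    height, width = image.shape[:2]
    # Assumed preprocessing: scale 1/127.5, 300x300 input, mean 127.5.
    blob = cv2.dnn.blobFromImage(image, 0.007843, (300, 300), 127.5)
    net.setInput(blob)
    output = net.forward()  # shape (1, 1, N, 7)
    return decode_detections(output[0, 0], width, height)
```

On the board, a call such as detect_objects("media/<image>", "model/MobileNetSSD_deploy.prototxt", "model/MobileNetSSD_deploy.caffemodel") would return the labels and pixel boxes that a script could then draw onto the image.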

Step 3 - Display the labeled image with the following command:

root@imx8mmevk:/opt/opencv/media-labeled# gst-launch-1.0 filesrc location=<image> ! jpegdec ! imagefreeze ! autovideosink

2 - OpenCV DNN example: MIPI Camera

This example is the same as above, except that it uses camera input. It enables the MIPI camera, runs inference on each captured frame, and displays the result in a window in real time:

root@imx8mmevk:/opt/opencv# ./camera.py

3 - OpenCV DNN example: MIPI Camera improved

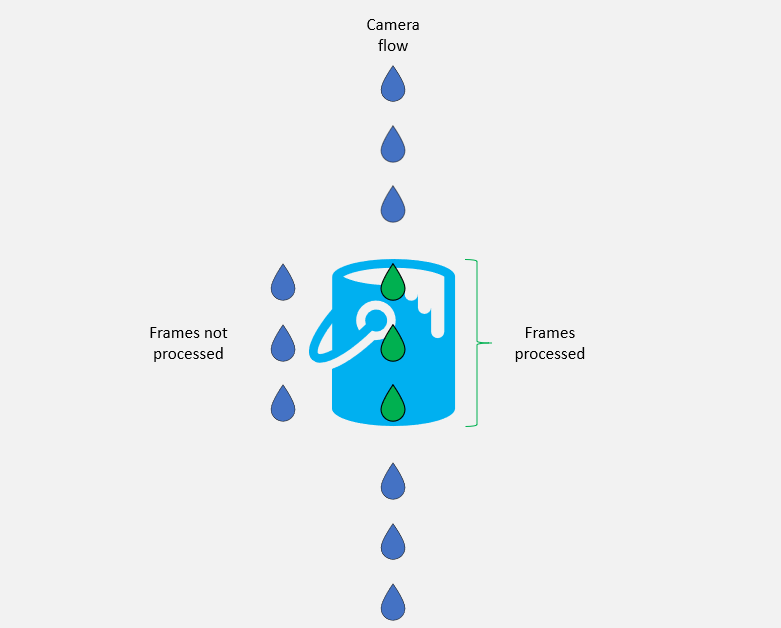

This example differs from the previous one by adding GStreamer support. Following the idea of the Leaky Bucket algorithm, the GStreamer pipeline lets the camera keep running in its own thread (the bucket overflows when full), even when the inference thread (the bucket's capacity) has not yet processed the previous frame.

As a result, this demo shows smooth camera video at the expense of dropping some frames from the inference process.

root@imx8mmevk:/opt/opencv# ./camera_improved.py
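The frame-dropping idea behind this example can be sketched in plain Python as a one-slot buffer shared between a camera thread and an inference thread. This is only an illustration of the concept, not the code in camera_improved.py; the class and method names are hypothetical. In a GStreamer pipeline, a similar effect is usually obtained with the appsink max-buffers and drop properties, though whether the demo relies on them is not stated here.

```python
import threading


class LatestFrameSlot:
    """One-slot buffer: a new frame overwrites one that was never processed."""

    def __init__(self):
        self._lock = threading.Lock()
        self._frame = None
        self.dropped = 0  # frames discarded before inference saw them

    def put(self, frame):
        """Called by the camera thread; never blocks on the inference thread."""
        with self._lock:
            if self._frame is not None:
                self.dropped += 1  # bucket overflow: the old frame is discarded
            self._frame = frame

    def take(self):
        """Called by the inference thread; returns None if no new frame is waiting."""
        with self._lock:
            frame, self._frame = self._frame, None
            return frame


# Usage: the camera delivers five frames while a single inference is running.
slot = LatestFrameSlot()
for frame_number in range(5):
    slot.put(frame_number)
latest = slot.take()  # only the newest frame reaches the inference step
```

The display path stays smooth because put() never waits, while the inference thread always works on the most recent frame and silently skips the rest.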

Hi diegodorta, how do I download the source code from here to set up the host machine?

Hi Dinesh,

Go to the repository and click on "summary". Below it there is a clone link, so you can download the repository using the git tool:

$ git clone https://source.codeaurora.org/external/imxsupport/eiq_sample_apps

Cloning into 'eiq_sample_apps'...

remote: Counting objects: 87, done.

remote: Compressing objects: 100% (79/79), done.

remote: Total 87 (delta 30), reused 26 (delta 4)

Unpacking objects: 100% (87/87), done.

$ cd eiq_sample_apps/

Hope this helps :)

Thanks,

Diego

Thanks. One more thing: does OpenCV perform inference on the GPU or the CPU?

Hi,

I tried to test this example with video. However, I found that the time the DNN takes to process one frame is close to the display time of 10 video frames.

    import time
    import cv2 as opencv  # assumed alias; the original post does not show its imports

    def dnn_parse(nn, frame, multipe=1):
        height, width, color_lane = frame.shape
        if multipe != 1:
            # Scale the frame before inference.
            height = int(height * multipe)
            width = int(width * multipe)
            print("h:{}w:{}".format(height, width))
            # Sizes are passed as in the original post; OpenCV expects (width, height).
            frame = opencv.resize(frame, (height, width))
        start_time = time.time()
        blob = opencv.dnn.blobFromImage(frame, 0.009718, (height, width), 127.5)
        nn.setInput(blob)
        det = nn.forward()
        end_time = time.time()
        print("height{} width{} time{}".format(height, width, end_time - start_time))

Output:

    h:136w:240
    height136 width240 time0.264429092407
    height272 width480 time0.620328903198
    h:544w:960
    height544 width960 time2.25029802322

I then considered running the DNN analysis only on every (N*k)-th frame (k = 1, 2, 3, ...), but found that OpenCV does not support skipping frames or reporting the total number of video frames:

(python:4431): GStreamer-CRITICAL **: gst_query_set_position: assertion 'format == g_value_get_enum (gst_structure_id_get_value (s, GST_QUARK (FORMAT)))' failed

Using Wayland-EGL

Using the 'xdg-shell-v6' shell integration

frame_count.-1.0

WARN: h264bsdDecodeSeiParameters not valid

How to solve this problem?

Hi coindu,

We provided a demo with improved performance in example 3 (3 - OpenCV DNN example: MIPI Camera improved). The same approach has not been validated with video input yet. Could you please give it a try and see if it helps?

marcofranchi, FYI.

Thanks,

Vanessa

I followed the camera_improved.py demo, using a video file instead of v4l2 as the input. The video runs at 15 fps, so each frame is displayed for 0.067 s, but it takes 0.6 s to process one 480x272 frame. As a result, the object recognition overlay lags behind the video.

I want to process the 10th frame while the first frame of the video is being played, but this method cannot seek to a given frame.
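The arithmetic behind this can be made explicit. The sketch below computes how many video frames are displayed while one inference runs, using the 15 fps rate and the timings reported earlier in this thread. The function names are hypothetical, and it only works out which frames to analyze; it does not solve seeking in the video source.

```python
import math


def frame_stride(fps, inference_seconds):
    """Number of frames displayed while one inference runs, rounded up."""
    return max(1, math.ceil(fps * inference_seconds))


def frames_to_analyze(total_frames, stride):
    """Indices of the frames that would be sent to the DNN."""
    return list(range(0, total_frames, stride))


# Timings reported above: 240x136, 480x272 and 960x544 frames.
stride_small = frame_stride(15, 0.264429092407)
stride_medium = frame_stride(15, 0.620328903198)
stride_large = frame_stride(15, 2.25029802322)
```

For the 480x272 case this gives a stride of 10, which matches the idea of analyzing the 10th frame while the first one is being displayed.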

Hi coindu,

As vanessamaegima said, we do not have a validated solution for a video file source yet, but I expect that passing a video file directly to OpenCV's VideoCapture will give the worst possible results.

So please try feeding the video file through a GStreamer pipeline, such as the example below:

filesrc location=video_device.mp4 typefind=true ! decodebin ! imxvideoconvert_g2d ! video/x-raw,format=RGBA,width={},height={} ! videoconvert ! appsink sync=false

BR,

Marco
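The pipeline above leaves width and height as {} placeholders. A minimal sketch of how it could be filled in and handed to OpenCV follows. The helper names are hypothetical, it assumes OpenCV was built with GStreamer support, and like the pipeline itself it has not been validated with video files.

```python
def build_file_pipeline(video_path, width, height):
    """Fill the video path and frame size into the pipeline string."""
    return ("filesrc location={} typefind=true ! decodebin ! "
            "imxvideoconvert_g2d ! "
            "video/x-raw,format=RGBA,width={},height={} ! "
            "videoconvert ! appsink sync=false").format(video_path, width, height)


def open_video(video_path, width, height):
    """Open the file through GStreamer instead of passing the path directly."""
    import cv2  # imported lazily so build_file_pipeline works without OpenCV

    pipeline = build_file_pipeline(video_path, width, height)
    return cv2.VideoCapture(pipeline, cv2.CAP_GSTREAMER)
```

For example, open_video("video_device.mp4", 480, 272) would request 480x272 frames, the size used in the timing discussion above.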