Using TensorFlow Lite Micro to Perform Handwritten Digit Recognition on i.MX RT devices

Convolutional neural networks (CNNs) are the most popular neural network approach to image recognition. Image recognition can be used for a wide variety of tasks, such as facial recognition for monitoring and security, car vision for safety and traffic sign recognition, or augmented reality. All of these tasks require low latency, strong security, and privacy, which cannot be guaranteed with cloud-based solutions. NXP eIQ makes it possible to run deep neural network inference directly on an MCU, enabling intelligent, powerful, and affordable edge devices.

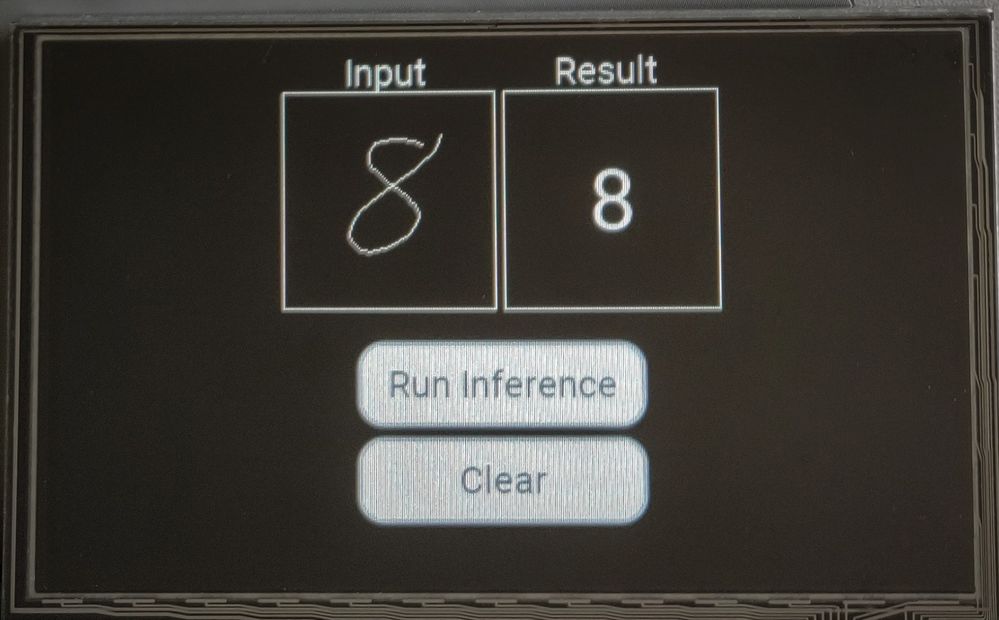

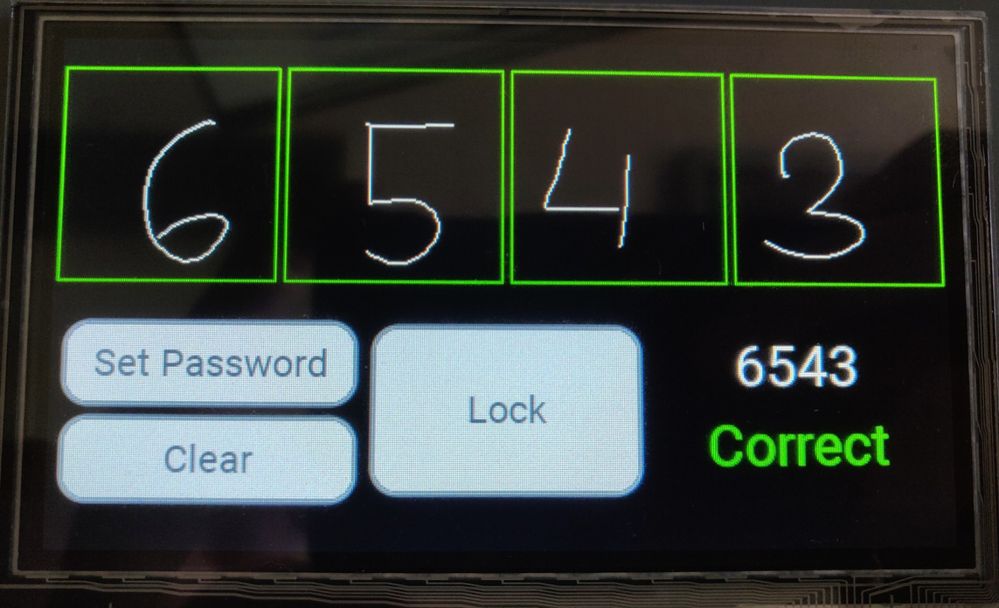

As a case study about CNNs on MCUs, a handwritten digit recognition example was created. It runs on the i.MX RT1060 and uses an LCD touch screen as the input interface. The application can recognize digits drawn with a finger on the LCD.

Handwritten digit recognition is a popular “hello world” project for machine learning. It is usually based on the MNIST dataset, which contains 70,000 images of handwritten digits. Many machine learning algorithms and techniques have been benchmarked on this dataset since its creation, and convolutional neural networks are among the most successful.
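To make the link between a touch-screen drawing and an MNIST-style model input more concrete, here is a minimal sketch in Python. It is not taken from the application or from the application note; it only assumes that the drawing is available as a square grid of 0/1 pixels whose side is a multiple of 28 (MNIST images are 28x28 grayscale). The function name `downsample_to_mnist` and the canvas size are hypothetical, chosen for illustration.

```python
MNIST_SIDE = 28  # MNIST images are 28x28 grayscale pixels


def downsample_to_mnist(canvas, side=MNIST_SIDE):
    """Average-pool a square 0/1 canvas down to side x side floats in [0, 1].

    Assumption (illustrative only): the canvas is a list of equal-length rows,
    it is square, and its size is an integer multiple of `side`.
    """
    size = len(canvas)
    if size == 0 or any(len(row) != size for row in canvas):
        raise ValueError("canvas must be a non-empty square grid")
    if size % side != 0:
        raise ValueError("canvas size must be a multiple of the target side")

    block = size // side   # each output pixel covers a block x block area
    area = block * block
    result = []
    for r in range(side):
        out_row = []
        for c in range(side):
            total = 0
            for y in range(r * block, (r + 1) * block):
                for x in range(c * block, (c + 1) * block):
                    total += canvas[y][x]
            out_row.append(total / area)  # fraction of inked pixels in the block
        result.append(out_row)
    return result


if __name__ == "__main__":
    # Hypothetical 56x56 canvas with a short vertical stroke.
    canvas = [[0] * 56 for _ in range(56)]
    for y in range(10, 46):
        canvas[y][28] = 1
        canvas[y][29] = 1
    image = downsample_to_mnist(canvas)
    print(len(image), len(image[0]))
```

Average pooling is only one possible choice here; it keeps partially covered blocks as intermediate gray values, which is closer to the anti-aliased look of MNIST digits than simple subsampling would be.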

The code is accompanied by an application note that describes how the application was created and explains the technologies it uses. The note covers the MNIST dataset, TensorFlow, the application’s accuracy, and other topics.

Application note URL: https://www.nxp.com/docs/en/application-note/AN12603.pdf (also available on the documentation page for the i.MX RT1060)

The application code is in the attached zip files: `*_eiq_mnist` is the basic application from the first image and `*_eiq_mnist_lock` is the extended version from the second image. The applications are provided as MCUXpresso projects and require an existing installation of the i.MX RT1060/RT1170 SDK with the eIQ component included.

The software for this application note was also ported to CMSIS-NN, using a Caffe version of the MNIST model, in a follow-up application note: https://www.nxp.com/docs/en/application-note/AN12781.pdf