
How long does it take to respond to the ENET receive interrupt?


I am using the RT1064, but I don't know how long the ENET takes to respond to the receive interrupt. I am measuring the delay between the send time and the receive time over ENET, and I found that the delay decreases as the number of received frames increases. I have disabled interrupt coalescing (ENETx_RXIC). How can I set the interrupt response time?




Hello,

I apologize for the delayed response; this case was mishandled and I didn't see your reply in time. Please see my comments below regarding your questions.


Hello,

I'm not sure I correctly understood the behavior you are seeing; could you please clarify? Also, are you using the Ethernet examples we provide in the SDK? If so, could you please tell me which example and which SDK version?

Regards,

Victor


I am using SDK version 2.7 and AN12449.


Hello,

I think there's a misunderstanding: application note AN12449 covers sensor data protection with the SE050 and uses a Kinetis K64, not an RT. Are you using an example from the RT SDK? If so, could you please tell me how I can reproduce the behavior you mentioned using the RT1064-EVK and the SDK examples? Also, you are using an old version of the SDK; I highly recommend migrating to the newest one.

Regards,

Victor


I am using the AN12149 application note, not AN12449; my mistake. I am using the RT1064 to test the end-to-end latency of Ethernet, with SDK version 2.7. There are 20 nodes in the network, and all of them are time-synchronized. Each node periodically sends multicast data and records its send time just before sending; the receiving node records its receive time in the receive interrupt. We found that the end-to-end delay is at least 5 ms, which seems too high.

Is it possible that the receive interrupt is only triggered once a certain number of frames has been received or a certain time has elapsed? We did not configure interrupt coalescing.


Thanks for the additional information. Measuring latency inside the interrupt handler adds latency to the TCP protocol handling, so it disturbs the timing of the very communication you are measuring. Hence, your latency measurements are not accurate. The correct way to measure this would be to use a trace analyzer together with a hub.

Regarding your question about when the interrupt is triggered: by default, our SDK examples are configured to trigger an interrupt for every frame.


We are not using TCP; we send raw Ethernet frames directly, without a TCP/IP stack. What we are testing is not interrupt latency but the end-to-end latency from the sender to the receiver. The delay measured on the RT1064 platform is 5 ms, whereas on other platforms we measured only 200 µs.


The interrupt handler is not the best place to measure Ethernet frame or TCP/IP packet delays. You would do better to use network analysis tools such as Wireshark, which show the exact times at which Ethernet frames arrive at and leave a device, and the time differences between them.

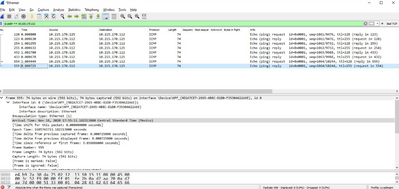

I ran a couple of tests on my end and did not get the results you mentioned. For the tests, I used the lwip_dhcp_bm example from the SDK and measured the times with Wireshark, getting around 600 µs. See the image above: IP 125 corresponds to a PC and 112 to the RT.

What time do you get if you measure it the way I suggested?


What is the purpose of the statement `AT_NONCACHEABLE_SECTION_ALIGN(static enet_rx_bd_struct_t g_rxBuffDescrip_0[ENET_RXBD_NUM], FSL_ENET_BUFF_ALIGNMENT)`? I am using the latest SDK, 2.8.6. Does changing ENET_RXBD_NUM from 4 to 1 affect reception? In my tests, when the receive interrupt is entered there are already frames waiting in the receive buffer, so the message I read is not the most recent one; after changing ENET_RXBD_NUM to 1, I get the latest message. Will this modification affect other mechanisms?


I have now moved to SDK version 2.8.6. I use two network ports for redundant sending and receiving. When no PTP packets are received, the network delay is 400 µs, but after receiving PTP packets and retaining their timestamps (modified according to AN12149), the delay increases to 100 ms. I have confirmed that the message is sent, but it is received much later. Does the PTP receive-timestamping mechanism affect the subsequent reception of other data frames and increase their delay?

Let me describe my application scenario. I use two nodes as slaves, which synchronize their time with the master node through PTP messages. Each slave node has two network ports for sending and receiving, but only one of them receives PTP packets from the master node. Both ports receive data packets from the slave nodes. On the slave node, the receive delay on the port that receives the master's PTP packets is now much greater than on the other port, which does not receive PTP packets.