How to improve LS1046A Ethernet UDP Packet transmission speed?

Hello,

I am using a custom LS1046A board.

I ported QorIQ-SDK-v2.0-1703 (kernel 4.1.35-rt41) to it.

We ran the following experiment.

1. Board A was connected to board B over a 10G optical link (fm1-mac9).

2. Of the 4 cores, cores 1 to 3 were isolated.

3. The transmit and receive threads were assigned to the isolated cores.

4. The QMAN interrupt was assigned to the isolated cores.

5. A SOCK_DGRAM socket was created and a UDP packet of (42+28) bytes was sent from A to B.

6. Using a timer, one packet was transmitted every 500 us.

7. A GPIO test point (TP) was toggled at sendto on board A and at recvfrom on board B.

8. The delay between the TPs of A and B was measured with an oscilloscope.

The measured delay averaged 104 us, with a maximum of 136 us.

I need the delay to stay below 125 us, with consistent timing from packet to packet.
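For readers who want to reproduce the shape of this test without two boards, here is a minimal sketch in Python. It is not the code used in this thread: it sends over loopback instead of the 10G link, replaces the GPIO toggles with timestamps, and assumes that "(42+28) bytes" means 42 bytes of Ethernet/IP/UDP headers plus a 28-byte payload. The name measure_loopback is hypothetical.

```python
import socket
import time

PAYLOAD = bytes(28)   # assumed payload size; the kernel adds the headers
PERIOD_S = 500e-6     # one packet every 500 us, as in step 6


def measure_loopback(count=100, period_s=PERIOD_S):
    """Send `count` datagrams over loopback; return per-packet latency in us."""
    rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    rx.bind(("127.0.0.1", 0))   # loopback stands in for board B
    rx.settimeout(1.0)
    tx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    latencies_us = []
    next_send = time.monotonic()
    try:
        for _ in range(count):
            # Sleep until the next deadline (coarser than a hardware timer).
            delay = next_send - time.monotonic()
            if delay > 0:
                time.sleep(delay)
            t0 = time.monotonic_ns()              # stands in for the TP at sendto
            tx.sendto(PAYLOAD, rx.getsockname())
            rx.recvfrom(2048)
            t1 = time.monotonic_ns()              # stands in for the TP at recvfrom
            latencies_us.append((t1 - t0) / 1000.0)
            next_send += period_s
    finally:
        tx.close()
        rx.close()
    return latencies_us


if __name__ == "__main__":
    lat = measure_loopback()
    print(f"avg {sum(lat) / len(lat):.1f} us, max {max(lat):.1f} us")
```

The numbers from such a loopback run say nothing about the DPAA path; the sketch only shows the send/receive pattern that the oscilloscope measurement brackets.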

I am looking for ways to reduce the delay and the jitter.

Any ideas are welcome, so if you know of a method, please let me know.

Thank you.

Best Regards,

Gyosun.

Hello @Gyosun,

If you see jitter, you first need to determine where it originates. For this you can use a profiling tool such as perf.

See:

http://www.brendangregg.com/perf.html

http://www.brendangregg.com/perf.html#FlameGraphs

You can profile both kernel space and user space.

Below is an example script I used to generate a flame graph ($1 is the output file name):

cd FlameGraph/

perf record -F 99 -a -g -- sleep 60

perf script | ./stackcollapse-perf.pl > out.perf-folded

./flamegraph.pl out.perf-folded > $1

Do not rely on the flame graph alone.

You can, for example, measure the CPU cycles spent:

perf record -F 99 -e cpu-cycles -a -g -- sleep 10

perf report --stdio

# This report shows the share of CPU cycles used by the realloc path (tests were run on 16 cores)

|--9.38%--dev_hard_start_xmit
          |
           --9.09%--dpaa2_eth_tx
                     |
                     |--4.69%--skb_realloc_headroom
                     |          |
                     |          |--1.88%--memcpy
                     |          |
                     |          |--1.53%--pskb_expand_head
                     |          |          |
                     |          |           --0.67%--__kmalloc_reserve.isra.63
                     |          |                     |
                     |          |                      --0.52%--__kmalloc_node_track_
                     |          |
                     |           --0.94%--skb_clone
                     |                     |
                     |                      --0.67%--kmem_cache_alloc
                     |
                     |--1.91%--consume_skb
                     |          |
                     |          |--1.24%--skb_release_all
                     |          |          |
                     |          |           --0.66%--skb_release_data
                     |          |                     |
                     |          |                      --0.61%--skb_free_head
                     |          |                                |
                     |          |                                 --0.58%--kfree
                     |          |
                     |           --0.56%--kfree_skbmem
                     |                     |
                     |                      --0.55%--kmem_cache_free
                     |
                      --0.96%--dpaa2_eth_enqueue_fq
                                |
                                 --0.78%--dpaa2_io_service_enqueue_fq
                                           |
                                            --0.71%--qbman_swp_enqueue_mem_back
                                                      |
                                                       --0.57%--qbman_swp_enqueue_mul

# Eliminating the realloc improves performance:

|--3.88%--dev_queue_xmit
          |
           --3.63%--__dev_queue_xmit
                     |
                      --1.91%--sch_direct_xmit
                                |
                                 --1.28%--dev_hard_start_xmit
                                           |
                                            --1.08%--dpaa2_eth_tx
                                                      |
                                                       --0.55%--dpaa2_eth_enqueue_fq

# Without the realloc, only 1.28 percent of cycles were spent in dev_hard_start_xmit (1.08 percent in dpaa2_eth_tx), down from 9.38 percent (9.09 percent).

The above example is not DPAA related. It is only a demonstration of how you can use this tool.
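Comparing two such reports by eye gets tedious, so a small script can help. The following is a rough sketch, not part of perf: it assumes call-graph lines of the form "--N%--function" as in the output above, and the helper names parse_callgraph and diff_runs are hypothetical.

```python
import re

# Matches entries such as "|--9.38%--dev_hard_start_xmit" or "--0.58%--kfree".
ENTRY_RE = re.compile(r"--(\d+(?:\.\d+)?)%--(\S+)")


def parse_callgraph(text):
    """Map function name -> percentage for each call-graph entry in `text`."""
    shares = {}
    for line in text.splitlines():
        match = ENTRY_RE.search(line)
        if match:
            pct, name = float(match.group(1)), match.group(2)
            # A function can appear on several paths; keep its largest share.
            shares[name] = max(pct, shares.get(name, 0.0))
    return shares


def diff_runs(before, after):
    """Return (name, before, after, change) for functions seen in both runs."""
    rows = [
        (name, before[name], after[name], round(after[name] - before[name], 2))
        for name in before
        if name in after
    ]
    return sorted(rows, key=lambda row: row[3])  # largest decrease first


if __name__ == "__main__":
    # Entries copied from the two reports above.
    with_realloc = parse_callgraph(
        "|--9.38%--dev_hard_start_xmit\n--9.09%--dpaa2_eth_tx\n"
        "--0.96%--dpaa2_eth_enqueue_fq"
    )
    without_realloc = parse_callgraph(
        "--1.28%--dev_hard_start_xmit\n--1.08%--dpaa2_eth_tx\n"
        "--0.55%--dpaa2_eth_enqueue_fq"
    )
    for row in diff_runs(with_realloc, without_realloc):
        print(row)
```

Functions whose share moves a lot between otherwise identical runs are the first candidates to look at when chasing jitter.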

Regards,

Sebastian

Hi Sebastian,

I installed perf and have attached the results of the following commands.

# perf record -F 99 -e cpu-cycles -a -g -- sleep 10

# perf report --stdio > perf.report

Thank you.

Best Regards,

Gyosun.

Hello @Gyosun,

I suggested this tool so that you can analyze the system and see whether it helps to determine where the jitter comes from.

In the example I gave, you can clearly see the percentage of cycles spent in each function.

Have you determined where most of the time is spent, and have you observed how the percentages change between several runs?

Can you draw any conclusions from the perf output?

Regards,

Sebastian

Hi Sebastian,

I am not familiar with profiling tools.

I tested with two boards, toggling a GPIO TP at sendto and at recvfrom.

I accumulated the measurements on an oscilloscope.

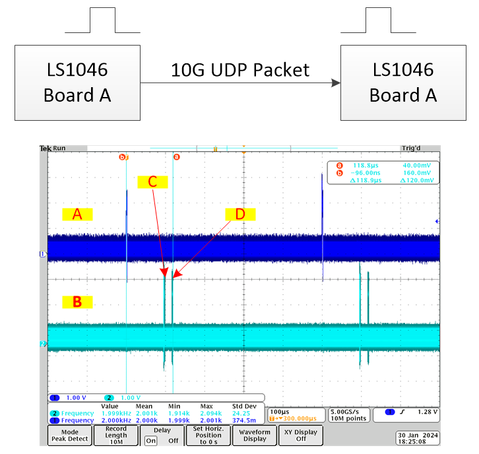

Description of the sections:

A: sendto point on board A

B: recvfrom point on board B

C: Average packet transmission time from A to B

We would like to achieve two things.

1. Sometimes, as in section D, the transmission takes longer than the average time.

We want to eliminate these cases.

2. We want to reduce section C.

Kernel options, sysctl settings, and so on: anything is fine.
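If the delays are also logged as numbers, the two goals (fewer section D outliers, a smaller section C) can be tracked with a short summary. This is only a sketch: the function name summarize is hypothetical, the 125 us budget is the target stated earlier in the thread, and the sample values are made up for illustration, not measured.

```python
from statistics import mean


def summarize(samples_us, budget_us=125.0):
    """Summarize latency samples against a latency budget (all values in us)."""
    return {
        "mean_us": mean(samples_us),                       # section C
        "max_us": max(samples_us),
        "jitter_us": max(samples_us) - min(samples_us),
        "over_budget": [s for s in samples_us if s > budget_us],  # section D
    }


if __name__ == "__main__":
    # Hypothetical samples, not measured data: mostly typical, one outlier.
    samples = [102.0, 104.0, 103.0, 136.0, 105.0, 104.0]
    print(summarize(samples))
```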

Thank you for your proactive response.

Thank you.

Best Regards,

Gyosun.

Hello @Gyosun,

The tool I pointed you to comes with many examples that you can use as a reference.

It is very difficult to work offline and give you suggestions based on log output alone.

What I would do if I were you:

- As already said, I would check with perf whether there are functions where the time spent varies between several runs. If I noticed something, I would try to determine whether anything could be done to reduce the time spent there. If not, I would try other approaches:

- Isolate the cores involved in your application (make sure nothing else is scheduled there).

- Run the preempt-rt version of Linux.

- Use DPDK together with a TCP/UDP stack and see how it improves.
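As an illustration of the core-isolation point, here is a minimal sketch of pinning the calling thread to one CPU and requesting a real-time scheduling class from user space. It assumes Linux; the function names and the priority value 50 are hypothetical, and it does not isolate the core by itself (that is usually done at boot, for example with the isolcpus= kernel parameter).

```python
import os


def pin_to_cpu(cpu):
    """Restrict the calling thread to one CPU; return the resulting CPU set."""
    os.sched_setaffinity(0, {cpu})      # 0 means "the caller"
    return os.sched_getaffinity(0)


def try_realtime(priority=50):
    """Request SCHED_FIFO; return False if the kernel refuses.

    This normally needs root or CAP_SYS_NICE, so failure is reported
    instead of raised.
    """
    try:
        os.sched_setscheduler(0, os.SCHED_FIFO, os.sched_param(priority))
        return True
    except OSError:
        return False


if __name__ == "__main__":
    cpu = min(os.sched_getaffinity(0))  # pick a CPU this process may use
    print("pinned to", pin_to_cpu(cpu))
    print("SCHED_FIFO granted:", try_realtime())
```

On the setup described in this thread, the transmit or receive thread would be pinned to one of the isolated cores 1 to 3 rather than to whichever CPU happens to be allowed first.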

Hello @Gyosun,

I would like to let you know that I am working on your questions. I will get back to you as soon as I have an update.

Thank you for your patience.

Regards,

Sebastian

Hi Sebastian,

I spent a long time debugging.

I found the cause in the dpaa_eth_refill_bpools function in drivers/net/ethernet/freescale/sdk_dpaa/dpaa_eth_sg.c.

When the buffer count crossed THRESHOLD, the pool was refilled all the way up to MAX, and this refill caused the jitter.

So I enlarged the buffer pool and set the threshold so that only one buffer is reallocated at a time, as follows.

CONFIG_FSL_DPAA_ETH_MAX_BUF_COUNT=32768

CONFIG_FSL_DPAA_ETH_REFILL_THRESHOLD=32767

With this change the jitter disappeared and reception became stable.

Will this modification cause any problems?

Please let me know if there is any way to improve this further.
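To make the effect of the two settings easier to see, here is a simplified model of a threshold-triggered refill. It is a sketch under assumptions, not the driver code: it assumes one buffer is consumed per received packet, that a refill starts once the count drops below the threshold, and that buffers are added in batches of 8 until the maximum is reached. The function name refill_bursts and the 128/80 comparison values are hypothetical.

```python
def refill_bursts(max_count, threshold, packets, batch=8):
    """Return how many buffers are allocated after each received packet."""
    count = max_count
    bursts = []
    for _ in range(packets):
        count -= 1                    # one buffer consumed per received packet
        added = 0
        if count < threshold:         # refill only once below the threshold
            while count < max_count:  # then keep going until the pool is full
                count += batch        # assumed batch size
                added += batch
        bursts.append(added)
    return bursts


if __name__ == "__main__":
    # Hypothetical small pool with a wide gap between threshold and maximum.
    wide = refill_bursts(max_count=128, threshold=80, packets=200)
    # The settings from this post: threshold just below the maximum.
    narrow = refill_bursts(max_count=32768, threshold=32767, packets=200)
    print("largest burst, wide gap:  ", max(wide))
    print("largest burst, narrow gap:", max(narrow))
```

In this model a wide gap produces rare but large allocation bursts, while a narrow gap produces frequent small ones, which matches the reported drop in jitter. The larger pool also keeps more buffer memory allocated.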

Thank you.

Best Regards,

Gyosun.
