PyeIQ 3.x Release User Guide

A Python Demo Framework for eIQ on i.MX Processors

PyeIQ is written on top of the eIQ™ ML Software Development Environment. It provides a set of Python classes that let users run Machine Learning applications simply and efficiently, without spending time on cross-compilation, deployment or reading extensive guides.

PyeIQ 3.0.x is now released. This release is based on i.MX Linux BSP 5.4.70_2.3.0 and 5.4.70_2.3.2 (8QM, 8M Plus). It also works on i.MX Linux BSP 5.10.9_1.0.0, 5.10.35_2.0.0 and 5.10.52_2.1.0. The latest release, PyeIQ 3.1.0, adds BSP 5.10.72_2.2.0 to the supported list.

This article is a simple guide for users. For further questions, please post a comment on eIQ Community or just below this article.

*There is no plan to test or maintain PyeIQ on BSP 5.15 and later versions. For more ML demos on BSP 5.15 and later, we recommend NXP gopoint (formerly known as NXP Demo Experience). You can find more details in this link.

Official Releases

| BSP Release | PyeIQ Release | PyeIQ Updates | Board | Date | Status | Notes |
|---|---|---|---|---|---|---|
| | | | | Apr, 2020 | PoC | |
| | | | | May, 2020 | | |
| | | | | Jun, 2020 | Stable | |
| | | | | Jun, 2020 | | |
| | | | | Aug, 2020 | | |
| | | | | Nov, 2020 | | |
| | | | | Jul, 2021 | Stable | |
| | | | | Sep, 2021 | | |
| | | | | Dec, 2021 | | |
| | | | | Dec, 2021 | | |

*Release v3.0.x also works on i.MX Linux BSP 5.10.9_1.0.0, 5.10.35_2.0.0 and 5.10.52_2.1.0, and Release v3.1.0 works on 5.10.72_2.2.0. The reasons and details are covered at the end of this guide.

Major Changes

3.1.0

- Add VX delegate support to all apps and demos.

3.0.0

- Remove all non-quantization models.

- Update the switch video application. It now supports inference on CPU, GPU and NPU.

- Add Covid19 detection demo.

2.0.0

- General major changes on project structure.

- Split project into engine, modules, helpers, utils and apps.

- Add base class to use on all demos avoiding repeated code.

- Support for more demos and applications including Arm NN.

- Support for building using Docker.

- Support for downloading data from multiple servers.

- Support for searching for devices and building pipelines.

- Support for appsink/appsrc on i.MX 8QM (not working on i.MX 8M Plus).

- Support for camera and H.264 video.

- Support for Full HD, HD and VGA resolutions.

- Support for video and image input on all demos.

- Display info in the frame, such as FPS, model and inference time.

- Add manager tool to launch demos and applications.

- Add document page for PyeIQ project.

1.0.0

- Support demos based on TensorFlow Lite (2.1.0) and image classification.

- Support inference running on GPU/NPU and CPU.

- Support file and camera as input data.

- Support SSD (Single Shot Detection).

- Support downloads on the fly (models, labels, dataset, etc).

- Support old eIQ demos from eiq_sample_apps CAF repository.

- Support model training for host PC.

- Support UI for switching inference between GPU/NPU/CPU on TensorFlow Lite.

Copyright and License

Copyright 2021 NXP Semiconductors. Free use of this software is granted under the terms of the BSD 3-Clause License. Source code can be found on CodeAurora. See LICENSE for details.

Getting Started

1. Installation for Users

Quick Installation using PyPI

Use the pip3 tool to install the package from the PyPI repository:

# pip3 install pyeiq

* PyeIQ v1 and v2 can be installed with "pip3 install eiq". For more details, please check PyPI - eiq.

Easy Installation using Tarball

PyeIQ can be installed with offline tarballs as well:

# pip3 install <tarball>

Tarballs for the main versions are listed below:

| PyeIQ Version | Download Link |
|---|---|
| 1.0.0 | eiq-1.0.0.tar.gz |
| 2.0.0 | eiq-2.0.0.tar.gz |
| 3.0.0 | pyeiq-3.0.0.tar.gz |

Other versions can be found and downloaded from PyPI - eiq and PyPI - pyeiq.
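After installing from PyPI or a tarball, it can help to confirm which package is actually present. According to this guide, v1/v2 are published as eiq and v3 as pyeiq. The sketch below uses Python's standard importlib.metadata. `installed_pyeiq` is a hypothetical helper and is not part of PyeIQ.

```python
"""Sketch: report which PyeIQ distribution (if any) is installed."""
from importlib import metadata
from typing import Optional, Tuple


def installed_pyeiq() -> Optional[Tuple[str, str]]:
    """Return (distribution name, version), or None if neither name is installed."""
    for dist in ("pyeiq", "eiq"):  # check the 3.x name first, then v1/v2
        try:
            return dist, metadata.version(dist)
        except metadata.PackageNotFoundError:
            continue  # not installed under this name, try the next one
    return None


if __name__ == "__main__":
    print(installed_pyeiq() or "PyeIQ is not installed")
```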

2. PyeIQ Manager Tool

Installing PyeIQ also installs a manager tool called pyeiq. You can use it to run the demos and applications, specify parameters, get information and more.

To start the manager tool:

# pyeiq

The above command returns the PyeIQ manager tool options:

| Manager Tool Command | Description | Example |
|---|---|---|
| pyeiq --list-apps | List the available applications. | |
| pyeiq --list-demos | List the available demos. | |
| pyeiq --run <app_name/demo_name> | Run the application or demo. | # pyeiq --run switch_image |
| pyeiq --info <app_name/demo_name> | Show a short description and usage of the application or demo. | # pyeiq --info object_detection_tflite |
| pyeiq --clear-cache | Clear cached media generated by demos. | |

3. Running Samples

PyeIQ requires a network connection to retrieve the required model & media package.

Available applications and demos in the PyeIQ 3.0.0 release:

Applications: Switch Classification Image (switch_image), Switch Detection Video (switch_video)

Demos: Covid19 Detection (covid19_detection), Object Classification (object_classification_tflite), Object Detection (object_detection_tflite)

Available Demos Parameters

| Parameter | Description | Example of usage |
|---|---|---|
| -d / --download | Chooses the server from which the model & media package is downloaded. Options: drive, github, wget. Default: wget. | # pyeiq --run <demo name> --download drive |
| -i / --image | Runs the demo on an image of your choice. Default: the image in the downloaded model & media package. | # pyeiq --run <demo name> --image <path to image>/<image name> |
| -l / --labels | Uses a labels file of your choice. Labels and model must be compatible for correct output. Default: the label file in the downloaded model & media package. | # pyeiq --run <demo name> --labels <path to label file>/<label file name> |
| -m / --model | Uses a model file of your choice. Default: the model file in the downloaded model & media package. | # pyeiq --run <demo name> --model <path to model file>/<model file name> |
| -r / --res | Chooses the resolution of your video capture device. Options: full_hd (1920x1080), hd (1280x720), vga (640x480). Default: hd if supported, otherwise the best one available. | # pyeiq --run <demo name> --res vga |
| -f / --video_fwk | Chooses the framework used to display the video. Options: opencv, v4l2, gstreamer (experimental). Default: v4l2. | # pyeiq --run <demo name> --video_fwk v4l2 |
| -v / --video_src | Runs inference on video from a video capture device or a video file. Options: True (find a video capture device automatically), /dev/video<x>, <path to video file>. | # pyeiq --run <demo name> --video_src True or # pyeiq --run <demo name> --video_src /dev/video1 or # pyeiq --run <demo name> --video_src <path to video file>/<video file name> |
| -c / --camera_params | (Covid19 Detection demo only.) Sets the eye height, eye angle and eye slope of the camera. Options: None or "<num> <num> <num>". Default: "200 60 60". | # pyeiq --run covid19_detection --camera_params "200 60 60" |
| -w / --work_mode | (Covid19 Detection demo only.) Chooses the work mode. Options: 0 (detect face masks only), 1 (detect both face masks and social distance). Default: 0. | # pyeiq --run covid19_detection --work_mode 1 |
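The --res and --video_src defaults in the table can be read as two small rules. The sketch below is one interpretation and not PyeIQ's code. `default_resolution` and `classify_video_src` are hypothetical helpers. "The best one available" is assumed to mean the supported size with the most pixels.

```python
"""Sketch: one reading of the --res and --video_src defaults (not PyeIQ's code)."""
import re
from typing import Iterable, Optional, Tuple

# Option names and sizes from the --res row of the table.
RESOLUTIONS = {"full_hd": (1920, 1080), "hd": (1280, 720), "vga": (640, 480)}


def default_resolution(supported: Iterable[Tuple[int, int]]) -> Tuple[int, int]:
    """Use hd if the capture device supports it, else the largest supported size."""
    sizes = set(supported)
    if not sizes:
        raise ValueError("capture device reports no resolutions")
    if RESOLUTIONS["hd"] in sizes:
        return RESOLUTIONS["hd"]
    return max(sizes, key=lambda wh: wh[0] * wh[1])  # assumption: most pixels = best


def classify_video_src(value: str) -> Tuple[str, Optional[str]]:
    """Map a --video_src value to ('auto' | 'camera' | 'file', path)."""
    if value == "True":
        return "auto", None  # find a video capture device automatically
    if re.fullmatch(r"/dev/video\d+", value):
        return "camera", value  # explicit device node such as /dev/video1
    return "file", value  # anything else is treated as a video file path
```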

Multiple parameters can be used at once. For example,

# pyeiq --run <demo name> --download github --video_src True --res vga --video_fwk v4l2
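When you launch demos from a script, you can assemble and check the same options before calling pyeiq. `build_pyeiq_command` is a hypothetical helper. Its allowed values are copied from the parameter table. Options it does not know about, such as --image or --video_src, are passed through unchecked.

```python
"""Sketch: build a validated `pyeiq --run` argument list (hypothetical helper)."""
from typing import List

# Allowed values copied from the parameter table in this guide.
CHOICES = {
    "download": {"drive", "github", "wget"},
    "res": {"full_hd", "hd", "vga"},
    "video_fwk": {"opencv", "v4l2", "gstreamer"},
    "work_mode": {"0", "1"},
}


def build_pyeiq_command(demo: str, **options: object) -> List[str]:
    """Return argv for `pyeiq --run <demo>` followed by --<option> <value> pairs."""
    argv = ["pyeiq", "--run", demo]
    for name, value in options.items():  # keyword-argument order is preserved
        text = str(value)
        allowed = CHOICES.get(name)
        if allowed is not None and text not in allowed:
            raise ValueError(f"--{name} must be one of {sorted(allowed)}, got {text!r}")
        argv += [f"--{name}", text]
    return argv


if __name__ == "__main__":
    cmd = build_pyeiq_command("object_detection_tflite", download="github",
                              video_src="True", res="vga", video_fwk="v4l2")
    print(" ".join(cmd))
```

On the board, `subprocess.run(cmd, check=True)` would start the demo. No shell is involved, so a value containing spaces can be passed as `camera_params="200 60 60"` without extra quoting.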

4. Switch Classification Image

Overview

This application offers a graphical interface for users to run an object classification demo using either CPU or GPU/NPU to perform inference on a list of available images.

This application uses:

- TensorFlow Lite as an inference engine;

- MobileNet as default algorithm.

More details are available on the eIQ™ page.
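A simple timer is enough to compare CPU and GPU/NPU inference times in your own scripts, as this application does. The sketch below rests on assumptions. `mean_inference_ms` is a hypothetical helper. `infer` stands for any callable that runs one inference. Warm-up runs are excluded because the first accelerated inference can include one-time setup.

```python
"""Sketch: average inference time in milliseconds, excluding warm-up runs."""
import time
from typing import Callable


def mean_inference_ms(infer: Callable[[], object], runs: int = 10, warmup: int = 1) -> float:
    """Call infer() `warmup` times untimed, then return the mean of `runs` timed calls."""
    if runs < 1:
        raise ValueError("runs must be >= 1")
    for _ in range(warmup):
        infer()  # first accelerated calls may include graph setup, so skip them
    start = time.perf_counter()
    for _ in range(runs):
        infer()
    return (time.perf_counter() - start) * 1000.0 / runs


if __name__ == "__main__":
    print(f"{mean_inference_ms(lambda: sum(range(10_000))):.3f} ms")
```

For a loop that only runs inference, frames per second is roughly 1000 divided by the returned value.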

Running Switch Classification Image

- Run the Switch Classification Image demo using the following line:

# pyeiq --run switch_image

- Choose an image, then click the CPU or GPU/NPU button:

CPU:

GPU/NPU:

5. Switch Detection Video

Overview

This application offers a graphical interface for users to run an object detection demo using either CPU or GPU/NPU to perform inference on a video file.

This application uses:

- TensorFlow Lite as an inference engine;

- Single Shot Detection as default algorithm.

More details are available on the eIQ™ page.

Running Switch Detection Video

- Run the Switch Detection Video demo using the following line:

# pyeiq --run switch_video

- Type CPU or GPU/NPU in the terminal to switch between cores.
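One way to implement this kind of live switching is to have a background thread read the terminal while the video loop checks the selected backend on every frame. `CoreSwitch` and `read_commands` are hypothetical. They only illustrate the pattern and are not taken from video_switch_core.

```python
"""Sketch: share the backend typed in the terminal with a per-frame video loop."""
import sys
import threading
from typing import Optional

# Accepted spellings (case-insensitive) mapped to the two cores.
VALID = {"CPU": "CPU", "GPU": "GPU/NPU", "NPU": "GPU/NPU", "GPU/NPU": "GPU/NPU"}


class CoreSwitch:
    def __init__(self, initial: str = "CPU") -> None:
        self._lock = threading.Lock()
        self._core = initial

    def handle(self, line: str) -> Optional[str]:
        """Apply one typed line; return the new core, or None if unrecognized."""
        core = VALID.get(line.strip().upper())
        if core is not None:
            with self._lock:
                self._core = core
        return core

    @property
    def core(self) -> str:
        with self._lock:  # the video loop reads this once per frame
            return self._core


def read_commands(switch: CoreSwitch) -> None:
    """Blocking stdin reader; run it in a daemon thread."""
    for line in sys.stdin:
        if switch.handle(line) is None:
            print("Type CPU or GPU/NPU to switch cores")
```

Start the reader with `threading.Thread(target=read_commands, args=(switch,), daemon=True).start()` and read `switch.core` inside the frame loop.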

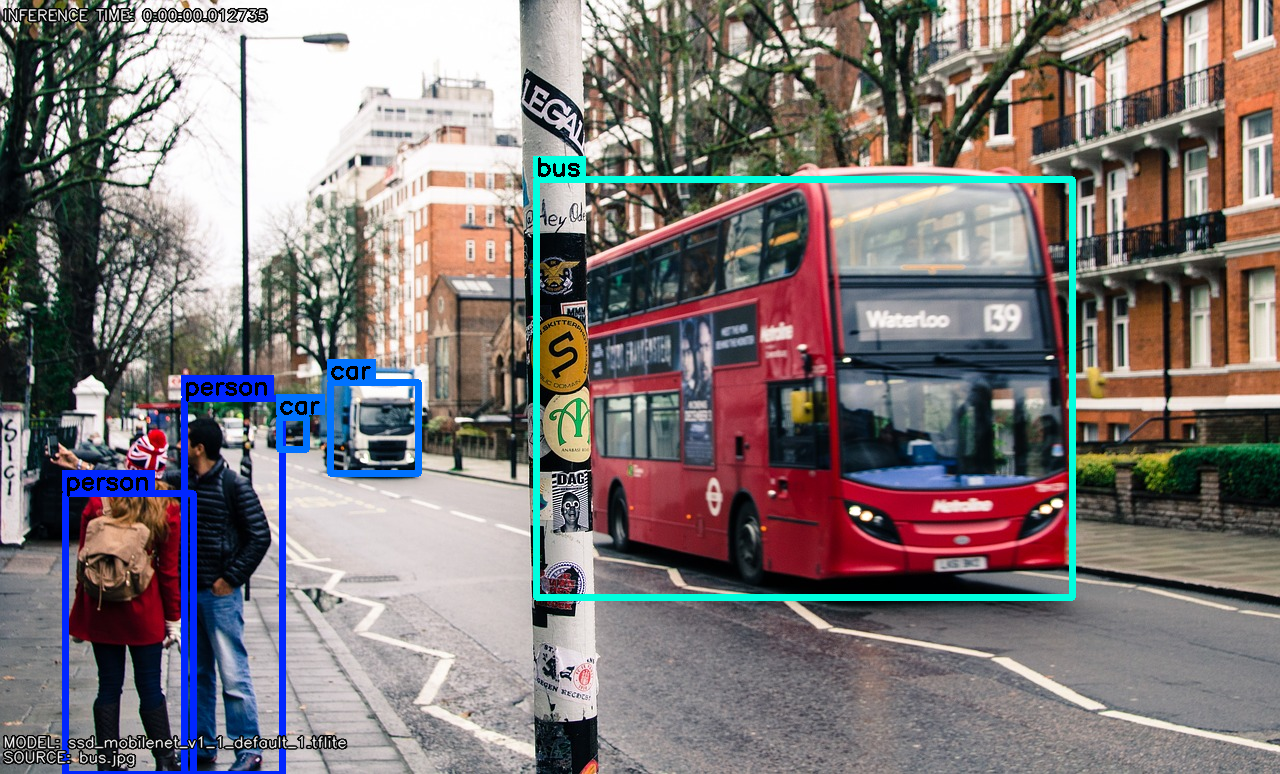

6. Object Detection

Overview

Object detection is a computer technology related to computer vision and image processing that deals with detecting instances of semantic objects of a certain class (such as humans, buildings, or cars) in digital images and videos. Well-researched domains of object detection include face detection and pedestrian detection. Object detection has applications in many areas of computer vision, including image retrieval and video surveillance.

This demo uses:

- TensorFlow Lite as an inference engine;

- Single Shot Detection as default algorithm.

More details are available on the eIQ™ page.
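An SSD model returns candidate boxes, class ids and scores, which have to be filtered and scaled to the frame before drawing. The sketch below assumes the common TensorFlow Lite SSD output layout: normalized [ymin, xmin, ymax, xmax] boxes, class ids, scores and a detection count. It also assumes a 0.5 score threshold. Check your model's output details and whether its labels file is offset. `postprocess` is a hypothetical helper, not PyeIQ's code.

```python
"""Sketch: turn raw SSD outputs into labeled pixel boxes."""
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Detection:
    label: str
    score: float
    box: Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def postprocess(boxes: Sequence[Sequence[float]], classes: Sequence[float],
                scores: Sequence[float], count: int, labels: Sequence[str],
                width: int, height: int, threshold: float = 0.5) -> List[Detection]:
    results = []
    for i in range(int(count)):
        if scores[i] < threshold:
            continue  # drop low-confidence candidates
        ymin, xmin, ymax, xmax = boxes[i]  # normalized to [0, 1]
        box = (round(xmin * width), round(ymin * height),
               round(xmax * width), round(ymax * height))
        results.append(Detection(labels[int(classes[i])], float(scores[i]), box))
    return results
```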

Running Object Detection

Using Images for Inference

Default Image

Run the Object Detection demo using the following line:

# pyeiq --run object_detection_tflite

Custom Image

Pass any image as an argument:

# pyeiq --run object_detection_tflite --image=/path_to_the_image

Using Video Source for Inference

Video File

Run the Object Detection demo on a video file using the following line:

# pyeiq --run object_detection_tflite --video_src=/path_to_the_video

Video Camera or Webcam

Specify the camera device:

# pyeiq --run object_detection_tflite --video_src=/dev/video<index>

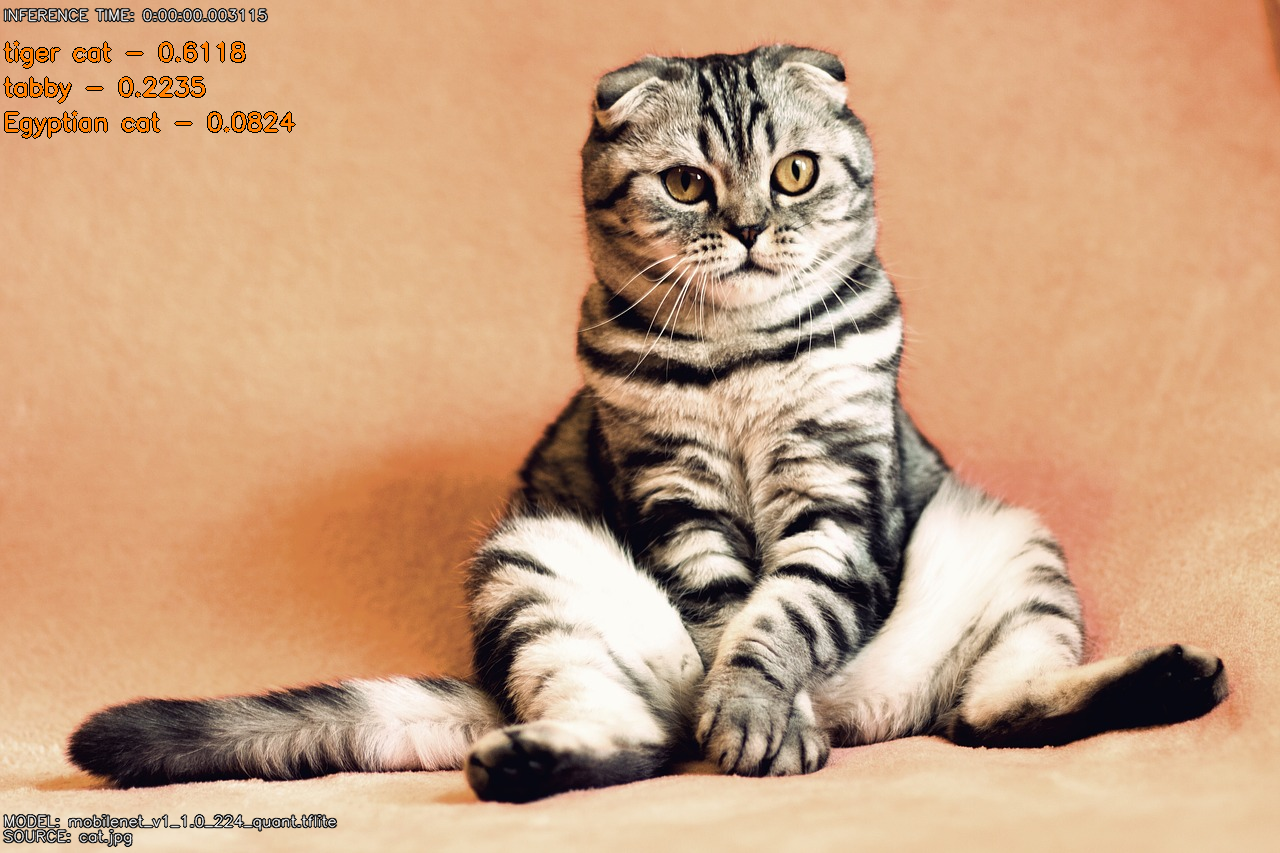

7. Object Classification

Overview

Object Classification is the problem of identifying to which of a set of categories a new observation belongs, on the basis of a training set of data containing observations whose category membership is known. Examples are assigning a given email to the "spam" or "non-spam" class, and assigning a diagnosis to a given patient based on observed characteristics of the patient.

This demo uses:

- TensorFlow Lite as an inference engine;

- MobileNet as default algorithm.

More details are available on the eIQ™ page.
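Since PyeIQ 3.0.0 ships only quantized models, a classifier's raw output is a list of integers. These map back to scores through the standard TensorFlow Lite affine rule real = scale * (q - zero_point). The sketch below assumes uint8 output. With tflite_runtime, scale and zero_point are available from `interpreter.get_output_details()[0]["quantization"]`. `dequantize` and `top_k` are hypothetical helpers.

```python
"""Sketch: read a quantized classifier's output as (label, score) pairs."""
from typing import List, Sequence, Tuple


def dequantize(q: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Apply real = scale * (q - zero_point) to every output element."""
    return [scale * (v - zero_point) for v in q]


def top_k(scores: Sequence[float], labels: Sequence[str], k: int = 3) -> List[Tuple[str, float]]:
    """Return the k best (label, score) pairs, highest score first."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [(labels[i], scores[i]) for i in order[:k]]
```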

Running Object Classification

Using Images for Inference

Default Image

Run the Object Classification demo using the following line:

# pyeiq --run object_classification_tflite

Custom Image

Pass any image as an argument:

# pyeiq --run object_classification_tflite --image=/path_to_the_image

Using Video Source for Inference

Video File

Run the Object Classification demo on a video file using the following line:

# pyeiq --run object_classification_tflite --video_src=/path_to_the_video

Video Camera or Webcam

Specify the camera device:

# pyeiq --run object_classification_tflite --video_src=/dev/video<index>

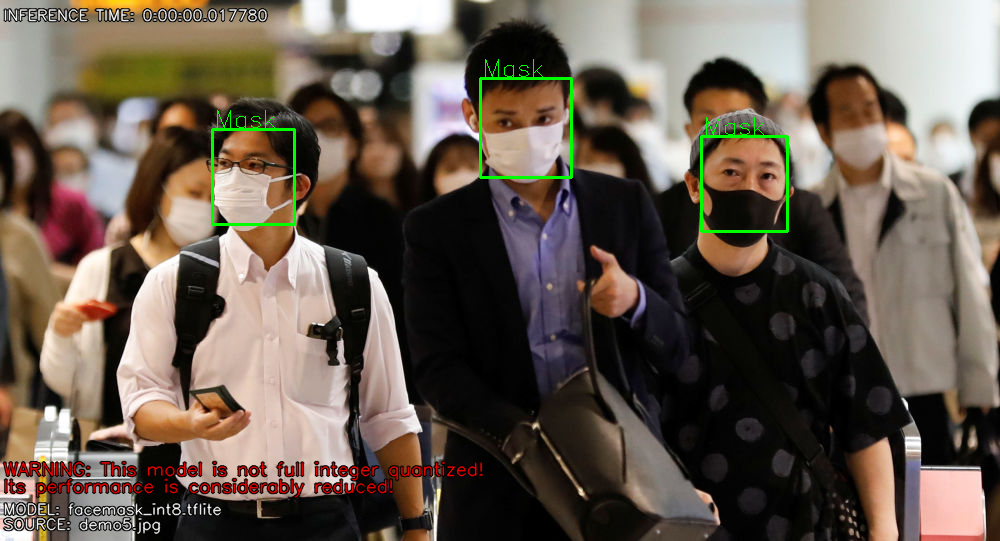

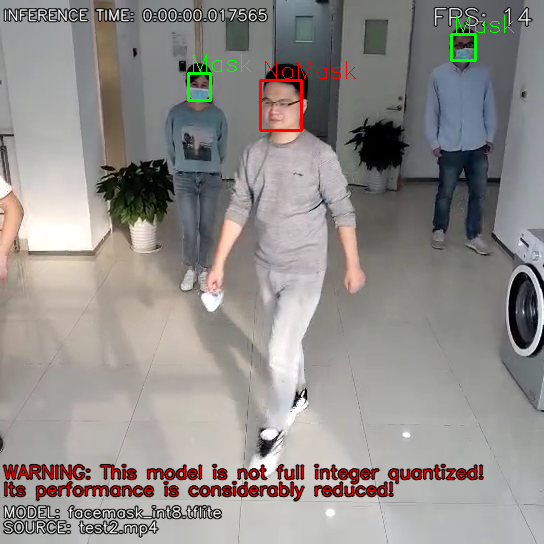

8. Covid19 Detection

Overview

Covid19 Detection is a demo that detects whether people are wearing masks. It can also detect the social distance between people.

This demo uses:

- TensorFlow Lite as an inference engine;

- Single Shot Detection as default algorithm.

More details are available on the eIQ™ page.

Running Covid19 Detection

Using Images for Inference

Default Image

Run the Covid19 Detection demo using the following line:

# pyeiq --run covid19_detection

Custom Image

Pass any image as an argument:

# pyeiq --run covid19_detection --image=/path_to_the_image

Using Video Source for Inference

Video File

Run the Covid19 Detection demo on a video file using the following line:

# pyeiq --run covid19_detection --video_src=/path_to_the_video

Video Camera or Webcam

Specify the camera device:

# pyeiq --run covid19_detection --video_src=/dev/video<index>
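The two options specific to this demo can be handled as follows. `parse_camera_params` follows the --camera_params format from the parameter table. `too_close` is a hypothetical, simplified social-distance check based on pixel distances between person box centers. This guide does not describe how the demo itself uses the camera parameters, so that geometry is not reproduced here.

```python
"""Sketch: parse --camera_params and flag people standing too close (simplified)."""
import math
from itertools import combinations
from typing import List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom) in pixels


def parse_camera_params(value: Optional[str] = "200 60 60") -> Optional[Tuple[float, float, float]]:
    """Return (eye height, eye angle, eye slope), or None for None / "None"."""
    if value is None or value == "None":
        return None
    parts = value.split()
    if len(parts) != 3:
        raise ValueError('expected "<num> <num> <num>"')
    height, angle, slope = (float(p) for p in parts)
    return height, angle, slope


def too_close(people: Sequence[Box], min_distance_px: float) -> List[Tuple[int, int]]:
    """Return index pairs whose box centers are closer than min_distance_px."""
    centers = [((left + right) / 2, (top + bottom) / 2)
               for left, top, right, bottom in people]
    return [(i, j) for i, j in combinations(range(len(centers)), 2)
            if math.dist(centers[i], centers[j]) < min_distance_px]
```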

9. BSP 5.10.x support

The PyeIQ v3.0.x release is based on i.MX Linux BSP 5.4.70. However, in most cases it also works on BSP 5.10.x, including BSP 5.10.9, 5.10.35, 5.10.52 and 5.10.72.

In PyeIQ v3.0.0 and v3.0.1, one known issue with BSP 5.10.x support affects the Switch Detection Video application (switch_video). BSP 5.4.70 and BSP 5.10.x ship different versions of OpenCV, so Switch Detection Video may fail at run time.

The error message looks like this:

/home/root/.cache/eiq/eIQVideoSwitchCore/bin/video_switch_core: error while loading shared libraries: libopencv_objdetect.so.4.4: cannot open shared object file: No such file or directory
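To see which shared libraries a core binary cannot find on your BSP, run ldd, which prints a "=> not found" line for each one. The sketch below parses that output. `missing_libs` and `check_binary` are hypothetical helpers.

```python
"""Sketch: list the shared libraries a binary cannot resolve, using ldd."""
import subprocess
from typing import List


def missing_libs(ldd_output: str) -> List[str]:
    """Return library names from ldd lines of the form 'libname => not found'."""
    missing = []
    for line in ldd_output.splitlines():
        name, sep, target = line.strip().partition(" => ")
        if sep and target.strip() == "not found":
            missing.append(name)
    return missing


def check_binary(path: str) -> List[str]:
    """Run ldd on `path` (on the board) and return the unresolved libraries."""
    out = subprocess.run(["ldd", path], capture_output=True, text=True, check=False).stdout
    return missing_libs(out)
```

On the board, `check_binary("/home/root/.cache/eiq/eIQVideoSwitchCore/bin/video_switch_core")` would list libopencv_objdetect.so.4.4 in the failure shown above.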

A temporary workaround is provided:

Three pre-built video_switch_core binaries can be downloaded here: one for BSP 5.10.9 and 5.10.35 (which use TensorFlow Lite 2.4.1), one for BSP 5.10.52 (TensorFlow Lite 2.5.0) and one for BSP 5.10.72 (TensorFlow Lite 2.6.0). Use the core that matches your BSP to replace the core in the model & media package downloaded by PyeIQ. The old core is located in:

/home/root/.cache/eiq/eIQVideoSwitchCore/bin

After the replacement, run the Switch Detection Video application (switch_video) again.
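The replacement steps can be scripted. `replace_core` is a hypothetical helper. It keeps a backup of the old core as video_switch_core.bak and marks the new file executable. The guide does not mention the executable bit, but a freshly downloaded binary usually needs it.

```python
"""Sketch: swap in a downloaded video_switch_core, keeping a backup (hypothetical helper)."""
import shutil
import stat
import tempfile
from pathlib import Path

# Directory from this guide where PyeIQ keeps the downloaded core.
CORE_DIR = Path("/home/root/.cache/eiq/eIQVideoSwitchCore/bin")


def replace_core(new_core: str, core_dir: Path = CORE_DIR) -> Path:
    """Copy new_core to core_dir/video_switch_core, saving the old file as .bak."""
    target = Path(core_dir) / "video_switch_core"
    if target.exists():
        shutil.copy2(target, target.with_suffix(".bak"))  # -> video_switch_core.bak
    shutil.copy2(new_core, target)
    # Make the copied binary executable for user, group and others.
    target.chmod(target.stat().st_mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return target


if __name__ == "__main__":
    # Dry run in a scratch directory instead of the real cache.
    with tempfile.TemporaryDirectory() as tmp:
        scratch = Path(tmp)
        (scratch / "video_switch_core").write_text("old core")
        (scratch / "downloaded_core").write_text("new core")
        print(replace_core(str(scratch / "downloaded_core"), scratch))
```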

This issue is fixed in PyeIQ v3.0.2 and v3.1.0. PyeIQ now first builds a binary that fits the BSP environment and then runs inference. We therefore recommend using one of these two versions.

A second issue concerns a new feature in BSP 5.10.72. Starting with this version, the i.MX Linux BSP supports the VX delegate and will gradually drop NNAPI delegate support in future versions. We therefore provide two versions of PyeIQ in the 2021Q4 release. v3.0.2 is for users of BSP 5.10.52 and earlier BSPs, and continues to support NNAPI. v3.1.0 is for users of BSP 5.10.72 and later BSPs, and supports the VX delegate.
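For reference, the sketch below shows roughly how a Python application can select the VX delegate with tflite_runtime. It is based on assumptions and is not PyeIQ's code. It assumes tflite_runtime is installed on the board and that the VX delegate library is located at /usr/lib/libvx_delegate.so on BSP 5.10.72 and later.

```python
"""Sketch: TensorFlow Lite interpreter on CPU or on GPU/NPU via the VX delegate."""
from typing import List

# Assumed install path of the VX delegate on BSP 5.10.72 and later.
VX_DELEGATE = "/usr/lib/libvx_delegate.so"


def delegate_paths(use_npu: bool) -> List[str]:
    """External delegate libraries to load: the VX delegate for GPU/NPU, none for CPU."""
    return [VX_DELEGATE] if use_npu else []


def make_interpreter(model_path: str, use_npu: bool):
    # Imported here so the helper above also works on machines without tflite_runtime.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    delegates = [load_delegate(path) for path in delegate_paths(use_npu)]
    interpreter = Interpreter(model_path=model_path, experimental_delegates=delegates)
    interpreter.allocate_tensors()
    return interpreter
```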

To install a specific version of PyeIQ, specify the version in the pip install command, for example:

# pip3 install pyeiq==3.0.2

or

# pip3 install pyeiq==3.1.0

Earlier versions can be installed the same way. Choose the version that fits your BSP version.
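The version rules in this section can be summarized as a lookup. `pyeiq_requirement` is a hypothetical helper that encodes only the BSPs named in this guide. It returns None for anything else, including BSP 5.15 and later, for which NXP gopoint is recommended instead.

```python
"""Sketch: map an i.MX Linux BSP tag to the PyeIQ release this guide recommends."""
from typing import Optional, Tuple


def parse_bsp(bsp: str) -> Tuple[int, int, int]:
    """Turn a BSP tag such as '5.10.72_2.2.0' into (5, 10, 72)."""
    kernel = bsp.split("_", 1)[0]  # drop the '_2.2.0' release suffix
    major, minor, patch = (int(part) for part in kernel.split("."))
    return major, minor, patch


# BSPs this guide lists for PyeIQ 3.0.2 (NNAPI delegate).
NNAPI_BSPS = {(5, 4, 70), (5, 10, 9), (5, 10, 35), (5, 10, 52)}


def pyeiq_requirement(bsp: str) -> Optional[str]:
    """Return a pip requirement for the BSP, or None if this guide has no match."""
    version = parse_bsp(bsp)
    if version >= (5, 15, 0):
        return None  # no PyeIQ test/maintenance plan; see NXP gopoint
    if version >= (5, 10, 72):
        return "pyeiq==3.1.0"  # VX delegate
    if version in NNAPI_BSPS:
        return "pyeiq==3.0.2"  # NNAPI delegate
    return None
```

The returned string can be passed straight to pip, for example `pip3 install "pyeiq==3.1.0"`.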

*If you run into network problems when installing PyeIQ with pip, try the following command:

# pip3 install --trusted-host pypi.org --trusted-host files.pythonhosted.org pyeiq

Alternatively, download the tar.gz package from PyPI and install it offline.

For further questions, please post a comment on eIQ Community or just below this article.
