RT1020 clocking SDRAM at 166 MHz: Zero timing margin?
Hi,

I hope you are all ok.

If you look at the RT1020 consumer datasheet:

i.MX RT1020 Crossover Processors for Consumer Products, Rev. 1, 04/2019

Page 43

Section 4.5.1.2.2 SEMC input timing in SYNC mode

With SEMC_MCR.DQSMD = 0x1, the TIS (data input setup) parameter is 0.6 ns.

That is, the data has to be valid on the bus 0.6 ns before the RT1020 can clock it in.

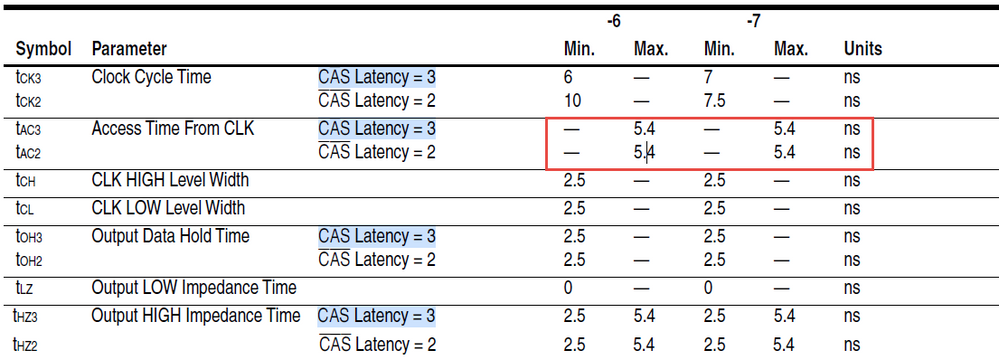

Now look at a typical data sheet for a 166 MHz SDRAM device, for example the ISSI IS42S16160J-6 (data sheet attached: 42-45S83200J-16160J.pdf).

The Access Time from Clock is 5.4 ns. (Probably a JEDEC spec.)

So the SDRAM takes up to 5.4 ns to present the data, leaving only just enough time (0.6 ns) before the RT1020 reads it in.

5.4 + 0.6 = 6 ns. 166 MHz is a 6.02 ns clock cycle. So practically zero margin.
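The arithmetic above can be written out as a small sketch. It uses only the simple model from this post (clock period minus SDRAM access time minus SEMC input setup time) and deliberately ignores board trace delay, clock skew and any loopback effects, so treat the result as a rough budget rather than a full timing analysis. The function name `setup_margin_ns` is made up for illustration.

```python
def setup_margin_ns(freq_mhz, t_ac_ns, t_is_ns):
    """Setup margin left in one clock period (simplified model).

    freq_mhz: SDRAM clock frequency in MHz
    t_ac_ns:  SDRAM access time from clock (data sheet maximum), in ns
    t_is_ns:  controller data input setup time, in ns
    Board delays and clock skew are NOT modelled here.
    """
    period_ns = 1000.0 / freq_mhz
    return period_ns - t_ac_ns - t_is_ns


# Values quoted in this post: 166 MHz, tAC = 5.4 ns, TIS = 0.6 ns
period = 1000.0 / 166.0
margin = setup_margin_ns(166.0, 5.4, 0.6)
print(f"period = {period:.3f} ns, margin = {margin:.3f} ns")
```

With the numbers quoted above, the remaining margin is only a few hundredths of a nanosecond.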

What am I missing here? No one would accept a margin of zero, and the eval board seems to work, so I know I am missing something.

Or is there an adjustment/register that could help here? I couldn't see anything obvious in the reference manual or the semc/sdram demo.

Many thanks.

Hi,

Thank you for your interest in NXP Semiconductor products and for the opportunity to serve you.

5.4 ns is the maximum specified value of the Access Time from CLK; in practice, the actual access time will be less than 5.4 ns.

In my experience, lowering the clock frequency is a good way to ensure that the timing configuration meets the specified requirements.
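As a rough illustration of the "slow down the clock" suggestion, the sketch below inverts the same simplified budget (period = access time + setup time + margin) to estimate the highest clock frequency that leaves a chosen margin. The target margins of 0.5 ns and 1.0 ns are arbitrary example values, not NXP recommendations, and `max_freq_mhz` is a hypothetical helper name. Board delays and skew are again ignored.

```python
def max_freq_mhz(t_ac_ns, t_is_ns, margin_ns):
    """Highest clock frequency (MHz) that leaves margin_ns of setup slack.

    Simplified model: period >= t_ac + t_is + margin.
    Board trace delay and clock skew are NOT modelled.
    """
    min_period_ns = t_ac_ns + t_is_ns + margin_ns
    return 1000.0 / min_period_ns


# Worst-case data sheet values from this thread: tAC = 5.4 ns, TIS = 0.6 ns
for target_margin in (0.5, 1.0):  # example margins, chosen arbitrarily
    f = max_freq_mhz(5.4, 0.6, target_margin)
    print(f"margin {target_margin:.1f} ns -> max clock about {f:.1f} MHz")
```

Under this model, each extra nanosecond of margin costs roughly 20 to 25 MHz near the top of the range.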

Have a great day,

TIC
