K26 ISR jitter much greater than expected

I've been developing firmware for a K26-series chip, and the application requires highly repeatable ADC sample intervals. I first used the ADC conversion-complete interrupt and then switched to the PIT interrupt, but both approaches have the same problem: the interrupt jitter is substantial and strongly influenced by the code in the main control loop.

My PIT ISR has been reduced to setting a GPIO pin high, clearing the PIT interrupt status flag, reading the ADC result registers, and setting the same GPIO pin low (the high/low pulse lets me measure frequency and execution time on an oscilloscope).

What I see is that the code in my "while(1)" loop in main.c can significantly alter the interrupt interval (measured rising edge to rising edge on the GPIO pin) and the execution time of the ISR (measured as the width of the GPIO pulse). Both jitter and execution time vary by as much as 50 cycles.

The main.c while loop, below, is very simple:

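```c
uint32_t y;  /* loop counter (its declaration is not shown in the original post) */

while (1) {
    /* Toggle the background marker pin: mask 2 << 1U == 0x4, i.e. PTE2 */
    GPIO_TogglePinsOutput(GPIOE, 2 << 1U);

    /* Short busy-wait; changing its length changes the observed ISR jitter */
    for (y = 0; y < 10; y++) {
        __asm("nop\n\t");
    }
}
```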

The ISR (set to interrupt at 1 MHz):

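```c
volatile uint32_t adcVals;  /* assumed declaration; volatile if main() also reads it */

void PIT_TIMER_HANDLER(void)
{
    /* Marker high: rising edge to rising edge = interrupt period on the scope */
    GPIO_SetPinsOutput(GPIOE, 1 << 1U);

    PIT_ClearStatusFlags(PIT, kPIT_Chnl_0, kPIT_TimerFlag);

    /* Pack both results: ADC_SMA in the upper 16 bits, ADC_PD in the lower 16.
     * Masking ADC_PD keeps a sign-extended (differential-mode) result from
     * overwriting the upper half. */
    adcVals = (uint32_t)((ADC_SMA->R[PDA_CHANNEL_GROUP] << 16) |
                         (ADC_PD->R[PDA_CHANNEL_GROUP] & 0xFFFFU));

    /* Marker low: pulse width = ISR body execution time */
    GPIO_ClearPinsOutput(GPIOE, 1 << 1U);
}
```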

Whether I use a counted loop or just write out several "nop\n\t" instructions, changing the execution length of this while loop can produce either rock-solid interrupt intervals on my PIT ISR or anywhere from 10 to 50 cycles of jitter. On the scope, one interrupt is clearly delayed and the next one occurs early relative to its predecessor, so the average interval is about right, but the edge-to-edge timing varies a great deal.

I've also written the ISR and main while loop in assembler in an attempt to eliminate the compiler as a potential source of error. The result is the same, except that now simply adding an ".align" assembler directive can have the same influence over jitter and execution time. Similarly, switching between optimization levels in Eclipse (Kinetis Design Studio with the GCC compiler and KSDK 2.0) affects jitter and execution time.

I've been reading about instruction cache misses causing issues like this, but there's so little going on here that it's hard to imagine much cache-line turnover. I'm really hoping that someone knows what's causing this and can offer a suggestion or two; I'm not sure how much more pounding into my desk my forehead can take!

One important point: I'm not using DMA here because I later need to put some real-time intelligence into the ISR, so I can't afford to acquire a block of data and post-process it (I wish I could!).

If there's any additional information needed please let me know and I'll provide it right away. Thanks in advance for any help!

Hi Xiangjun,

Thank you for your suggestion; I will try it right away. I originally tried using just the ADC ISR with the ADC in continuous conversion mode, but that had jitter (as measured by my GPIO pin pulse) as bad as the PIT ISR I described above. When both the ADC and PIT ISRs had essentially identical jitter, I assumed there was something fundamental I didn't understand about interrupts on this chip, particularly since rather ordinary code in the main while loop (no other interrupts, no interlock structures, no disabling of interrupts) directly influences the amount of jitter I see. I have to admit that in 25 years of firmware development I've never seen anything like this.

A second part of this problem is that the execution time of the ISR can be dramatically influenced by code location, alignment, and size (number of instructions), both inside and outside the ISR function. For example, adding or removing lines of code in the main while loop can change the ISR execution time by 20% or more. I've tried having the main control loop read, and alternatively avoid, the memory locations accessed in the ISR (to see whether caching was involved), I've checked instruction alignment, and I've looked at the disassembly for anything unusual... nothing jumps out at me. This happens whether I compile C code or hand-write assembler. Any ideas??

Hi, Scott,

As I said, you can use hardware triggering mode instead of software triggering mode; hardware triggering guarantees that the sampling interval is constant. If you want to test the jitter, I suggest you use the ADC to sample a sinusoidal signal and plot the ADC samples to check the jitter on the reconstructed sine. Checking the GPIO toggle in the PIT or ADC ISR does not make sense for this, because with hardware triggering the sampling instant no longer depends on when the ISR runs.

Regarding the ISR execution time: execution time is quantized at the instruction level, and some instructions take several cycles, so when the code is small you may see this kind of discrepancy. It is normal.

Hope this helps.

BR

Xiangjun Rong

Hi, Scott,

I suppose that you use the PIT module to generate an interrupt, start a K26 ADC conversion in the PIT ISR, and read the ADC samples in the ADC ISR (ADC interrupt mode). You want to sample the analog signal with a fixed cycle time, but you find that the ADC ISR occurs with jitter. I have to admit that a fixed sampling cycle time cannot be guaranteed in software-triggered ADC mode.

I suggest you use the ADC hardware triggering mode. Hardware triggering guarantees a fixed sampling cycle time; in other words, all samples are acquired at the same interval. If you use ADC interrupt mode to read the samples, it is fine even if the ADC interrupt itself has jitter, because the hardware trigger guarantees that the sampling interval has no jitter.

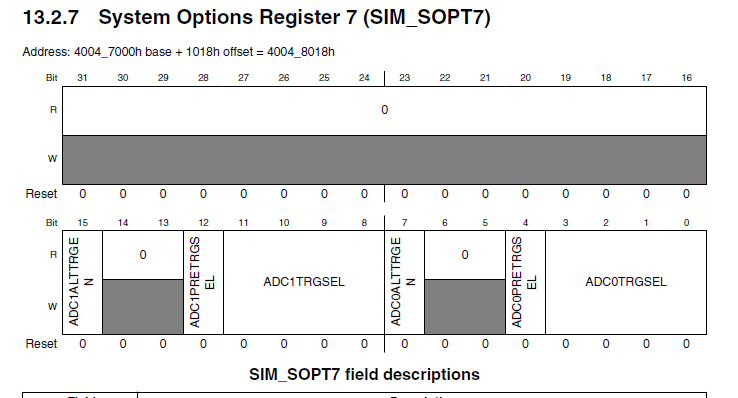

For selecting the ADC hardware trigger source, please refer to the SIM_SOPT7 register in the K26 reference manual (RM).

Hope this helps.

BR

Xiangjun Rong