Instability of K64F ADC calibration

I've attached a histogram showing 6 runs of taking 10,000 ADC readings and plotting ADC count against number of occurrences of each count. Hardware is a FRDMK64F, and the voltage being read is the K64 internal Vref divided by a resistor divider to ground. Each run is preceded by an ADC calibration using the procedure supplied with Freescale sample code. Each individual run looks good: ~13 usable bits which is what I'd expect from a proto board with no optimization of the analog chain.

The stability between runs is not good. There's a spread of more than 64 ADC counts, meaning only 10 usable bits.

My thinking is the ADC readings are stable to +/- 4 ADC counts, so calibration (the peaks of the histograms) should be stable to +/- 4 ADC counts as well.

1. What's the source of this instability?

2. How can this be minimized?

3. Is calibration supposed to be invariant? The reason for asking is that the docs mention saving the calibration values to non-volatile memory and restoring them at boot time, which seems to imply the values are not voltage or temperature dependent.
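The usable-bit figures quoted above follow from simple arithmetic: every doubling of the peak-to-peak spread costs one bit. A minimal sketch, assuming a 16-bit conversion and treating the spread in ADC counts as the only error source (`usable_bits` is an illustrative name, not an NXP function):

```python
import math


def usable_bits(resolution_bits: int, spread_counts: float) -> float:
    """Bits that remain once a peak-to-peak spread (in counts) is discarded."""
    if spread_counts < 1:
        raise ValueError("spread must be at least one count")
    return resolution_bits - math.log2(spread_counts)


# +/- 4 counts within one run is a spread of about 8 counts.
print(usable_bits(16, 8))   # 13.0
# More than 64 counts of spread between recalibrated runs.
print(usable_bits(16, 64))  # 10.0
```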

I have long used a "leaky integrator" algorithm; try it, without calibration.

Konstantin: Thanks for the references! If NXP doesn't offer any advice, what I'm planning to do is perform periodic calibrations, assuming temperature does affect calibration, then adjust the gain with an alpha filter on each register. That's sure to eliminate the little output step functions after each recalibration, but doesn't provide any assurance that the ADC is providing best accuracy.
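The alpha-filter idea can be sketched as follows. This is a host-side model only, assuming each calibration result is available as a plain integer per register; the class name, the register key `"PG"`, and the `alpha` value are illustrative assumptions, and how the smoothed values get written back to the ADC is left out.

```python
def alpha_filter(previous: float, new: float, alpha: float) -> float:
    """First-order (leaky integrator) update: move a fraction alpha toward new."""
    return previous + alpha * (new - previous)


class SmoothedCalibration:
    """Keeps a filtered copy of each calibration register across recalibrations."""

    def __init__(self, alpha: float = 0.125):
        self.alpha = alpha
        self.state = {}  # register name -> filtered value (float)

    def update(self, fresh: dict) -> dict:
        """Blend a fresh calibration result into the filtered state."""
        for name, value in fresh.items():
            if name not in self.state:
                # First calibration seeds the filter directly.
                self.state[name] = float(value)
            else:
                self.state[name] = alpha_filter(self.state[name], value, self.alpha)
        # Registers hold integers, so round the filtered values for write-back.
        return {name: round(value) for name, value in self.state.items()}


cal = SmoothedCalibration(alpha=0.25)
print(cal.update({"PG": 0x8200}))       # first result is taken as-is
print(cal.update({"PG": 0x8200 + 40}))  # a 40-count jump moves the output by 10
```

A smaller `alpha` hides recalibration steps more effectively but also tracks genuine drift more slowly, which is the trade-off noted above.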

Hi Fred,

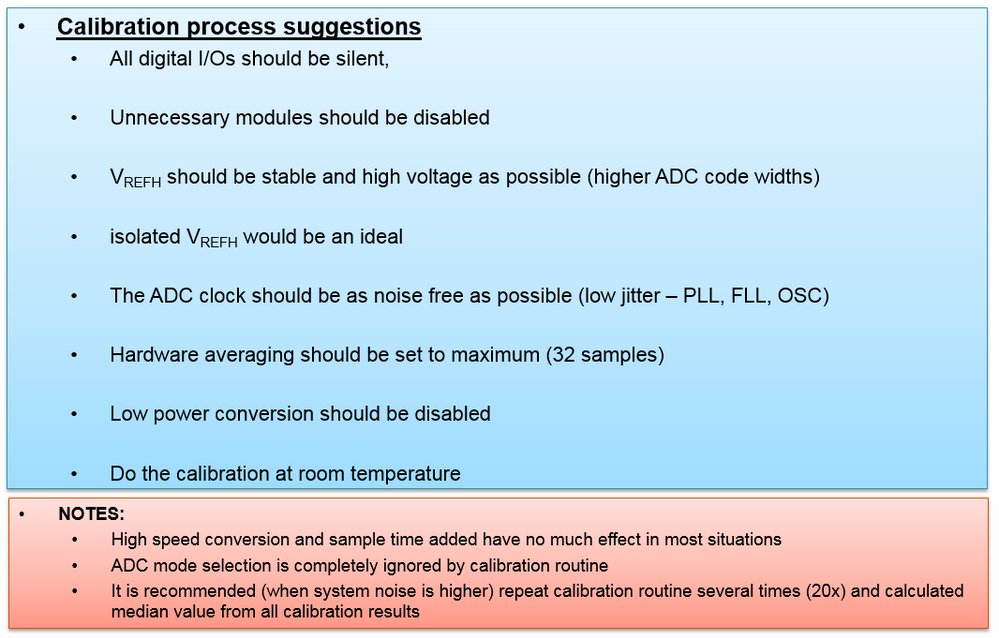

Please refer to the picture, which shows some suggestions for the ADC module calibration process.

Hope this helps.

Best regards,

Ma Hui

Hi Hui_Ma,

Thank you for the reply. My histogram test follows those bullet practices other than the Vref which is fixed by the Freedom board. The fact that each of the individual histograms shows ~8 LSB variance indicates that the conversion is as low-noise as can be expected. But given that 8 LSB variance, why should the results over several calibration cycles show close to 64 LSB variance??? Which is my question 1...

The data sheet Table 31 does not spec TUE, DNL, INL, and Efs for 16-bit mode. A 16-bit ENOB is spec'd, but I am not an ADC guru, so could you please unwind all those numbers for this one scenario? Given:

- Circuit and code that meet those bullet-point practices

- ADC calibrated per the reference manual

Then:

- Apply a 2.00000 volt, zero-ohm impedance ADC input

- Have a zero-ohm impedance 3.30000 volt Vref

Ideally the value of a conversion is 2 / 3.3 * 65535 = 39718. Given the chip specs, what is the min and max ADC count?

Thanks!

Hi,

Sorry for the late reply.

The analog power supply and reference can affect the ADC conversion result.

Reduce noise on the ADC reference (VDDA, VSSA or VREFH, VREFL) as much as possible; any noise on the ADC reference directly affects the ADC digital results.

EXAMPLE: 12-bit mode, VREF = 3.0V±10mV, VADIN=2V

ADC result 1 = 2730 at 3.0V, ADC result 2 = 2720 at 3.01V, ADC result 3 = 2739 at 2.99V

Δ = 19
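The example above can be reproduced with integer arithmetic. This is a minimal sketch, assuming an ideal converter with a full scale of 2^N − 1 and truncation toward zero; `ideal_code` and its millivolt parameters are illustrative names, not part of any NXP API.

```python
def ideal_code(vin_mv: int, vref_mv: int, bits: int) -> int:
    """Ideal ADC result for an input and reference given in millivolts."""
    full_scale = (1 << bits) - 1
    # Integer math avoids floating-point rounding near exact code boundaries.
    return vin_mv * full_scale // vref_mv


nominal = ideal_code(2000, 3000, 12)   # VREF = 3.00 V
high_ref = ideal_code(2000, 3010, 12)  # VREF = 3.01 V
low_ref = ideal_code(2000, 2990, 12)   # VREF = 2.99 V
print(nominal, high_ref, low_ref, low_ref - high_ref)  # 2730 2720 2739 19
```

The same function gives 39718 for a 2.000 V input against a 3.300 V reference in 16-bit mode.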

The following hardware design practices reduce this noise:

- Use a linear regulator for the analog power supply.

- Place low-value capacitors (100 nF, 10 nF; high dynamic capability, low ESR) as close as possible to the power supply pins (VDD, VSS and VDDA, VSSA) to avoid noise picked up by PCB tracks.

- Place higher-value capacitors (10 µF, 47 µF) close to the power supply pins to reduce low-frequency noise (power mains influence).

- Isolate the digital and analog power supply lines with ferrite beads to reduce higher-frequency noise (low impedance at low frequency, high impedance at higher frequency).

- If possible, use separate digital and analog power supplies (depends on package, device, and VREF pin availability).

NOTE: separate supplies for VDD and VDDA are not recommended (see the specification in the datasheet).

- Separate the digital and analog parts on the PCB.

This reduces capacitive coupling between analog and digital tracks.

It also reduces EMI because of the distance.

Noise on the signal source could come from:

- I/O pin crosstalk: capacitive coupling between I/Os

I/O signal tracks that pass close to each other on the same PCB layer

I/O signal tracks that cross each other on different PCB layers

- EMI from nearby circuits (PCB tracks can act as antennas)

The following hardware design practices reduce the source noise:

- Reduce crosstalk by proper grounding (place a GND track between analog and digital signals that pass close to each other)

- Proper shielding reduces EMI

Note: Shielding does not mean grounding (avoid ground current flowing through the shield)

- Identify possible EMI sources and receptors and try to separate them (filters, grounding, shielding)

- Reduce the length of the PCB tracks to the analog input being measured (reduces parasitic LC components)

- Use shielded cables to the ADC input when the measured signal comes from a distant location (sensors etc.)

On the software side, the ADC module can be configured to improve performance:

1> Reduce ADC conversion clock frequency;

2> Enable hardware average;
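As a rough guide to what averaging can buy, the sketch below uses the standard result that averaging N samples reduces noise by a factor of sqrt(N). It assumes the noise is uncorrelated from sample to sample, which may not hold on a real board, and it says nothing about offsets that shift between calibrations. Function names are illustrative.

```python
import math


def noise_after_averaging(noise_rms_counts: float, n_samples: int) -> float:
    """RMS noise after averaging n_samples, assuming uncorrelated noise."""
    return noise_rms_counts / math.sqrt(n_samples)


def bits_gained(n_samples: int) -> float:
    """Extra bits of resolution from averaging: half a bit per doubling."""
    return 0.5 * math.log2(n_samples)


print(noise_after_averaging(8.0, 16))  # 2.0
print(bits_gained(32))                 # 2.5
```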

The Kinetis SAR ADC only defines static performance up to 12-bit accuracy. Ramp testing is prohibitively difficult beyond 12-bit accuracy, and although we have a capable test setup and alternative methods we could use for 16-bit linearity, the test times are so long that a full characterization of the ADC across all operating conditions and configurations is an impossible resource sink for our limited validation resources. That's why the K64 datasheet only provides TUE, DNL, INL and similar parameters for the ADC module in 12-bit mode.

Thank you for the understanding.

I would recommend enabling hardware averaging to enhance the ADC performance.

Hope this helps.

Have a great day,

Ma Hui

Maybe I'm not clearly describing my test data. Each of those curves in the .pdf is the result of taking 10000 readings, where each of the readings uses 32-sample averaging.

One test run returned the following result, which shows how many times a particular value was returned by the ADC: A variation of 9 ADC counts.

35627 0

35628 0

35629 3

35630 71

35631 707

35632 2990

35633 4169

35634 1791

35635 256

35636 11

35637 2

35638 0

35639 0

Then immediately the ADC was recalibrated and the test re-run, giving the following results:

35683 0

35684 0

35685 1

35686 2

35687 74

35688 1225

35689 4565

35690 3516

35691 579

35692 33

35693 4

35694 1

35695 0

35696 0

This time a variation of 10 ADC counts.

Notice where the results are centered:

- 35633

- 35689

The center values are 56 ADC counts apart. So... Why does recalibration make results jump by 56 counts when one set of readings jumps only 9 or 10 counts?
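The comparison between the two runs can be checked with a short script. This is a sketch using the nonzero bins from the tables above; `summarize` is an illustrative helper, and the "variation" is counted as the number of distinct codes that occurred at least once.

```python
run_a = {35629: 3, 35630: 71, 35631: 707, 35632: 2990, 35633: 4169,
         35634: 1791, 35635: 256, 35636: 11, 35637: 2}
run_b = {35685: 1, 35686: 2, 35687: 74, 35688: 1225, 35689: 4565,
         35690: 3516, 35691: 579, 35692: 33, 35693: 4, 35694: 1}


def summarize(hist: dict) -> dict:
    """Total readings, most frequent code, mean code, and span of codes seen."""
    total = sum(hist.values())
    mean = sum(code * n for code, n in hist.items()) / total
    mode = max(hist, key=hist.get)
    seen = [code for code, n in hist.items() if n > 0]
    return {"total": total, "mode": mode, "mean": mean,
            "span": max(seen) - min(seen) + 1}


a, b = summarize(run_a), summarize(run_b)
print(a["total"], a["mode"], a["span"])  # 10000 35633 9
print(b["total"], b["mode"], b["span"])  # 10000 35689 10
print(b["mode"] - a["mode"])             # 56
```

The weighted means differ by a similar amount to the modes, so the shift is not an artifact of picking the peak bin.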

The reason I'm belaboring this issue is that the K64 is being designed into an instrument for which we must spec the instrument accuracy. Based on one set of readings I can state a number maybe based on 13 usable bits from the ADC. If that bouncing around with recalibration can't be fixed, that number must be 5 times worse.

fcw

Hi

Thank you for the more detailed info; I now understand your issue better.

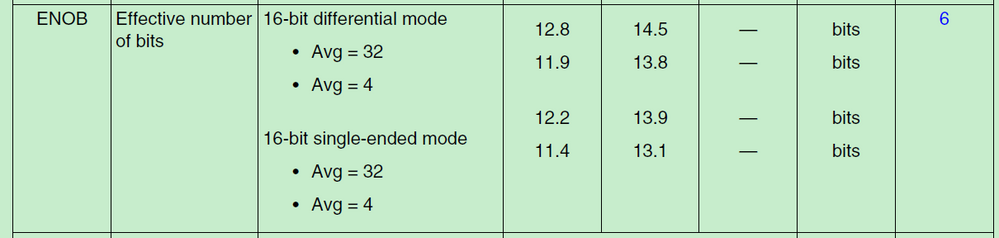

The K64 datasheet shows the ENOB (effective number of bits) for 16-bit single-ended mode, as shown in the picture.

So the confusing point is why the K64 provides a 16-bit (resolution) ADC while the accuracy is only about 13 bits.

Please check the document below for detailed info.

http://m.eet.com/media/1060300/FreescaleADC_018.pdf

Hope this helps.

Have a great day,

Ma Hui

Hi Hui_Ma,

We use the K64 in our design, with the internal ADC in single-ended 16-bit mode.

In the datasheet "K64P144M120SF5" (Rev. 7, 11/2016)

Topic: 3.6.1.2 16-bit ADC electrical characteristics

There are still only values for the 12-bit modes specified.

How can I get valid values for 16-bit?

--> Can you provide these values (TUE / DNL / INL / EFS) for 16-bit?

--> Do I have to multiply the 12-bit TUE / DNL / INL / EFS values by 16 to convert them from 12 to 16 bit?

I have to calculate the maximum error in our circuit. The external errors are known, but the internal ADC error is not.

Any hint would be appreciated!

Best regards

Manfred

Bob: Thank you!!! I was almost beginning to think I wasn't explaining the issue.

Hui_Ma: What Bob wrote........

You are welcome. Communications is frequently frustrating.

I have exactly the same calibration problem with the KL27.

I suspect the problem lies in poor clock stability, based on other clock issues I've run into.

A2D clock variability will show up as jitter/changing voltages in the time domain and as phase jitter in the frequency domain.

Bob:

I've taken the problem to our FAE who has passed it on to an NXP engineer who understands the issue. I'll post his conclusions when he has some.

fcw

The attached is from an NXP engineer who ran my histogram test on a stand-alone FRDMK64F board, reading the internal voltage reference, with a 100 nF cap added to the Vrefout pin. This shows it is possible for the ADC to return consistent readings with repeated recalibration. Apparently a bit of noise not having a large effect on ADC readings can have a significant effect on internal calibration. No explanation of why, just re-iteration of the need to minimize noise throughout the analog chain. Other points coming from the discussion:

- The min/max specs of the ADC are intended to cover the operating temp range, including effects of calibration.

- Factory recommendation is calibrate once, at room temp, save cal values, restore at reset time.

- Calibration varies "slightly" with temp, no temp. coefficient defined.
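The "calibrate once, save, restore at reset" recommendation can be modelled as below. This is a host-side sketch of the logic only: the register names are assumed to match the ADC chapter of the reference manual and should be checked there, `read_reg` and `write_reg` are hypothetical callables standing in for real register access, and the storage medium for the packed bytes is not shown.

```python
import struct

# Assumed names of the 16-bit calibration-related registers (verify in the manual).
CAL_REGISTERS = ("OFS", "PG", "MG",
                 "CLPD", "CLPS", "CLP4", "CLP3", "CLP2", "CLP1", "CLP0",
                 "CLMD", "CLMS", "CLM4", "CLM3", "CLM2", "CLM1", "CLM0")
_FORMAT = "<%dH" % len(CAL_REGISTERS)  # little-endian unsigned 16-bit values


def pack_calibration(read_reg) -> bytes:
    """Read every calibration register and pack the raw values for storage."""
    return struct.pack(_FORMAT, *(read_reg(name) & 0xFFFF for name in CAL_REGISTERS))


def restore_calibration(blob: bytes, write_reg) -> None:
    """Write previously saved values back, e.g. at reset instead of recalibrating."""
    for name, value in zip(CAL_REGISTERS, struct.unpack(_FORMAT, blob)):
        write_reg(name, value)


# Usage with a fake register bank in place of hardware.
after_cal = {name: 0x0100 + i for i, name in enumerate(CAL_REGISTERS)}
saved = pack_calibration(after_cal.__getitem__)

after_reset = {name: 0 for name in CAL_REGISTERS}
restore_calibration(saved, after_reset.__setitem__)
print(after_reset == after_cal)  # True
```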

ENOB is not the issue.

If multiple calibration runs are done back to back and nothing changes in the environment, the calibration values should be the same.

They are not the same.

Why?

What does calibration actually do?

Why do they vary so much?

Again, thank you for the reply. All good hints and tips on reducing ADC noise, but please take another look at the histograms. They show a reasonably quiet circuit design: 10000 readings, all returning a stable result +/- four counts - 13 reliable bits.

Given that the circuit IS reasonably quiet, meaning most of the noise sources are not an issue, AND 32-sample averaging in use:

WHY do those nice, tight, histograms bounce around so much - about 6 bits worth - after each recalibration?

fcw

While I don't have an answer for you, I have seen the same calibration issues in the KL27.

Simply running the same calibration routine twice in a row produces radically different values. :-(