Multithreaded FatFS

Hi

I'm having more problems using the SD card on the FRDM-K64, using KSDK 1.3.0.

I'm trying to record in a single thread while other applications are running in separate threads. I have narrowed the problem down to the following. The SD card write does not fail if the OSA_TimeDelay call is commented out; otherwise the program stops executing before 1000 iterations.

Source Code:

/*
 * SD card writes will randomly fail with action from other thread.
 */
#if 1

// SDK Included Files
#include "fsl_os_abstraction.h"
#include "fsl_debug_console.h"

// Project specific files
#include "fsl_mpu_driver.h"
#include "board.h"
#include "ff.h" //remove after test
#include "diskio.h" //remove after test

FATFS fatfs;

static void primary_thread(void *params)
{
    FIL file;
    uint8_t status;
    uint32_t frame_num = 0;
    uint8_t frame_buffer[5000];
    uint8_t frame_len = 5;
    uint32_t size;
    char filename[40];
    uint8_t i;

    status = f_mount(SD, &fatfs);
    if (status)
    {
        PRINTF("f_mount error %i\r\n", status);
        while(1);
    }

    // disable Memory Protection Unit
    for(i = 0; i < MPU_INSTANCE_COUNT; i++)
    {
        MPU_HAL_Disable(g_mpuBase[i]);
    }

    status = disk_initialize(SD);
    if (status != 0)
    {
        PRINTF("Failed to initialise SD card\r\n");
        while(1);
    }

    while (1)
    {
        sprintf(filename, "%i:fl%i.txt", SD, frame_num++);
        PRINTF("FILENAME =%s\r\n ", filename);

        status = f_open(&file, filename, FA_WRITE | FA_CREATE_ALWAYS);
        if (status)
        {
            PRINTF("f_open error %i\r\n", status);
            while(1);
        }

        status = f_write(&file, frame_buffer, frame_len, &size);
        if (status || size != frame_len)
        {
            PRINTF("f_write error %i\r\n", status);
            while(1);
        }

        status += f_close(&file);
        if (status)
        {
            PRINTF("f_close error %i\r\n", status);
            while(1);
        }

        GPIO1_TOGGLE;
    }
}

static void secondary_thread(void *params)
{
    while (1)
    {
        GPIO2_TOGGLE;
        OSA_TaskYield();
        OSA_TimeDelay(25); //commenting out this line will stop the issue
    }
}

int main(void)
{
    hardware_init();
    OSA_Init();

    uint32_t status;

    status = OSA_TaskCreate(primary_thread, (uint8_t*)"primary_thread", 10000L, NULL, 4L, NULL, false, NULL);
    if (kStatus_OSA_Success != status)
    {
        PRINTF("Error Creating thread \"output_thread\" \r\n");
        while(1)
            ;
    }

    status = OSA_TaskCreate(secondary_thread, (uint8_t*)"secondary_thread", 10000L, NULL, 4L, NULL, false, NULL);
    if (kStatus_OSA_Success != status)
    {
        PRINTF("Error Creating thread \"output_thread\" \r\n");
        while(1)
            ;
    }

    OSA_Start();

    return 1;
}

#endif

Stack:

Any suggestions as to what is going wrong here?

Cheers,

Sam


Output :

Will get stuck after this point.


Hi

I've found a solution to the problem. Increasing the SDHC interrupt priority value by adding

NVIC_SetPriority(SDHC_IRQn, 6U);

stops the program from crashing. If anyone can explain why this is the case, that would be appreciated.
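One possible explanation, offered as a sketch rather than a confirmed diagnosis: on Cortex-M the NVIC treats a numerically lower priority value as more urgent, and interrupts start at 0 after reset, so setting 6 actually makes the SDHC interrupt less urgent. If the OSA layer is running on FreeRTOS (an assumption, the thread does not say which RTOS is in use), that kernel only allows its ISR-safe API to be called from interrupts whose priority value is at or above its configured syscall threshold. The helper below just encodes that comparison; the function name, the threshold of 5 used in the usage note, and the 16 priority levels are all hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Number of priority levels; 16 assumes 4 implemented priority bits
 * (an assumption about the part, not taken from the thread). */
#define PRIO_LEVELS 16u

/*
 * Returns true when an ISR running at irq_prio may call RTOS services,
 * given max_syscall_prio, the most urgent (numerically lowest) priority
 * the kernel is still able to mask. Both arguments are unshifted values
 * of the kind passed to NVIC_SetPriority().
 */
bool isr_may_call_rtos(uint32_t irq_prio, uint32_t max_syscall_prio)
{
    assert(irq_prio < PRIO_LEVELS && max_syscall_prio < PRIO_LEVELS);
    /* Lower number = more urgent, so "safe" means numerically >= threshold. */
    return irq_prio >= max_syscall_prio;
}
```

With a hypothetical threshold of 5, isr_may_call_rtos(0, 5) is false (the reset default would be unsafe) while isr_may_call_rtos(6, 5) is true, which would be consistent with the crash disappearing.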


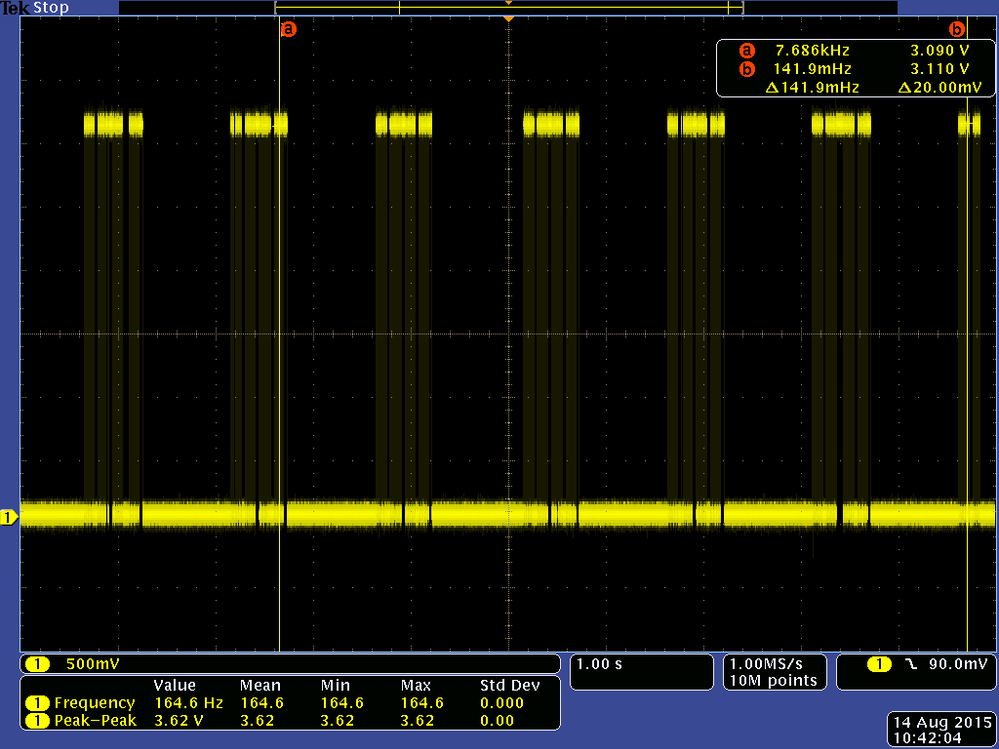

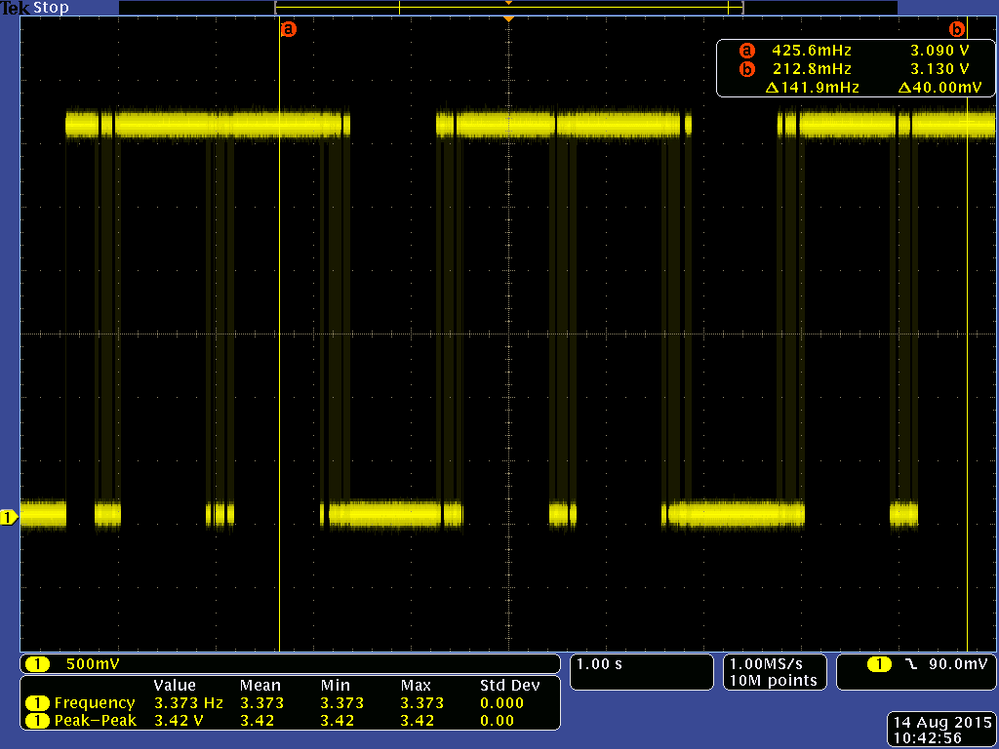

Further investigation: the interrupt priority fix just masks the issue. I found a problem when writing to two different files: every few seconds a write takes an entire second. The scope grab shows quick writes followed by long delays of a second.

Any ideas?

Test code:

/*
 * SD Card writes will periodically take 1 second when writing to two files
 */

// Standard C Included Files
#include <stdlib.h>
#include <stdio.h>
#include <string.h>

// SDK Included Files
#include "fsl_os_abstraction.h"
#include "fsl_debug_console.h"
#include "fsl_clock_manager.h"
#include "fsl_sdhc_card.h"
#include "fsl_sdmmc_card.h"
#include "fsl_mpu_driver.h"
#include "board.h"
#include "ff.h"
#include "diskio.h"

FATFS fatfs;

static void primary_thread(void *params)
{
    FIL file1, file2;
    uint8_t status;
    uint8_t buffer[5120];
    uint32_t buffer_len = 5120;
    uint32_t size, i;
    char filename[40];

    configure_sdhc_pins(0);
    CLOCK_SYS_EnableSdhcClock(0);
    GPIO_DRV_Init(sdhcCdPin, NULL);
    NVIC_SetPriority(SDHC_IRQn, 8U); // Will cause erratic bugs with threads when accessing sd card if not included

    // disable Memory Protection Unit, required for SD Writes
    for(i = 0; i < MPU_INSTANCE_COUNT; i++)
    {
        MPU_HAL_Disable(g_mpuBase[i]);
    }

    for (i = 0; i < buffer_len; i++)
    {
        buffer[i] = i;
    }

    status = f_mount(SD, &fatfs);
    if (status)
    {
        PRINTF("f_mount error %i\r\n", status);
        while(1);
    }

    status = disk_initialize(SD);
    if (status != 0)
    {
        PRINTF("Failed to initialise SD card\r\n");
        while(1);
    }

    sprintf(filename, "%i:file1.txt", SD);
    PRINTF("FILENAME =%s\r\n ", filename);
    status = f_open(&file1, filename, FA_WRITE | FA_CREATE_ALWAYS);
    if (status)
    {
        PRINTF("f_open error %i\r\n", status);
        while(1);
    }

    sprintf(filename, "%i:file2.txt", SD);
    PRINTF("FILENAME =%s\r\n ", filename);
    status = f_open(&file2, filename, FA_WRITE | FA_CREATE_ALWAYS);
    if (status)
    {
        PRINTF("f_open error %i\r\n", status);
        while(1);
    }

    while (1)
    {
        status = f_write(&file1, buffer, buffer_len, &size);
        if (status || size != buffer_len)
        {
            PRINTF("f_write error %i\r\n", status);
            while(1);
        }

        GPIO1_HIGH;

        status = f_write(&file2, buffer, buffer_len, &size);
        if (status || size != buffer_len)
        {
            PRINTF("f_write error %i\r\n", status);
            while(1);
        }

        GPIO1_LOW;
    }

    status += f_close(&file1);
    if (status)
    {
        PRINTF("f_close error %i\r\n", status);
        while(1);
    }

    status += f_close(&file2);
    if (status)
    {
        PRINTF("f_close error %i\r\n", status);
        while(1);
    }
}

int main(void)
{
    uint32_t status;

    hardware_init();
    OSA_Init();

    status = OSA_TaskCreate(primary_thread, (uint8_t*)"primary_thread", 10000L, NULL, 4L, NULL , false, NULL);
    if (kStatus_OSA_Success != status)
    {
        PRINTF("Error Creating thread \"output_thread\" \r\n");
        while(1)
            ;
    }

    OSA_Start();

    return 1;
}


Hi Sam

I think that you need to clearly separate pre-emptive threading, the FAT and the SD card's own behaviour to be able to work out exactly what is happening.

SD cards do get quite slow sometimes (although I would not expect such repetitive slowness but rather something more random as to when it happens and how long it is busy for). It is also a good idea to use an industrial grade SD card since it is even possible to "break" some cards when writing continuous high speed data in small chunks (eg. the card will suddenly never allow writing again although it can still be read normally - and doesn't signal any errors or protection in its status register - also a PC will "think" that it is writing to it but the new data will not be stored. The advice from SanDisk was to use an OEM type instead).

I have a (K64 based) product in continuous operation for 6 months or so, logging two ADC data streams, each at 40kBytes per second - filling up a 16G card every 2 days or so. Randomly the card will slow for a short time (this is because it is doing its own internal clean-up operations and signalling that it is busy, thus blocking the K64 from actually writing anything until it signals that it can accept data again). This uses the uTasker OS and utFAT - a bit of buffer tweaking to hold input data while the card randomly signals busy was needed to avoid dropping input data.

Better performance can be obtained using SD card block writes (rather than the sector writes that your FAT will probably use) but it complicates the FAT, especially when multiple sources are writing, and can only be used in SPI mode on the K64 due to the problem that its SDIO controller doesn't support suspending transfers in progress (needed when multiple sources can access the card in an interleaved fashion).

When using pre-emptive operation make sure that the tasks use some form of protection so that one task performing an operation is never pre-empted by another one that wants to access the card (also when it is waiting for a card to signal that it is not busy again) - if the FAT doesn't include such protection itself it will probably quickly fail when there are multiple write/read sources.
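As a rough illustration of the protection described above, the sketch below wraps every card operation in a single lock so that only one task is ever inside the file system at a time. All names here (card_lock_t, card_call_locked, the depth-tracking stand-in lock) are hypothetical; on KSDK the lock and unlock hooks could be backed by an OSA mutex, and FatFs also has its own re-entrancy configuration option, though whether the KSDK 1.3.0 build enables it is not stated in this thread.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical lock interface; on target it could wrap an RTOS mutex. */
typedef struct {
    void (*lock)(void *ctx);
    void (*unlock)(void *ctx);
    void *ctx;
} card_lock_t;

/* Any card operation (open, write, close, ...) expressed as a callback. */
typedef int (*card_op_t)(void *arg);

/* Run one card operation with the lock held from start to finish,
 * including any time spent waiting for the card to leave its busy state. */
int card_call_locked(const card_lock_t *lk, card_op_t op, void *arg)
{
    assert(lk != NULL && op != NULL);
    lk->lock(lk->ctx);
    int rc = op(arg);
    lk->unlock(lk->ctx);
    return rc;
}

/* Host-side stand-in lock that only tracks nesting depth, for testing. */
typedef struct {
    int depth;
    int max_depth;
} depth_lock_t;

void depth_lock(void *ctx)
{
    depth_lock_t *d = ctx;
    d->depth++;
    if (d->depth > d->max_depth) {
        d->max_depth = d->depth;
    }
}

void depth_unlock(void *ctx)
{
    depth_lock_t *d = ctx;
    d->depth--;
}

/* Example operation: reports the lock depth seen while it runs. */
int op_report_depth(void *arg)
{
    depth_lock_t *d = arg;
    return d->depth;
}
```

The point of routing everything through one function is that a task waiting on a busy card still holds the lock, so a second task cannot start a conflicting transfer in the middle.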

What sort of (continuous) storage rate are you trying to achieve?

Regards

Mark

Kinetis: µTasker Kinetis support

K64: µTasker Kinetis FRDM-K64F support / µTasker Kinetis TWR-K64F120M support / µTasker Kinetis TWR-K65F180M support

For the complete "out-of-the-box" Kinetis experience and faster time to market


Hi Mark,

Thank you for the detailed response.

I'm looking for a storage rate somewhere in the 1MB/s region.


Sam

Is that continuous or burst?

Unfortunately I don't expect that you will achieve more than about 50 to 100kBytes/s or so "continuous" with FatFS (although there may be some configuration options that improve it a bit - like enabling data caching and reducing the amount of data object and FAT writes [a tradeoff between reliability and potential loss of data]).

To get that rate I think that you will also need to write much larger blocks (make much larger single writes - preferably in the hundreds of kByte range each time).
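A minimal sketch of how small records might be coalesced into larger single writes, assuming a hypothetical sink callback that would wrap f_write() on the target. The 4096-byte chunk size is only illustrative (the suggestion above is for far larger writes), and coalescer_t, coalescer_put and the counting sink are made-up names for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define CHUNK_SIZE 4096u /* illustrative only; tune to the RAM available */

/* Hypothetical sink; on target this would wrap f_write(). Returns 0 on success. */
typedef int (*sink_fn)(const uint8_t *data, size_t len, void *ctx);

typedef struct {
    uint8_t buf[CHUNK_SIZE];
    size_t used;
    sink_fn sink;
    void *ctx;
} coalescer_t;

void coalescer_init(coalescer_t *c, sink_fn sink, void *ctx)
{
    assert(c != NULL && sink != NULL);
    c->used = 0;
    c->sink = sink;
    c->ctx = ctx;
}

/* Append a record; the sink only ever sees full CHUNK_SIZE writes here. */
int coalescer_put(coalescer_t *c, const uint8_t *data, size_t len)
{
    while (len > 0) {
        size_t room = CHUNK_SIZE - c->used;
        size_t n = (len < room) ? len : room;
        memcpy(c->buf + c->used, data, n);
        c->used += n;
        data += n;
        len -= n;
        if (c->used == CHUNK_SIZE) {
            int rc = c->sink(c->buf, CHUNK_SIZE, c->ctx);
            if (rc != 0) {
                return rc; /* chunk stays buffered so the caller can retry */
            }
            c->used = 0;
        }
    }
    return 0;
}

/* Write out whatever partial chunk remains, e.g. when recording stops. */
int coalescer_flush(coalescer_t *c)
{
    if (c->used == 0) {
        return 0;
    }
    int rc = c->sink(c->buf, c->used, c->ctx);
    if (rc == 0) {
        c->used = 0;
    }
    return rc;
}

/* Host-side sink that just counts calls and bytes, for testing. */
typedef struct {
    unsigned calls;
    size_t bytes;
} count_sink_t;

int count_sink(const uint8_t *data, size_t len, void *ctx)
{
    (void)data;
    count_sink_t *s = ctx;
    s->calls++;
    s->bytes += len;
    return 0;
}
```

Feeding one hundred 100-byte records through this produces two 4096-byte sink calls plus one 1808-byte call on flush, instead of one hundred small writes.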

Regards

Mark


Continuous.

The same arrangement shown in the test program works (with much smaller and less frequent delays, if any) when just writing to a single output file. I recorded an average of 250 of these 5120-byte block writes per second, or roughly 1.3 MB/s.

How large would you expect the drop of performance caused by adding additional output files to be?

Thanks,

Sam


Sam, your 1.3 MB/s sustained is achievable. We've been able to get well over 9 MByte/s sustained streamed data onto a class 10 industrial rated card using a 150 MHz K60. It will take some optimization. The quality of the card will affect throughput.

We are using FatFs. We did have to optimize the block writes to achieve the throughput. We try to restrict our writes to 512 bytes at a time. We only have one file open when we're streaming. While our homegrown OS is multitasking, it is not preemptive. Our SDHC card driver is interrupt driven.

When the card is pushed this hard, the times the card becomes non-responsive have a fairly consistent repeat rate and duration. The rate at which they happen and their length do differ from manufacturer to manufacturer and card lot to card lot. You will need a large enough buffer to handle the non-responsive times. They can be > 500 ms. Smaller block writes cause the non-responsiveness to happen more often.

Norm Davies
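The buffering requirement described above can be estimated as data rate multiplied by stall time. The helper below is a small sketch of that arithmetic; the function name is made up for this example and the result ignores any margin for jitter.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes that pile up while the card reports busy: rate x stall time,
 * rounded up to a whole byte. */
uint32_t stall_buffer_bytes(uint32_t bytes_per_sec, uint32_t stall_ms)
{
    uint64_t bytes = ((uint64_t)bytes_per_sec * stall_ms + 999u) / 1000u;
    assert(bytes <= UINT32_MAX); /* result must fit the return type */
    return (uint32_t)bytes;
}
```

For the 250 writes of 5120 bytes per second reported earlier in the thread (1,280,000 bytes/s) and a 500 ms stall, this gives 640,000 bytes of buffering.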


Hi Norm

Your sustained write speed is very high - could you give some insight into how you overcame the following?

1. Is the FAT (low level interface) using block or sector write commands? Typically a sector write (a single 512-byte write of data) requires about 1.5ms (and is not much faster on the highest class cards), but when block writes are commanded I see that the 512 bytes can be written much faster - maybe 70us, as long as multiple sectors are sent at the same time (this gives around 10MBytes/s rather than the original 600kBytes/s). I assume that block write commands must be involved (whereby they are "linear" writes and can't jump around between sectors).

2. When writing 512 bytes (even when aligned) to FAT32 there are typically 2 actual sector writes involved (1 to write the data, 1 to update the file object with its new size), and each time a new cluster has to be allocated for the data, up to a further 3 sector writes are added (2 additional FAT writes, since FAT usually keeps two copies of the table, plus an optional INFO block write). This means that writing 512 bytes of file data at a time normally involves two sector writes, so it can't actually benefit from block writes (unless a block write of a single sector also makes the card a lot faster?), and the data rate is basically reduced by 50% due to the two physical writes for each data content write. On each new cluster there is an additional drop in performance to around 25%.

Does this mean that your FAT is additionally caching data to avoid writes to the card until much larger blocks are available?

3. It is true that SD cards sometimes block for up to, and sometimes longer than, 500ms. For 'standard' industrial grade cards it seems to repeat every 5s or so, which reduces the storage rate by maybe 10% overall. But to bridge 10MBytes/s of data for 500ms you need to store 5MBytes of data. Where do you store this (in SRAM)? With the K64 (256k internal RAM) I needed to use half of it to ensure no ADC loss at around 80kBytes/s.
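The write counting in point 2 can be sketched as a small calculation. It takes the worst-case figures given there (two physical writes per 512-byte data write, plus up to three more whenever a new cluster is allocated) and is only an estimate; the function name is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Worst-case physical sector writes needed to store data_sectors sectors
 * when the file is written one 512-byte sector per call:
 *   2 per data sector (data + directory entry update)
 *   3 per newly allocated cluster (two FAT copies + optional INFO sector)
 */
uint32_t physical_writes(uint32_t data_sectors, uint32_t sectors_per_cluster)
{
    assert(sectors_per_cluster > 0u);
    uint32_t new_clusters =
        (data_sectors + sectors_per_cluster - 1u) / sectors_per_cluster;
    return data_sectors * 2u + new_clusters * 3u;
}
```

With a 32k cluster (64 sectors), one full cluster of data costs 131 physical writes for 64 sectors of payload, so a little under half of the card traffic is useful data.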

If I project the achieved approx. 9MBytes/s sustained data write speed I come up with the following (assuming a 32k cluster size in the FAT). The actual raw data-to-disk speed is about 24MBytes/s (due to FAT overhead of around 55% and about 10% busy time of 500ms every 5s). This means that each 512 byte write made to the disk is achieved in 21us, and overall you have 5MBytes of RAM storage in the chain to ensure no input data loss (possible with external RAM).

Can you confirm that the industrial class 10 card can really do this, since the cards that I have seen tend to be > 1ms?

Finally, when you write that the SDHC driver is interrupt driven, does it mean that each long word write is controlled by an individual interrupt? 512 bytes requires 128 interrupts, whereby, assuming tail-chaining interrupts (6 cycles from leaving the first interrupt to re-entering again), the interrupt can re-enter at a rate of at most 25MHz if the core is clocked at 150MHz. With the interrupt overhead to save context, collect data and move it to the SD card, the core will be around 100% loaded to keep up with a 21us sector write, without accounting for FAT SW and any other application code overhead.
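The cycle budget behind that estimate can be worked through as follows. This is just the arithmetic from the paragraph above (150MHz core, 21us per 512-byte sector, one interrupt per 32-bit word) with made-up function names, using integer division so fractions are truncated.

```c
#include <assert.h>
#include <stdint.h>

#define WORDS_PER_SECTOR (512u / 4u) /* 128 long words per 512-byte sector */

/* CPU cycles available while one sector is being written. */
uint32_t cycles_per_sector(uint32_t cpu_hz, uint32_t sector_us)
{
    assert(sector_us <= 1000000u); /* keeps the result within 32 bits */
    return (uint32_t)((uint64_t)cpu_hz * sector_us / 1000000u);
}

/* CPU cycles available per word if every word needs its own interrupt. */
uint32_t cycles_per_word(uint32_t cpu_hz, uint32_t sector_us)
{
    return cycles_per_sector(cpu_hz, sector_us) / WORDS_PER_SECTOR;
}
```

At 150MHz and 21us this leaves 3150 cycles per sector, or about 24 cycles per word, which has to cover interrupt entry, the data move and interrupt exit.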

It sounds as though you have achieved extreme efficiency somewhere that needs to be explained more.

Regards

Mark


Hi Mark, I was lead engineer on the project. I wasn't the engineer who did the optimizing of the write system. I'll try to tell you what I know. The numbers I gave were for maximum throughput of the card write system with internally generated data. Our product requirement is writing at 3MB/s. We can do this without any failures.

We are using block mode not sector mode. We transfer 512 bytes at a time.

We don't continually update the FAT table as we write. We pre-allocate the room we need for the data in the FAT table. We ask for large empty blocks from the FAT table. We can then stream the data into the empty pre-allocated space. When the empty space is filled we go back to the FAT and request more. We trim the file's size after the acquisition stops. We know how much data is in the space by having repetitive block markers stored in the data stream. This greatly reduces the number of FAT table accesses.
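To illustrate how block markers could reveal how much of a pre-allocated region holds valid data, here is a sketch with an invented marker layout: every 512-byte block starts with a magic word and a sequence number. The actual format used in the product is not described in this thread, so BLOCK_MAGIC, block_hdr_t and both functions are purely hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define BLOCK_SIZE  512u        /* matches the 512-byte transfers above */
#define BLOCK_MAGIC 0xB10C4A7Au /* hypothetical marker value */

/* Hypothetical header placed at the start of every block. */
typedef struct {
    uint32_t magic;
    uint32_t seq;
} block_hdr_t;

/* Stamp a block with the marker and its sequence number before writing. */
void block_stamp(uint8_t *block, uint32_t seq)
{
    block_hdr_t h = { BLOCK_MAGIC, seq };
    memcpy(block, &h, sizeof h);
}

/* Scan a pre-allocated region and return the number of bytes covered by
 * an unbroken run of correctly numbered blocks, starting at block 0. */
size_t valid_data_length(const uint8_t *region, size_t region_len)
{
    assert(region != NULL);
    size_t blocks = region_len / BLOCK_SIZE;
    size_t i;
    for (i = 0; i < blocks; i++) {
        block_hdr_t h;
        memcpy(&h, region + i * BLOCK_SIZE, sizeof h);
        if (h.magic != BLOCK_MAGIC || h.seq != (uint32_t)i) {
            break; /* first block that was never written, or is stale */
        }
    }
    return i * BLOCK_SIZE;
}
```

The returned length is what the file would be trimmed to once acquisition stops or after an unexpected power loss. A real format would probably also need something like a per-recording identifier, since reused flash could still contain correctly numbered blocks from an earlier session.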

Our products are vehicle engineering development tools. We bury our modules in our customers' vehicles. We don't have control over the power. We could lose it at any time. Updating the FAT table as we write the data could leave us with an unusable file when the power goes away. It means we have to update the FAT table before we collect the data. As a side effect it helped with the speed. We allocate large blocks on the file system.

We also believe that pre-allocating the same sized block all the time helps limit the amount of time the SDHC card reports itself busy. We believe most of the time the card is busy it is trying to find un-erased flash blocks. Making the FAT allocation requests repetitive and consistent seemed to make the card availability more deterministic.

While we are interrupt driven, we're using the DMA to transfer the 512 bytes, so there is one interrupt for every 512 bytes. We only need to update the DMA table with pointers to the collected data in the interrupt.

You are correct about the data buffering for the long not-available times. We found that by pre-allocating, and playing with internal buffer sizes, we had enough internal RAM on a K60 to meet our 3MB/s requirement.


Hello Norm

Thanks for the clarification.

Pre-priming the FAT and then writing into essentially linear space allows large block writes and thus the maximum card write speed to be achieved.

As I understand it, you modified some parts of FatFs and traded off general file system operation to achieve max speed (and/or other requirements) for a dedicated single file.

In this case I agree that in the region of 10 Meg Bytes is achievable (this is easy to test by writing large amounts of data to the SD card in raw mode, which suits the block command very well).

Therefore I think the conclusion is that the maximum achievable FAT operation depends on various factors, whereby

- standard out-of-the-box FAT with multiple parallel file reads/writes can be fairly slow

- customised operation (emulating linear raw mode writing) and restricting FAT functionality can be quite fast

For requirements in the middle, some FAT customisation and some restrictions in FAT operation may achieve a compromise between the two. Some custom development may be required in the process.

Returning to the original topic - the (sometimes long) card busy periods are in fact well known and to be expected. Avoiding certain types of operation can reduce their frequency. Whether target data rates can be achieved will be somewhat application specific and some custom development may need to be foreseen in some cases. In pre-emptive multitasking environments with multiple parallel operations care needs to be taken to avoid operations conflicting with each other - it seems a good idea to be sure that this aspect and the card performance itself are strictly separated during analysis to be sure of accurate results.

Regards

Mark


I see it a little differently Mark,

We optimized large block file writing. Most file operations are going to be large block transfers. The dribbling of data into the file during data acquisition is the outlier condition. It is not an efficient use of the file system. We made our data acquisition system generate large block records for better efficiency.

All of the functionality is still there. You could even interleave multi-file accesses in there with minor performance hits as long as you keep the block accesses large. We just do things in a slightly different order. Whether I claim my file's size before I stream the data into the file or update the FAT as I'm streaming the data is irrelevant as long as the FAT is correct once the data is in place.

The important thing for performance is to keep the DMA primed with data for transfer.
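One common way to keep a DMA channel supplied is a small ring of sector-sized buffers shared between the acquisition code (producer) and the DMA-complete interrupt (consumer). The sketch below assumes a single producer and a single consumer on a single core; all names are hypothetical, the slot count is illustrative, and a real port would need to review memory ordering and the actual DMA descriptor handling, neither of which is described in this thread.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SECTOR_SIZE 512u
#define RING_SLOTS  8u /* illustrative; must be a power of two */

_Static_assert((RING_SLOTS & (RING_SLOTS - 1u)) == 0u,
               "RING_SLOTS must be a power of two");

typedef struct {
    uint8_t data[RING_SLOTS][SECTOR_SIZE];
    volatile uint32_t head; /* advanced by the producer only */
    volatile uint32_t tail; /* advanced by the consumer (ISR) only */
} sector_ring_t;

/* Producer: next free sector to fill, or NULL when the ring is full. */
uint8_t *ring_reserve(sector_ring_t *r)
{
    if (r->head - r->tail == RING_SLOTS) {
        return NULL;
    }
    return r->data[r->head % RING_SLOTS];
}

/* Producer: mark the reserved sector as filled and ready for transfer. */
void ring_commit(sector_ring_t *r)
{
    assert(r->head - r->tail < RING_SLOTS);
    r->head++;
}

/* Consumer: next filled sector to hand to the DMA, or NULL when the ring
 * is empty (the case in which the DMA would run dry). */
const uint8_t *ring_peek(const sector_ring_t *r)
{
    if (r->head == r->tail) {
        return NULL;
    }
    return r->data[r->tail % RING_SLOTS];
}

/* Consumer: the transfer of the peeked sector finished; free its slot. */
void ring_release(sector_ring_t *r)
{
    assert(r->head != r->tail);
    r->tail++;
}
```

In the interrupt, the handler would call ring_release() for the sector that just completed and ring_peek() to point the DMA at the next one, so the only work per 512 bytes is a pointer update.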