Time Slicing Rendered Ineffective


In some of our MQX-based solutions we are experiencing situations where MQX time slicing is rendered ineffective due to timing effects. As a result, some of our tasks starve for unacceptably long periods of time, rendering the system useless.

The Issue

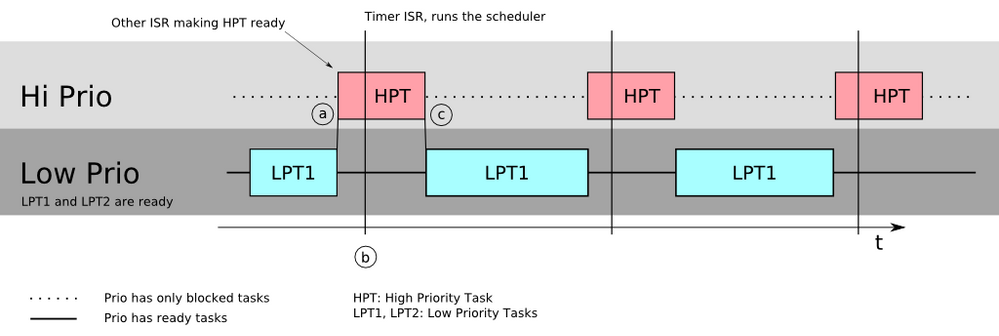

Consider the situation depicted in the figure. We have tasks running at two different priority levels, high and low. The high priority group contains one task (HPT), which waits on an event, does some work and then waits on the same event again. The low priority group contains two tasks, dubbed LPT1 and LPT2. LPT1 is a long-running task that may be ready all the time. LPT2 is another low priority task which is also ready. Since it never runs in the figure, it is not depicted graphically. We are running the MQX scheduler with time slicing enabled, and both low priority tasks have time slicing enabled.

When the situation depicted in the figure persists, there is no fairness within the low priority task group. The time slice counter of a task is only ever incremented in _time_notify_kernel(), which runs during the timer interrupt service routine. This point in time is marked (b). Shortly before the timer ISR runs, the high priority task is made ready and consequently the dispatcher switches to it. This point in time is marked (a). Shortly after the timer ISR, the high priority task blocks again. Consequently, at (c), the dispatcher runs the task at the head of the low priority ready queue, LPT1.

When _time_notify_kernel() runs at (b), it increments the time slice counter of HPT, if applicable. The time slice counter of LPT1 is left untouched. As per the figure, if HPT is always running when the timer IRQ is asserted, the time slice counter of LPT1 is never incremented, so LPT1 never exhausts its slice and never moves to the back of the ready queue. Thus, LPT2 is starved.
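The accounting described above can be reproduced with a small host-side model. This is only a sketch, not MQX code: simulate_ticks(), lp_stats_t and the microsecond parameters are made-up names, and the model assumes that each tick is charged solely to whichever task is active when the timer ISR fires and that the HPT timing is identical in every tick period.

```c
#include <assert.h>
#include <stdbool.h>

#define NUM_LP_TASKS 2u

/* Result of a simulation run (all names here are illustrative). */
typedef struct {
    unsigned ticks_charged[NUM_LP_TASKS]; /* ticks accounted to each LP task  */
    unsigned rotations;                   /* round-robin switches performed   */
    unsigned head;                        /* LP task at the queue head at end */
} lp_stats_t;

/*
 * Simulate num_ticks timer interrupts.
 *   slice_ticks : time slice length of the low priority tasks, in ticks
 *   hpt_lead_us : how long before each tick the HPT becomes ready, point (a)
 *   hpt_run_us  : how long the HPT runs before it blocks again, point (c)
 */
lp_stats_t simulate_ticks(unsigned num_ticks, unsigned slice_ticks,
                          unsigned hpt_lead_us, unsigned hpt_run_us)
{
    lp_stats_t stats = { {0u, 0u}, 0u, 0u };
    unsigned used = 0u; /* ticks charged against the head task's slice */
    /* The HPT is the active task at the tick only if it became ready
       before the tick and has not yet blocked again. */
    bool hpt_active_at_tick = (hpt_lead_us > 0u) && (hpt_lead_us < hpt_run_us);

    assert(slice_ticks > 0u);

    for (unsigned i = 0u; i < num_ticks; i++) {
        if (hpt_active_at_tick) {
            continue; /* tick is charged to the HPT; LP counters untouched */
        }
        stats.ticks_charged[stats.head]++;
        if (++used >= slice_ticks) {
            used = 0u;
            stats.head = (stats.head + 1u) % NUM_LP_TASKS;
            stats.rotations++;
        }
    }
    return stats;
}
```

In this model, simulate_ticks(1000, 5, 100, 200) has the HPT covering every tick and reports zero rotations, so the task at index 0 (LPT1) keeps the head of the queue for the whole run. With simulate_ticks(1000, 5, 500, 200) the HPT has already blocked again when the tick arrives, and the two low priority tasks alternate every 5 ticks.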

Triggering the Issue

We have a number of setups that exhibit the described problem. The scheduler interval is set to 1 ms. The scheduler is called by the timer ISR, which also runs every 1 ms. We have a bus system that requires service by a high priority task every 1 ms, nominally. The timer runs on a local oscillator, whereas the bus service interrupt is timed through the bus. Effectively, this means that the bus interrupt runs off a different clock source. We have low priority tasks which are ready for long periods of time, corresponding to LPT1, and we have other low priority tasks such as LPT2. The phase between HPT becoming ready and the timer IRQ being asserted drifts slowly, at the beat frequency between the local oscillator and the oscillator that times the bus services. When HPT runs between two timer ISRs, we see normal system behavior. When HPT is running while the timer IRQ is asserted, we see large latencies for LPT2. In practice we see the system running as expected for, e.g., 50 seconds and then being unresponsive for the next 15 seconds.
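As a back-of-the-envelope check, the 50 s / 15 s pattern can be related to the two clocks. The sketch below assumes that the phase drifts uniformly, that the HPT run time is constant and shorter than one tick, and that the starved window is exactly the interval during which the HPT covers the tick. Under those assumptions a 65 s cycle would correspond to a frequency offset of roughly 1/65 Hz (about 15 ppm of 1 kHz) and an HPT run time of roughly 15/65 of a tick (about 0.23 ms). These are inferred illustrative numbers, not measurements, and the function names are made up.

```c
#include <assert.h>

/* Time in seconds for the phase between two nominally equal periodic
   sources to slide through one full period. Returns -1.0 if the two
   frequencies are exactly equal (no drift, hence no beat). */
double beat_period_s(double f_tick_hz, double f_bus_hz)
{
    double diff = f_tick_hz - f_bus_hz;

    assert(f_tick_hz > 0.0 && f_bus_hz > 0.0);
    if (diff < 0.0) {
        diff = -diff;
    }
    return (diff > 0.0) ? 1.0 / diff : -1.0;
}

/* Seconds per beat period during which the HPT is the active task at
   the tick, assuming a uniformly drifting phase. */
double starved_s_per_beat(double beat_s, double hpt_run_s,
                          double tick_period_s)
{
    assert(tick_period_s > 0.0);
    assert(hpt_run_s >= 0.0 && hpt_run_s <= tick_period_s);
    return beat_s * (hpt_run_s / tick_period_s);
}
```

For example, beat_period_s(1000.0, 1000.0 + 1.0 / 65.0) gives approximately 65 s, and starved_s_per_beat(65.0, 230e-6, 1e-3) gives approximately 15 s.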

Distilling from the previous paragraphs, all three of the following conditions need to be met to produce long latencies:

1. Coarse time slicing granularity.

2. A low prio task group with multiple ready tasks, at least one task being continually ready.

3. Timer IRQ asserted when a high prio task is running.

Solutions

Since all three conditions need to be fulfilled to trigger the misbehavior, removing any one of them will fix the issue.

Changing 1.) requires changes to the scheduler and dispatcher. The code running at points (a) and (b) is part of the scheduler and written in C. The code running at (c) is part of the dispatcher and written in ColdFire assembler. The dispatcher has no knowledge of the time slice length or of its offset within the task descriptor structure. Both can vary according to compile time configuration. If I had to guess, the MQX scheduler was never designed to account for sub-tick time slices, even though the time slicing uses tick structs which allow for sub-tick resolution.
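One common way to give assembler code access to configuration-dependent field offsets is to generate them from C with offsetof. The following is a minimal sketch of that general technique, not of how MQX is actually built: demo_td_t is a made-up stand-in for a task descriptor (it is not the real MQX structure), DEMO_KERNEL_LOGGING is a hypothetical configuration switch, and the emitted macro names are invented.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical compile-time switch that changes the structure layout. */
#ifndef DEMO_KERNEL_LOGGING
#define DEMO_KERNEL_LOGGING 1
#endif

/* Made-up stand-in for a task descriptor; NOT the real MQX structure. */
typedef struct demo_td {
    void          *stack_ptr;
#if DEMO_KERNEL_LOGGING
    unsigned long  log_flags;          /* present only in some configs */
#endif
    unsigned long  time_slice;         /* slice length                 */
    unsigned long  current_time_slice; /* amount of the slice used     */
} demo_td_t;

/* Write assembler-includable offset definitions into buf.
   Returns the snprintf() result (characters needed, or negative). */
int emit_td_offsets(char *buf, size_t len)
{
    assert(buf != NULL && len > 0u);
    return snprintf(buf, len,
                    "#define TD_TIME_SLICE %lu\n"
                    "#define TD_CURRENT_TIME_SLICE %lu\n",
                    (unsigned long)offsetof(demo_td_t, time_slice),
                    (unsigned long)offsetof(demo_td_t, current_time_slice));
}
```

A build step could run such a generator on the host with the same configuration as the target and include the result from the assembler source. Whether this fits into the MQX build flow, and whether host and target layouts match, would need to be checked separately.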

Changing 2.) is difficult since we have large bodies of opaque code running on our platform. And after all, it is the scheduler's responsibility to provide fairness within a task group. Changing 2.) is difficult for us and it feels like a hack, not like a solution.

Changing 3.) is a realistic possibility in our setups with the two plesiochronous 1 ms IRQs. The scheduler interval can be set to a value different from 1 ms. This is not a general solution since other IRQs might still accidentally be asserted at critical points in time, triggering the abovementioned issue. If these "misplaced" IRQs happen only sporadically, latencies are still very acceptable.

Our favourite solution

We are thinking about changing 1.). Our changes would look as follows: During all (a)-like transfers of control from a low priority task to a high priority task, we increment the time slice counter of the low priority task by one. At (b), in _time_notify_kernel(), we check not only the time slice of the task at the head of the active ready queue, but also the time slices of the tasks at the heads of all the lower priority ready queues. This pretty much ensures non-starvation in the lower priority task group, albeit at the cost of tasks receiving potentially very short time slices.
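The proposed change can be tried out in a toy host-side model before touching the kernel. The sketch below is not MQX code and all names (lp_queue_t, on_preempt_by_hpt(), on_tick(), run_worst_case()) are invented for illustration. It models a single low priority ready queue with two tasks and replays the worst case from the figure, in which the HPT preempts the low priority task once per tick period and is still running at every tick.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define NUM_LP_TASKS 2u

typedef struct {
    unsigned head;        /* LP task at the head of the ready queue */
    unsigned used;        /* slice units charged to the head task   */
    unsigned slice_ticks; /* slice length in ticks                  */
    unsigned periods_run[NUM_LP_TASKS]; /* tick periods each task ran in */
} lp_queue_t;

void lp_queue_init(lp_queue_t *q, unsigned slice_ticks)
{
    assert(q != NULL && slice_ticks > 0u);
    q->head = 0u;
    q->used = 0u;
    q->slice_ticks = slice_ticks;
    for (unsigned i = 0u; i < NUM_LP_TASKS; i++) {
        q->periods_run[i] = 0u;
    }
}

/* Point (a): charge the preempted low priority task one unit. */
void on_preempt_by_hpt(lp_queue_t *q)
{
    q->used++;
}

/* Point (b): the usual per-tick charge applies only if the LP task is
   active, but the slice check of the LP queue head is done regardless. */
void on_tick(lp_queue_t *q, bool hpt_active)
{
    if (!hpt_active) {
        q->used++;
    }
    if (q->used >= q->slice_ticks) {
        q->used = 0u;
        q->head = (q->head + 1u) % NUM_LP_TASKS;
    }
}

/* Worst case from the figure: one preemption per period, and the HPT is
   still running when the tick arrives. */
void run_worst_case(lp_queue_t *q, unsigned num_periods)
{
    for (unsigned i = 0u; i < num_periods; i++) {
        q->periods_run[q->head]++; /* head task runs between (c) and (a) */
        on_preempt_by_hpt(q);
        on_tick(q, true);
    }
}
```

With a slice of 5 ticks and 1000 periods, both tasks end up running in 500 periods each in this model. It also shows the cost mentioned above: when the HPT runs between two ticks, the head task is charged once on preemption and once at the tick, so its effective slice becomes shorter than slice_ticks.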

Other Ideas?

Are there other options to solve the issue? Have other people experienced this phenomenon as well? Is there a well known name for this sort of problem? Is there a well known solution?


If the scheduler works as you describe, it looks like a bug.

When time slicing is enabled for both low priority tasks, these tasks have to alternate in running.

I have reported it in our internal bug database and the designers will analyze it.

In the meantime, could you please specify your version of MQX and, just to be sure, also your MCU and toolchain (CW/IAR/Keil, …)?

Have a great day,

RadekS



Thank you for reporting the bug.

We are running MQX 3.7 on an MCF54418. We are using the CW toolchain.

We have checked the release notes from MQX 3.7 up to 4.1 and have not found any mention of changes related to time slicing or fairness.