
Memory to Memory DMA performance reference (K60 150MHz)


Hi All

I have a test where the following six operations are performed using standard memcpy() and memset() (working with a byte pointer and a loop), on a K60 at 150MHz with memory-to-memory (M2M) operations in SRAM.

1. Clear 2k bytes of memory using memset(): 84us

2. memcpy() of 2k bytes (both arrays long-word aligned): 109us

3. memcpy() of 2k bytes (source long-word aligned, destination on an odd address 0xXXXX1): 109us

4. memcpy() of 2k bytes (destination long-word aligned, source on an odd address 0xXXXX1): 109us

5. memcpy() of 2k bytes (source and destination both on odd addresses 0xXXXX1): 109us

6. memcpy() of 2k bytes (source and destination on different odd addresses, one 0xXXXX1 and one 0xXXXX3): 109us

As expected, in software memset() is faster since it only advances one pointer, and all memcpy() variations take the same time.
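For comparison, byte-pointer loops of the kind described above might look like the following minimal sketch (the names `byte_memset` and `byte_memcpy` are hypothetical, not taken from the code used in the test). Every access is a single byte, so alignment never changes the work done, which is consistent with all memcpy() cases measuring 109us; memset() does one store per byte and advances one pointer, while memcpy() does a load and a store and advances two.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical byte-at-a-time memset: one store per byte, one pointer advanced. */
static void *byte_memset(void *dst, int value, size_t n)
{
    assert(n == 0 || dst != NULL);
    unsigned char *d = (unsigned char *)dst;
    while (n-- != 0) {
        *d++ = (unsigned char)value;
    }
    return dst;
}

/* Hypothetical byte-at-a-time memcpy: one load and one store per byte, two
 * pointers advanced. Alignment is irrelevant because every access is a byte. */
static void *byte_memcpy(void *dst, const void *src, size_t n)
{
    assert(n == 0 || (dst != NULL && src != NULL));
    unsigned char *d = (unsigned char *)dst;
    const unsigned char *s = (const unsigned char *)src;
    while (n-- != 0) {
        *d++ = *s++;
    }
    return dst;
}
```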

Then I tested memset() and memcpy() based on M2M DMA.

1. Clear 2k bytes of memory using memset(): 14.4us

2. memcpy() of 2k bytes (both arrays long-word aligned): 14.4us

3. memcpy() of 2k bytes (source long-word aligned, destination on an odd address 0xXXXX1): 55.2us

4. memcpy() of 2k bytes (destination long-word aligned, source on an odd address 0xXXXX1): 55.6us

5. memcpy() of 2k bytes (source and destination both on odd addresses 0xXXXX1): 14.4us

6. memcpy() of 2k bytes (source and destination on different odd addresses, one 0xXXXX1 and one 0xXXXX3): 28.0us

As can be seen, performance is highest when the source and destination pointers are long-word aligned, but in some cases DMA address-alignment restrictions force byte or half-word transfers instead. Are there any techniques to achieve greater performance? For reference, I ran the same test on an STM32F407 at 168MHz and got virtually identical results (all about 12% faster, matching its 12% higher clock), which suggests that the DMA is already at its limit.
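The DMA timings fall roughly into the ratio 1:2:4 (14.4us, 28.0us, 55.2 to 55.6us), which is consistent with the copy being done with 32-bit, 16-bit or 8-bit elements depending on the *relative* alignment of source and destination. The post does not show how the driver decides this, so the following is only a sketch under that assumption, with hypothetical helper names: when both pointers have the same offset within a long word (cases 2 and 5), a few leading bytes can be copied first and the rest moved as long words; when the offsets differ by 2 (case 6), half-words are the widest option; when they differ by an odd amount (cases 3 and 4), only bytes work.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: widest DMA element size (4, 2 or 1 bytes) that both
 * addresses can use once they have been advanced by the same number of bytes.
 * Only the relative misalignment (src XOR dst) matters. */
static unsigned dma_width_for(uintptr_t src, uintptr_t dst)
{
    uintptr_t diff = (src ^ dst) & 3u;
    if (diff == 0) {
        return 4u;   /* same offset within a long word: 32-bit elements */
    }
    if ((diff & 1u) == 0) {
        return 2u;   /* offsets differ by 2: 16-bit elements after aligning to even */
    }
    return 1u;       /* offsets differ by an odd amount: byte elements only */
}

/* Hypothetical helper: number of leading bytes to copy first (for example in
 * software) so that src becomes aligned to 'width'; dst is then aligned too,
 * because both have the same offset modulo 'width'. */
static size_t dma_head_bytes(uintptr_t src, unsigned width)
{
    assert(width == 1u || width == 2u || width == 4u);
    return (size_t)((width - (src & (width - 1u))) & (width - 1u));
}
```

For a 2k copy the leading (and any trailing) fragment is at most 3 bytes, so the element width dominates the total time, which would explain why cases 2 and 5 measure the same.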

Interestingly, a pure software solution can beat DMA in case 1. If the code is written in assembler using STMDBCS R0!, {R2-R5}-type instructions, the memset value can be loaded into multiple registers and then written to SRAM as an instruction-based burst from those registers. This takes 8.6us, which I don't think DMA will be able to match (?). However, this is only possible for memset() and not memcpy()... or does anyone know how to beat it?
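The STM approach relies on holding the fill pattern in several registers and storing them back to back. A portable C approximation is sketched below, assuming a long-word-aligned destination and a length that is a multiple of 16 bytes (restrictions chosen only to keep the sketch short; `burst_memset32` is a hypothetical name). It replicates the byte into a 32-bit word and stores four words per iteration. Whether a given compiler merges the four stores into one STM instruction depends on the compiler and optimization level, so this is not guaranteed to match the 8.6us of the hand-written assembler.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical burst-style memset: fills 16 bytes (four 32-bit words) per
 * iteration. Assumes dst is 4-byte aligned and n is a multiple of 16. */
static void burst_memset32(uint32_t *dst, uint8_t value, size_t n)
{
    assert(((uintptr_t)dst & 3u) == 0);
    assert((n % 16u) == 0);

    /* Replicate the byte into all four lanes of a word, similar to loading
     * the value into R2-R5 in the assembler version. */
    uint32_t w = (uint32_t)value * 0x01010101u;

    for (size_t i = 0; i < n / 4u; i += 4u) {
        dst[i + 0] = w;
        dst[i + 1] = w;
        dst[i + 2] = w;
        dst[i + 3] = w;
    }
}
```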

Regards

Mark


Hi Mark,

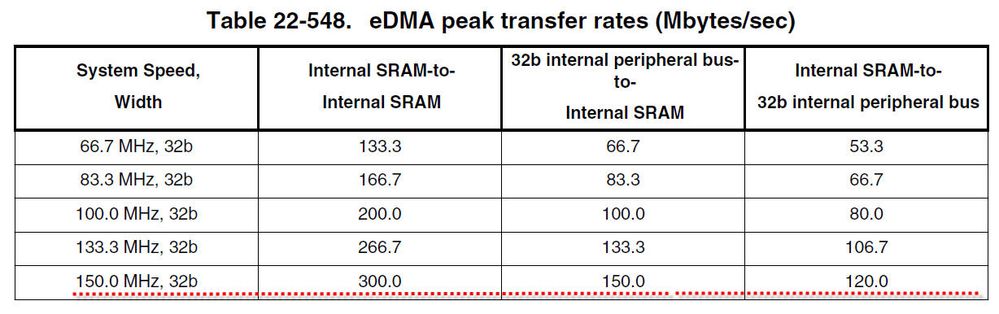

The K60 reference manual with below eDMA peak transfer rates table:

This is based on all accesses are 32-bits with address aligned.

And DMA trigger request response time also need be consider.

I ran an eDMA test on a Kinetis K60 100MHz device, using DMA to copy data from SRAM to SRAM, to compare the eDMA performance with the reference manual description.

I have also attached my eDMA test code for your reference.

I hope it helps.

Best regards,

Ma Hui


Hi Ma

I believe I am getting 150MBytes/s SRAM->SRAM, which would be only half the rate that you expect (?)
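A quick way to check figures like this is to convert a measured copy time into MB/s (bytes per microsecond equals decimal megabytes per second) and normalize it by the system clock to get bytes per cycle. The function names below are hypothetical; the only inputs are measurements quoted in this thread.

```c
#include <assert.h>

/* Copy throughput in decimal MB/s: bytes per microsecond == MB/s. */
static double throughput_mb_per_s(double bytes, double time_us)
{
    assert(time_us > 0.0);
    return bytes / time_us;
}

/* Throughput normalized to the system clock, in bytes per clock cycle
 * (equivalently MB/s per MHz). */
static double bytes_per_cycle(double mb_per_s, double clock_mhz)
{
    assert(clock_mhz > 0.0);
    return mb_per_s / clock_mhz;
}
```

Assuming "2k" means 2048 bytes, case 2 works out to 2048 / 14.4 ≈ 142MB/s, or about 0.95 bytes per cycle at 150MHz. The measured time presumably also includes the TCD setup and the polling loop, which would account for part of the gap to 150MB/s.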

This is the DMA configuration; it uses a single major-loop iteration, with DMA channel 0 set to the lowest priority:

```c
ptrDMA_TCD->DMA_TCD_ATTR = (DMA_TCD_ATTR_DSIZE_32 | DMA_TCD_ATTR_SSIZE_32); // ensure standard settings are returned
ptrDMA_TCD->DMA_TCD_DOFF = 4;                                // destination increment long word
ptrDMA_TCD->DMA_TCD_SOFF = 4;                                // source increment long word
ptrDMA_TCD->DMA_TCD_CITER_ELINK = 1;                         // one main loop iteration; this protects the DMA channel from interrupt routines that may also want to use the function
ptrDMA_TCD->DMA_TCD_SADDR = (unsigned long)buffer;           // set source for copy
ptrDMA_TCD->DMA_TCD_DADDR = (unsigned long)ptr;              // set destination for copy
ptrDMA_TCD->DMA_TCD_NBYTES_ML = ulTransfer;                  // set number of bytes to be copied
ptrDMA_TCD->DMA_TCD_CSR = DMA_TCD_CSR_START;                 // start DMA transfer
while (!(ptrDMA_TCD->DMA_TCD_CSR & DMA_TCD_CSR_DONE)) {}     // wait until completed
```

Do you have a binary of your software that can be loaded onto a Freescale board so that I can verify with it?

Regards

Mark


Hi Mark,

Please check the attached test image file, which runs on the TWR-K60N512. Use the OSJTAG virtual serial port as the serial terminal, at 115200 baud.

For the K60 100MHz product, the DMA performance should be 200MBytes/s, but my test result is 99MBytes/s.

I am checking this issue with the Kinetis product team.

I will let you know when I have any updated information.

Thank you for your patience.

Best regards,

Ma Hui


Hi Ma

Thank you for the image.

That is the same transfer speed as I get (1MByte/s per MHz of system clock).

I am wondering whether the specified 200MBytes/s for SRAM -> SRAM [at 100MHz system clock] means 100MBytes/s for the read plus 100MBytes/s for the write, i.e. 200MBytes/s in total but a copy rate of still only 100MBytes/s (which is how a peripheral <-> SRAM transfer is specified)?

Regards

Mark


Hi Mark,

When the DMA accesses SRAM with a 32-bit transfer size set in the TCD Transfer Attributes (DMA_TCD_ATTR), there is one wait state on each read and write. That's why the DMA performance doesn't reach 200MB/s.

Please try changing the DMA transfer size in the DMA_TCD_ATTR register to 16 bytes or 32 bytes (Kinetis 100MHz silicon V2.x); this should improve the DMA performance.

I was able to get a 162MB/s transfer rate with the TWR-K60D100M board.
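For reference, the transfer size is selected by the SSIZE and DSIZE fields of DMA_TCD_ATTR. The sketch below only computes the register value and checks alignment on the host; it assumes the Kinetis eDMA layout (SSIZE in bits 10:8, DSIZE in bits 2:0, encodings 0 = 8-bit, 1 = 16-bit, 2 = 32-bit, 4 = 16-byte, 5 = 32-byte, with 32-byte not available on all silicon). These values should be checked against the reference manual of the specific part, and the enum and function names are hypothetical, not from an NXP header. With 16-byte or 32-byte elements, SOFF/DOFF would also need to match the element size, and the source address, destination address and NBYTES must be multiples of it, otherwise the eDMA reports a configuration error.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical names for the SSIZE/DSIZE encodings (verify against the RM). */
enum edma_size {
    EDMA_SIZE_8BIT   = 0,
    EDMA_SIZE_16BIT  = 1,
    EDMA_SIZE_32BIT  = 2,
    EDMA_SIZE_16BYTE = 4,
    EDMA_SIZE_32BYTE = 5   /* not present on all Kinetis silicon */
};

/* Bytes moved per read/write for each encoding. */
static unsigned edma_size_bytes(enum edma_size s)
{
    switch (s) {
    case EDMA_SIZE_8BIT:   return 1u;
    case EDMA_SIZE_16BIT:  return 2u;
    case EDMA_SIZE_32BIT:  return 4u;
    case EDMA_SIZE_16BYTE: return 16u;
    case EDMA_SIZE_32BYTE: return 32u;
    }
    return 0u;
}

/* ATTR value with SMOD = DMOD = 0 (no modulo addressing). */
static uint16_t edma_attr(enum edma_size ssize, enum edma_size dsize)
{
    return (uint16_t)(((unsigned)ssize << 8) | (unsigned)dsize);
}

/* Returns nonzero if both addresses and NBYTES are multiples of the element size. */
static int edma_copy_ok(uint32_t saddr, uint32_t daddr, uint32_t nbytes, enum edma_size s)
{
    unsigned b = edma_size_bytes(s);
    assert(b != 0u);
    return (saddr % b == 0u) && (daddr % b == 0u) && (nbytes % b == 0u);
}
```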

I hope it helps.

Best regards,

Ma Hui


Hi Hui

When I test on an MK60NF1M0VMD15 (mask 3N96B), I get 99MBytes/s* when all transfers are set to 32 bits in the TCD Transfer Attributes (DMA_TCD_ATTR).

When I change the attributes to 16-bit transfers, the throughput drops to 50MBytes/s*.

[* clocked at 100MHz; 1.5x faster when clocked at 150MHz]

1. This means that I don't see how decreasing the transfer width can help, since it requires twice as many reads and writes.

2. Does this mean that the MK60NF1M0VMD15 uses the wait state (presumably for both 16- and 32-bit accesses) but some other devices don't? I have a TWR-K60D100M that I can test on; do you expect it to be more efficient?

Regards

Mark


Hi Mark,

I think that, whatever the DMA transfer size, there is a one-cycle wait state on each data read and each data write. With a 32-bit transfer size, that wait state reduces the throughput dramatically; with a 32-byte transfer size, its effect is much smaller.

That's why using a 32-byte transfer size gets close to the peak value of 200MB/s.
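This explanation can be turned into a rough cycle model. It is an assumption, not something stated in the reference manual: an element of S bytes is read as ceil(S/4) 32-bit bus beats plus one wait-state cycle, then written the same way, giving S / (2 × (beats + 1)) bytes per cycle. At 100MHz the model gives about 100MB/s for 32-bit elements and 50MB/s for 16-bit elements (matching the MK60NF1M0VMD15 measurements above), 160MB/s for 16-byte elements (in the range of the 162MB/s reported above), about 178MB/s for 32-byte elements, and 200MB/s for 32-bit elements with no wait state. The function names are hypothetical.

```c
#include <assert.h>

/* Rough model (assumption): an element of 'size_bytes' is moved as
 * ceil(size_bytes / 4) 32-bit bus beats for the read and the same number for
 * the write, and each of the two phases adds 'wait_states' extra cycles. */
static double model_bytes_per_cycle(unsigned size_bytes, unsigned wait_states)
{
    assert(size_bytes > 0u);
    unsigned beats = (size_bytes + 3u) / 4u;          /* 32-bit bus beats per phase */
    unsigned cycles = 2u * (beats + wait_states);     /* read phase + write phase */
    return (double)size_bytes / (double)cycles;
}

/* The same model expressed in MB/s at a given system clock in MHz. */
static double model_mb_per_s(unsigned size_bytes, unsigned wait_states, double clock_mhz)
{
    return model_bytes_per_cycle(size_bytes, wait_states) * clock_mhz;
}
```

At 150MHz the same model predicts about 13.7us, 27.3us and 54.6us for a 2048-byte copy with 32-bit, 16-bit and 8-bit elements, close to the 14.4us, 28.0us and 55.2us measured in the first post, which suggests the alignment-dependent results there follow from the same per-access wait state.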

I hope it helps.

Best regards,

Ma Hui