MODEL_RunInference in evkmimxrt1060_tensorflow_lite_kws example

Hi,

I run the evkmimxrt1060_tensorflow_lite_kws example.

After preprocessing audio, I see two function called after that to inference the output: MODEL_RunInference(); and MODEL_ProcessOutput(outputData, &outputDims, outputType, endTime - startTime);

But the example model use the Softmax Regression :

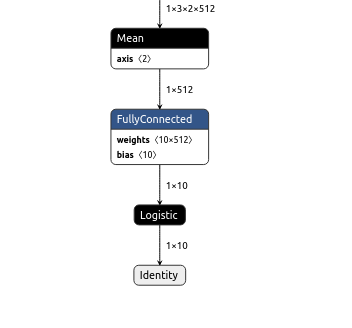

And my model use Logistic Regression:

The question is can I re-use 2 function above for my model? If not, could you suggest me somthing to run inference for my model?

I can use those two functions to predict the output. Thanks for your reply.

Hi nahan_trogn,

Glad to hear you can reuse them on your side.

If you still have questions about this case, please let me know.

For any new issues, you are welcome to create a new case.

Best Regards,

Kerry

Hi nahan_trogn,

Did you use the DeepView tool to generate the picture in your post?

Regarding your logistic regression model: I think you can try calling those two functions with your model and test it on your side.

If you run into any issues, please let me know.

Best Regards,

Kerry
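
For readers comparing the two output styles discussed in this thread, here is a minimal sketch of how a float output tensor might be interpreted in each case. It is not taken from the SDK example: the type kws_result_t, the function interpret_output, and the threshold parameter are hypothetical names introduced for illustration. It assumes a softmax model produces one score per class, and a binary logistic regression model produces a single sigmoid probability.

```c
#include <stddef.h>

/* Hypothetical result type for this sketch; not part of the NXP SDK. */
typedef struct {
    int   label;      /* index of the predicted class, -1 on invalid input */
    float confidence; /* probability assigned to the predicted class */
} kws_result_t;

/*
 * Interpret a float output tensor (assumed layout, see text above).
 * count == 1: treat the value as a sigmoid probability of class 1
 *             and compare it against the threshold.
 * count  > 1: treat the values as per-class scores and take the
 *             largest one (argmax), as for a softmax output.
 */
static kws_result_t interpret_output(const float *scores, size_t count,
                                     float threshold)
{
    kws_result_t result = { -1, 0.0f };

    if (scores == NULL || count == 0) {
        return result;
    }

    if (count == 1) {
        float p = scores[0];
        if (p >= threshold) {
            result.label = 1;
            result.confidence = p;
        } else {
            result.label = 0;
            result.confidence = 1.0f - p;
        }
        return result;
    }

    size_t best = 0;
    for (size_t i = 1; i < count; i++) {
        if (scores[i] > scores[best]) {
            best = i;
        }
    }
    result.label = (int)best;
    result.confidence = scores[best];
    return result;
}
```

If the model's output tensor is quantized rather than float, the values would need to be dequantized with the tensor's scale and zero point before a step like this; that part is omitted here.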