eIQ Sample Apps - TFLite Quantization

This tutorial demonstrates how to convert a TensorFlow model to TensorFlow Lite using post-training quantization and run inference on an i.MX8 board using the eIQ™ ML Software Development Environment. It uses a TensorFlow mobilenet_v1 model pre-trained on ImageNet.

NOTES:

1. Except for the section "Build Custom Application to Run the Inference", this tutorial can also be applied to any i.MX RT platform.

2. For more information on quantization check the 'QuantizationOverview.pdf' attached to this post.

Environment

- Ubuntu 16 host PC

- Any i.MX8 platform

- Yocto BSP 4.14.98/sumo 2.0.0 GA with eIQ for the chosen i.MX8 platform. The following are needed:

- Successful build environment located in $YOCTO_BUILD_FOLDER, for fsl-image-qt5 image, using imx-4.14.98-2.0.0_machinelearning.xml manifest. Make sure to set 'local.conf' to include tensorflow and tensorflow-lite. All the required steps can be found in the following documentation: NXP eIQ(TM) Machine Learning Enablement

- Build and install of YOCTO SDK:

$: bitbake fsl-image-qt5 -c populate_sdk

$: ./$YOCTO_BUILD_FOLDER/bld-wyland/tmp/deploy/sdk/fsl-imx-xwayland-glibc-x86_64-fsl-image-qt5-aarch64-toolchain-4.14-sumo.sh

Prerequisites

- Install Anaconda3 on Ubuntu host. Instructions here.

- Create anaconda Tensorflow environment:

$: conda create -n tf_env pip python=3.6

$: conda env list

$: conda activate tf_env

$: pip install tensorflow==1.12.0

NOTE: Make sure that the installed TensorFlow version is the same as the one used in eIQ (currently 1.12).

- Clone the TensorFlow GitHub repo; use branch r1.12 to match the eIQ version:

$: git clone https://github.com/tensorflow/tensorflow.git

$: cd tensorflow

$: git checkout r1.12

- Download and extract the mobilenet_v1 model to convert:

$: mkdir ~/tf_convert

$: cd ~/tf_convert/

$: wget http://download.tensorflow.org/models/mobilenet_v1_2018_08_02/mobilenet_v1_1.0_224.tgz

$: tar -xzvf mobilenet_v1_1.0_224.tgz

NOTE: The TensorFlow GitHub repo provides multiple versions of the MobileNet_v1 model, so that you can pick one that fits your latency and size budget. This tutorial uses mobilenet_v1_1.0_224. The naming convention is as follows:

- v1 - model release version

- 1.0 - width multiplier (the alpha parameter), which scales the model size

- 224 - input image size (224 x 224 pixels)
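The naming convention above can be decoded mechanically. The following is a minimal sketch, assuming every model name follows the same `mobilenet_v<version>_<alpha>_<size>` pattern as the one used here; the `MobileNetName` type and `parse_mobilenet_name` function are hypothetical helpers, not part of TensorFlow or eIQ.

```python
import re
from typing import NamedTuple


class MobileNetName(NamedTuple):
    """Fields encoded in a MobileNet model name (hypothetical helper type)."""
    version: int      # model release version, e.g. 1
    alpha: float      # width multiplier, e.g. 1.0
    input_size: int   # input image edge length in pixels, e.g. 224


# Assumed pattern: mobilenet_v<version>_<alpha>_<size>
_PATTERN = re.compile(r"^mobilenet_v(\d+)_(\d+\.\d+)_(\d+)$")


def parse_mobilenet_name(name: str) -> MobileNetName:
    """Split a model name such as 'mobilenet_v1_1.0_224' into its fields."""
    match = _PATTERN.match(name)
    if match is None:
        raise ValueError("unexpected model name: %r" % name)
    version, alpha, size = match.groups()
    return MobileNetName(int(version), float(alpha), int(size))


if __name__ == "__main__":
    print(parse_mobilenet_name("mobilenet_v1_1.0_224"))
```

A helper like this can be handy when scripting the conversion over several downloaded variants, since the input size is needed to prepare test images.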

Convert MobileNet v1 TensorFlow Model to TF Lite

- Create a 'convert.py' Python script in the folder '~/tf_convert':

import tensorflow as tf

graph_def_file = 'mobilenet_v1_1.0_224_frozen.pb'

input_arrays = ["input"]

output_arrays = ["MobilenetV1/Predictions/Reshape_1"]

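# Build a converter from the frozen graph and its input/output tensor names
converter = tf.contrib.lite.TFLiteConverter.from_frozen_graph(graph_def_file, input_arrays, output_arrays)

# Enable post-training quantization of the weights
converter.post_training_quantize = True

tflite_model = converter.convert()

# Write the converted model, closing the file when done
with open("converted_model.tflite", "wb") as f:
    f.write(tflite_model)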

NOTE: for details on how to set the values for 'input_arrays' and 'output_arrays' see section 'How to obtain input_arrays and output_arrays for conversion'

- Run script:

$: conda activate tf_env

$: python convert.py

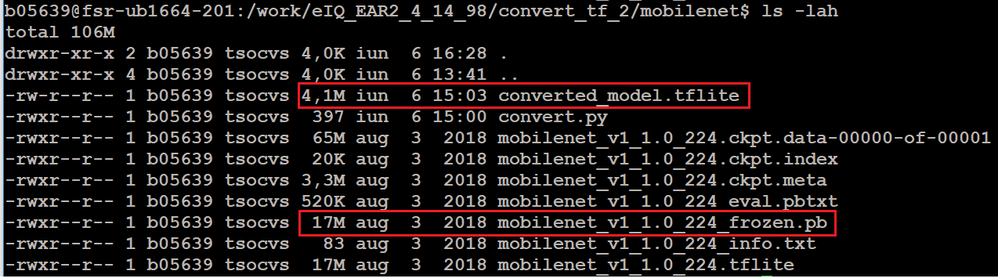

- Expected output: the TFLite model 'converted_model.tflite' is created.

NOTE: After quantization the model is roughly 4 times smaller, because the weights are stored as 8-bit values instead of 32-bit floats.
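The roughly fourfold size reduction follows directly from the storage width of each weight. The sketch below illustrates the arithmetic with a simple linear (min/max) mapping to 8 bits on random stand-in weights. It is a simplified illustration only and is not the scheme the TFLite converter actually applies; the function names are hypothetical.

```python
import array
import random


def quantize_uint8(weights):
    """Map floats linearly onto 0..255; returns (uint8 array, scale, offset)."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 for constant input
    q = array.array(
        "B",
        (min(255, max(0, int(round((w - lo) / scale)))) for w in weights),
    )
    return q, scale, lo


def dequantize(q, scale, lo):
    """Recover approximate float values from the 8-bit representation."""
    return [lo + scale * v for v in q]


random.seed(0)
# Stand-in weights stored as 32-bit floats (4 bytes each)
weights = array.array("f", (random.uniform(-1.0, 1.0) for _ in range(1000)))
q, scale, lo = quantize_uint8(weights)

float_bytes = weights.itemsize * len(weights)  # 4 bytes per weight
quant_bytes = q.itemsize * len(q)              # 1 byte per weight
max_err = max(abs(a - b) for a, b in zip(weights, dequantize(q, scale, lo)))

print("size ratio:", float_bytes / quant_bytes)
print("max round-trip error:", max_err, "(at most about scale / 2 =", scale / 2, ")")
```

The real file shrinks slightly less than 4 times in practice, since a model file also contains data that is not weights, such as the graph structure.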

How to obtain input_arrays and output_arrays for conversion

This tutorial describes two ways to obtain these names: using the TensorFlow API to inspect the model and dump the information, or using a GUI tool to visualize and inspect the model. Both are detailed in the following sections.

Method 1 - programmatically using Tensorflow API to dump the model graph operations in a file

- Download and run the following script to dump the operations in the model:

$: wget https://gist.githubusercontent.com/sunsided/88d24bf44068fe0fe5b88f09a1bee92a/raw/d7143fe5bb19b8d5beb...

$: python dump_operations.py mobilenet_v1_1.0_224_frozen.pb > ops.log

$: vim ops.log

- Check the first and last entries in the Operations list to get the values for input_arrays and output_arrays:

Operations:

- Placeholder "input" (1 outputs)

- Const "MobilenetV1/Conv2d_0/weights" (1 outputs)

- ...........

- Shape "MobilenetV1/Predictions/Shape" (1 outputs)

- Reshape "MobilenetV1/Predictions/Reshape_1" (1 outputs)

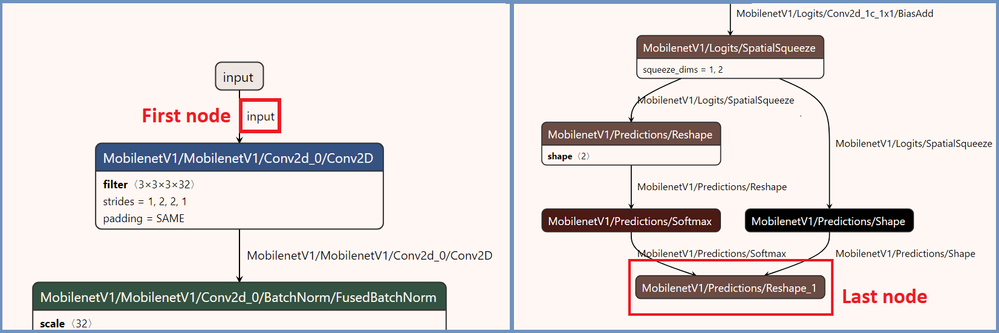
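Instead of reading ops.log by eye, the first and last operations can be extracted with a short script. This is a minimal sketch that assumes the log contains only lines in the format of the excerpt above (`- <OpType> "<name>" (N outputs)`); the function name `first_and_last_ops` is hypothetical, and the real log may contain other sections that need to be trimmed first.

```python
import re

# Matches lines such as: - Placeholder "input" (1 outputs)
_OP_LINE = re.compile(r'^-\s+(\w+)\s+"([^"]+)"')


def first_and_last_ops(log_text):
    """Return ((op_type, name), (op_type, name)) for the first and last ops."""
    ops = []
    for line in log_text.splitlines():
        match = _OP_LINE.match(line.strip())
        if match:
            ops.append(match.groups())
    if not ops:
        raise ValueError("no operations found in log")
    return ops[0], ops[-1]


SAMPLE = """Operations:
- Placeholder "input" (1 outputs)
- Const "MobilenetV1/Conv2d_0/weights" (1 outputs)
- Shape "MobilenetV1/Predictions/Shape" (1 outputs)
- Reshape "MobilenetV1/Predictions/Reshape_1" (1 outputs)
"""

if __name__ == "__main__":
    first, last = first_and_last_ops(SAMPLE)
    print("input_arrays  =", [first[1]])
    print("output_arrays =", [last[1]])
```

To use it on the real dump, read the file contents with `open("ops.log").read()` and pass them in place of `SAMPLE`.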

Method 2 - using netron GUI tool

Netron is a viewer for neural network, deep learning and machine learning models.

Install it on the Ubuntu host and use it to inspect the *.pb tensorflow frozen model:

$: pip install netron

$: netron mobilenet_v1_1.0_224_frozen.pb

Serving 'mobilenet_v1_1.0_224_frozen.pb' at http://localhost:8080

Open the specified page in the browser to visualize the graph and check the names of the first and last nodes:

Build Custom Application to Run the Inference

- To easily follow the code, set the following environment variables:

TF_REPO - full path to the tensorflow repo (see Prerequisites section for details)

MOBILENET_PATH - full path to the location of the mobilenet_v1 models (see Prerequisites section for details)

YOCTO_SDK - full path to the location of the YOCTO SDK install folder (see Prerequisites section for details)

BOARD_IP - IP of the i.MX8 board
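Before running the build and deploy commands, it can help to confirm that all four variables are actually set in the current shell. The following is a small preflight sketch; the `missing_vars` helper is hypothetical and only checks that each variable is present and non-empty, not that the paths or the IP address are valid.

```python
import os

# Variable names taken from the list above
REQUIRED_VARS = ("TF_REPO", "MOBILENET_PATH", "YOCTO_SDK", "BOARD_IP")


def missing_vars(env=None):
    """Return the required variable names that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_vars()
    if missing:
        print("Please set:", ", ".join(missing))
    else:
        print("All required variables are set.")
```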

- Cross-compile tensorflow lite label_image example with Yocto toolchain:

$: cd $TF_REPO/tensorflow/contrib/lite/examples/label_image/

$: source $YOCTO_SDK/environment-setup-aarch64-poky-linux

$: $CXX --std=c++11 -O3 bitmap_helpers.cc label_image.cc -I $YOCTO_SDK/sysroots/aarch64-poky-linux/usr/include/tensorflow/contrib/lite/tools/make/downloads/flatbuffers/include -ltensorflow-lite -lpthread -ldl -o label_image

- Deploy the label_image binary, tflite model and input image on the i.MX8 device:

$: scp label_image root@$BOARD_IP:~/tflite_test

$: scp $MOBILENET_PATH/converted_model.tflite root@$BOARD_IP:~/tflite_test

$: scp -r testdata root@$BOARD_IP:~/tflite_test

$: scp ../../java/ovic/src/testdata/labels.txt root@$BOARD_IP:~/tflite_test

- Run the app on the board:

$: ./label_image -m converted_model.tflite -t 1 -i testdata/grace_hopper.bmp -l labels.txt

- Expected output:

Loaded model converted_model.tflite

resolved reporter

invoked

average time: 571.325 ms

0.786024: 653 military uniform

0.0476249: 466 bulletproof vest

0.0457234: 907 Windsor tie

0.0245538: 458 bow tie

0.0194905: 514 cornet
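When comparing runs (for example, the float model against the quantized one), it is convenient to turn this output into structured data. The sketch below assumes result lines have the form `<score>: <class index> <label>` as shown above; the `parse_results` function is a hypothetical helper and not part of the label_image example.

```python
import re

# Matches result lines such as: 0.786024: 653 military uniform
_RESULT = re.compile(r"^(\d*\.?\d+(?:[eE][-+]?\d+)?):\s+(\d+)\s+(.+)$")


def parse_results(output):
    """Return a list of (score, class_index, label) tuples from the output."""
    results = []
    for line in output.splitlines():
        match = _RESULT.match(line.strip())
        if match:
            score, index, label = match.groups()
            results.append((float(score), int(index), label))
    return results


SAMPLE_OUTPUT = """Loaded model converted_model.tflite
resolved reporter
invoked
average time: 571.325 ms
0.786024: 653 military uniform
0.0476249: 466 bulletproof vest
0.0457234: 907 Windsor tie
0.0245538: 458 bow tie
0.0194905: 514 cornet
"""

if __name__ == "__main__":
    for score, index, label in parse_results(SAMPLE_OUTPUT):
        print("%-20s index=%d score=%.4f" % (label, index, score))
```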
