Introducing the i.MX 8M Plus – The New Standard Bearer for AI/ML at the “Edge”.

The NXP i.MX family has a long heritage in what I consider “solid” applications processor design fundamentals. My first encounter with an applications processor design was with the ARM9-based i.MX233, and it now seems galaxies apart from the current offerings. I have always considered NXP to be “the” reference in how applications processors should be designed. One differentiating aspect of NXP’s offerings is the specialized IP attached to the core complex. In addition to the IP, I have personally found that the breadth of readily available documentation and the availability of open-source hardware drivers make NXP the “go-to” source for applications processors.

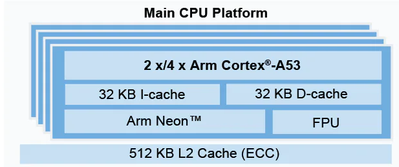

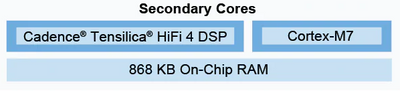

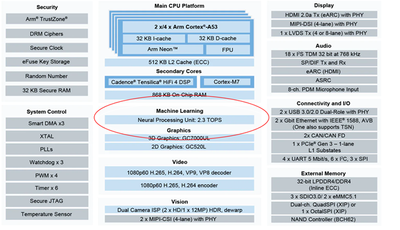

Many applications processors are defined primarily by their main CPU core complex. The ubiquity of the Arm Cortex® A-class CPUs ensures easy software development. This is the foundation for a solid product and the i.MX 8M Plus is built upon a dual/quad Cortex® A53 platform.

Applications processors running a sophisticated operating system such as Linux or Android serve many automotive and industrial applications with their incredible processing throughput. There are, however, situations where the raw throughput of an Arm A53 is not enough and hard real-time response is required, which calls for bare-metal code or an RTOS running on a microcontroller core. NXP has been second to none in enabling these applications with secondary real-time processing cores. The i.MX 8M Plus offers a Cortex® M7 for real-time applications.

I do want to mention that the asymmetric CPU architecture is not limited to the i.MX applications processor families. NXP has offered this capability in several of the LPC microcontroller families as well. Even the recently announced i.MX RT1170 crossover MCU combines a Cortex® M7 with a Cortex® M4 in a compact package. The key takeaway is exceptional scalability across NXP’s product portfolio.

Last year I had quite a bit to say about multi-core integration in the LPC55 microcontroller series, and I would like to draw some parallels between multi-core at the “nano” scale and at the “applications” scale in the i.MX 8M Plus. I am generally attracted to parts that combine a general reference CPU with specialized co-processing and DSP functions. This intersection enables unique applications and makes NXP the go-to platform when one needs to implement truly special designs. I wrote quite a bit about the LPC55 PowerQuad math co-processor and how it enables extremely low-power applications in DSP, control and machine learning at the extreme edge. Likewise, the i.MX 8M Plus adds specialized cores, such as the Tensilica HiFi 4 DSP. By themselves, the Cortex-M7 and HiFi 4 DSP make for an interesting product, but I want to take a moment to talk about a real gem in this part.

The i.MX 8M Plus SoC Neural Processing Unit (NPU)

In 2021 we are at an inflection point for the implementation of AI and machine learning algorithms. We have observed real inroads made into practical applications such as computer vision and machine health monitoring. It is common for developers and data scientists to use high level languages such as Python and toolkits like TensorFlow to develop their ML/AI applications. It is also not uncommon to see developers use the application CPUs themselves as the “PC” to do all their development. The Cortex-A53 core complex is more than capable of running high level languages and tools to work on modern AI and machine learning problems. However, many of these algorithms require quite a bit of CPU time to execute in real-time.

When I am first learning about a new processing algorithm, I often like to strip away as much as I can to understand what the math is doing. In many cases, the “kernel” of computation is very simple and repetitive. For example, in some of my LPC55 DSP articles, I broke down topics such as digital filters and Fast Fourier Transforms into their fundamental operations to understand what is going on under the hood. Demystifying the math is helpful in understanding why co-processing is a good idea.

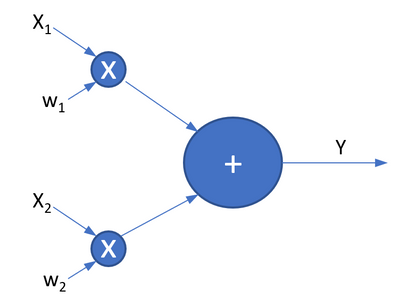

The algorithms underpinning machine learning and AI are no different. We tend to think of them as “magic”, and many developers rely on high-level libraries to do the work without ever understanding what they are really doing. At the core of many of these algorithms is a simple processing node (or neuron):

Y = X1 * w1 + X2 * w2 + … + Xn * wn

The output of the processing structure is a simple weighted sum of its inputs. In addition to the weighted sum, it is common to pass the output through a non-linear function to “squash” the extremes. This is called a sigmoid function. The sigmoid introduces non-linearity into the computation so that the network can model more complicated behaviors.
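As a rough illustration of the weighted sum and squashing step described above, here is a minimal Python sketch of a single neuron. The function names (`weighted_sum`, `logistic`, `neuron`) are my own, and the logistic function is just one common choice of sigmoid assumed here for illustration; nothing about it is specific to the i.MX 8M Plus.

```python
import math


def weighted_sum(inputs, weights):
    """Y = X1*w1 + X2*w2 + ... + Xn*wn"""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(x * w for x, w in zip(inputs, weights))


def logistic(y):
    """Logistic sigmoid, written so math.exp never overflows."""
    if y >= 0:
        return 1.0 / (1.0 + math.exp(-y))
    e = math.exp(y)
    return e / (1.0 + e)


def neuron(inputs, weights, squash=logistic):
    """Weighted sum of the inputs passed through a squashing function."""
    return squash(weighted_sum(inputs, weights))


if __name__ == "__main__":
    # Illustrative values only
    print(neuron([1.0, 2.0, 3.0], [0.2, -0.1, 0.4]))
    # Any other squashing function can be swapped in, e.g. arctan
    print(neuron([1.0, 2.0, 3.0], [0.2, -0.1, 0.4], squash=math.atan))
```

The whole computation is one multiply-add per input plus a single function evaluation, which is exactly the kind of kernel that is easy to hand off to dedicated hardware.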

Side note… A popular sigmoid is the arctan(x) function. As an interesting anecdote, it is also used to model the grid-plate transfer function of a vacuum tube. A while back I demonstrated this sigmoid running on an early Kinetis M4 microcontroller to simulate an “old school” tube guitar amplifier.

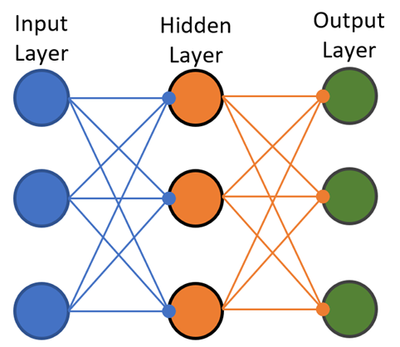

A single neuron is not very useful by itself, so we usually take an input vector and link the neurons together through successive “layers”.
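To make the idea of linking neurons into successive layers concrete, the sketch below chains fully connected layers in plain Python. The names `layer` and `forward`, the layer sizes and the weight values are all hypothetical and chosen only for illustration; real networks often add a bias term per neuron, which is omitted here to match the simple weighted sum above.

```python
import math


def sigmoid(y):
    """Logistic sigmoid, written so math.exp never overflows."""
    if y >= 0:
        return 1.0 / (1.0 + math.exp(-y))
    e = math.exp(y)
    return e / (1.0 + e)


def layer(inputs, weight_rows):
    """One layer: each row of weights defines one neuron fed by every input."""
    return [sigmoid(sum(x * w for x, w in zip(inputs, row)))
            for row in weight_rows]


def forward(inputs, layers):
    """Feed the input vector through each layer in turn."""
    activations = list(inputs)
    for weight_rows in layers:
        activations = layer(activations, weight_rows)
    return activations


if __name__ == "__main__":
    # 2 inputs -> 3 hidden neurons -> 1 output neuron (made-up weights)
    hidden = [[0.5, -0.5], [0.1, 0.9], [-0.3, 0.2]]
    output = [[1.0, -1.0, 0.5]]
    print(forward([1.0, 2.0], [hidden, output]))
```

Each layer is effectively a matrix-vector product followed by an element-wise sigmoid, so the output of one layer becomes the input vector of the next.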

I do not want to over-trivialize what is going on here, but many AI/ML processing algorithms are variations on this theme. For large networks, this graph easily turns into a large “rat’s nest” with all the interconnections. Implementing the “rat’s nest” translates to a large number of multiply-add operations, which look like convolution in the DSP world, combined with lookup tables (or optimized computations) for the sigmoids. A lot of the magic in ML/AI is in figuring out the internal “weights” and setting up the overall processing structure. There are other details in how to train a network, but the underlying computation is repetitive and straightforward.
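Two of the points above can be sketched in a few lines of Python: how quickly the multiply-add count grows with network size, and how a sigmoid can be replaced by a lookup table. The table size, input range and layer sizes below are arbitrary assumptions for illustration, and this is not a description of how the NPU itself implements either step.

```python
import math

# Assumed table parameters: 257 entries spanning [-8, 8], so the step is 1/16
TABLE_SIZE = 257
X_MIN, X_MAX = -8.0, 8.0
STEP = (X_MAX - X_MIN) / (TABLE_SIZE - 1)

# Precompute the logistic sigmoid once; at run time it is only an index lookup
SIGMOID_TABLE = [1.0 / (1.0 + math.exp(-(X_MIN + i * STEP)))
                 for i in range(TABLE_SIZE)]


def sigmoid_lut(y):
    """Nearest-entry table lookup, clamped at both ends of the table."""
    if y <= X_MIN:
        return SIGMOID_TABLE[0]
    if y >= X_MAX:
        return SIGMOID_TABLE[-1]
    return SIGMOID_TABLE[int(round((y - X_MIN) / STEP))]


def multiply_adds(layer_sizes):
    """Multiply-adds for one pass through fully connected layers."""
    return sum(n_in * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


if __name__ == "__main__":
    # Hypothetical network: 784 inputs, 128 hidden neurons, 10 outputs
    print(multiply_adds([784, 128, 10]))
    print(sigmoid_lut(1.0), 1.0 / (1.0 + math.exp(-1.0)))
```

Even this small hypothetical network needs on the order of a hundred thousand multiply-adds per input vector, which is why offloading the repetitive arithmetic pays off.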

At the “edge” it is important to optimize the implementation for speed and power consumption, and a co-processor makes perfect sense for getting the implementation to run at real-time performance. This is where the Neural Processing Unit (NPU) in the i.MX 8M Plus comes into play. We can use the general-purpose A53 cores for our high-level system functions and let the NPU take care of the repetitive computations.

The NPU capability lies in the “sweet spot” for performance to enable real time response for common AI/ML problems. Combined with the video and vision co-processing capabilities, the i.MX 8M Plus will be the standard bearer for Edge ML/AI implementations. Along with all the common IO you would expect in an applications processor, the i.MX 8M Plus is an impressive new piece of hardware and certainly satisfies my curiosity in finding the latest parts that have the ability to power the “next big thing”.
